Background

This post describes my 2017 Wolfram Summer School (WSS17) project: an attempt to build a system that algorithmically creates a homework problem for each student, auto-grades the submitted result, and then aggregates the results from all students for entry into a course management system such as BlackBoard. Before I go into detail about the project, though, I want to say a bit about Wolfram Summer School. In short, it was an amazing experience. I had the opportunity to work closely with two programming wizards, Ian Johnson and Kyle Keane, and they were very helpful. I also had the opportunity to attend lectures from Wolfram experts from all over the world. This across-the-globe experience is best illustrated by one conversation thread that arose from talking to Paul Abbott after his lecture on using the Wolfram Language for education in Australia. Paul gave me the name of a contact in the UK who might be able to answer a question that I had. Jon McLoone responded to my email in just a few hours and told me that the person I should talk to was Gerli Jogeva from Estonia. Fortunately, Gerli was an instructor at WSS17 and we were able to sit down and discuss my question.

Project Details

The goal of my project was to build a system that algorithmically created homework and quizzes, distributed these notebooks to students through the cloud, collected the finished notebooks from the students, and then aggregated the results. The homework and quiz notebooks were to be completed by students using the free version of Wolfram Programming Lab. This was a key feature, since my school does not have a Mathematica site license and it is always easier to ask for funding for licenses after some initial successes have been shown.
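
The generate, grade, and aggregate steps described above can be sketched in a few lines. This is only an illustrative Python sketch, not the Wolfram Language code written at WSS17; the function names `make_problem`, `grade`, and `aggregate`, the multiplication problem, and the CSV layout are all assumptions chosen for the example. The idea it shows is that seeding a random generator from the student and assignment names gives each student a different problem that can be regenerated later for grading.

```python
import hashlib
import random


def make_problem(student_id: str, assignment: str) -> dict:
    """Create a reproducible arithmetic problem for one student (hypothetical example)."""
    # Derive a stable seed from the assignment and student names so the same
    # student always receives the same problem for a given assignment.
    digest = hashlib.sha256(f"{assignment}:{student_id}".encode()).hexdigest()
    rng = random.Random(int(digest, 16))
    a, b = rng.randint(2, 12), rng.randint(2, 12)
    return {"student": student_id, "prompt": f"Compute {a} * {b}", "answer": a * b}


def grade(problem: dict, submitted) -> int:
    """Return 1 point for a correct answer, 0 otherwise."""
    return 1 if submitted == problem["answer"] else 0


def aggregate(scores: dict) -> str:
    """Format scores as CSV text (an assumed layout) for import into a gradebook."""
    lines = ["student,score"]
    lines += [f"{student},{score}" for student, score in sorted(scores.items())]
    return "\n".join(lines)
```

Because the problem is derived from the seed rather than stored, the grader only needs the student and assignment names to recover the expected answer.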

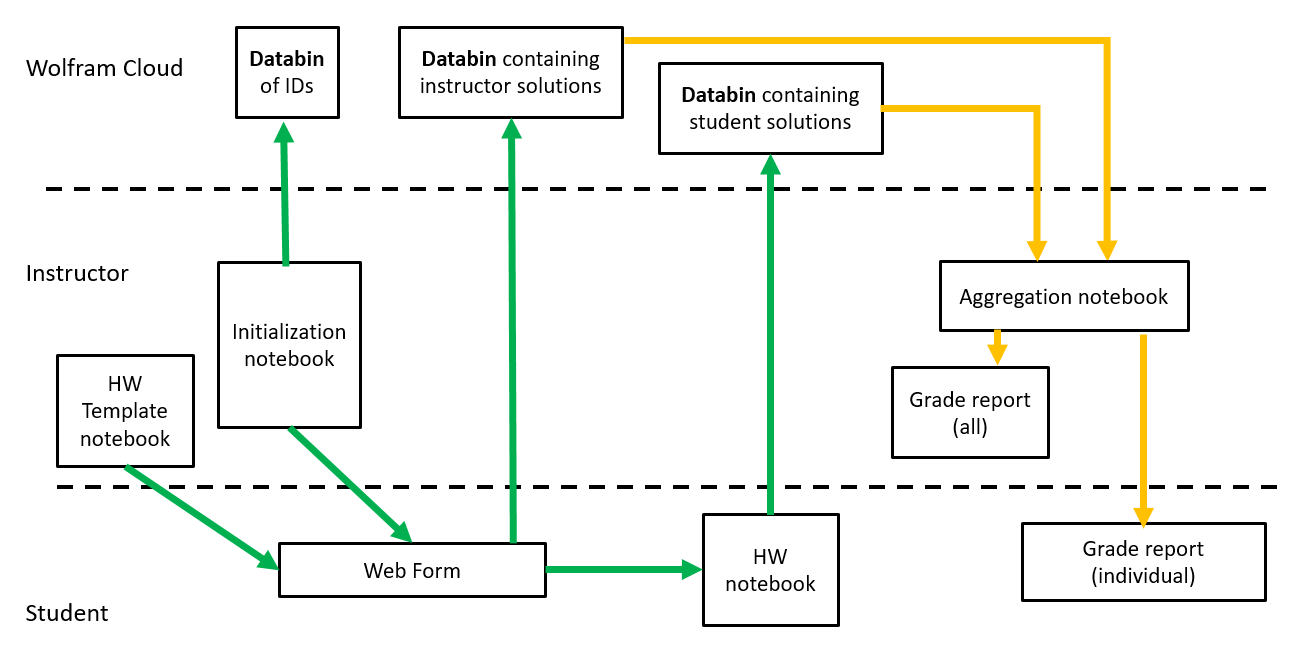

The schematic above shows an outline of the system as planned. Roughly two-thirds of the diagram was coded during WSS17. Although the goal was to auto-grade homework, it was expected that some homework, such as essays, coding, or diagrams, could not be auto-graded. After some discussion, Ian Johnson created prototype code that implemented a dynamic grading rubric. This capability is similar to the features in a grading tool at www.gradescope.com. Basically, the code allows the grader to enter new errors as they are found, each with some number of points to be subtracted. A checkbox list of these errors then appears when grading subsequent homework, and grading proceeds more rapidly because similar mistakes are often made.
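
The dynamic rubric idea can be illustrated with a small data structure. The sketch below is in Python and is not Ian Johnson's prototype; the `Rubric` class, its method names, and the rule that scores never drop below zero are assumptions made for the example. It shows how errors entered while grading one submission become a reusable checklist for the next.

```python
from dataclasses import dataclass, field


@dataclass
class Rubric:
    """A grading rubric that grows as the grader finds new kinds of errors."""
    max_points: int
    deductions: dict = field(default_factory=dict)  # error label -> points to subtract

    def add_error(self, label: str, points: int) -> None:
        # Record a newly discovered error so it can be reused on later submissions.
        self.deductions[label] = points

    def checklist(self) -> list:
        # Labels to present as checkboxes, in the order they were entered.
        return list(self.deductions)

    def score(self, checked: list) -> int:
        # Subtract the points for every checked error; assume scores stop at zero.
        total = self.max_points - sum(self.deductions[label] for label in checked)
        return max(total, 0)
```

For example, after `add_error("sign error", 3)` on a 10-point problem, checking that box on a later submission gives `score(["sign error"])` equal to 7.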

Future Work

Additional work is needed to complete the system. This proof-of-principle project verified that it is possible, with some limitations, to implement the system above in Wolfram Programming Lab. At this time it is not possible to include Manipulate expressions directly in homework notebooks or to use drawing tools, as they are not included in Wolfram Programming Lab. The Wolfram Cloud, though, is an important enabling technology that makes the scheme above possible. It is also important to note that the system would allow easy access to homework data for extensive analytics once the database is built up.