All roads lead to deep learning: mapping satellite pictures

Pixel segmentation for feature extraction from satellite pictures with neural networks

The development of cheaper satellite imaging over the last few years has given access to a large number of precise, high-resolution pictures. My goal was to set up a workflow that uses deep learning to extract streets and road map features from these pictures. I first implemented ENet, a recent convolutional neural network architecture presented in the paper ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation, by A. Paszke et al. After unsatisfying results, I switched to an "edge detection" type of network, based on this paper: [Edge Detection Using Convolutional Neural Network][2]

Part 1 - Crafting a dataset

The first part of the project consisted of gathering topographic data from the Wolfram database. The workflow imports matching sets of satellite pictures and street maps, which are later processed to obtain binary masks for pixel segmentation. I used the Wolfram cities database to get a fast and easy-to-use list of geographic coordinates of cities around the world. Natural language input was a useful tool for gathering the data, along with the new GeoImage function from the geographic functions of Mathematica 11.2.

GeoImage[GeoPosition[#]] & /@ SemanticInterpretation["10 largest cities in Europe locations"]

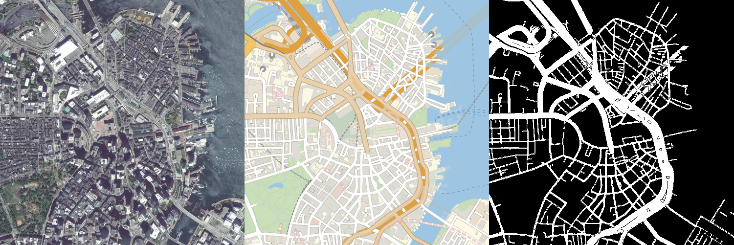

The street map images are then processed to extract a mask, based on the colors of the roads and highways.

(* Reference RGB colors of the road classes drawn on the street map *)

highwayColor = {0.9098039215686274`, 0.8156862745098039`, 0.6823529411764706`};

freewayColor = {0.894117647059`, 0.627450980392`, 0.211764705882`};

ringroadsColor = {0.9490196078431372`, 0.7686274509803922`, 0.38823529411764707`};

generateMask[streetMap_] := Block[{streets, highways, freeways, ringroads},

(* Ordinary streets are drawn almost pure white on the street map *)

streets = Binarize[streetMap, 0.99];

(* Keep the pixels whose color distance to each reference color falls within the given range *)

highways = Binarize[ColorDistance[streetMap, RGBColor @@ highwayColor], {0, 0.05}];

freeways = Binarize[ColorDistance[streetMap, RGBColor @@ freewayColor], {0, 0.05}];

ringroads = Binarize[ColorDistance[streetMap, RGBColor @@ ringroadsColor], {0, 0.4}];

(* Merge the four layers and drop isolated specks of 10 pixels or fewer *)

DeleteSmallComponents[ImageAdd[streets, highways, freeways, ringroads], 10]]

The process was then automated to generate a full training set of pictures from a simple natural language input:

extract[coord_, range_, resolution_] := Block[{image, sat},

(* Satellite picture and label-free street map of the same area *)

sat = GeoImage[coord, GeoRange -> range, GeoRangePadding -> None, GeoServer -> "DigitalGlobe", ImageSize -> resolution];

image = GeoImage[coord, "StreetMapNoLabels", GeoRange -> range, GeoRangePadding -> None, ImageSize -> resolution];

(* Resize both pictures to the requested resolution *)

sat = ImageResize[sat, resolution];

image = ImageResize[image, resolution];

{sat, image, generateMask[image]}]

getData[geoset_, range_, resolution_, cut_] := Block[

{extracted, partitioned},

extracted = extract[#, range, resolution] & /@ geoset;

partitioned = Map[ImagePartition[#, cut] &, extracted, {2}];

Transpose[Flatten[#, 2] & /@ Transpose[partitioned]]

]

generateTrainingSet[geoset_, range_, resolution_, cut_] :=

Rule @@@ (getData[geoset, range, resolution, cut][[All, {1, 3}]])

This process did not go without problems: before training, the datasets need to be thoroughly formatted and reduced in size, and the masks need to be converted to binary matrices.
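The formatting step can be sketched as follows. This is only a minimal illustration, not the exact code used in the project: the helper names `prepareExample` and `prepareDataset` and the 64-pixel default size are assumptions. It resizes both pictures of a training pair and turns the mask into a matrix of 0s and 1s.

```mathematica
(* Hypothetical helper; the name and the default size are illustrative assumptions *)
prepareExample[sat_Image -> mask_Image, size_Integer : 64] :=
  ImageResize[sat, {size, size}] ->
   ImageData[Binarize[ImageResize[mask, {size, size}], 0.5], "Bit"]

(* Apply it to every satellite -> mask pair produced by generateTrainingSet *)
prepareDataset[trainingSet_List, size_Integer : 64] :=
  prepareExample[#, size] & /@ trainingSet
```

Binarizing again after the resize matters because resampling can introduce intermediate gray values along the road borders.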

Part 2 - Building a neural network

Pixel segmentation with ENet

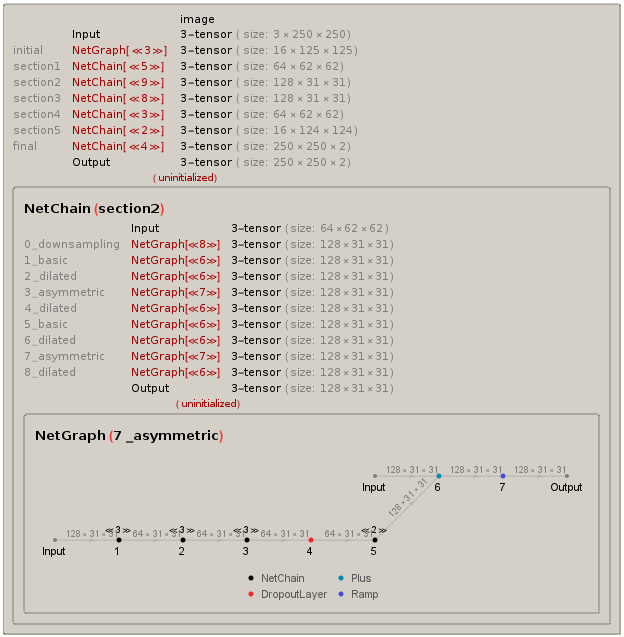

I first selected a neural network specialized in pixel segmentation, discussed in the article [[1]]. It consists of a multi-stage convolutional network, involving multiple layers (more than 20) of asymmetric and dilated convolutions.

After a long time spent on the implementation and an intensive overnight training run on more than 4000 pictures, it was time to check the predictions of our top-notch system. It turned out that the training was a total failure, which can be easily explained: the ratio between road and non-road pixels in the training set was far too unbalanced. The network did better at predicting non-road areas than actual roads, since the training data told it that non-road pixels were predominant.

The result is not very effective, as you can see in the picture above, which meant going back to the drawing board for both the network and the data.

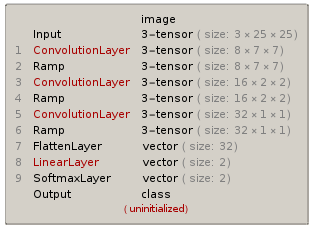
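The class imbalance described above can be measured directly on the masks before training. The snippet below is a small sketch under assumptions: `roadFraction` is a hypothetical helper that was not part of the original workflow, and it expects masks of equal size.

```mathematica
(* Hypothetical helper: share of road (white) pixels over a list of equally sized masks *)
roadFraction[masks_List] :=
  N @ Mean[Mean[Flatten[ImageData[Binarize[#, 0.5]]]] & /@ masks]

(* Toy mask with one road pixel out of eight *)
toyMask = Image[{{0, 0, 0, 1}, {0, 0, 0, 0}}];
roadFraction[{toyMask}]  (* 0.125 *)
```

A network that always answers "non-road" is already right on a share `1 - roadFraction[masks]` of the pixels, so a low road fraction makes that trivial answer very attractive during training.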

Edge detection

When you think about it for a while, this situation is very close to an edge detection problem: roads have well-defined contours, often cut across the image in a straight line, and are always connected. I decided to switch to a different, simpler neural network that had already been tested for this purpose [[2]]. The implementation was very fast, as the network only consists of 3 to 5 layers depending on the precision you want to obtain, but the data had to be completely reprocessed. I used smaller 25x25 chunks of pictures for faster training.
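To give an idea of how small such a network can be, here is a rough sketch of a three-convolution network for 25x25 patches. It is not the architecture from [[2]] nor the exact network trained here: the name `edgeNet`, the number of channels, the kernel sizes, and the loss are all assumptions chosen for illustration.

```mathematica
(* Assumed layer sizes; the text only states "3 to 5 layers" and 25x25 patches *)
edgeNet = NetChain[{
    ConvolutionLayer[16, 5, "PaddingSize" -> 2], Ramp,
    ConvolutionLayer[16, 3, "PaddingSize" -> 1], Ramp,
    ConvolutionLayer[1, 1], LogisticSigmoid,
    ReshapeLayer[{25, 25}]},
   "Input" -> NetEncoder[{"Image", {25, 25}}]];

(* trainingSet is assumed to be a list of rules: 25x25 image -> 25x25 matrix of 0s and 1s *)
trained = NetTrain[edgeNet, trainingSet,
   LossFunction -> CrossEntropyLossLayer["Binary"]]
```

The padding keeps the spatial size at 25x25 through every convolution, so the output is one road probability per input pixel.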

The results were quite promising: the network was able to extract most of the road features from the images, even if the edges were a bit blurry. This could be improved later by adding layers and tweaking the number of channels. Below you can see a test satellite picture, the matching mask for the streets, and the final prediction of the network.

I'm planning to train the network on more pictures to get more accurate predictions in the future. The long-term goal would be the detection of new roads and the automatic updating of GPS databases using this process. This project taught me a lot about image processing, geographic data in Mathematica, and neural networks, and I'll try to keep it evolving to get sharper results.