EDIT: Looking closely at my network, I have a similar problem providing inputs to the CropLayer function. The outputs of the pooling layer (node 30) and of the activation (node 87) should both feed into CropLayer. The question is how to feed the output of one layer to other layers in the network.

I am trying to implement UNET in Mathematica: https://lmb.informatik.uni-freiburg.de/people/ronneber/u-net

I have the following code so far for generating the net partially:

    (* encoder *)
    encoder = NetEncoder[{"Image", "ImageSize" -> {168, 168}, "ColorSpace" -> "Grayscale"}];

    (* decoder *)
    decoder = NetDecoder[{"Image", "ColorSpace" -> Automatic}];

    (* convolution module *)
    Options[convolutionModule] = {"batchNorm" -> True, "downpool" -> False,
       "uppool" -> False, "activationType" -> Ramp, "convolution" -> True};

    convolutionModule[net_, kernelsize_, padsize_, stride_: {1, 1}, OptionsPattern[]] :=
     With[{upPool = OptionValue["uppool"], activationType = OptionValue["activationType"],
       convolution = OptionValue["convolution"], batchNorm = OptionValue["batchNorm"],
       downpool = OptionValue@"downpool"},
      Block[{nnet = net},
       If[upPool,
        nnet = NetAppend[nnet, DeconvolutionLayer[1, {2, 2}, "PaddingSize" -> {0, 0},
           "Stride" -> {2, 2}]];
        nnet = NetAppend[nnet, BatchNormalizationLayer[]];
        If[activationType === Ramp,
         nnet = NetAppend[nnet, ElementwiseLayer[activationType]]
         ];
        ];
       If[convolution,
        nnet = NetAppend[nnet, ConvolutionLayer[1, kernelsize, "Stride" -> stride,
           "PaddingSize" -> padsize]]
        ];
       If[batchNorm,
        nnet = NetAppend[nnet, BatchNormalizationLayer[]]
        ];
       If[activationType === Ramp,
        nnet = NetAppend[nnet, ElementwiseLayer[activationType]]
        ];
       If[downpool,
        nnet = NetAppend[nnet, PoolingLayer[{2, 2}, "Function" -> Max, "Stride" -> {2, 2}]]
        ];
       nnet]
      ]

    (* Crop Layer *)
    CropLayer[netlayer_] := With[{p = NetExtract[netlayer, "Output"]},
       PartLayer[{First@p, 1 ;; p[[2]], 1 ;; Last@p}]];
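
As a side note on the cropping helper, here is a minimal sketch of how its part specification behaves, assuming a Wolfram Language version in which PartLayer accepts a list of per-dimension parts (the form the helper already uses). The array sizes and the names `x`, `cropSpan` and `cropInt` are made up for illustration. An integer in the first position picks a single channel and drops that dimension, as Part does, while a span keeps it:

```wolfram
(* Illustrative only: a random 4-channel 10x10 array stands in for a feature map *)
x = RandomReal[1, {4, 10, 10}];

(* Span in every dimension: keeps all 4 channels and crops to the top-left 8x8 *)
cropSpan = PartLayer[{1 ;; 4, 1 ;; 8, 1 ;; 8}, "Input" -> {4, 10, 10}];

(* Integer in the first position: selects channel 4 only, so the rank drops to 2 *)
cropInt = PartLayer[{4, 1 ;; 8, 1 ;; 8}, "Input" -> {4, 10, 10}];

(* Compare the output shapes of the two variants *)
Dimensions /@ {cropSpan[x], cropInt[x]}
```

If the intent is to keep every channel, `1 ;; First@p` in the first position would do that. Whether that is what the helper should do depends on the rest of the net.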

    (* partial UNET *)
    UNET[] :=
     Block[{nm, pool1, pool2, pool3, pool4, pool5, kernelsize = {3, 3},
       padsize = {1, 1}, stride = {1, 1}},
      nm = NetChain@
        Join[{ConvolutionLayer[1, {3, 3}, "Input" -> encoder]},
         {BatchNormalizationLayer[], ElementwiseLayer[Ramp],
          PoolingLayer[{2, 2}, "Function" -> Max, "Stride" -> {2, 2}]}];
      pool1 = nm[[-1]];
      nm = convolutionModule[nm, kernelsize, padsize, stride, "downpool" -> True];
      pool2 = nm[[-1]];
      nm = convolutionModule[nm, kernelsize, padsize, stride, "downpool" -> True];
      pool3 = nm[[-1]];
      nm = convolutionModule[nm, kernelsize, padsize, stride, "downpool" -> True];
      pool4 = nm[[-1]];
      nm = NetAppend[nm, DropoutLayer[]];
      nm = convolutionModule[nm, kernelsize, padsize, stride, "downpool" -> True];
      pool5 = nm[[-1]];
      nm = convolutionModule[nm, kernelsize, padsize, stride, "uppool" -> True];
      nm = convolutionModule[nm, kernelsize, padsize + 1, stride, "uppool" -> True];
      nm = NetAppend[nm, CropLayer@pool3];
      (* return the net built so far; the rest of the U-Net is not written yet *)
      nm]

With NetInformation I can generate the net plot below:

NetInformation[(nm = UNET[]), "MXNetNodeGraphPlot"]

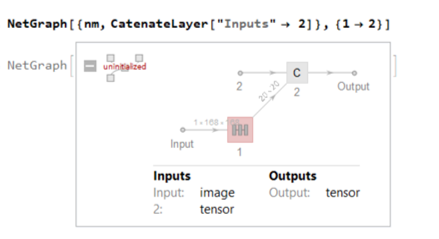

My problem: how do I catenate the output of the pooling layer (node 30) with the output of node 91?

I tried using NetGraph with CatenateLayer, but could not find a way to connect node 30, which sits inside the NetChain, to the second input of CatenateLayer.
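
For reference, this kind of connection is usually written in NetGraph as an edge of the form `{a, b} -> c`, where `c` is a CatenateLayer. The sketch below is not the net above. It is a hypothetical miniature with made-up layer names and sizes (a 16x16 single-channel input and 4 feature channels), and it taps the feature map before pooling, as the U-Net paper does. Which nodes to tap in the real net is left open. Each layer here is a named vertex, so any output can be routed to more than one place:

```wolfram
(* Hypothetical miniature of one skip connection; names and sizes are illustrative *)
skipNet = NetGraph[
   <|
    "conv1" -> ConvolutionLayer[4, {3, 3}, "PaddingSize" -> 1], (* {4, 16, 16} *)
    "pool" -> PoolingLayer[{2, 2}, "Stride" -> 2],              (* {4, 8, 8} *)
    "conv2" -> ConvolutionLayer[4, {3, 3}, "PaddingSize" -> 1], (* {4, 8, 8} *)
    "up" -> DeconvolutionLayer[4, {2, 2}, "Stride" -> 2],       (* {4, 16, 16} *)
    "cat" -> CatenateLayer[],                                   (* {8, 16, 16} *)
    "out" -> ConvolutionLayer[1, {1, 1}]                        (* {1, 16, 16} *)
    |>,
   {
    (* main path: down one level and back up *)
    NetPort["Input"] -> "conv1" -> "pool" -> "conv2" -> "up",
    (* skip connection: "conv1" feeds both "pool" and the catenation *)
    {"conv1", "up"} -> "cat",
    "cat" -> "out"
    },
   "Input" -> {1, 16, 16}];

(* Initialize with random weights and check the output shape on random data *)
trained = NetInitialize[skipNet];
Dimensions[trained[RandomReal[1, {1, 16, 16}]]]
```

A NetChain can itself be a vertex of a NetGraph, so an existing chain could also be split at the layer whose output is needed twice and the pieces wired together in the same way.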