Feed-forward neural networks (FNNs) are artificial neural networks in which the connections do not form a cycle. They are the earliest and simplest type of neural network, but they have not been as successful as other types, such as recurrent or convolutional networks. The FNNs that perform well are typically shallow.

Below is the code for a feedforward neural network. The variable "best" is the list of hyperparameters of the FNN: the dropout rate, the number of neurons in each hidden layer and the number of layers, in that order:

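    (* best = {dropout rate, neurons per hidden layer, number of layers}.
       Builds best[[3]] - 1 hidden blocks (Linear -> ReLU -> Dropout),
       followed by a linear output layer and a softmax *)
    FNNnetClassification[best_List, decoder_] :=
      NetChain[
        Join[
          Flatten@Table[
            {LinearLayer[best[[2]]], ElementwiseLayer["ReLU"], DropoutLayer[best[[1]]]},
            best[[3]] - 1],
          {LinearLayer[], SoftmaxLayer[]}],
        "Output" -> decoder];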

Recently, a new kind of feedforward neural network has entered the field: self-normalizing neural networks (SNNs), which change the FNN paradigm. Their main property is that the neurons' activations converge toward zero mean and unit variance as they propagate through the layers. The characteristics that enforce this convergence are:

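- The SELU (scaled exponential linear unit) activation function

- Alpha dropout: instead of setting randomly chosen activations to 0, it sets them to the negative saturation value of SELU and then applies an affine transformation so that the mean and variance of the activations are preserved.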
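Both ingredients can be written out directly. The sketch below is a minimal illustration in Wolfram Language, assuming the rounded constants λ ≈ 1.0507 and α ≈ 1.6733 and the alpha-dropout formulas from the original SNN paper (Klambauer et al., 2017). `selu` and `alphaDropout` are hypothetical helper names; in the networks of this post the same operations come from `ElementwiseLayer["SELU"]` and `DropoutLayer[..., "Method" -> "AlphaDropout"]`. Here `rate` is the probability of dropping a unit.

```mathematica
(* SELU constants (rounded) from the self-normalizing networks paper *)
lambda = 1.0507009873554805;
alpha = 1.6732632423543772;

(* SELU: lambda*x for x > 0, lambda*alpha*(Exp[x] - 1) otherwise; works on numbers and arrays *)
selu[x_] := lambda (Ramp[x] + alpha (Exp[-Ramp[-x]] - 1))

(* Alpha dropout with drop probability rate: dropped units are set to the SELU
   saturation value -lambda*alpha, then the affine map a*x + b restores zero mean
   and unit variance (assuming the input already has mean 0 and variance 1) *)
alphaDropout[x_List, rate_] := Module[{q = 1 - rate, sat = -lambda alpha, a, b, keep},
  a = (q + sat^2 q (1 - q))^(-1/2);
  b = -a (1 - q) sat;
  keep = RandomVariate[BernoulliDistribution[q], Length[x]];  (* 1 = keep, 0 = drop *)
  a (x keep + sat (1 - keep)) + b]

(* Check: standard-normal input should keep mean close to 0 and variance close to 1 *)
SeedRandom[1];
xs = RandomVariate[NormalDistribution[0, 1], 100000];
ys = alphaDropout[xs, 0.1];
{Mean[ys], Variance[ys]}
```

The keep mask is a Bernoulli draw per unit, which is where the binomial-distribution argument below enters.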

Several powerful mathematical tools were used to prove convergence in SNNs: the Banach fixed-point theorem, the central limit theorem, and properties of the mean of the binomial distribution (alpha dropout keeps or drops each unit with a Bernoulli draw).
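The fixed-point behavior can also be observed numerically. The following is a minimal sketch, assuming weights drawn for every layer with zero mean and variance 1/n (the initialization the SNN analysis assumes); the width of 200 units, the batch of 500 inputs, the 16 layers, the seed and the deliberately mis-scaled input distribution are arbitrary illustrative choices, not values from this project.

```mathematica
(* SELU written with array-friendly built-ins (rounded constants) *)
selu[x_] := 1.0507009873554805 (Ramp[x] + 1.6732632423543772 (Exp[-Ramp[-x]] - 1))

SeedRandom[42];
n = 200;                                                  (* units per layer *)
x = RandomVariate[NormalDistribution[0.5, 2], {500, n}];  (* badly scaled input batch *)

(* Pass the batch through 16 random SELU layers; record mean and variance after each *)
stats = Table[
   x = selu[x . RandomVariate[NormalDistribution[0, 1/Sqrt[n]], {n, n}]];
   {Mean[Flatten[x]], Variance[Flatten[x]]},
   {16}];

stats[[{1, 4, 8, 16}]]
```

Reading down `stats`, the variance should shrink from well above 1 toward 1 and the mean should move toward 0 as depth grows, which is the attracting fixed point the proof establishes.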

This type of FNN performs remarkably well: in the original benchmarks it outperformed the other feedforward architectures that were tested, which makes it arguably the best FNN option so far.

Besides this architecture, the main requirement for training and testing an SNN is to normalize the input. Here the normalization is done with FeatureExtraction[..., "StandardizedVector"], which standardizes each input feature while keeping the information the features carry. Once that is done, the characteristics above (together with weights initialized with zero mean and variance 1/n, which the analysis assumes) drive the neurons' activations toward zero mean and unit variance. For activations that are not close to unit variance, upper and lower bounds on the variance are proved, so vanishing and exploding gradients are impossible.
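For reference, standardizing a feature matrix means subtracting each column's mean and dividing by its standard deviation. The sketch below is a hand-written stand-in for the `FeatureExtraction[..., "StandardizedVector"]` step, not its implementation: `fitStandardizer` is a hypothetical helper that learns the column statistics on the training rows only and returns a function that is applied unchanged to both training and test rows. Columns with (near) zero spread are left unscaled to avoid dividing by zero.

```mathematica
(* Hypothetical helper: learn column means and standard deviations from training rows *)
fitStandardizer[train_?MatrixQ] := With[{
    mu = Mean[N[train]],
    (* a constant column would give a zero SD; keep its scale unchanged *)
    sd = If[# < 10.^-12, 1., #] & /@ StandardDeviation[N[train]]},
  Function[rows, Map[(# - mu)/sd &, N[rows]]]]

(* Usage with random stand-in data *)
SeedRandom[3];
train = RandomVariate[NormalDistribution[5, 2], {90, 3}];
test = RandomVariate[NormalDistribution[5, 2], {10, 3}];
standardize = fitStandardizer[train];
Mean[standardize[train]]               (* column means, approximately 0 *)
StandardDeviation[standardize[train]]  (* column SDs, approximately 1 *)
standardize[test]                      (* test rows reuse the training statistics *)
```

Fitting the statistics on the training rows alone keeps the test set from influencing the normalization.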

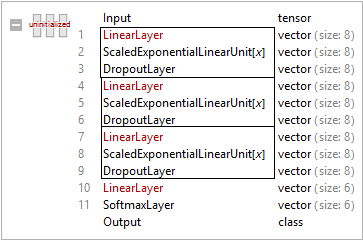

In summary, a self-normalizing neural network is a plain feedforward neural network with the SELU activation function and alpha dropout; as its name says, it normalizes its neurons' activations by itself. The code for the SNN is very simple; you can see it below together with its underlying structure (four layers in this case):

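    (* Self-normalizing network: nlayers - 1 hidden blocks of
       Linear -> SELU -> alpha dropout, followed by a linear output layer and a softmax *)
    SNNnetClassification[dropoutrate_, nhidden_, nlayers_, decoder_] :=
      NetChain[
        Join[
          Flatten@Table[
            {LinearLayer[nhidden], ElementwiseLayer["SELU"],
             DropoutLayer[dropoutrate, "Method" -> "AlphaDropout"]},
            nlayers - 1],
          {LinearLayer[], SoftmaxLayer[]}],
        "Output" -> decoder];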

I trained the constructed neural networks to classify several diseases for which I found data sets. The inputs to the networks were the attributes of each patient (signs and symptoms). The classification covered dermatological diseases, breast cancer, Parkinson's disease and mesothelioma. Surprisingly, the SNNs classified the diseases almost perfectly, in many cases reaching 100% accuracy on the test data.

Motivation

Helping people fight diseases is my main purpose. Some diseases are difficult to detect or diagnose. Some have such similar symptoms that they may be confused with each other and treated incorrectly. Also, the shortage of qualified doctors in some regions of developing countries, such as my country, Mexico, is worrying. I am interested in finding an easy-to-access, easy-to-use program that classifies diseases. Don't misunderstand me: I am not looking for a way to avoid doctors; they are fundamental. But I do want to make access to a possible diagnosis much easier.

To start, I classified four sets of diseases for which data were available in the UCI Machine Learning Repository. As mentioned above, the SNNs gave impressive results.

Dermatology

The differential diagnosis of erythemato-squamous diseases is a real problem in dermatology. They all share the clinical features of erythema and scaling, with very few differences. Usually a biopsy is necessary for the diagnosis, but unfortunately these diseases share many histopathological features as well. Another difficulty for the differential diagnosis is that a disease may show the features of another disease at the beginning stage and only develop its characteristic features at later stages. Using the data set from UCI, we trained neural networks and measured how well they determine the disease.

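    (* UCI Dermatology data: attribute columns followed by the class label (1-6) *)
    link = "https://archive.ics.uci.edu/ml/machine-learning-databases/dermatology/dermatology.data";
    (* "?" marks missing values; drop every row that contains one *)
    dermaDatawMissing = ReplaceAll[Import[link, "CSV"], "?" -> Missing[]];
    dermaData = DeleteMissing[dermaDatawMissing, 1, 2];
    (* attributes -> class *)
    dermaDatawRule = (Most[#] -> Last[#]) & /@ dermaData;
    (* Random 90/10 train/test split: shuffle once, then split the shuffled list
       (the test set can no longer refer to dermatrain before it is defined) *)
    {dermatrain, dermatest} =
      TakeDrop[RandomSample[dermaDatawRule], Round[0.9 Length@dermaDatawRule]];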

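    (* Standardize the attributes; the extractor is fitted on the training rows only,
       so the test set does not influence the normalization *)
    dermaext = FeatureExtraction[N@Keys@dermatrain, "StandardizedVector"];
    (* NetTrain accepts {inputs} -> {targets} *)
    dermatrainSNN = dermaext[N@Keys@dermatrain] -> Values[dermatrain];
    (* ClassifierMeasurements expects a list of input -> class rules *)
    dermatestSNN = Thread[dermaext[N@Keys@dermatest] -> Values[dermatest]];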

    (* Output decoder and loss for the six classes *)
    dermadec = NetDecoder[{"Class", Range[6]}];
    dermaLoss = CrossEntropyLossLayer["Index", "Target" -> NetEncoder[{"Class", Range[6]}]];
    (* Grid of 3 dropout rates x 4 widths x 4 depths = 48 SNNs, keyed by {rate, width, depth} *)
    dermaNet = <|Flatten@Table[
        {i, j, k} -> SNNnetClassification[i, j, k, dermadec],
        {i, {0.01, 0.05, 0.1}}, {j, {32, 64, 128, 256}}, {k, {4, 8, 16, 32}}]|>;

    (* Train every network from the same seed, holding out 10% of the training set for validation *)
    trainedNet = <|Table[
        SeedRandom[8];
        key -> NetTrain[dermaNet[key], dermatrainSNN,
          LossFunction -> dermaLoss, MaxTrainingRounds -> 150,
          ValidationSet -> Scaled[0.1]],
        {key, Keys@dermaNet}]|>;
    (* Test-set measurements and accuracy of each trained network *)
    dermacm = ClassifierMeasurements[#, dermatestSNN] & /@ trainedNet;
    dermaAccuracy = #["Accuracy"] & /@ dermacm;

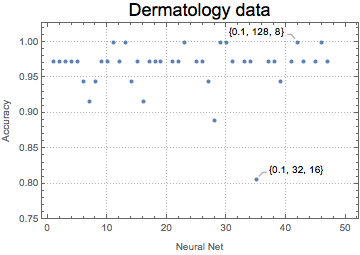

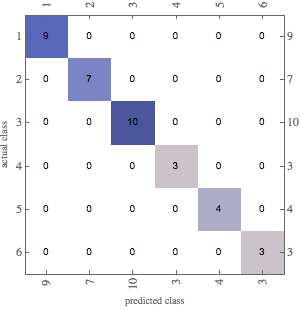

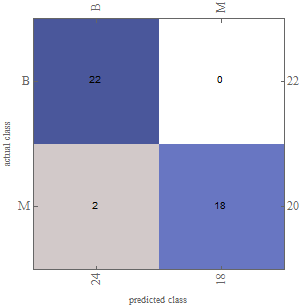

The list plot shows the test accuracy of the 48 SNNs I created, each with a different structure (3 dropout rates × 4 hidden-layer widths × 4 depths). The SNNs classify the data perfectly, and the confusion matrix plot shows that, as desired, the predicted classes are the actual classes.
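With test sets of this size, a perfect score still leaves some statistical uncertainty. As a rough guide, the sketch below computes an exact (Clopper-Pearson) 95% confidence interval for k correct predictions out of n test cases. `clopperPearson` is a hypothetical helper, and n = 36 is only an assumed test size of roughly 10% of the complete dermatology rows, not a figure reported in this post.

```mathematica
(* Hypothetical helper: exact binomial confidence interval for k successes in n trials *)
clopperPearson[k_Integer, n_Integer, level_ : 0.95] := Module[{a = 1 - level},
  {If[k == 0, 0., InverseCDF[BetaDistribution[k, n - k + 1], a/2]],
   If[k == n, 1., InverseCDF[BetaDistribution[k + 1, n - k], 1 - a/2]]}]

(* Interval for a perfect score on an assumed 36-case test set *)
clopperPearson[36, 36]
```

For a perfect score the lower bound reduces to 0.025^(1/n), which for n = 36 is about 0.90, so 100% on such a test set is consistent with a true accuracy of roughly 90% or higher at the 95% level.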

Mesothelioma

Mesothelioma is a type of cancer that develops from the thin layer of tissue that covers many of the internal organs (known as the mesothelium).[9] The most commonly affected area is the lining of the lungs and chest wall. Malignant mesotheliomas (MM) are very aggressive tumors of the pleura. These tumors are connected to asbestos exposure; however, they may also be related to previous simian virus 40 (SV40) infection and genetic predisposition.

To avoid boring you with more code, I won't paste it here, since it repeats the dermatology code.

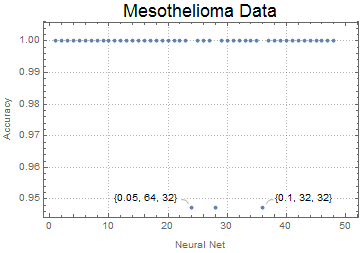

Almost all of the SNNs reached 100% accuracy! The confusion matrix is diagonal, as desired: perfect classification!

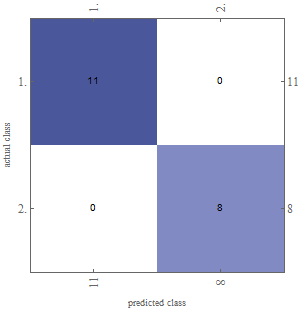

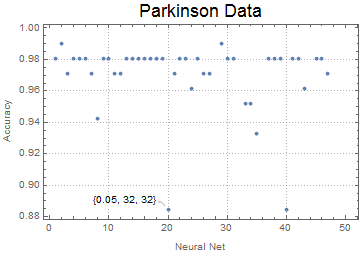

Parkinson Data

Parkinson's disease (PD) is a long-term degenerative disorder of the central nervous system that mainly affects the motor system. The symptoms generally develop slowly over time. Early in the disease, the most obvious are shaking, rigidity, slowness of movement, and difficulty with walking. Thinking and behavioral problems may also occur. Dementia becomes common in the advanced stages of the disease. Depression and anxiety are also common, occurring in more than a third of people with PD. Other symptoms include sensory, sleep, and emotional problems. The data used were particularly interesting because the classification was based on voice recordings of the subjects. Each subject has 26 voice samples, including sustained vowels, numbers, words and short sentences.

The accuracies are also high for this data set.

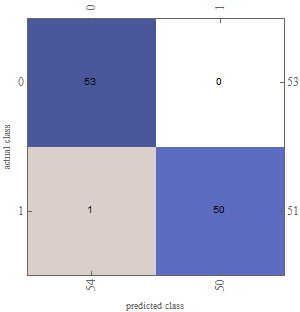

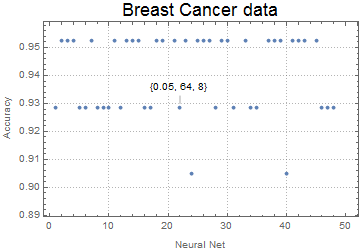

Breast Cancer

Breast cancer is the most common cancer among women. There are many types of breast cancer, which differ in their ability to spread to other body tissues. Breast cancer is diagnosed through physical examination, breast self-examination, mammography, ultrasound testing and biopsy.

This data set was no exception: the SNNs again gave high classification accuracies.

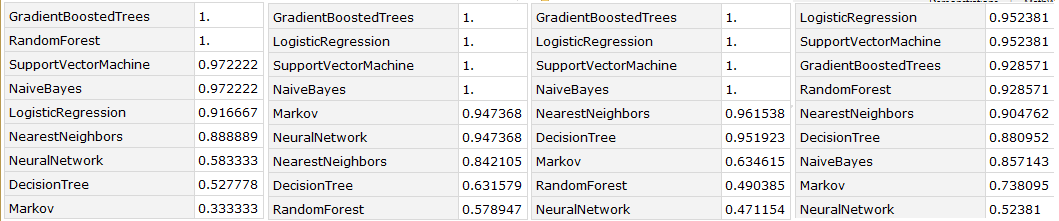

SNNs not only give good accuracy; they appear to give the best accuracy. You can compare, in the table below, the accuracies of all the methods of the Classify function for dermatology, mesothelioma, Parkinson's disease and breast cancer, respectively:
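A comparison of Classify methods like this one can be produced with ClassifierMeasurements. The following is a hedged sketch: it trains Classify with several Method settings on a small synthetic two-class data set (an illustrative assumption standing in for the disease data) and reports each method's test accuracy. The data, the seed and the list of methods are arbitrary choices.

```mathematica
(* Synthetic stand-in data: two Gaussian clouds in 2D, labelled "a" and "b" *)
SeedRandom[7];
data = Join[
   Thread[RandomVariate[NormalDistribution[0, 1], {100, 2}] -> "a"],
   Thread[RandomVariate[NormalDistribution[3, 1], {100, 2}] -> "b"]];
{train, test} = TakeDrop[RandomSample[data], 150];

methods = {"LogisticRegression", "NaiveBayes", "NearestNeighbors",
   "RandomForest", "SupportVectorMachine"};

(* Test accuracy of each Classify method, keyed by method name *)
accuracies = AssociationMap[
   ClassifierMeasurements[Classify[train, Method -> #], test, "Accuracy"] &,
   methods]
```

For the SNN column, the same held-out set would be passed to ClassifierMeasurements together with the trained network, as in the dermatology code.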

I would like to gather data for more diseases, especially tropical diseases such as dengue, Zika, chikungunya, paludismo (malaria) and Chagas disease. These diseases are a problem especially in the small towns and poor areas of Mexico. As I discovered at the Wolfram Summer School, the problem is much more widespread than I imagined: countries on the other side of the world, such as India, face the same problem. Classifying these diseases was my initial goal in coming to the Wolfram Summer School, but it was impossible to achieve because of the lack of data. However, this will be my next step when I am back in Mexico.

To view the whole code and details of the data and results, please see the attached notebook.

Attachments:
