Introduction

Web services that process huge amounts of image data face the problem of how to display image content to the end user. For example, when you post something on Twitter and include an image in your post, Twitter tries to crop it in a meaningful way, so that the user sees only the informative parts.

The goal of my project is to implement smart auto-cropping using the Wolfram Language and to get familiar with different methods of finding crucial information in images.

Approaches

1. Saliency map

The first way to find the important parts of an image is to use the ImageSaliencyFilter[] function, which returns a saliency map of the image:

map = ImageSaliencyFilter[image] // ImageAdjust

The saliency map shows the regions of an image whose features stand out from their surroundings. A higher saliency value is taken to mean a more important region. In addition to saliency mapping, the EdgeDetect[] and LaplacianFilter[] functions may be used to detect edges in an image. To get the final cropping area, we can binarize the map and find the bounding regions of the white parts. Since binarization does not perform well on dense images, this approach does not reliably detect the main objects in an image. Another disadvantage is that saliency maps tend to change when the image is resized, so cropping accuracy will be lower in the Twitter case.

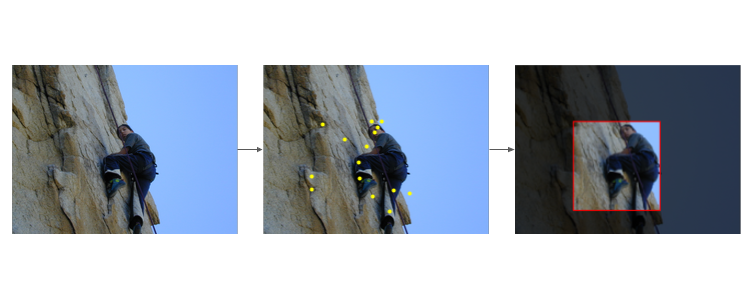
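The binarize-and-bound step described above might look roughly like the following. This is a sketch under stated assumptions, not the code used to produce the figure: saliencyCrop is a hypothetical name, the automatic threshold of Binarize is an assumed choice, and keeping only the largest white component is one possible way to pick the crop area.

```wolfram
(* Sketch only: saliencyCrop is a hypothetical helper, not part of the project *)
saliencyCrop[image_Image] :=
  Module[{map, bin, measurements},
    map = ImageAdjust@ImageSaliencyFilter[image];
    (* Assumption: the automatic global threshold is good enough *)
    bin = Binarize[map];
    (* {pixel count, bounding box} for every white connected component *)
    measurements =
      Values@ComponentMeasurements[bin, {"Count", "BoundingBox"}];
    If[measurements === {},
      image, (* nothing salient found: return the image unchanged *)
      (* Trim to the bounding box of the largest component *)
      ImageTrim[image, Last@First@MaximalBy[measurements, First]]
    ]
  ];
```

Calling saliencyCrop[image] returns the trimmed image. On dense images the binarized map tends to break into many small components, which is the weakness noted above.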

2. Image keypoints

Keypoints are essentially points of interest: points in an image, typically corners, that encode what is of interest in their immediate neighborhood. What makes keypoints special is their invariance. Whether the image is rotated, scaled, translated, or distorted, you should be able to find roughly the same keypoints in the modified image as in the original. Mathematica has a built-in ImageKeypoints[] function for this (keypoints are shown as yellow dots):
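A minimal sketch of such a call is shown here. The test image is only a stand-in for whatever image is being analyzed, and the limit of 10 features is taken from the smartCrop function in this section; the overlay style is an assumption.

```wolfram
(* Stand-in image; replace with the image you want to crop *)
img = ExampleData[{"TestImage", "Mandrill"}];

(* The strongest keypoints, returned as a list of {x, y} positions *)
keypoints = ImageKeypoints[img, MaxFeatures -> 10];

(* Overlay the keypoints on the image as yellow dots *)
Show[img, Graphics[{Yellow, PointSize[Large], Point[keypoints]}]]
```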

We can then build a bounding region around the keypoints and use it as the crop area. The following functions process an image:

(* Width-to-height ratio of an axis-aligned rectangle *)
aspectRatio[rect_Rectangle] :=
 Block[{sides = rect[[2]] - rect[[1]]},
  First@sides/Last@sides];

(* Rounded centroid of a list of {x, y} keypoints *)
centerMass[keypoints_] := Round@Mean@keypoints;

(* Add a margin, given as a percentage of the mean image dimension,
   to a rectangle. If percent > 0, the rectangle expands. *)
applyMargin[r_Rectangle, imgDimensions_List, percent_Integer] :=
 Block[{rect = r, avgDim = Mean@imgDimensions},
  rect[[1, All]] -= avgDim*percent/100;
  rect[[2, All]] += avgDim*percent/100;
  rect
 ];

smartCrop[input_Image] :=
 Block[{img = ImageCrop[input], faces, keypoints, boundReg, cm,
   imgDimensions, perc = 5},
  faces = FindFaces[img];
  keypoints = ImageKeypoints[img, MaxFeatures -> 10];
  boundReg = BoundingRegion[keypoints, "MinRectangle"];
  cm = centerMass[keypoints];
  imgDimensions = ImageDimensions@img;
  If[Length@faces > 0,
   (* Prefer the largest detected face, if there is one *)
   HighlightImage[img,
    applyMargin[Last@SortBy[faces, Area], imgDimensions, perc],
    "Darken"],
   If[aspectRatio[boundReg] <= 16/9,
    (* Otherwise use the keypoint bounding box if it is not too wide *)
    HighlightImage[img, applyMargin[boundReg, imgDimensions, perc],
     "Darken"],
    (* Fall back to a square centered on the keypoint centroid *)
    HighlightImage[img,
     Rectangle[cm - Mean[imgDimensions]/4,
      cm + Mean[imgDimensions]/4], "Darken"]]]]

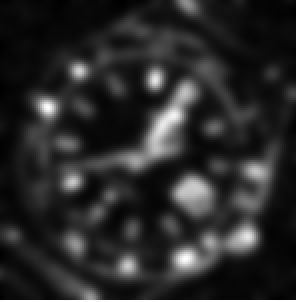
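smartCrop highlights the crop area rather than cutting it out, and applyMargin can push the rectangle past the image borders. One possible way to turn the highlighted rectangle into an actual crop is sketched here. Both clampRectangle and cropToRectangle are hypothetical helper names introduced for illustration, not part of the project code.

```wolfram
(* Hypothetical helper: restrict a rectangle to the image frame {0, 0}..{w, h} *)
clampRectangle[Rectangle[{x1_, y1_}, {x2_, y2_}], {w_, h_}] :=
  Rectangle[
    {Clip[x1, {0, w}], Clip[y1, {0, h}]},
    {Clip[x2, {0, w}], Clip[y2, {0, h}]}];

(* Hypothetical helper: cut the clamped rectangle out of the image *)
cropToRectangle[img_Image, rect_Rectangle] :=
  ImageTrim[img, List @@ clampRectangle[rect, ImageDimensions[img]]];
```

For example, cropToRectangle[img, applyMargin[boundReg, ImageDimensions[img], 5]] would return the cropped image for the keypoint bounding box, assuming img and boundReg are computed as in smartCrop.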

3. Neural net sensitivity map

Another approach is to use a classifying neural network to find the main objects in an image. Using NetModel[], one can access any neural network in the Wolfram Neural Net Repository. By systematically occluding parts of an image, we can build a sensitivity map from an image classification network. The map shows which part of the image affects the classifier's decision most and is therefore likely to be the most prominent object in the image.
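To make the occlusion scheme concrete, the following sketch lists the relative positions at which the image is covered. It assumes the same step of 1/6 and the same iteration order as networkSensitivityMap in this section; the variable name centers is introduced only for illustration.

```wolfram
(* Occlusion centers in scaled image coordinates (origin at the bottom left) *)
step = 1/6;
centers = Table[{x, y},
   {y, 1 - step/2, step/2, -step},  (* rows, from top to bottom *)
   {x, step/2, 1 - step/2, step}];  (* columns, from left to right *)

Dimensions[centers]  (* {6, 6, 2}: a 6 x 6 grid of {x, y} positions *)
```

Each of the 36 positions yields one occluded copy of the image. The drop in the probability of the originally predicted class at a position indicates how much the classifier relies on that part of the image.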

The following code builds the sensitivity map and blacks out the parts of the image that do not contribute to the classification result:

imgIdNet = NetModel["Wolfram ImageIdentify Net V1"];

(* Cover the image at the relative position pos with a soft-edged patch
   of color mean; r is the patch radius as a fraction of the image
   dimensions *)
Clear[coverImageAt];
coverImageAt[image_Image, pos_List, r_: (1/6),
  mean_: {0.45, 0.45, 0.45}] :=
 Block[{dims = ImageDimensions[image], img, R},
  img = ImageResize[image, dims];
  R = Round[dims r];
  ImageCompose[
   img,
   SetAlphaChannel[
    ConstantImage[
     PadRight[mean, ImageChannels[img]], (2 R + 1) {1, 1},
     ColorSpace -> ImageColorSpace[img]],
    Image@Normalize[GaussianMatrix[{R}], Max]
   ],
   Scaled[pos]
  ]
 ];

(* Occlude the image on a grid, record the probability of the originally
   predicted class for each occlusion, and black out the regions whose
   occlusion changes that probability least *)
Clear[networkSensitivityMap];
networkSensitivityMap[img_Image, network : (_NetChain | _NetGraph),
  opts___Rule] :=
 Block[{concept = network[img, opts], coverImages, w, probs,
   dims = ImageDimensions[img],
   mean = NetExtract[network, {"Input", "MeanImage"}],
   step = 1/6},
  Print[concept];
  coverImages = Table[
    coverImageAt[img, {x, y}, step, mean],
    {y, 1 - step/2, step/2, -step},
    {x, step/2, 1 - step/2, step}
   ];
  w = First@Dimensions[coverImages];
  (* Evaluate the network once on all occluded images and arrange the
     probabilities of the original class as a grid *)
  probs = Partition[
    Lookup[network[Flatten[coverImages], "Probabilities", opts],
     concept], w];
  RemoveAlphaChannel[
   SetAlphaChannel[
    img,
    ImageAdjust@(
      Blur[ImageResize[
         ImageAdjust@ColorNegate@Image[probs], dims],
        dims step/Sqrt[2]]^2)
   ],
   Black
  ]
 ];

After that, we binarize the result to drop the dark parts of the image and take the largest remaining region as the crop area:

smartCropSensMap[input_Image] :=
 Block[{img = ImageCrop[input], mbin, sensMap, rects,
   imgNet = NetModel["Wolfram ImageIdentify Net V1"]},
  sensMap = networkSensitivityMap[img, imgNet];
  mbin = MorphologicalBinarize[sensMap];
  (* Bounding boxes of the connected bright regions *)
  rects =
   Apply[Rectangle,
    ComponentMeasurements[mbin, "BoundingBox"][[All, 2]], {1}];
  (* Highlight the largest one as the crop area *)
  HighlightImage[img, Last@SortBy[rects, Area]]];

Summary

We covered three approaches to finding the most important parts of an image: saliency maps, image keypoints, and neural network sensitivity maps.

Future work

Future work may consist of building a neural network like YOLO from scratch using public datasets (CAT2000, MIT300) and trying to achieve a high score.