I am impressed by the use of an NN here. This may be a bit off topic, but I would like to make a small remark: when nothing more than an extrapolation is wanted, a simple fit will do, e.g.:

ClearAll["Global`*"]

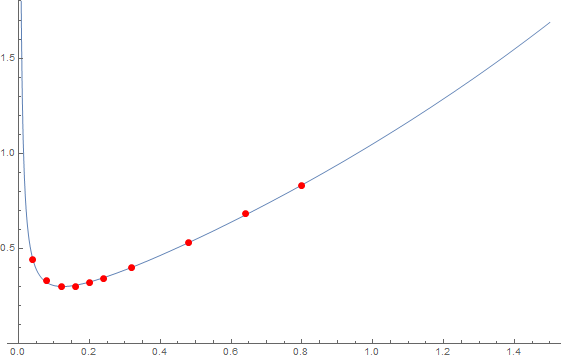

ydata = {0.44, 0.33, 0.3, 0.3, 0.32, 0.34, 0.4, 0.53, 0.68, 0.83};

xdata = {0.04, 0.08, 0.12, 0.16, 0.2, 0.24, 0.32, 0.48, 0.64, 0.8};

data = Transpose[{xdata, ydata}];

model = (a + b x + c x^2)/(d x + e x^2);

fit = FindFit[data, model, {a, b, c, d, e}, x];

Plot[{Evaluate[model /. fit]}, {x, 0, 1.5}, Epilog -> {Red, PointSize[Large], Point[data]}, PlotRange -> {0, 1.8}]
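
To actually read off an extrapolated value, and to get a rough idea of how far the curve can be trusted, something like the following sketch could be appended. It assumes `data`, `model`, and `fit` from the code above are already defined. The names `fitted`, `residuals`, and `poles`, and the sample point `x = 1.2`, are my own choices for illustration.

```mathematica
(* Assumes data, model and fit from the code above are already defined. *)

(* Turn the fitted expression into a function of x (hypothetical name) *)
fitted[x_] = model /. fit;

(* Residuals at the measured points: how closely the curve follows the data *)
residuals = data[[All, 2]] - (fitted /@ data[[All, 1]]);
Max[Abs[residuals]]

(* Zeros of the fitted denominator; the curve has poles there, so they
   should lie outside the range in which you want to extrapolate *)
poles = x /. Solve[(d x + e x^2 /. fit) == 0, x]

(* Extrapolated value at x = 1.2, beyond the last data point at x = 0.8 *)
fitted[1.2]
```

Two things to keep in mind. The model is unchanged when all five parameters are multiplied by a common factor, so the individual values returned by `FindFit` are not unique, even though the fitted curve is; fixing one parameter (for example `d = 1`) would remove that ambiguity. And the denominator `d x + e x^2` vanishes at `x = 0` and at `x = -d/e`, so the extrapolation is only meaningful away from those points.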