Hello Sebastien,

This AWS support article describes the configuration necessary to mount an EFS file system in AWS Batch job containers. I found it easiest to make a modified version of the RemoteBatchSubmit submission environment CloudFormation template, which I've uploaded here. (You can use this link to open the template in the CloudFormation console.) This template has an additional parameter field at the top for entering an EFS file system ID.

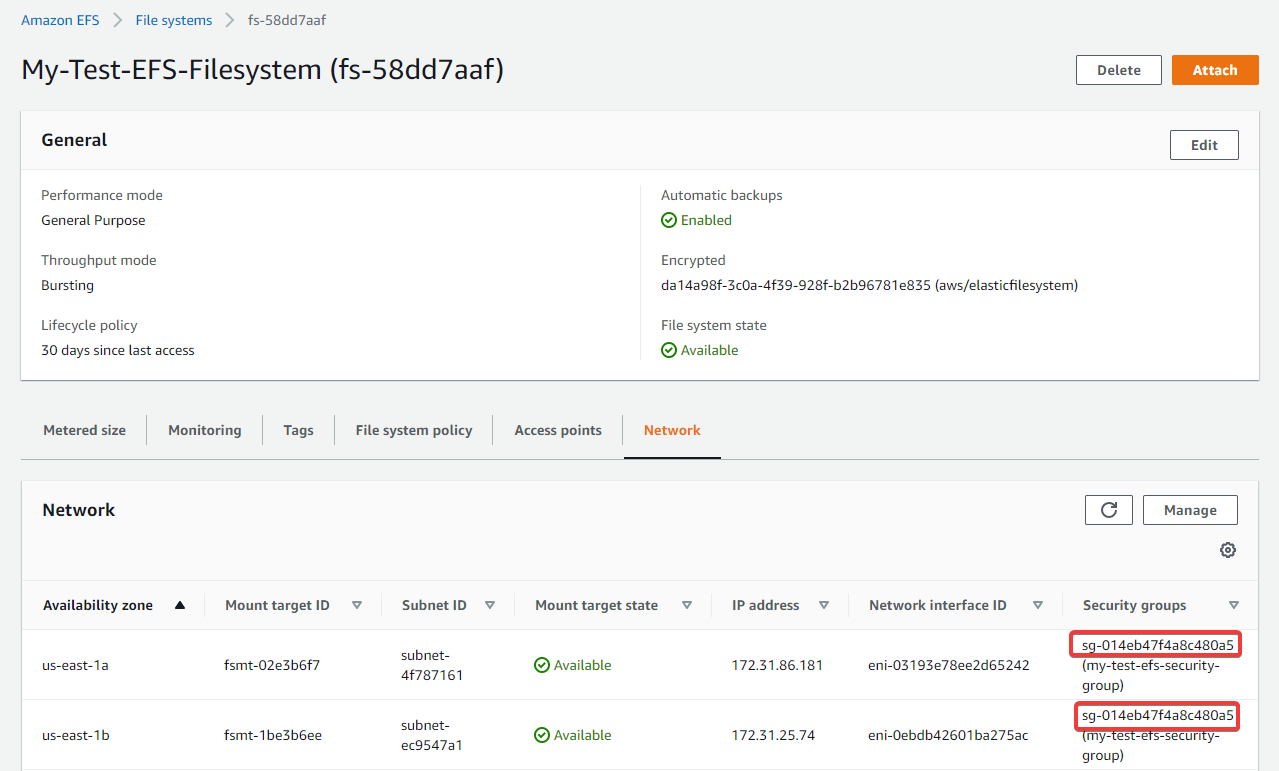
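For reference, here is a rough sketch of the kind of job definition settings AWS Batch uses to mount an EFS file system in a container. It is not copied from the template. The helper name `efs_container_properties`, the volume name `efs`, and the file system ID are placeholders chosen for illustration, and it assumes the volume is attached through the job definition's `efsVolumeConfiguration`.

```python
def efs_container_properties(file_system_id, container_path="/mnt/efs", volume_name="efs"):
    """Build the EFS-related part of an AWS Batch job definition's containerProperties.

    Hypothetical helper: the volume name and default container path are
    illustrative choices, not values taken from the CloudFormation template.
    """
    return {
        # Declares the EFS file system as a volume available to the job.
        "volumes": [
            {
                "name": volume_name,
                "efsVolumeConfiguration": {"fileSystemId": file_system_id},
            }
        ],
        # Mounts that volume inside the container at the given path.
        "mountPoints": [
            {
                "sourceVolume": volume_name,
                "containerPath": container_path,
                "readOnly": False,
            }
        ],
    }


if __name__ == "__main__":
    import json

    # "fs-12345678" is a placeholder file system ID.
    print(json.dumps(efs_container_properties("fs-12345678"), indent=2))
```

The resulting dictionary would be merged into the `containerProperties` of a job definition. In the template, the file system ID comes from the parameter field shown above.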

After creating a stack from the template, there's one more manual step required. We have to modify the EFS file system mount targets' security group to allow inbound NFS connections from the compute instance security group created by the CloudFormation template. Take note of the mount targets' security group ID in the EFS console:

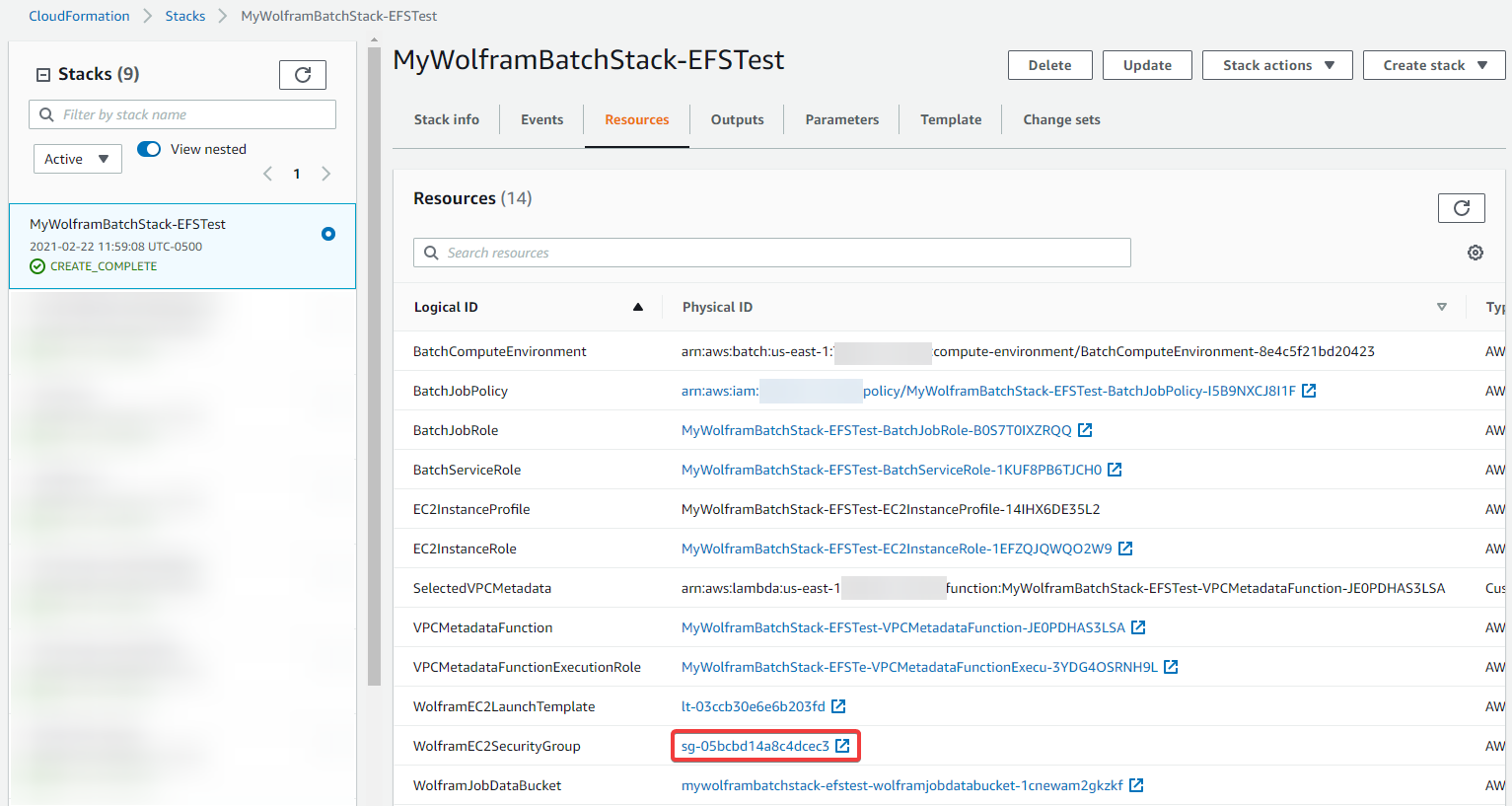

Also take note of the instance security group ID (WolframEC2SecurityGroup) created by the CloudFormation stack:

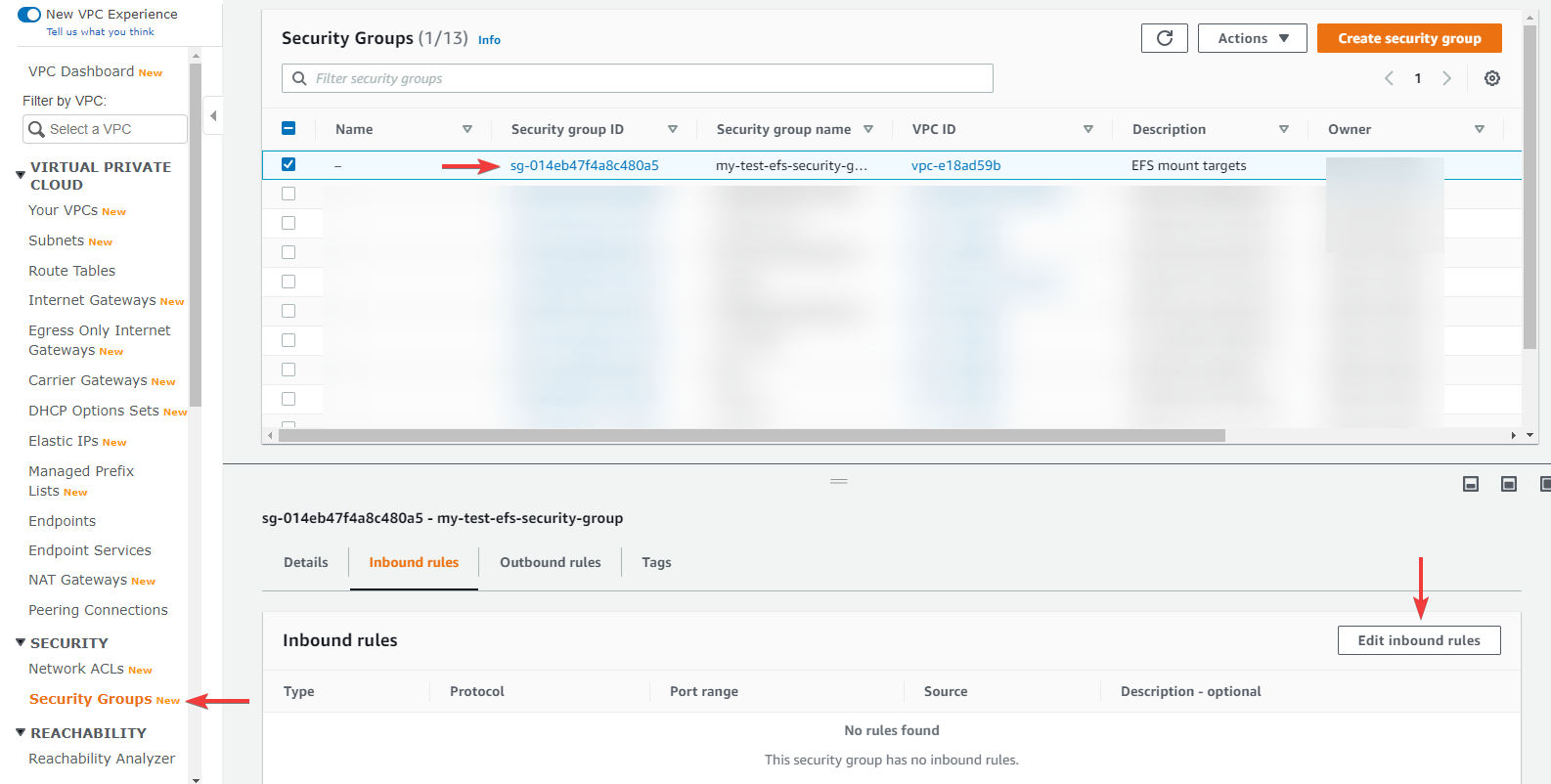

Find the EFS mount targets' security group in the VPC console and edit its inbound rules:

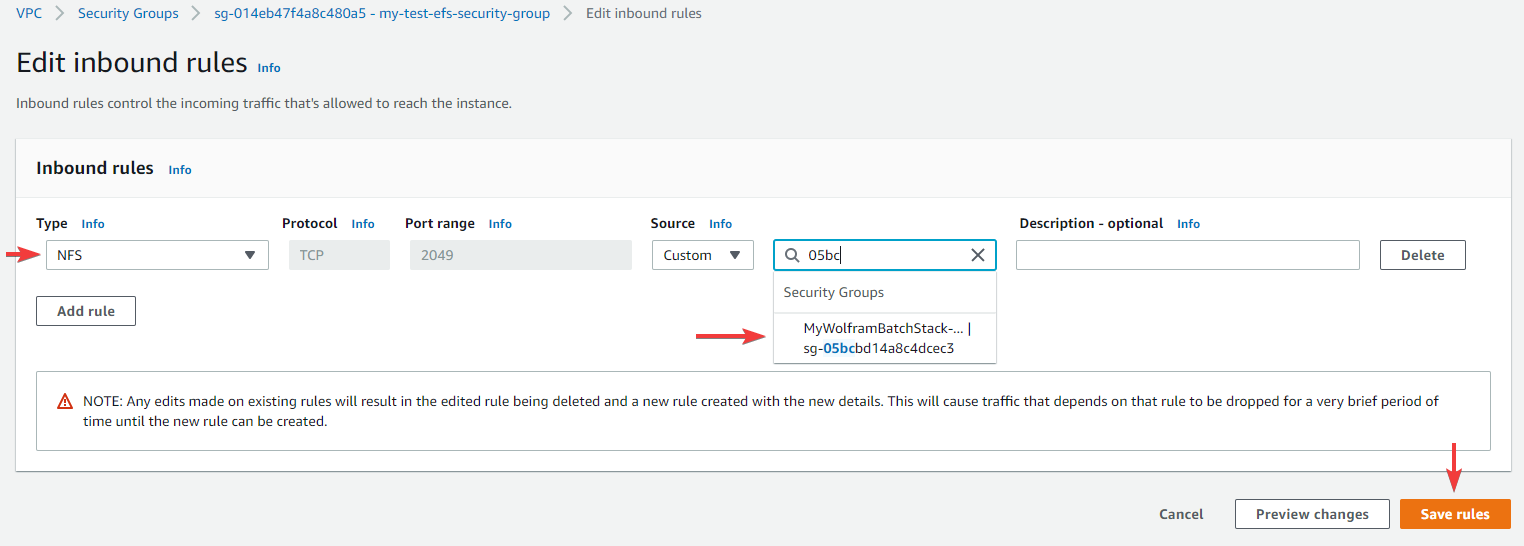

Add an "NFS"-type rule (TCP port 2049) with the instance security group as its source.
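If you would rather script this step than click through the console, something like the following should be equivalent. This is a minimal sketch assuming boto3 is installed and your AWS credentials are configured. The function names are my own, and the security group IDs are placeholders for the two IDs noted above.

```python
NFS_PORT = 2049  # standard NFS port, which is what the console's "NFS" rule type opens


def nfs_ingress_rule(instance_sg_id):
    """Describe an inbound TCP 2049 rule whose source is another security group."""
    return {
        "IpProtocol": "tcp",
        "FromPort": NFS_PORT,
        "ToPort": NFS_PORT,
        "UserIdGroupPairs": [
            {
                "GroupId": instance_sg_id,
                "Description": "NFS from Batch compute instances",
            }
        ],
    }


def allow_nfs_from_instances(efs_sg_id, instance_sg_id, region=None):
    """Add the NFS rule to the EFS mount targets' security group."""
    import boto3  # imported here so the rule builder above works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    return ec2.authorize_security_group_ingress(
        GroupId=efs_sg_id,
        IpPermissions=[nfs_ingress_rule(instance_sg_id)],
    )


if __name__ == "__main__":
    # Placeholder IDs: substitute the mount targets' security group and the
    # WolframEC2SecurityGroup ID from your stack before calling
    # allow_nfs_from_instances(efs_sg_id, instance_sg_id).
    print(nfs_ingress_rule("sg-0123456789abcdef0"))
```

Using the instance security group as the rule's source, rather than a CIDR range, means only instances launched into that group can reach the mount targets.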

Jobs submitted to this environment should now be able to access the EFS volume mounted at /mnt/efs.

Let me know if this works for you or if you encounter any problems.