The neural network and machine learning framework has become one of the key features of the latest releases of the Wolfram Language. Training neural networks can be very time consuming on a standard CPU. Luckily the Wolfram Language offers an incredibly easy way to use a GPU to train networks, and to do lots of other cool stuff. The problem with this was (and is) that most current Macs do not have an NVIDIA graphics card, which is necessary to access this framework within the Wolfram Language. Therefore, Wolfram Inc. had decided to drop support for GPUs on Macs. There is, however, a way to use GPUs on Macs: for example, you can use an external GPU like the one offered by Bizon.

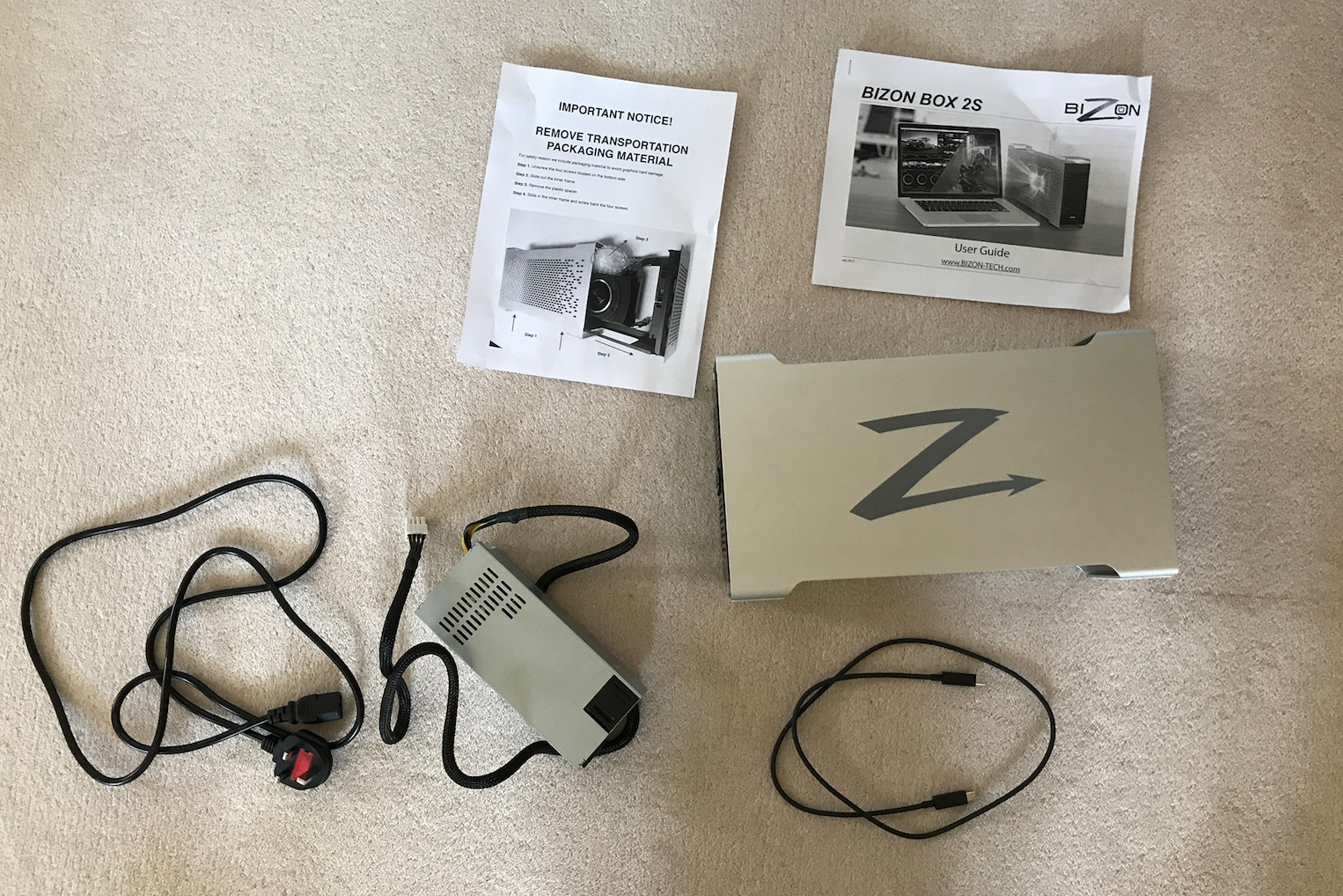

Apart from the BizonBox there are a couple of cables and a power supply. You can buy and configure different versions of the BizonBox: there is a range of graphics cards available, and you can choose between the BizonBox 2s, which connects via Thunderbolt, and the BizonBox 3, which connects via USB-C.

Luckily, Wolfram have decided to reintroduce support for GPUs in Mathematica 11.1.1 - see the discussion here.

I have a variety of these BizonBoxes (both 2s and 3) and a range of Macs. I thought it would be a good idea to post a how-to. The essence of what I will be describing in this post should work for most Macs. I ran Sierra on all of them. Here is the recipe to get the thing to work:

Installation of the BizonBox, the required drivers, and compilers

I will assume that you have Sierra installed and that Xcode is running. One of the really important steps if you want to use compilers is to downgrade the command line tools to version 7.3. You will have to log into your Apple Developer account and download the Command Line Tools version 7.3. Install the tools and run the following terminal command (not in Mathematica!):

sudo xcode-select --switch /Library/Developer/CommandLineTools

Reboot your Mac into recovery mode, i.e. hold CMD+R while rebooting.

Open a terminal (under the Utilities menu at the top of the screen).

Enter

csrutil disable

Shut the computer down.

Connect your BizonBox to the mains and to either the Thunderbolt or the USB-C port of your Mac.

Restart your Mac.

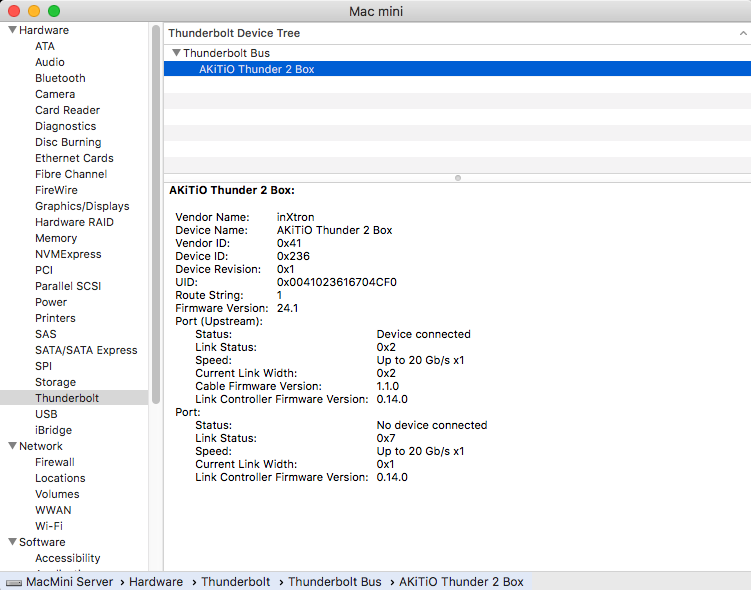

Click on the Apple symbol in the top left. Then "About this Mac" and "System Report". In the Thunderbolt section you should see something like this:

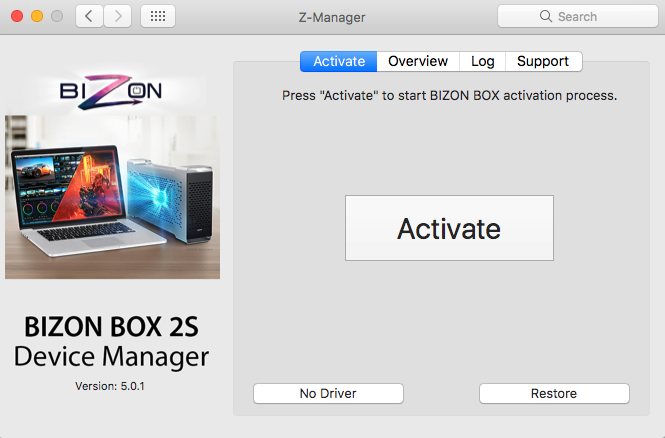

In the documentation of the BizonBox you will find a link to a program called bizonboxmac.zip. Download that file and unzip it.

Open the folder and click on "bizonbox.prefPane" to install. (If prompted to, do update!)

You should see this window:

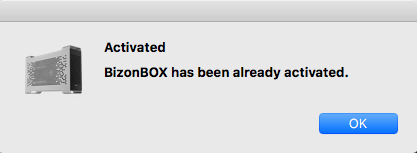

- Click on Activate. Type in your password if prompted. It should give something like this:

Then restart.

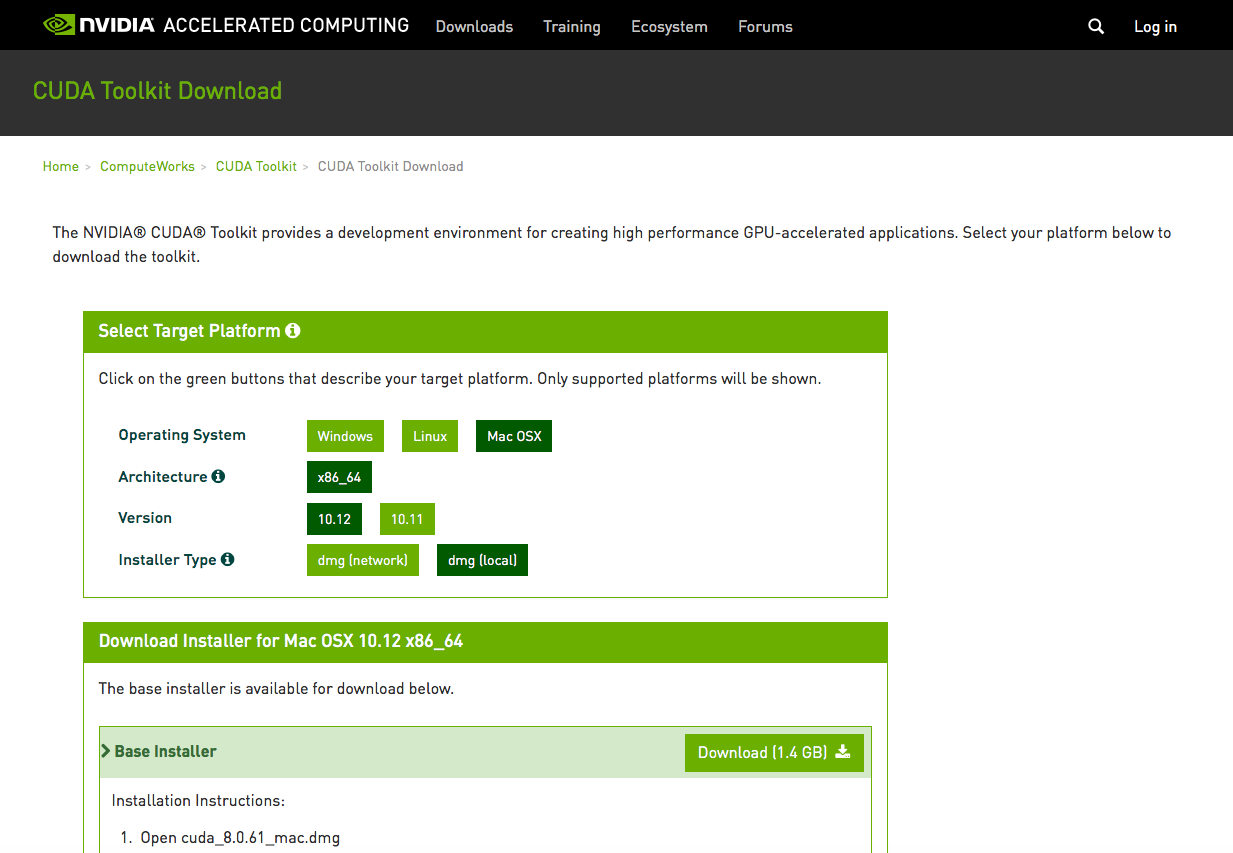

- Install the CUDA Toolkit: https://developer.nvidia.com/cuda-downloads. You'll have to click through some questions for the download.

What you download should be something like cuda_8.0.61_mac.dmg, and it should be about 1.44 GB.

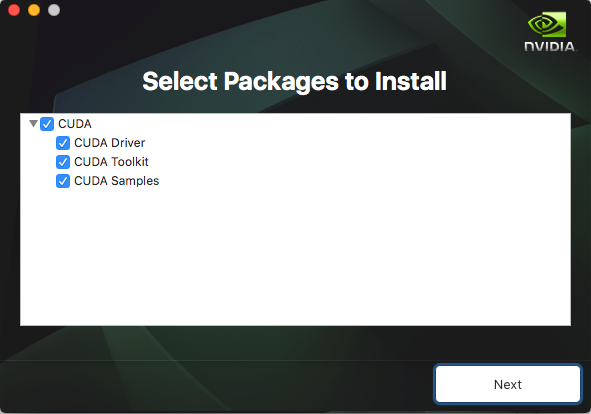

- Install the toolkit with all its elements.

- Restart your computer.
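Before moving on, the installation steps above can be double-checked from a notebook. The following is only a sketch: `shellOut` is a helper name I made up, and the `nvcc` path is the one used in the compiler section further down, so adjust it if your toolkit lives elsewhere.

```wolfram
(* Run a command and return its trimmed standard output,
   or $Failed if the command could not be started. *)
shellOut[cmd_List] := Module[{res = Quiet@RunProcess[cmd]},
  If[AssociationQ[res], StringTrim[res["StandardOutput"]], $Failed]]

(* Active developer directory; after the switch above this should be
   /Library/Developer/CommandLineTools *)
shellOut[{"xcode-select", "-p"}]

(* System Integrity Protection status; should report that it is disabled *)
shellOut[{"csrutil", "status"}]

(* CUDA compiler version; the path is an assumption taken from later in this post *)
shellOut[{"/Developer/NVIDIA/CUDA-8.0/bin/nvcc", "--version"}]
```

If any of these returns `$Failed` or an unexpected string, it is probably worth revisiting the corresponding step before opening CUDALink.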

First tests

Now you should be good to go. Open Mathematica 11.1.1. Execute

Needs["CUDALink`"]

Needs["CCompilerDriver`"]

CUDAResourcesInstall[]

Then try:

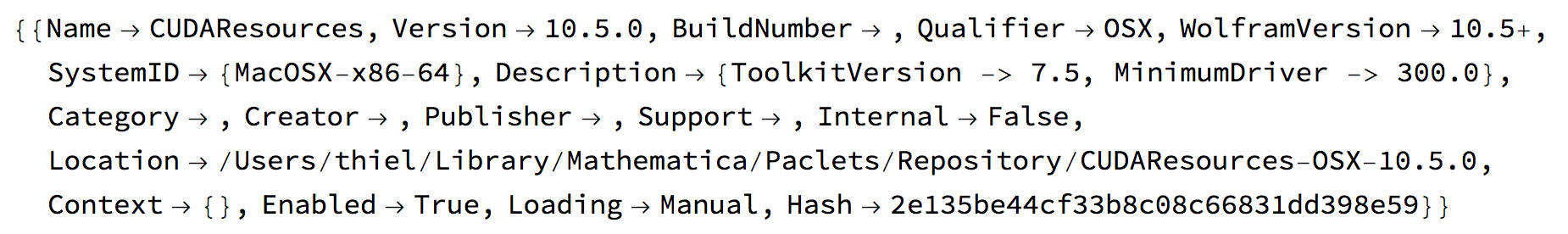

CUDAResourcesInformation[]

which should look somewhat like this:

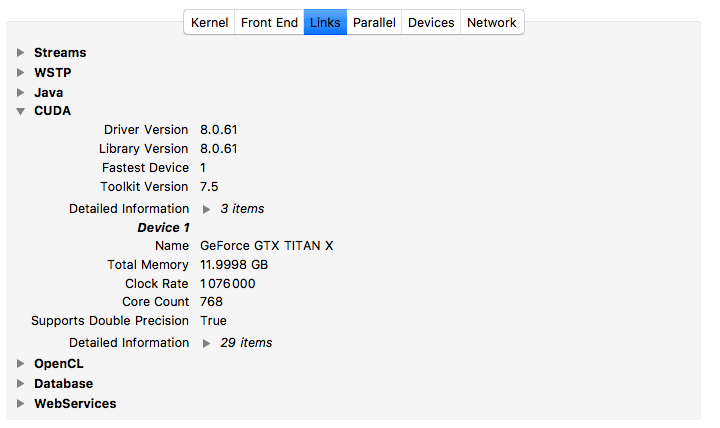

Then you should check

SystemInformation[]

Head to Links and then CUDA. This should look similar to this:

So far so good. Next is the really crucial thing:

CUDAQ[]

should give True. If that's what you see, you are good to go. Be more daring and try
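If you prefer to collect the basic checks in one place, something along these lines might help. It is a minimal sketch that assumes a single external GPU showing up as device 1; the association keys are my own labels.

```wolfram
Needs["CUDALink`"]

(* Gather a few facts about the CUDA setup, but only if CUDAQ[] succeeds. *)
If[TrueQ[CUDAQ[]],
 <|
  "DeviceCount" -> $CUDADeviceCount,          (* number of CUDA devices found *)
  "DeviceName" -> CUDAInformation[1, "Name"], (* assumes the eGPU is device 1 *)
  "DriverVersion" -> CUDADriverVersion[]
 |>,
 Print["CUDAQ[] did not return True; recheck the driver and toolkit installation."]
]
```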

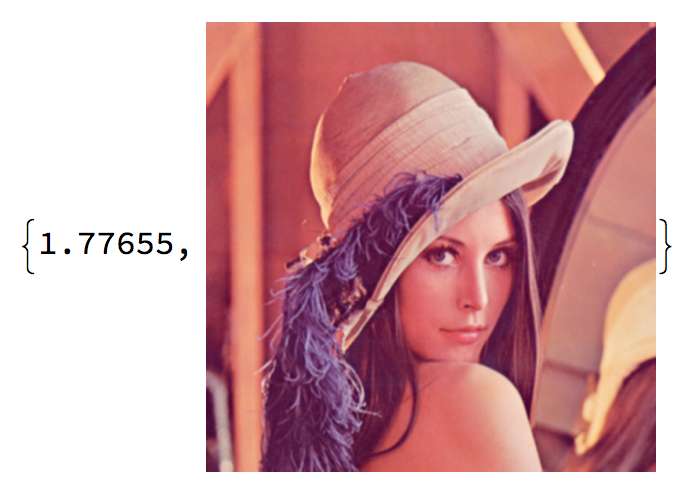

CUDAImageConvolve[ExampleData[{"TestImage","Lena"}], N[BoxMatrix[1]/9]] // AbsoluteTiming

You might notice that the non-GPU version of this command runs faster:

ImageConvolve[ExampleData[{"TestImage","Lena"}], N[BoxMatrix[1]/9]] // AbsoluteTiming

runs in something like 0.0824 seconds, but that's ok.
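My guess is that for such a small image the overhead of moving data to and from the GPU dominates. A rough way to probe this is to time both versions over a range of image sizes. This is only a sketch: the sizes are arbitrary, and random images stand in for the test image.

```wolfram
Needs["CUDALink`"]

kernel = N[BoxMatrix[1]/9];
sizes = {256, 1024, 4096};  (* arbitrary image side lengths *)

(* For each size: {side length, CPU seconds, GPU seconds} *)
timings = Table[
   With[{img = RandomImage[1, {n, n}]},
    {n,
     First@RepeatedTiming[ImageConvolve[img, kernel]],
     First@RepeatedTiming[CUDAImageConvolve[img, kernel]]}],
   {n, sizes}];

TableForm[timings, TableHeadings -> {None, {"size", "CPU (s)", "GPU (s)"}}]
```

Whether and where the GPU overtakes the CPU will depend on the card and the connection, so treat the numbers as indicative only.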

Benchmarking (training neural networks)

Let's do some benchmarking. Download some example data:

obj = ResourceObject["CIFAR-10"];

trainingData = ResourceData[obj, "TrainingData"];

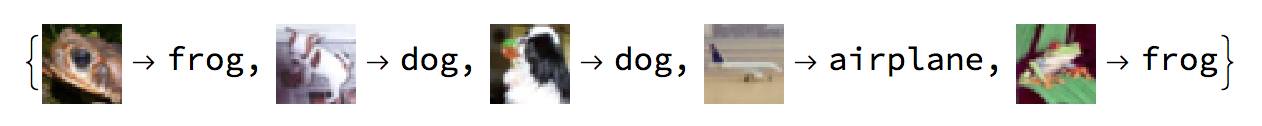


You can check whether it worked:

RandomSample[trainingData, 5]

should give something like this:

These are the classes of the 50000 images:

classes = Union@Values[trainingData]
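To see how the images are spread over the classes, you can count the labels. The helper name `classCounts` is made up for this sketch; it assumes the data is a list of rules of the form image -> label, as `trainingData` is here.

```wolfram
(* Count how often each label occurs in a list of rules input -> label. *)
classCounts[data_List] := Counts[Values[data]]

(* Toy example: *)
classCounts[{1 -> "cat", 2 -> "dog", 3 -> "cat"}]
(* <|"cat" -> 2, "dog" -> 1|> *)

(* With the real data: classCounts[trainingData] *)
```

For the standard CIFAR-10 training set I would expect the counts to be equal across the ten classes.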

Let's build a network

module = NetChain[{ConvolutionLayer[100, {3, 3}],

BatchNormalizationLayer[], ElementwiseLayer[Ramp],

PoolingLayer[{3, 3}, "PaddingSize" -> 1]}]

net = NetChain[{module, module, module, module, FlattenLayer[], 500,

Ramp, 10, SoftmaxLayer[]},

"Input" -> NetEncoder[{"Image", {32, 32}}],

"Output" -> NetDecoder[{"Class", classes}]]

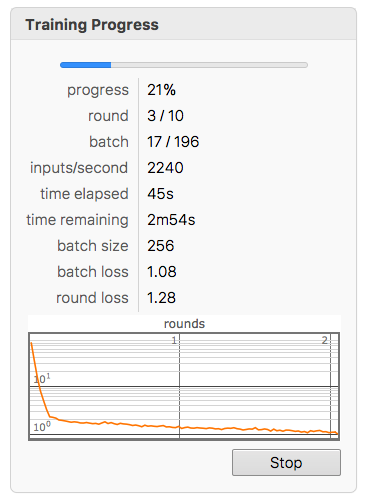

When you train the network:

{time, trained} = AbsoluteTiming@NetTrain[net, trainingData, Automatic, "TargetDevice" -> "GPU"];

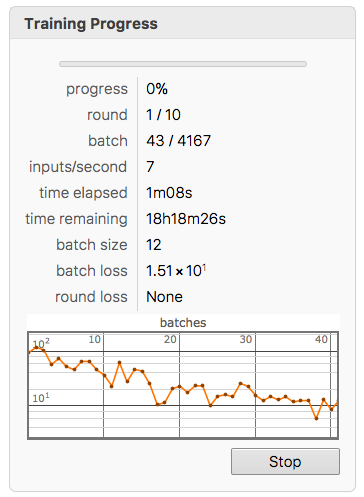

you should see something like this:

So the thing started 45 secs ago and is supposed to finish in 2m54s. In fact, it finished after 3m30s. If we run the same on the CPU we get:

The estimate kept changing a bit, but it settled down at about 18h20m. That is slower by a factor of about 315, which is quite substantial.
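If you do not want to wait many hours for the CPU run, a shorter comparison on a subset gives a rough idea. This is a sketch only: the subset size and the single training round are arbitrary choices of mine, `timeOn` is a made-up helper, and the ratio on a short run need not match the full run.

```wolfram
(* Time one training round on a small random subset, on a given device. *)
subset = RandomSample[trainingData, 2000];

timeOn[device_String] := First@AbsoluteTiming[
   NetTrain[net, subset, Automatic,
    "TargetDevice" -> device, MaxTrainingRounds -> 1]]

gpuShort = timeOn["GPU"];
cpuShort = timeOn["CPU"];
cpuShort/gpuShort  (* speed-up on the short run *)

(* Ratio implied by the full runs quoted above: 18h20m versus 3m30s *)
N[(18*3600 + 20*60)/(3*60 + 30)]
```

The last line just redoes the arithmetic from the two times given above and comes out close to the factor quoted there.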

Use of compiler

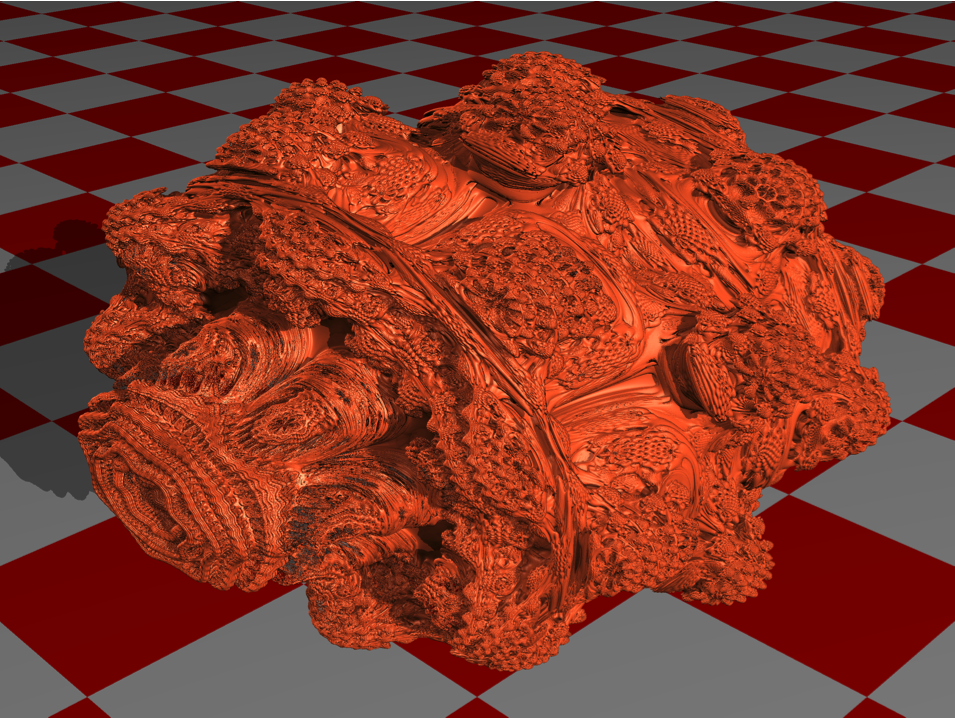

Up to now we have not needed the actual compiler. Let's try this, too. Let's grow a Mandelbulb:

width = 4*640;

height = 4*480;

iconfig = {width, height, 1, 0, 1, 6};

config = {0.001, 0.0, 0.0, 0.0, 8.0, 15.0, 10.0, 5.0};

camera = {{2.0, 2.0, 2.0}, {0.0, 0.0, 0.0}};

AppendTo[camera, Normalize[camera[[2]] - camera[[1]]]];

AppendTo[camera,

0.75*Normalize[Cross[camera[[3]], {0.0, 1.0, 0.0}]]];

AppendTo[camera, 0.75*Normalize[Cross[camera[[4]], camera[[3]]]]];

config = Join[{config, Flatten[camera]}];

pixelsMem = CUDAMemoryAllocate["Float", {height, width, 3}]

srcf = FileNameJoin[{$CUDALinkPath, "SupportFiles", "mandelbulb.cu"}]

Now this should work:

mandelbulb =

CUDAFunctionLoad[File[srcf], "MandelbulbGPU", {{"Float", _, "Output"}, {"Float", _, "Input"}, {"Integer32", _, "Input"}, "Integer32", "Float", "Float"}, {16}, "UnmangleCode" -> False, "CompileOptions" -> "--Wno-deprecated-gpu-targets ", "ShellOutputFunction" -> Print]

Under certain circumstances you might want to specify the location of the compiler like so:

mandelbulb =

CUDAFunctionLoad[File[srcf], "MandelbulbGPU", {{"Float", _, "Output"}, {"Float", _, "Input"}, {"Integer32", _, "Input"}, "Integer32", "Float",

"Float"}, {16}, "UnmangleCode" -> False, "CompileOptions" -> "--Wno-deprecated-gpu-targets ", "ShellOutputFunction" -> Print,

"CompilerInstallation" -> "/Developer/NVIDIA/CUDA-8.0/bin/"]

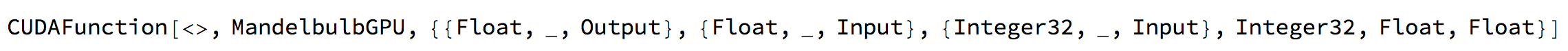

This should give:

Now

mandelbulb[pixelsMem, Flatten[config], iconfig, 0, 0.0, 0.0, {width*height*3}];

pixels = CUDAMemoryGet[pixelsMem];

Image[pixels]

gives

So it appears that all is working fine.
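Memory allocated with CUDAMemoryAllocate stays on the GPU until it is released, so it seems sensible to tidy up once the image has been copied back. A small sketch, with a file name that is just an example:

```wolfram
(* Save the rendered image; the file name is arbitrary. *)
Export["mandelbulb.png", Image[pixels]];

(* Release the GPU buffer once the pixel data is back on the CPU. *)
CUDAMemoryUnload[pixelsMem];
```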

Problems

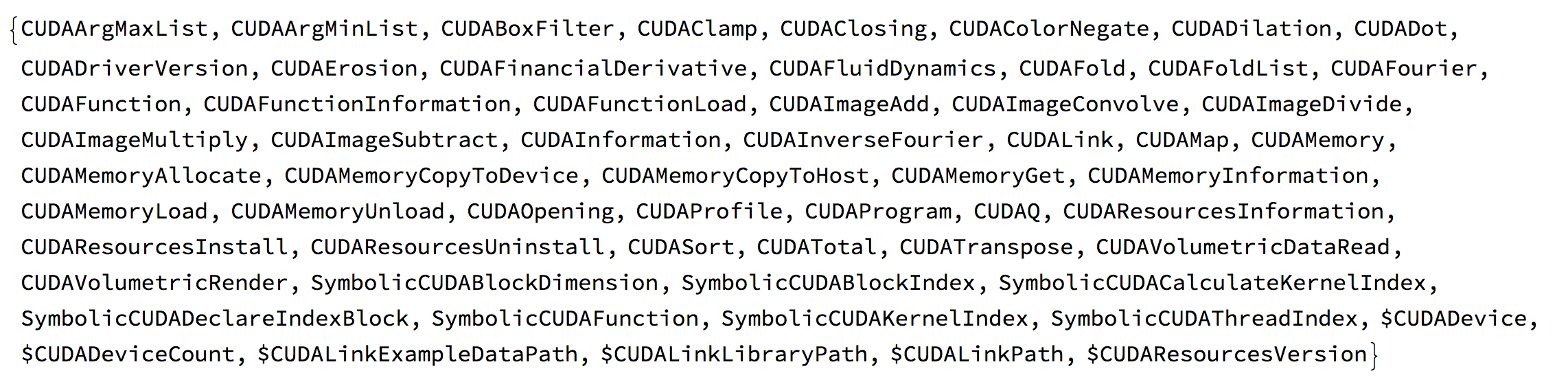

I did come across some problems though. There are quite a number of CUDA functions:

Names["CUDALink`*"]

Many work just fine.

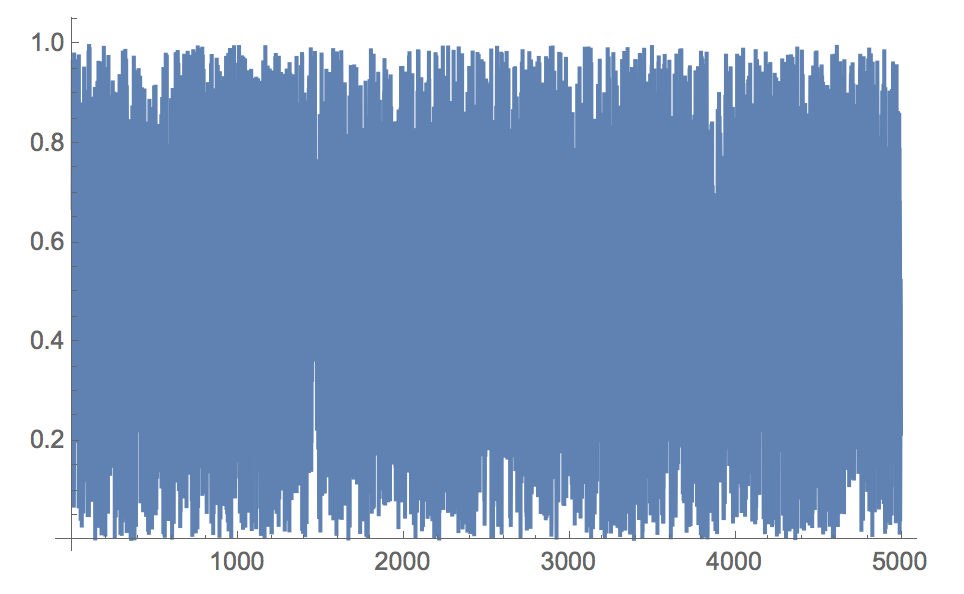

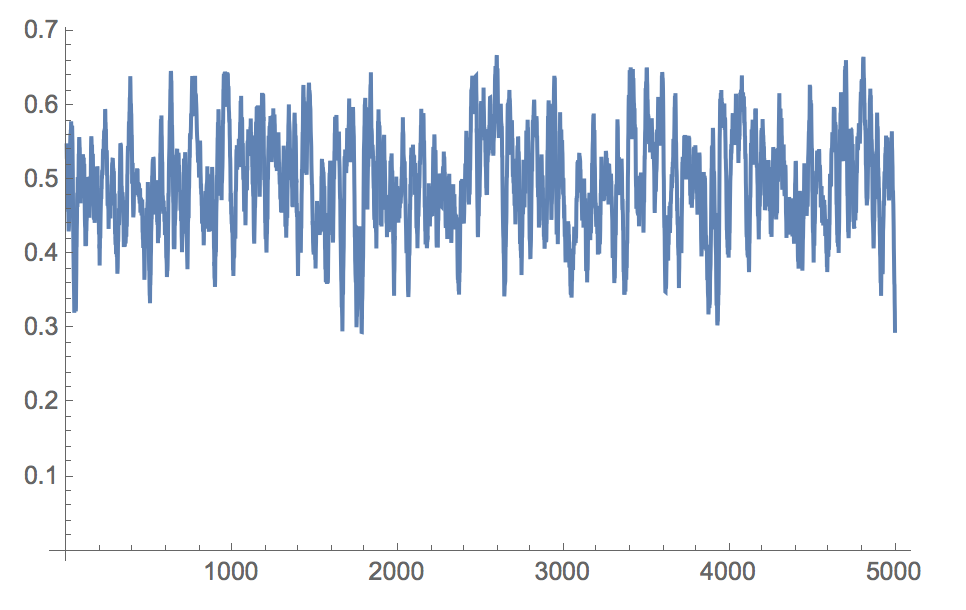

res = RandomReal[1, 5000];

ListLinePlot[res]

ListLinePlot[First@CUDAImageConvolve[{res}, {GaussianMatrix[{{10}, 10}]}]]

The thing is that some don't, and I am not sure why (I have a hypothesis though). Here are some functions that do not appear to work:

CUDAColorNegate CUDAClamp CUDAFold CUDAVolumetricRender CUDAFluidDynamics

and some more. I would be very grateful if someone could check these on OSX (and perhaps Windows?). I am not sure whether this is due to some particularity of my systems or something that could be flagged up to Wolfram Inc for checking.

When I tried to check this systematically, I wanted to use the function

WolframLanguageData

to look for the first example in the documentation of the CUDA functions, but it appears that no CUDA function is in WolframLanguageData. I think it would be great to have them there, too, and I am not sure why they aren't.
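In the absence of that, a hand-written test harness is one way to check functions systematically. The following is a sketch under assumptions: `tryCall` is a helper name I made up, and the argument lists for the three CUDA calls are my guesses at simple representative inputs, not the documentation examples.

```wolfram
Needs["CUDALink`"]

(* Evaluate expr; return $Failed if it issues any message,
   $TimedOut if it runs longer than timeout seconds. *)
SetAttributes[tryCall, HoldFirst];
tryCall[expr_, timeout_: 60] :=
 Quiet@Check[TimeConstrained[expr, timeout, $TimedOut], $Failed]

(* Illustrative calls; swap in whichever functions and inputs you want to test. *)
results = <|
   "CUDAFold" -> tryCall[CUDAFold[Plus, 0, Range[10]]],
   "CUDAColorNegate" -> tryCall[CUDAColorNegate[RandomImage[1, {64, 64}]]],
   "CUDAClamp" -> tryCall[CUDAClamp[RandomImage[1, {64, 64}], 0.2, 0.8]]
   |>;

(* Names of the calls that failed or timed out *)
Keys@Select[results, # === $Failed || # === $TimedOut &]
```

Posting the resulting list together with the operating system and graphics card would make it easier to compare systems.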

In spite of these problems I hope that this post will help some Mac users to get CUDA going. It is a great framework and simple to use in the Wolfram Language. With the BizonBox and Mathematica 11.1.1 Mac users are no longer excluded from accessing this feature.

Cheers,

Marco

PS: Note that there is anecdotal evidence that one can even use the BizonBox under Windows running in a virtual box under OSX. I don't have Windows, but I'd like to hear if anyone gets this running.