EDIT 3: With help from Alexey Golyshev, the performance of UNET has been considerably improved. The code is available in the GitHub repository (see EDIT 2).

EDIT 2: The code is available as a package: https://github.com/alihashmiii/UNet-Segmentation-Wolfram

EDIT 1: Applying Binarize to the masks after resizing ensures that the mask data stays binary.
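To illustrate why EDIT 1 matters, here is a minimal sketch on a toy 2x2 mask (the names toyMask, resized, valuesBefore and valuesAfter are made up for this example). Resizing interpolates between pixels, so the resized mask can contain gray values other than 0 and 1; Binarize maps everything back to 0 or 1.

```wolfram
(* toy 2x2 checkerboard mask with real-valued pixels 0. and 1. *)
toyMask = Image[{{0., 1.}, {1., 0.}}];

(* upsampling interpolates, so intermediate gray levels can appear *)
resized = ImageResize[toyMask, {5, 5}];
valuesBefore = Union[Flatten[ImageData[resized]]];

(* after Binarize only the values 0 and 1 remain *)
valuesAfter = Union[Flatten[ImageData[Binarize[resized]]]];
```

Comparing valuesBefore with valuesAfter shows what Binarize removes; the exact intermediate values depend on the resampling method that ImageResize picks.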

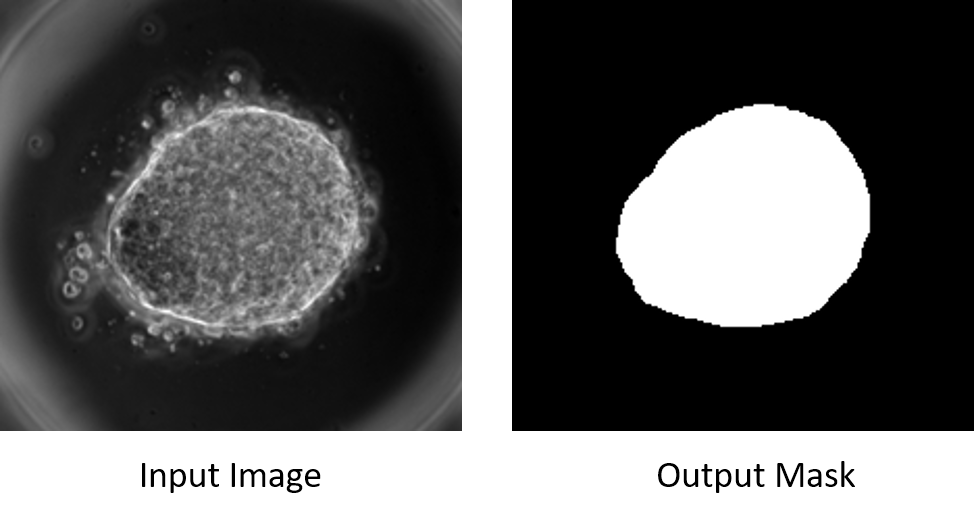

I have attempted to implement in Mathematica the neural network from the R example at https://github.com/rstudio/keras/blob/master/vignettes/examples/unet.R. The network takes a grayscale image of an aggregate of cells and outputs a binarized mask (shown below).

The dataset (images and corresponding labels) for training can be downloaded from:

for images: https://www.dropbox.com/sh/bi0sky2hejjhqit/AAAT3YSzVwvDO0HW3POLJs0Ba?dl=0

for masks: https://www.dropbox.com/sh/yb0itaoyuz2y422/AAB25g6i9A2Mdlz1TwJgfzhka?dl=0

Note: I am not sure how well I translated the network, but it seems to be working. However, I am looking for ways to improve the output that the net generates.

Creating the Net

(* single-channel convolution -> batch normalization -> ReLU *)

convolutionModule[kernelsize_, padsize_, stride_] := NetChain[

{ConvolutionLayer[1, kernelsize, "Stride" -> stride, "PaddingSize" -> padsize],

BatchNormalizationLayer[],

ElementwiseLayer[Ramp]}

];

(* 2x upsampling by deconvolution, followed by a convolution module *)

decoderModule[kernelsize_, padsize_, stride_] := NetChain[

{DeconvolutionLayer[1, {2, 2}, "PaddingSize" -> 0, "Stride" -> {2, 2}],

BatchNormalizationLayer[],

ElementwiseLayer[Ramp],

convolutionModule[kernelsize, padsize, stride]}

];

(* convolution module followed by 2x2 max pooling with stride 2 *)

encodeModule[kernelsize_, padsize_, stride_] := NetAppend[

convolutionModule[kernelsize, padsize, stride],

PoolingLayer[{2, 2}, "Function" -> Max, "Stride" -> {2, 2}]

];

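(* crops a 1-channel feature map to its top-left dim1 x dim2 corner so that it can be concatenated *)

cropLayer[{dim1_, dim2_}] := NetChain[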

{PartLayer[{1, 1 ;; dim1, 1 ;; dim2}],

ReshapeLayer[{1, dim1, dim2}]}

];

UNET := Block[{kernelsize = {3, 3}, padsize = {1, 1}, stride = {1, 1}},

NetGraph[

<|"enc_1" -> encodeModule[kernelsize, 0, stride],

"enc_2" -> encodeModule[kernelsize, padsize, stride],

"enc_3" -> encodeModule[kernelsize, padsize, stride],

"enc_4" -> encodeModule[kernelsize, padsize, stride],

"dropout_1" -> DropoutLayer[],

"enc_5" -> encodeModule[kernelsize, padsize, stride],

"dec_1" -> decoderModule[kernelsize, padsize, stride],

"dec_2" -> decoderModule[kernelsize, padsize + 1, stride],

"crop_1" -> cropLayer[{20, 20}],

"concat_1" -> CatenateLayer[],

"dropout_2" -> DropoutLayer[],

"conv_1" -> convolutionModule[kernelsize, padsize, stride],

"dec_3" -> decoderModule[kernelsize, padsize, stride],

"crop_2" -> cropLayer[{40, 40}],

"concat_2" -> CatenateLayer[],

"dropout_3" -> DropoutLayer[],

"conv_2" -> convolutionModule[kernelsize, padsize, stride],

"dec_4" -> decoderModule[kernelsize, padsize, stride],

"crop_3" -> cropLayer[{80, 80}],

"concat_3" -> CatenateLayer[],

"dropout_4" -> DropoutLayer[],

"conv_3" -> convolutionModule[kernelsize, padsize, stride],

"dec_5" -> decoderModule[kernelsize, padsize, stride],

"map" -> {ConvolutionLayer[1, kernelsize, "Stride" -> stride,

"PaddingSize" -> padsize], LogisticSigmoid}|>,

{{NetPort["Input"] ->

"enc_1" ->

"enc_2" ->

"enc_3" ->

"enc_4" -> "dropout_1" -> "enc_5" -> "dec_1" -> "dec_2"},

"dec_2" -> "crop_1",

{"enc_3", "crop_1"} ->

"concat_1" -> "dropout_2" -> "conv_1" -> "dec_3",

"enc_2" -> "crop_2",

{"crop_2", "dec_3"} ->

"concat_2" -> "dropout_3" -> "conv_2" -> "dec_4",

"enc_1" -> "crop_3",

{"crop_3", "dec_4"} ->

"concat_3" -> "dropout_4" -> "conv_3" -> "dec_5" -> "map"

},

"Input" ->

NetEncoder[{"Image", {168, 168}, ColorSpace -> "Grayscale"}],

"Output" ->

NetDecoder[{"Image", Automatic, ColorSpace -> "Grayscale"}]

]

] // NetInitialize;
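The crop sizes (20, 40, 80) and the 160x160 output follow from the layer arithmetic. The sketch below traces the spatial size through the net, assuming the usual output-size formulas for convolution, pooling and deconvolution; the helper names (convSize, poolSize, deconvSize, encodeSize, decodeSize) are made up for this illustration and are not part of the net.

```wolfram
(* convolution: kernel k, padding p, stride s *)
convSize[n_, k_, p_, s_] := Floor[(n + 2 p - k)/s] + 1;

(* 2x2 max pooling with stride 2 *)
poolSize[n_] := Floor[(n - 2)/2] + 1;

(* 2x2 deconvolution with stride 2 and no padding *)
deconvSize[n_] := 2 (n - 1) + 2;

(* encodeModule: 3x3 convolution with padding p, then pooling *)
encodeSize[n_, p_] := poolSize[convSize[n, 3, p, 1]];

(* decoderModule: deconvolution, then 3x3 convolution with padding p *)
decodeSize[n_, p_] := convSize[deconvSize[n], 3, p, 1];

(* enc_1 uses padding 0, enc_2 to enc_5 use padding 1 *)
encoderSizes = Rest@FoldList[encodeSize, 168, {0, 1, 1, 1, 1}];
(* {83, 41, 20, 10, 5} *)

dec1 = decodeSize[5, 1];   (* 10 *)
dec2 = decodeSize[dec1, 2]; (* 22, cropped to 20 by crop_1 *)
dec3 = decodeSize[20, 1];  (* 40, matches enc_2 cropped from 41 to 40 *)
dec4 = decodeSize[dec3, 1]; (* 80, matches enc_1 cropped from 83 to 80 *)
dec5 = decodeSize[dec4, 1]; (* 160, the size of the output mask *)
```

This is why the input images are resized to 168x168 while the masks are resized to 160x160.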

Training the Net

dir = "C:\\Users\\aliha\\Downloads\\fabrice-ali\\deeplearning\\data\\train\\train_images_8bit";

SetDirectory[dir];

images = ImageResize[Import[dir <> "\\" <> #], {168, 168}] & /@ FileNames[];

(* we shuffle the images *)

shuffledimages = RandomSample@Thread[Range@Length@images -> images];

keysshuffle = Keys@shuffledimages;

dir = "C:\\Users\\aliha\\Downloads\\fabrice-ali\\deeplearning\\data\\train\\train_masks";

SetDirectory[dir];

masks = Binarize[ImageResize[Import[dir <> "\\" <> #],{160, 160}]]&/@FileNames[];

(* resize masks to 160,160 same size as the output of the net *)

(* shuffle the masks -> same order as images *)

shuffledmasks = Lookup[<|Thread[Range@Length@masks -> masks]|>, keysshuffle];
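A possibly more compact way to keep images and masks aligned is to pair them first and shuffle the pairs. This is only a sketch on toy data; strings stand in for the images and masks, and all names in it (toyImages, toyMasks, pairs, shuffledToyImages, shuffledToyMasks) are hypothetical.

```wolfram
(* strings stand in for the imported images and masks *)
toyImages = {"img1", "img2", "img3", "img4"};
toyMasks = {"mask1", "mask2", "mask3", "mask4"};

(* pair each image with its mask, then shuffle the pairs together *)
pairs = RandomSample[Thread[toyImages -> toyMasks]];

(* the two lists stay aligned because they are read from the same pairs *)
shuffledToyImages = Keys[pairs];
shuffledToyMasks = Values[pairs];
```

Since NetTrain accepts a list of rules, the shuffled pairs could also be split with TakeList and passed on directly.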

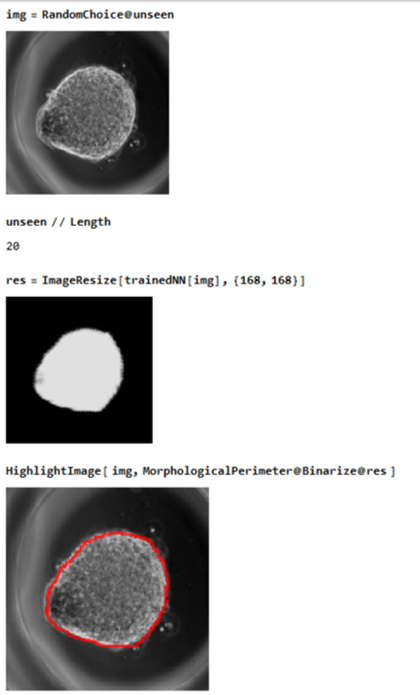

{dataset, validationset, unseen} = TakeList[Values@shuffledimages, {290, 80, 20}];

{labeldataset, labelvalidationset, groundTruth} = TakeList[shuffledmasks, {290, 80, 20}];

(* train the UNET defined above; the original call passed an undefined symbol net *)

trainedNN =

NetTrain[UNET, Thread[dataset -> labeldataset],

ValidationSet -> Thread[validationset -> labelvalidationset], BatchSize -> 8,

MaxTrainingRounds -> 100, TargetDevice -> "GPU" ];

As the image above shows, the net seems to be working after 100 rounds of training. It segments some images reasonably well and others poorly. I think the performance can be improved, and I am happy to credit anyone who helps me improve it.
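One way to put a number on "reasonably well" versus "poorly" is an overlap score such as the Dice coefficient between a predicted mask and its ground truth. The function below is a minimal sketch, not part of the original code: diceScore, toyPred and toyTruth are hypothetical names, the 0.5 threshold is an assumption, and both images are assumed to be single-channel and of equal size.

```wolfram
(* Dice coefficient 2|A ∩ B| / (|A| + |B|) for two grayscale images *)
diceScore[pred_Image, truth_Image] := Module[{p, t, total},
  (* threshold both images at 0.5 to get flat lists of 0s and 1s *)
  p = UnitStep[Flatten[ImageData[pred]] - 0.5];
  t = UnitStep[Flatten[ImageData[truth]] - 0.5];
  total = Total[p] + Total[t];
  (* two empty masks are treated as a perfect match *)
  If[total == 0, 1., 2. Total[p t]/total]
];

(* toy example: the prediction marks 2 pixels, the truth 1, and they share 1 *)
toyPred = Image[{{0.9, 0.2}, {0.8, 0.1}}];
toyTruth = Image[{{1., 0.}, {0., 0.}}];
diceScore[toyPred, toyTruth] (* 2*1/(2 + 1), about 0.667 *)
```

Applied to the held-out data, this could look like MapThread[diceScore, {trainedNN /@ unseen, groundTruth}], assuming the trained net returns 160x160 grayscale images.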