Introduction

Taking a picture in which all lines are perfectly horizontal is hard for a non-professional photographer. It would be great if the orientation of the picture could be corrected automatically. The sensor in the camera can handle 90-degree rotations when we turn the phone between horizontal and vertical while taking an image. But what can we do when there are no useful horizontal or vertical lines, or when such lines only create confusion? Predicting the orientation accurately sometimes requires understanding the image content. This is what deep learning networks are very good at: a convolutional network can learn the features and predict the orientation. In this article, I describe the approaches and steps I used to predict the orientation of an image. I used three datasets in my project; the details are discussed below.

1. MNIST dataset with LeNet model

The MNIST dataset is a good choice for quickly prototyping a new idea, because the images are small and their content is relatively simple. There is no need for a very complex network with many layers that takes a long time to train. If the model works well on simple handwritten digits, we can apply the approach to real-life images. The MNIST data comes from ResourceData, with 60k images for training, 10k for testing and 10k for validation. The image dimensions are 28x28x1. The rotation angles are 0, 90, 180 and 270 degrees; these are the four classes (labels) in our training net.

Image modification is done with the following steps:
- Get the digit images of MNIST from ResourceData [4]
- Rotate each image by each of the 4 rotations
- Make a label for each image, in the form image -> label
- Save the result into an .mx file, which will be used for training later

The code is shown below.

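(* Images (the keys) of the MNIST training set, which is stored as image -> digit rules *)
list = Keys[ResourceData["MNIST", "TrainingData"]];
imgs = list;

(* Rotate one image by 0, 90, 180 and 270 degrees *)
rotateAll[x_] :=
  Table[ImageRotate[x, i Degree, Background -> None], {i, 0, 270, 90}];
rotatedImage = rotateAll /@ imgs;

(* One row of labels {"0", "90", "180", "270"} per image *)
labelList = Table[ToString[i], Length[imgs], {i, 0, 270, 90}];

(* Pair every rotated image with its angle label and save the result *)
imageWithLabel = Thread[Flatten[rotatedImage] -> Flatten[labelList]];
SetDirectory["/Users/nguyen/Documents"];
Export["mnist_trained_set.mx", imageWithLabel];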
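The curation steps above can also be written for an arbitrary angle step, which is convenient when moving from four rotations to finer ones. The following is only a minimal sketch: the helper names rotateByStep and labelRotations are hypothetical and not part of the original project, and the small sample of 100 digits is there just to keep the check fast.

```wolfram
(* Hypothetical helper: rotate one image by every multiple of `step` degrees below 360 *)
rotateByStep[img_, step_Integer] :=
  Table[ImageRotate[img, a Degree, Background -> None], {a, 0, 360 - step, step}];

(* Hypothetical helper: pair each rotated copy with its angle as a string label *)
labelRotations[imgs_List, step_Integer] :=
  Flatten[
   Function[img,
     Thread[rotateByStep[img, step] ->
       (ToString /@ Range[0, 360 - step, step])]] /@ imgs];

(* Small check on the first 100 MNIST training digits with a 30-degree step *)
sample = Take[Keys[ResourceData["MNIST", "TrainingData"]], 100];
sampleSet = labelRotations[sample, 30];

(* 100 images x 12 angles = 1200 labeled examples *)
Length[sampleSet]
```

Building the image and its label in the same Thread call keeps the two in lockstep, so the pairing does not depend on two separately flattened lists having the same order.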

The same procedure is applied to the test set. The results for four rotations are very good, so I increased the number of rotations by using steps of 30 degrees. The training code for this dataset is given below. I used the LeNet model, with 20% of the training set held out as the validation set.

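(* Labeled training set with rotations in 30-degree steps *)
trainSet =
  Import["/Users/nguyen/Documents/mnist_trained_set_30deg.mx"];

(* Train LeNet, holding out 20% of the training set for validation *)
leNet = NetTrain[NetModel["LeNet"], trainSet,
   ValidationSet -> Scaled[0.2]]
Export["/Users/nguyen/Documents/leNetTrain.wlnet", leNet];

(* Evaluate the trained net on the rotated test set *)
testSet = Import["/Users/nguyen/Documents/mnist_test_set_30deg.mx"];
res = ClassifierMeasurements[leNet, testSet];
res["Accuracy"]
res["ConfusionMatrixPlot"]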

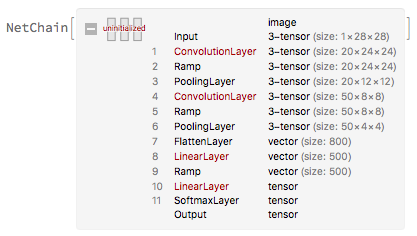

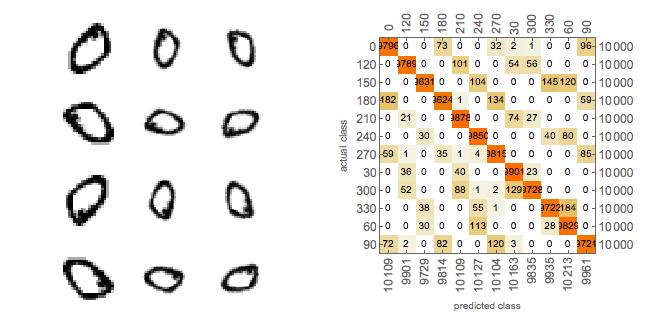

The LeNet network is trained with all 60k images and with all layers trainable, as shown in the training plot.

The figures show the results for rotations in steps of 30 degrees, where an accuracy of 97.7% is achieved. The left plot shows one image rotated from 0 to 330 degrees in steps of 30 degrees. The right plot is the confusion matrix of the trained net applied to the test set.
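Once a net predicts the rotation label, correcting the image is a single inverse rotation. The sketch below is an illustration rather than code from the project: correctOrientation is a hypothetical helper, and it assumes the net returns the class label as a string such as "90", matching the labels built during curation.

```wolfram
(* Hypothetical helper: predict the rotation label and rotate the image back *)
correctOrientation[net_, img_Image] := Module[{angle},
   (* Assumes string class labels, e.g. "90" becomes 90 *)
   angle = FromDigits[net[img]];
   (* ImageRotate turns counterclockwise, so the negative angle undoes the rotation *)
   ImageRotate[img, -angle Degree]];

(* Usage, with leNet the trained net and testSet the list of image -> label rules: *)
(* correctOrientation[leNet, First[Keys[testSet]]] *)
```

For multiples of 90 degrees the inverse rotation restores the original frame. For other angles the result keeps the padded corners introduced by the first rotation, so an additional crop may be needed.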

2. ImageNet dataset with Inception net and Ademxapp model [5]

We used 10k images with the Ademxapp net and four rotation classes (0, 90, 180, 270); the accuracy is 90%. The code for curating the images is shown below.

(* Import the training images and crop each one to the 224x224 input size *)
listImages =
  Import["/Users/nguyen/Documents/all_tar_images/all_images/train/*.JPEG"];
cropTrainImage = ImageCrop[#, {224, 224}] & /@ listImages;

(* Rotate one image by 0, 90, 180 and 270 degrees *)
rotateAll[x_] :=
  Table[ImageRotate[x, i Degree, Background -> None], {i, 0, 270, 90}];
rotatedImage = rotateAll /@ cropTrainImage;

(* One row of labels {"0", "90", "180", "270"} per image *)
labelList = Table[ToString[i], Length[listImages], {i, 0, 270, 90}];

(* Pair every rotated image with its angle label, check the count and save *)
imageWithLabel = Thread[Flatten[rotatedImage] -> Flatten[labelList]];
Length[imageWithLabel]
SetDirectory["/Users/nguyen/Documents"];
Export["trained_set10k_new.mx", imageWithLabel];

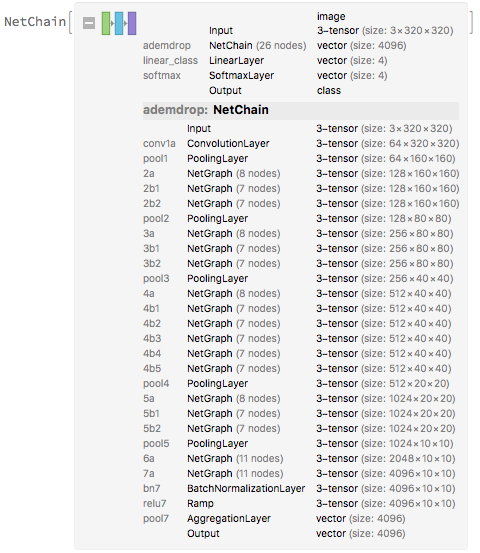

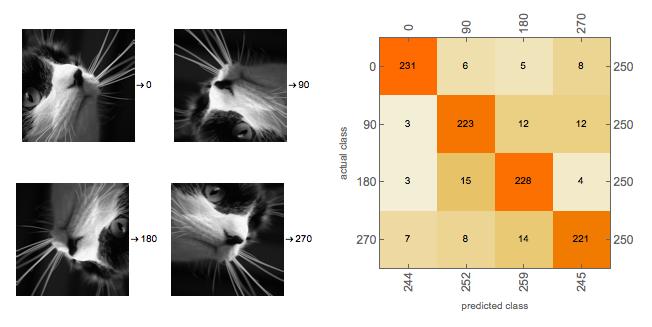

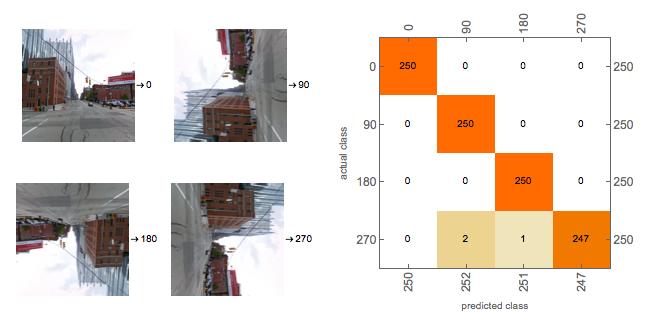

For the training net, we take the pre-trained Ademxapp model and perform network surgery. Transfer learning is done by removing the linear layer from the pre-trained net and creating a new net composed of the pre-trained net followed by a new linear layer and a softmax layer. The weights inside the net are kept the same by freezing all layers before 6a and setting LearningRateMultipliers to 1 for the last three layers (6a, 7a, bn7), as shown in the figure. The confusion matrix for 10k images with the Ademxapp net is also shown: the left plot shows the 0, 90, 180 and 270 degree rotations of an image, and the right plot is the confusion matrix.
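The surgery described above might look roughly like the following. This is a hedged sketch, not the code used in the project. It assumes that the repository name of the model is as written, that the pre-trained net is a NetChain whose last two layers are the original linear layer and its softmax, and that the layers 6a, 7a and bn7 can be addressed by name inside the trunk. The names trunk, rotationNet, multipliers and trainRotationNet are hypothetical.

```wolfram
(* Assumed repository name of the pre-trained model *)
base = NetModel["Ademxapp Model A Trained on ImageNet Competition Data"];

(* Assumption: the last two layers are the 1000-class linear layer and its softmax *)
trunk = NetDrop[base, -2];

(* New head: a fresh linear layer and softmax, decoded into the four rotation labels *)
rotationNet = NetChain[
   <|"pretrained" -> trunk, "linear" -> LinearLayer[],
    "softmax" -> SoftmaxLayer[]|>,
   "Output" -> NetDecoder[{"Class", {"0", "90", "180", "270"}}]];

(* Train only 6a, 7a, bn7 and the new linear layer; freeze everything else *)
multipliers = {
   {"pretrained", "6a"} -> 1,
   {"pretrained", "7a"} -> 1,
   {"pretrained", "bn7"} -> 1,
   "linear" -> 1,
   _ -> 0};

(* trainSet is expected to be a list of image -> label rules *)
trainRotationNet[trainSet_List] :=
  NetTrain[rotationNet, trainSet,
   LearningRateMultipliers -> multipliers,
   ValidationSet -> Scaled[0.2]];
```

A multiplier of 0 keeps the pre-trained weights fixed, so only the unfrozen layers and the new head have to learn the rotation classes. That is what makes training on 10k images practical.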

3. Google Street View dataset [6]

This dataset is downloaded from reference [6]. I chose 10k images for training. The code for the image processing is shown below. The same procedure is applied to the test and validation sets. An accuracy of 99.7% is achieved for this dataset.

(* Import the street view images, resize them to 300x300 and crop to 224x224 *)
imgRaw = Import[
   "/Users/nguyen/Documents/google_street/train_set/*.jpg"];
imgResize = ImageResize[#, {300, 300}] & /@ imgRaw;
imgCrop = ImageCrop[#, {224, 224}] & /@ imgResize;

(* Rotate one image by 0, 90, 180 and 270 degrees *)
rotateAll[x_] :=
  Table[ImageRotate[x, i Degree, Background -> None], {i, 0, 270, 90}];
rotatedImage = rotateAll /@ imgCrop;

(* One row of labels {"0", "90", "180", "270"} per image *)
labelList = Table[ToString[i], Length[imgRaw], {i, 0, 270, 90}];

(* Pair every rotated image with its angle label and save the result *)
imageWithLabel = Thread[Flatten[rotatedImage] -> Flatten[labelList]];
SetDirectory["/Users/nguyen/Documents"];
Export["train_set_goolgeImage.mx", imageWithLabel];

Training is done with the Ademxapp model. Because of the limited memory on my computer, my mentor helped me by running the training on her computer.

(* Load the net trained on the 10k street view images *)
ademt = Import["/Users/tuseetab/Downloads/adem10kgoogl.wlnet"]

(* Evaluate on the first 1000 examples of the test set *)
testdata = Import["/Users/tuseetab/Downloads/test_set_googleImage.mx"];
testdata2 = testdata[[;; 1000]];
result = ClassifierMeasurements[ademt, testdata2];
result["Accuracy"]
result["ConfusionMatrixPlot"]

The result is shown below. The caption of the image is the same as for the previous dataset.

Future work

Higher precision can be achieved with more images and more rotation angles. So far, we have used only 10k images for the training set, 1k images for the test set and 1k images for the validation set. Because of limited time, I only used 4 rotation angles (0, 90, 180 and 270 degrees). With more rotation angles, we could potentially train the same network as a regression problem in addition to the classification problem. This could be applied to a video stream, where the rotation could be corrected while recording.

Acknowledgement

I would like to thank the summer school for giving me the chance to work on this cool project. I would also like to thank my mentor, Wenzhen, Max and the other mentors for helping me with my project.

References

[6]: Google Street View dataset