Update: I'm thinking this might be a bug in BatchNormalizationLayer?

This problem only seems to happen for BN layers that occur in a network where they aren't preceded by any other layers with learnable parameters. This was the case in the simple network I was using for testing when I wrote the original post above.

A workaround that seems to work: for BN layers whose Gammas & Betas should stay frozen, set the learning rate multiplier to a very small value (I used $MachineEpsilon) rather than None. Here's some code that implements and tests this workaround:

Module[
  {trainingData, net, trainedNone, trainedSmall},

  (* fake some training data: 256 random image -> random image pairs *)
  trainingData = Table[
    RandomImage[1, {16, 9}, ColorSpace -> "RGB"] ->
      RandomImage[1, {16, 9}, ColorSpace -> "RGB"],
    256];

  (* create the network - just a batch norm followed by a conv *)
  net = NetInitialize[NetGraph[
    <|"BN" -> BatchNormalizationLayer[],
      "*" -> ConvolutionLayer[3, 1]|>,
    {"BN" -> "*"},
    "Input" -> NetEncoder[{"Image", {16, 9}, ColorSpace -> "RGB"}],
    "Output" -> NetDecoder[{"Image", ColorSpace -> "RGB"}]
  ]];

  (* train with BN's learning rate = None *)
  trainedNone = NetTrain[net, trainingData, TimeGoal -> 5,
    LearningRateMultipliers -> {
      {"BN", "Gamma"} -> None,
      {"BN", "Beta"} -> None}];

  (* train with BN's learning rate = very small, for both Gamma and Beta *)
  trainedSmall = NetTrain[net, trainingData, TimeGoal -> 5,
    LearningRateMultipliers -> {
      {"BN", "Gamma"} -> $MachineEpsilon,
      {"BN", "Beta"} -> $MachineEpsilon}];

  (* format results: one row per BN array, one column per network *)
  Dataset[<|
    "Gamma" -> <|
      "Initialized" -> net[["BN", "Gamma"]],
      "Trained: LR=None" -> trainedNone[["BN", "Gamma"]],
      "Trained: LR=$MachineEpsilon" -> trainedSmall[["BN", "Gamma"]],
      "Expected" -> 1.
    |>,
    "Beta" -> <|
      "Initialized" -> net[["BN", "Beta"]],
      "Trained: LR=None" -> trainedNone[["BN", "Beta"]],
      "Trained: LR=$MachineEpsilon" -> trainedSmall[["BN", "Beta"]],
      "Expected" -> 0.
    |>,
    "MovingMean" -> <|
      "Initialized" -> net[["BN", "MovingMean"]],
      "Trained: LR=None" -> trainedNone[["BN", "MovingMean"]],
      "Trained: LR=$MachineEpsilon" -> trainedSmall[["BN", "MovingMean"]],
      "Expected" -> Mean[UniformDistribution[{0., 1.}]]
    |>,
    "MovingVariance" -> <|
      "Initialized" -> net[["BN", "MovingVariance"]],
      "Trained: LR=None" -> trainedNone[["BN", "MovingVariance"]],
      "Trained: LR=$MachineEpsilon" -> trainedSmall[["BN", "MovingVariance"]],
      "Expected" -> Variance[UniformDistribution[{0., 1.}]]
    |>
  |>]
]

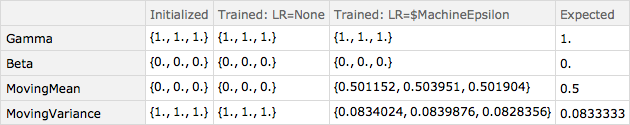

The output of this is: