Introduction

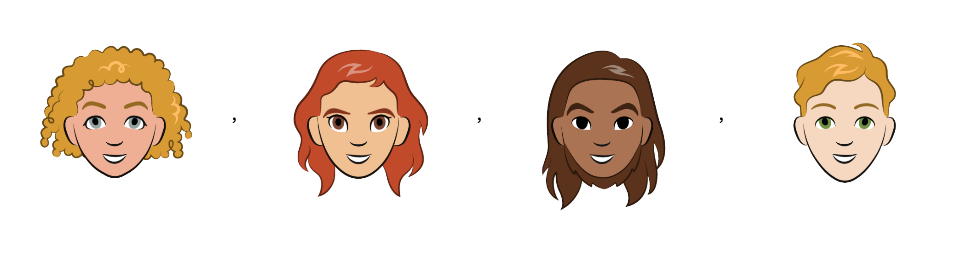

The aim of this project is to create an algorithm that generates emojis (avatars) which are semantically and chromatically similar to a given human face. This is achieved by combining deep learning with heuristic analysis. The datasets used in this project are publicly available and are referenced below. The report is divided into two major sections: analysis of the human face and segmentation of the emoji. Finally, a mapping function is presented that generates the final results. The key principle throughout is cross-domain semantic preservation.

Human Domain

Face/Hair Segmentation mask generation

One of the key features of a human face to preserve is the shape of the hair. To extract the hair from a given picture, a modified version of the Dilated ResNet-105 was trained on a dataset of face/hair masks for faces from the CelebA dataset. The modifications adapt the network's input and output dimensions to those of the training images and target masks.

    (* Start from the pretrained segmentation network *)
    net = NetModel["Dilated ResNet-105 Trained on Cityscapes Data"];
    (* Accept 178x218 input images *)
    netReplaced = NetReplacePart[net,
       "input_0" -> NetEncoder[{"Image", {178, 218}}]];
    (* Keep the layers up to "broadcast_add0" *)
    netPart = NetTake[netReplaced, {All, "broadcast_add0"}];
    (* New head: upsample to 3 class channels, move the channels last, softmax *)
    newNet = NetGraph[{netPart,
       DeconvolutionLayer[3, {24, 28}, "Stride" -> {6, 9},
        "PaddingSize" -> {{4, 4}, {4, 4}}, "Input" -> {19, 28, 23}],
       TransposeLayer[{1 <-> 3, 1 <-> 2}], SoftmaxLayer[]},
      {1 -> 2, 2 -> 3, 3 -> 4}]

This network is trained to classify every pixel of an input image into one of three classes, each corresponding to one colour of the segmentation mask (face, hair or background). The loss function used is CrossEntropyLossLayer. It was trained on the 3500 pictures using cross-validation over 10 epochs with a batch size of 4 images, owing to the relatively small number of training samples available for the task.
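The training setup described above could be sketched roughly as follows. This is an illustration under assumptions, not the notebook's actual training code: `maskToClasses` and `trainSegmenter` are hypothetical names, the masks are assumed to use pure red, green and blue for the three classes, and the "Index" target form of CrossEntropyLossLayer is an assumption (the text only names the layer).

```wolfram
(* Hypothetical helper: convert a colour-coded mask into a matrix of class
   indices (1, 2 or 3) by taking the strongest RGB channel at each pixel.
   Assumes the three classes are drawn in pure red, green and blue. *)
maskToClasses[mask_Image] :=
 Map[First[Ordering[#, -1]] &,
  ImageData[ColorConvert[RemoveAlphaChannel[mask], "RGB"]], {2}]

(* Hypothetical training wrapper using the settings quoted in the text:
   10 rounds with batches of 4. data is a list of image -> classMatrix
   rules; each class matrix must have 218 rows and 178 columns to match
   the network output. The "Index" target form is an assumption. *)
trainSegmenter[net_, data_List] :=
 NetTrain[net, data,
  LossFunction -> CrossEntropyLossLayer["Index"],
  BatchSize -> 4, MaxTrainingRounds -> 10]
```

With index targets, each training example pairs a 178x218 image with a matrix of integers, so the loss is evaluated per pixel over the three class probabilities produced by the final SoftmaxLayer.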

Colour Preservation for translation to Emoji

To extract the colours of the facial features of an input image, we use facial landmarks predicted by the "2D Face Alignment" network from the Wolfram Neural Net Repository. A few simple rules based on the morphological components of the face then let us extract the skin, hair and iris colours of an arbitrary input.

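    PickSkinColorPerson[img_] :=
     Module[{image = img, landmarks, splits, vals, mean},
      (* netevaluation and groupings are not defined in this section; they
         are assumed to come from the full notebook *)
      landmarks = netevaluation[image];
      splits = landmarks[[#]] & /@ groupings;
      (* Sample pixels at the fourth landmark group, scaled to the image size *)
      vals = PixelValue[image, #] & /@
        ScalingTransform[ImageDimensions[image]]@splits[[4]];
      (* Average the first four samples to estimate the skin colour *)
      mean = Mean[vals[[1 ;; 4]]];
      RGBColor[mean]]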

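    PickHairColorPerson[img_] :=
     Module[{image = img, net, i, m},
      (* Load the trained segmentation network *)
      net = Import["/home/kashikar/trained.mx"];
      i = ImageResize[image, {178, 218}];
      image = net[i];
      (* Round the class probabilities and keep the pixels closest to red *)
      m = ColorNegate[
        Binarize[
         ColorDistance[ImageResize[Image[Round[image]], {178, 218}],
          Red]]];
      (* Dominant colour of the resized input inside that mask *)
      DominantColors[i, Masking -> m][[1]]]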
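The landmark-based sampling used for the skin colour can be generalised into a small helper. The sketch below is illustrative only: `meanColorAt` is a hypothetical name, and it assumes the landmark positions are normalised to the unit square, as the use of ScalingTransform with the image dimensions suggests.

```wolfram
(* Hypothetical helper, not part of the original notebook.
   pts are assumed to be normalised {x, y} positions in the unit square. *)
meanColorAt[image_Image, pts : {{_?NumericQ, _?NumericQ} ..}] :=
 Module[{rgb, pixelPositions},
  rgb = ColorConvert[RemoveAlphaChannel[image], "RGB"];
  (* Scale each normalised position to pixel coordinates *)
  pixelPositions = ScalingTransform[ImageDimensions[rgb]] /@ pts;
  (* Sample each position and average the channel values *)
  RGBColor[Mean[PixelValue[rgb, #] & /@ pixelPositions]]]

(* Usage on a uniformly coloured test image *)
testImage = Image[ConstantArray[{0.2, 0.4, 0.6}, {10, 10}]];
meanColorAt[testImage, {{0.25, 0.5}, {0.75, 0.5}}]
```

Averaging several samples rather than reading a single pixel makes the estimate less sensitive to highlights or stray hairs at any one landmark.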

Emoji Domain

Structural Extraction and Analysis

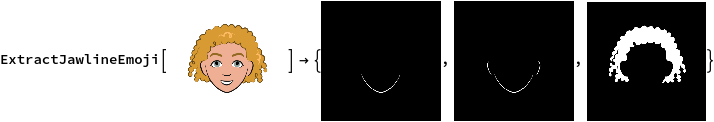

The dataset we use for making avatars is the Cartoon Set, a collection of images in which a fixed number of hairstyles, jawline types and eye variations have been procedurally combined to create 100k avatars. Note that the nose and mouth of every emoji are at the same coordinates, while the jawlines and hairstyles vary.

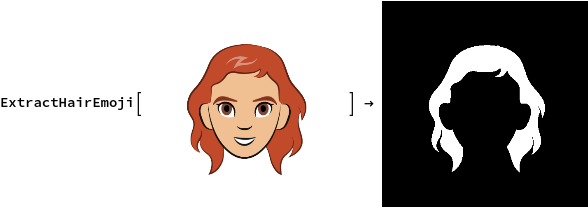

Since we have hair masks for faces, a heuristic can be set up that masks specific regions of an arbitrary emoji, taking advantage of the common positions in the dataset, to extract the hair and the corresponding jawline/ear combination for the input.

    ExtractHairEmoji[img_] :=
     Module[{image = img, image1, poly, skin, cols, haircolor},
      image1 = RemoveAlphaChannel[image, White];
      (* Fixed region of the emoji used to sample candidate hair colours *)
      poly = Polygon[{{132.72727272727275`, 410.90909090909093`},
         {372.7272727272727, 407.27272727272725`},
         {363.6363636363636, 290.90909090909093`},
         {133.63636363636363`, 291.8181818181818}}];
      (* Skin colour sampled at a fixed pixel *)
      skin = RGBColor[PixelValue[image, {{247, 197}}][[1]]];
      cols = DominantColors[image1, Masking -> poly];
      (* First dominant colour that is neither the white background nor the skin *)
      haircolor = SelectFirst[cols,
        ColorDistance[#, White] > 0.001 &&
          ColorDistance[#, skin] > 0.001 &];
      (* Largest connected region close to the hair colour, with holes filled *)
      FillingTransform[
       SelectComponents[
        Binarize[ImageAdjust[ColorDistance[image, haircolor]], {0, 0.1}],
        "Count", -1]]]

Similarly, we can extract jawline and ear combinations, which could potentially be used to fit the face to the emoji contours more closely. Note that this approach relies on region-based colour distance mapping and therefore does not always return the required output. Some helper functions for extracting the colours of the emoji's facial features have also been created.

Data Processing

The next steps are to build associations that label the emoji segmentation masks with their file names and, more importantly, to generate a final list of unique hairstyles that conform to the heuristics used in the generation process. This is followed by a manual pass over the remaining 423 images to remove edge cases such as beards, spectacles and similar outlier combinations. The data processing steps and outputs can be viewed in the full project notebook provided in the links below.

Mapping

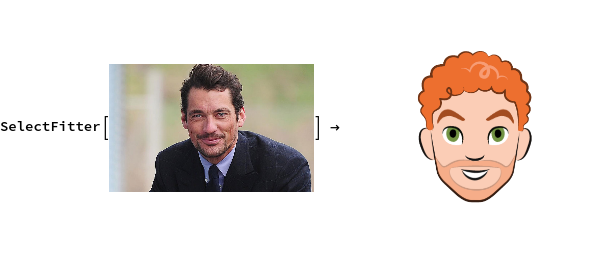

Now that we have extracted all the data required for mapping, we use Wolfram Language's ImageAlign and ImageCrop functions to build a function that scores how well the person's hair mask overlaps each emoji in the curated list of unique hairstyles, and returns the filename of the emoji with the best-fitting skeleton.

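    SelectFitter[img_] :=
     Module[{image = img, nf, hairmask, emojifile, pics, l1, num},
      hairmask = GetHairMaskPerson[image];
      (* Emoji hair masks, resized to the network's image size *)
      pics = ImageResize[#, {178, 218}] & /@ hairlist[[All, 2, 3]];
      (* Mismatch score per emoji: summed absolute difference between the
         cropped emoji mask and the aligned, cropped person mask, divided
         by twice the cropped area *)
      l1 = Divide[
          Total[Flatten[
            ImageData[
             Abs[ImageCrop[#] -
               ImageResize[ImageCrop[Binarize[ImageAlign[#, hairmask]]],
                ImageCrop[#] // ImageDimensions]]]]],
          2*Times @@ ImageDimensions@ImageCrop@#] & /@ pics;
      (* Position of the lowest score, then the matching file name *)
      num = Position[l1, Min[l1]][[1]];
      hairlist[[num, 1]]]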
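The scoring idea can be seen in isolation in the sketch below, which compares two binary masks pixel by pixel. It is a simplified illustration rather than the notebook's method: `maskMismatch` and `bestMatchIndex` are hypothetical names, the ImageAlign and ImageCrop steps are omitted, and the score is normalised by the area rather than by twice the area.

```wolfram
(* Hypothetical helper, not part of the original notebook.
   Fraction of pixels on which two binary masks disagree: 0 = identical. *)
maskMismatch[a_Image, b_Image] :=
 Module[{da, db},
  da = ImageData[Binarize[a, 0.5]];
  (* Resize b to a's dimensions before comparing pixel by pixel *)
  db = ImageData[Binarize[ImageResize[b, ImageDimensions[a]], 0.5]];
  N[Total[Abs[da - db], 2]/(Times @@ Dimensions[da])]]

(* Index of the candidate mask with the smallest mismatch *)
bestMatchIndex[target_Image, candidates : {__Image}] :=
 First[Ordering[maskMismatch[target, #] & /@ candidates, 1]]

(* Usage on two tiny masks that differ in one of four pixels *)
maskA = Image[{{1., 0.}, {0., 0.}}];
maskB = Image[{{1., 0.}, {0., 1.}}];
maskMismatch[maskA, maskB]
bestMatchIndex[maskA, {maskB, maskA}]
```

A plain pixel difference like this is sensitive to position and scale, which is presumably why the original function aligns and crops the masks before comparing them.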

And finally, we colorize this skeleton emoji appropriately!

    Emoji[input_] :=
     Module[{img = input, eyecolperson, haircolperson, skincolperson,
       emojistd, skincolemoji, eyecolemoji, emojichanged, hairmask},
      (* Colours extracted from the person *)
      eyecolperson = PickIrisColorPerson[img];
      haircolperson = PickHairColorPerson[img];
      skincolperson = PickSkinColorPerson[img];
      (* Best-fitting emoji skeleton and its own skin and eye colours *)
      emojistd = Import[SelectFitter[img][[1]]];
      skincolemoji = SkinColorEmoji[emojistd];
      eyecolemoji = DominantColors[emojistd,
         Masking ->
          Polygon[{{280.0224466891134, 251.96408529741862`},
            {306.3973063973064, 253.64758698092032`},
            {306.9584736251403, 228.39506172839504`},
            {281.1447811447812, 226.71156004489336`}}]][[1]];
      (* Swap the emoji's skin and eye colours for the person's *)
      emojichanged = ColorReplace[emojistd,
        {skincolemoji -> skincolperson, eyecolemoji -> eyecolperson},
        {0.1, 0.1}];
      (* Paint the hair region with the person's hair colour at full opacity *)
      hairmask = ExtractHairEmoji[emojistd];
      emojichanged = ImageResize[
        ImageApply[# - # + {haircolperson[[1]], haircolperson[[2]],
            haircolperson[[3]], 1} &, emojichanged, Masking -> hairmask],
        300]]
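The masked recolouring step can also be written with plain image arithmetic, which may be easier to follow. The sketch below is an alternative formulation and not the notebook's code: `recolorRegion` is a hypothetical name, and it flattens any alpha channel onto white instead of preserving it.

```wolfram
(* Hypothetical helper, not part of the original notebook.
   Fills the white region of mask with col and leaves other pixels unchanged.
   Simplification: any alpha channel is flattened onto white first. *)
recolorRegion[img_Image, mask_Image, col_?ColorQ] :=
 Module[{rgb, m, fill},
  rgb = ColorConvert[RemoveAlphaChannel[img, White], "RGB"];
  (* Bring the mask to the image size and make it strictly 0/1 *)
  m = Binarize[ImageResize[mask, ImageDimensions[rgb]], 0.5];
  fill = ColorConvert[
    RemoveAlphaChannel[ConstantImage[col, ImageDimensions[rgb]]], "RGB"];
  (* Keep the original outside the mask, use the fill colour inside it *)
  ImageAdd[ImageMultiply[rgb, ColorNegate[m]], ImageMultiply[fill, m]]]

(* Usage: recolour the left half of a grey test image *)
grey = Image[ConstantArray[{0.5, 0.5, 0.5}, {4, 4}]];
leftHalf = Image[ConstantArray[{1., 1., 0., 0.}, 4]];
recolorRegion[grey, leftHalf, RGBColor[0.3, 0.2, 0.1]]
```

Blending with the mask and its negation makes the two regions explicit: pixels inside the mask take the new colour, and everything else is passed through untouched.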

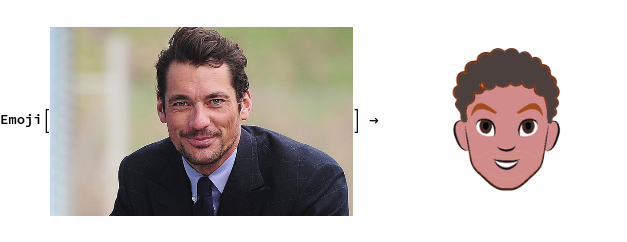

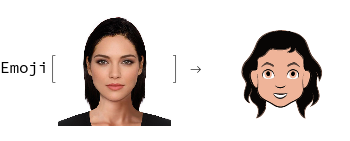

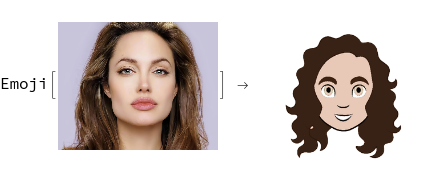

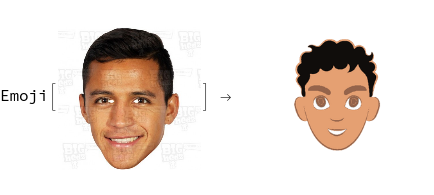

Some more results...

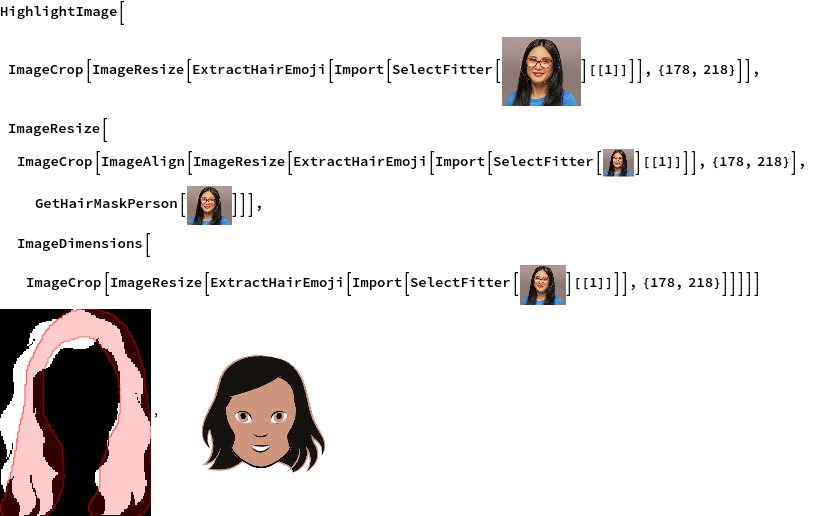

A visual representation of the fitting process is as follows:

Future Improvements

- Integration of some functions to map hairstyles and jawlines together, varying the hairstyle according to the jawline chosen.

- Spectacle detection and improvement of the hair/face segmentation dataset for inclusion of a wider variety of hair.

- Improving upon the SelectFitter function for a better metric of contour-shape similarity.

- Curating a dataset with pre-decided anchor points of the graphics to manipulate the required semantic features, and clearer colouring.

Relevant Links