After a full 30 minutes of very intellectual talk with Mr. Stephen Wolfram, I shortened my final project choice list from 7 to just 2. I decided to go with one of the weirdest projects on my list: parsing details from video game footage.

At first I took the topic literally, as teaching the computer to understand the game it is shown, but Mr. Stephen Wolfram thought that might be too easy. I agreed, and we both got excited about a more practical approach: an algorithm that fully understands the footage given to it and produces speech output of the key details, so that a visually impaired person could understand and play the game if the algorithm were running in real time.

While working on my project at the program, I got to understand the realm of image processing in great detail. Image processing had always felt comparatively hard to me in other languages, so it had become one of my greatest fears, and this was my chance to battle it with Mathematica.

The trajectory of this project changed somewhat when I had a one-on-one with Mr. Stephen Wolfram. He suggested that I make the computer understand each frame in such detail that even a blind person could follow it. That gave me the idea of shifting the project's purpose from simple annotation to analysis, covering everything from pre-processing to post-processing of the details.

The idea was to use the annotation procedure to analyze the state of the game and give an audio response (one of the layers of output).

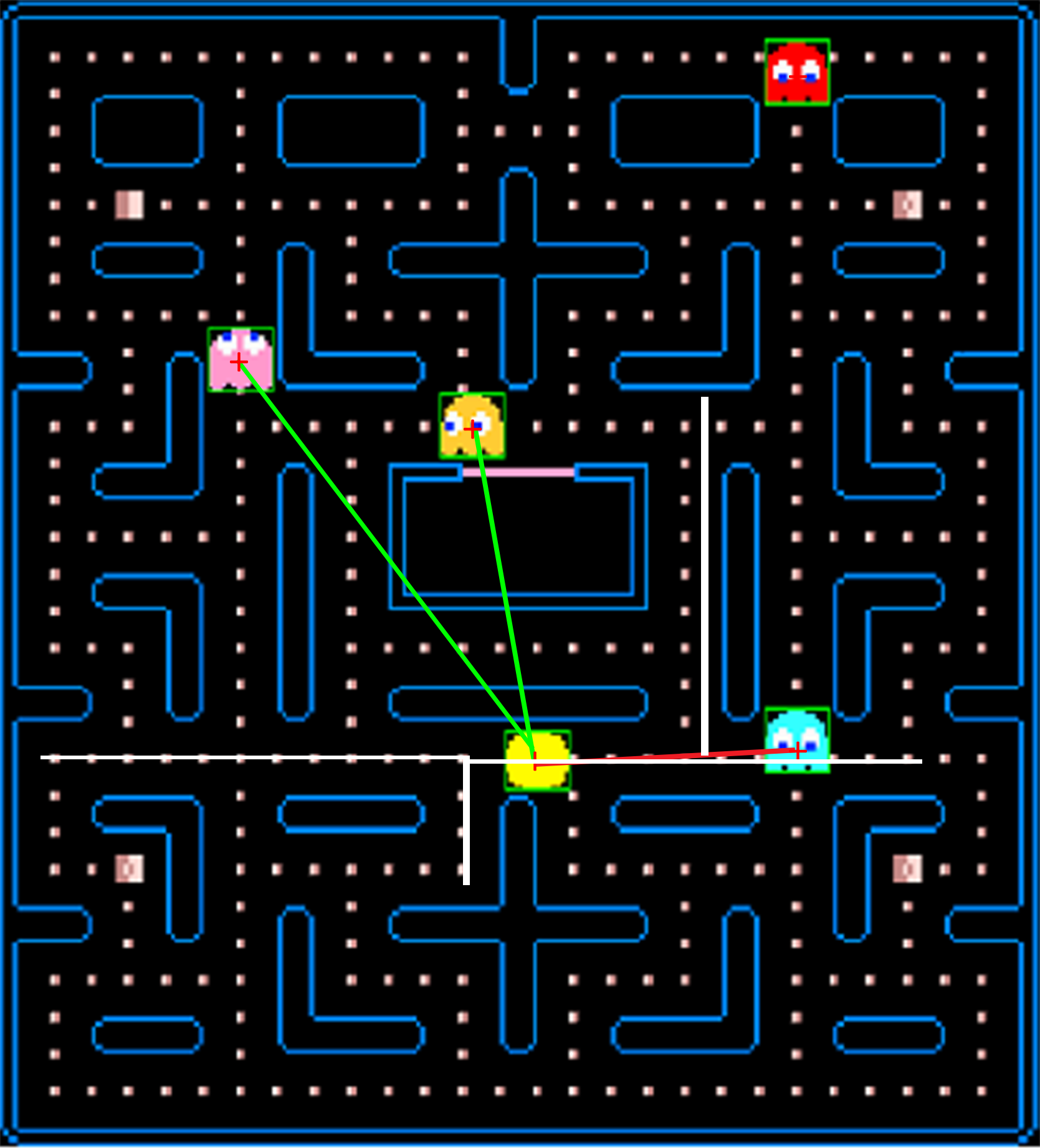

With its 3 layers of output, the graphical visualization of the project's processes looked like this:

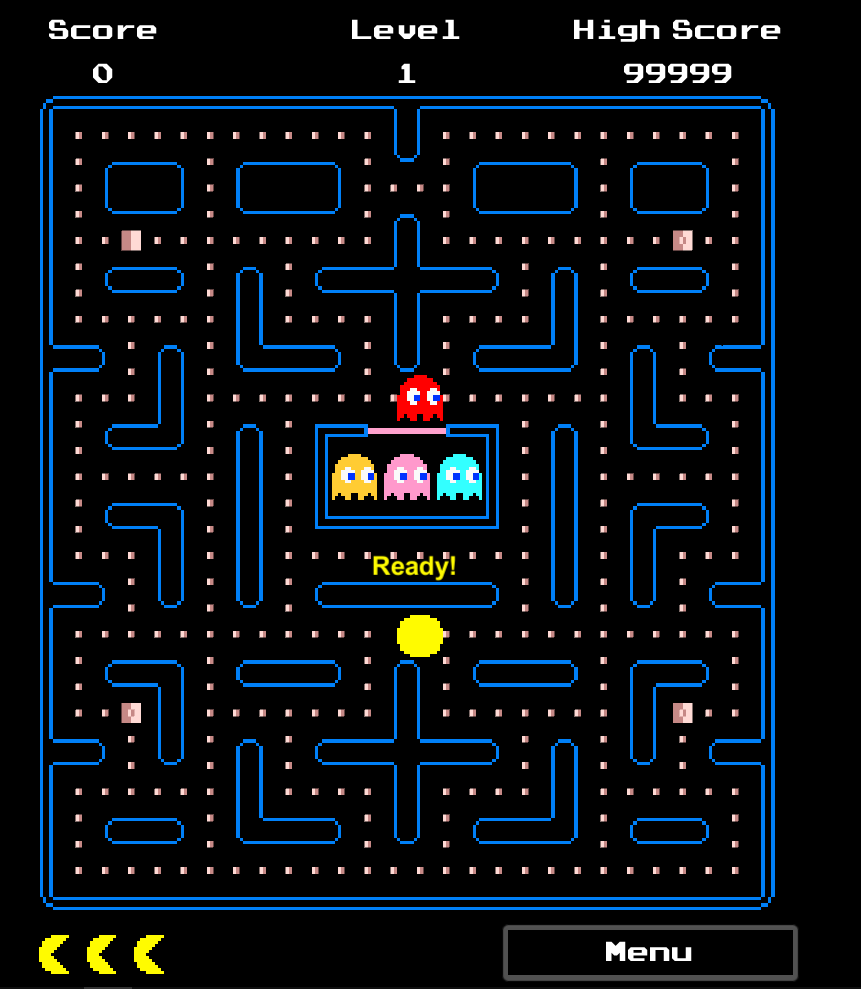

I began by building the game myself in my favorite game engine, Unity (check it out here), and after that I collected a few screenshots and some video footage from the game.

The reason I made the game myself in Unity was to use UnityConnect[ ] in Mathematica. However, for a few reasons that was not possible this time; I plan to use it later, though.

The first thing to do was to make the computer read any useful on-screen details that may change the game's state. Among them, "Ready!" and "Game Over" are messages that halt the game until the user moves the sprite again.

To run text recognition on such a frame, I first had to remove the extra layers of detail in the footage that distorted the recognition result.

The main culprit was the grid behind the text. I used ColorDetect on the green color of the image and deleted the detected pixels, which gave me a result like this:

After negating the colors for a better view, I applied TextRecognize and got a stable result. Now that we can find the halting points of the game, we can skip these frames for program efficiency.
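A minimal sketch of this cleanup step, under stated assumptions, could look like the following. The helper names `readBanner` and `haltingFrameQ` are hypothetical, the grid color is passed in as an argument, and this is an illustration rather than the project's actual code.

```wolfram
(* Hypothetical helper: remove the grid color, invert the frame, read the text *)
readBanner[frame_Image, gridColor_] := Module[{mask, cleaned},
  mask = ColorDetect[frame, gridColor];               (* white where the grid color is found *)
  cleaned = ImageMultiply[frame, ColorNegate[mask]];  (* black out the grid pixels *)
  TextRecognize[ColorNegate[cleaned]]                 (* invert the colors, then run OCR *)
]

(* Hypothetical test: does the recognized text announce a halted game? *)
haltingFrameQ[text_String] :=
  StringContainsQ[text, "Ready" | "Game Over", IgnoreCase -> True]
haltingFrameQ[_] := False
```

Frames for which `haltingFrameQ[readBanner[frame, gridColor]]` is true could then be skipped by the rest of the pipeline.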

I wrapped this in a function of its own, and along with it I defined a few more custom functions that made my code a bit easier to debug.

Before we continue, here are a few of those custom functions.

My Custom Function LegacyTracker[ ]

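    LegacyTracker[image_, clr_, disp_, imp_] := Module[{pixelize, dop},
      (* positions of all pixels within color distance imp of the keyed color clr *)
      pixelize = PixelValuePositions[
        ColorNegate[Binarize[ColorDistance[image, clr], imp]], 1];
      (* {{xmin, xmax}, {ymin, ymax}} of the matched pixels *)
      dop = CoordinateBounds[pixelize];
      If[TrueQ[disp],
        (* draw a green outline around the matched region *)
        Show[image,
          Graphics[{EdgeForm[{Thick, Green}], Opacity[0],
            BoundingRegion[pixelize, "MinRectangle"]}]],
        dop]
    ]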

Tracks the keyed color and returns the matching region of the image argument, either as an annotated image or as coordinates.

My Custom Function LegacyTrackerMasker[ ]

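    LegacyTrackerMasker[image_, clr_, disp_, imp_] := Module[{pixelize, dop},
      (* positions of all pixels within color distance imp of the keyed color clr *)
      pixelize = PixelValuePositions[
        ColorNegate[Binarize[ColorDistance[image, clr], imp]], 1];
      (* {{xmin, xmax}, {ymin, ymax}} of the matched pixels *)
      dop = CoordinateBounds[pixelize];
      If[TrueQ[disp],
        (* cover the matched region with an opaque rectangle *)
        Show[image,
          Graphics[{EdgeForm[{Thick, White}], Opacity[1],
            BoundingRegion[pixelize, "MinRectangle"]}]],
        dop]
    ]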

Masks the keyed color with black in order to perform efficient tracking on data keyed to a similar color.
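To see what the thresholding chain shared by both functions does, here is a small self-contained sketch on a synthetic image: a red square on a black background. The image and the variable names are assumptions made purely for illustration.

```wolfram
(* Synthetic 60x40 black RGB image with a red 10x10 square *)
data = ConstantArray[0., {40, 60, 3}];
data[[10 ;; 19, 30 ;; 39, 1]] = 1.;   (* set the red channel inside the square *)
demo = Image[data];

(* Same chain as in the trackers: distance to the key color, threshold, invert *)
mask = ColorNegate[Binarize[ColorDistance[demo, Red], 0.1]];

positions = PixelValuePositions[mask, 1];  (* {x, y} of every matched pixel *)
bounds = CoordinateBounds[positions]       (* {{xmin, xmax}, {ymin, ymax}} *)
```

The last argument of the trackers plays the role of the `0.1` threshold here: a larger value accepts colors that are further away from the key color.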

I first used this function to track the yellow life bars in the game HUD. I cropped out the middle row of that region, read the pixel values, and counted each time a yellow group of pixels appeared to determine the number of lives the player has. The result is saved to the main string, which is narrated to you each time you die.

    (* mask the blue grid, then find the bounds of the yellow life bars *)
    f = LegacyTracker[
      LegacyTrackerMasker[footage, RGBColor[0/255, 128/255, 248/255], True, 0.1],
      Yellow, False, 0.1];
    {{xmin, xmax}, {ymin, ymax}} = f;
    (* crop the footage to the life-bar region *)
    tst = ImageTrim[footage, {{xmin, ymin}, {xmax, ymax}}];
    {c, r} = ImageDimensions[tst];
    (* middle pixel row of the cropped region *)
    row = ImageTake[RemoveAlphaChannel[tst], {Round[r/2]}, {1, c}];
    (* number of runs of identical pixels along that row *)
    count = Length[Flatten[Length /@ Split[#] & /@ ImageData[row]]];
    (* 7 runs = 3 bars, 5 runs = 2 bars, 3 runs = 1 bar; store the result in MainStr *)
    MainStr = MainStr <> ", " <> Switch[count,
      7, "3 Lives Remaining",
      5, "2 Lives Remaining",
      3, "1 Life Remaining",
      _, "Game Over"];
    If[SecStr == "Ready!", SpeechSynthesize[MainStr]]
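The run counting can be checked on a toy example. The row below is made up (0 for background, 1 for a yellow life-bar pixel), and `livesFromRuns` is a hypothetical helper. It assumes the bars never touch the left or right edge of the cropped row, so every bar is surrounded by background.

```wolfram
(* Hypothetical one-row scan: 0 = background, 1 = yellow life bar *)
row = {0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0, 0, 1, 1, 1, 0};

runs = Split[row];   (* group consecutive identical values *)
Length[runs]         (* 7: background and bar runs alternate *)

(* n bars produce 2 n + 1 runs, so invert that relation *)
livesFromRuns[n_Integer] := (n - 1)/2

livesFromRuns[Length[runs]]   (* 3 *)
```

This is why the counts 7, 5, and 3 correspond to 3, 2, and 1 lives.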

After this, I inverted the previous mask, cropped the grid out, and removed all the HUD elements, since they had already been analyzed for the frame. I then ran the trackers again, this time on the ghosts, to calculate their angle vectors with respect to Pac-Man, work out the direction they are headed in, and check whether they are at a safe distance.

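    abt = False;  (* return coordinate bounds instead of an annotated image *)
    (* Mean /@ averages {min, max} along each axis, giving the center of the bounds;
       a plain Mean would average the x bounds with the y bounds *)
    coordEnemyRed = N[Mean /@ LegacyTracker[a, Red, abt, 0.4]];
    coordEnemyCyan = N[Mean /@ LegacyTracker[a, Cyan, abt, 0.4]];
    coordEnemyYellow =
      N[Mean /@ LegacyTracker[a, RGBColor[255/255, 201/255, 51/255], abt, 0.1]];
    coordEnemyPink =
      N[Mean /@ LegacyTracker[a, RGBColor[255/255, 153/255, 204/255], abt, 0.03]];
    coordPacMan = N[Mean /@ LegacyTracker[a, Yellow, abt, 0.2]];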

I took the mean since it gave me the center of each sprite, which I later used to calculate the vector angle of each ghost with respect to Pac-Man. That angle was then mapped to the direction the ghost was in.
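One way to map such an angle to a spoken direction is sketched below. The helper `ghostDirection`, the four 90-degree sectors, and the direction words are all assumptions for illustration. Positions are taken to be {x, y} pairs with y pointing up, as returned by PixelValuePositions.

```wolfram
(* Hypothetical helper: where is the ghost relative to Pac-Man? *)
ghostDirection[pacman_, ghost_] := Module[{dx, dy, angle},
  {dx, dy} = ghost - pacman;
  If[dx == 0 && dy == 0,
    "here",                      (* same position, no angle defined *)
    angle = ArcTan[dx, dy];      (* in (-Pi, Pi]: 0 = right, Pi/2 = up *)
    Which[
      -Pi/4 <= angle < Pi/4, "right",
      Pi/4 <= angle < 3 Pi/4, "above",
      -3 Pi/4 <= angle < -Pi/4, "below",
      True, "left"]]]

ghostDirection[{50., 40.}, {80., 45.}]   (* "right" *)
ghostDirection[{50., 40.}, {48., 90.}]   (* "above" *)
```

Finer sectors (for example eight compass directions) would follow the same pattern with narrower angle ranges.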

    distance =
      Min[N[EuclideanDistance[coordPacMan, coordEnemyCyan]],
        N[EuclideanDistance[coordPacMan, coordEnemyYellow]],
        N[EuclideanDistance[coordPacMan, coordEnemyPink]],
        N[EuclideanDistance[coordPacMan, coordEnemyRed]]]

Later, I calculated the distance between Pac-Man's position and the mean point of each enemy mob. The smallest distance decides which enemy is most probably a threat to you at the moment, and the program uses a speech synthesizer to produce an output accordingly.
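As a sketch of how the nearest ghost could be picked and announced, the example below keeps the ghost centers in an association. The coordinates, the `safeDistance` threshold, and the message wording are made up for illustration.

```wolfram
(* Hypothetical coordinates, standing in for the tracked centers *)
pacman = {50., 40.};
ghosts = <|"Red" -> {60., 42.}, "Cyan" -> {10., 90.},
   "Yellow" -> {80., 80.}, "Pink" -> {52., 10.}|>;

(* distance from Pac-Man to every ghost, keyed by ghost name *)
distances = EuclideanDistance[pacman, #] & /@ ghosts;

(* sorting an association orders it by value, so the first key is the nearest ghost *)
nearest = First[Keys[Sort[distances]]];

safeDistance = 25;  (* assumed threshold, in pixels *)
message = If[distances[nearest] < safeDistance,
   nearest <> " ghost is close",
   "No ghost nearby"]
(* "Red ghost is close" *)

SpeechSynthesize[message]  (* returns an Audio object that can be played *)
```

Keeping the names next to the distances makes it possible to say which ghost is the threat, not only how far away it is.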

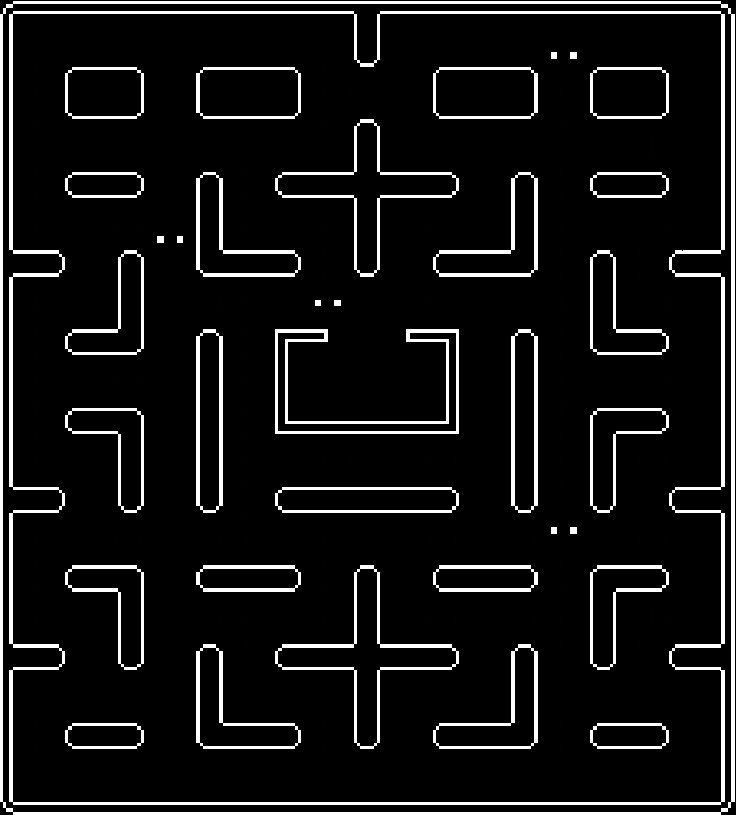

After the ghosts, the next thing to detect was the maze, for which I first applied a series of filters to the cropped image of the grid (maze):

    ColorNegate[
      ColorReplace[
        ColorNegate[ColorDetect[FinCrp, RGBColor[0/255, 128/255, 248/255]]],
        LightGray] // Normal]

This gave me an output like this:

After this, I took the pixel positions of the white pixels with PixelValuePositions and coded a construct that immediately makes a pop sound whenever Pac-Man hits a wall.
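A minimal sketch of such a wall check is shown below, assuming a tiny 5x5 binary maze in which white (1) pixels are walls. The names `mazeMask`, `wallPositions`, and `hitsWallQ` are hypothetical, and `Beep[]` only stands in for the pop sound.

```wolfram
(* Hypothetical 5x5 maze: 1 = wall, 0 = free corridor *)
mazeMask = Image[{
    {1, 1, 1, 1, 1},
    {1, 0, 0, 0, 1},
    {1, 0, 1, 0, 1},
    {1, 0, 0, 0, 1},
    {1, 1, 1, 1, 1}}, "Bit"];

(* {x, y} positions of all wall pixels *)
wallPositions = PixelValuePositions[mazeMask, 1];

(* does the (rounded) sprite position fall on a wall pixel? *)
hitsWallQ[pos_] := MemberQ[wallPositions, Round[pos]]

hitsWallQ[{2, 2}]   (* False: free corridor *)
hitsWallQ[{1, 3}]   (* True: left border wall *)

If[hitsWallQ[{1, 3}], Beep[]]
```

On real footage the check would use the tracked Pac-Man center and the filtered maze image in place of these toy values.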

The next thing I did was store Pac-Man's coordinates in a list that kept a history of its movement. Using this list and the maze pixel values calculated above, the computer found probable ways for the sprite to go, and if a way was hazardous for the sprite it would also produce an output.

Similarly, all the constructs work in parallel on the frames passed to them and annotate each one completely.
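The idea of combining a position history with the maze pixels can be sketched as follows. Everything here is an assumption for illustration: the 5x5 toy maze (1 = wall, 0 = free), the one-pixel step size, and the names `steps`, `openWays`, and `history`.

```wolfram
(* Hypothetical 5x5 maze: 1 = wall, 0 = free corridor *)
maze = Image[{
    {1, 1, 1, 1, 1},
    {1, 0, 0, 0, 1},
    {1, 0, 1, 0, 1},
    {1, 0, 0, 0, 1},
    {1, 1, 1, 1, 1}}, "Bit"];

(* unit steps in image coordinates, with y pointing up *)
steps = <|"left" -> {-1, 0}, "right" -> {1, 0}, "up" -> {0, 1}, "down" -> {0, -1}|>;

(* directions whose neighboring pixel is not a wall *)
openWays[pos_] := Keys[Select[steps, PixelValue[maze, pos + #] == 0 &]]

(* movement history: append the latest tracked position for each frame *)
history = {};
AppendTo[history, {2, 2}];

openWays[Last[history]]   (* {"right", "up"} *)
```

A hazard check could then compare each open direction with the ghost positions before announcing it.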

At this time the program is not real-time, so I'll be working on making it process in the background by connecting my Pac-Man game to Mathematica through UnityLink. A lot of development remains in this project, but the prime goal of parsing the details from a frame has been achieved.

As for my future work, I would like to work my way towards accessibility computing and take this project to a much more advanced level, where it does all of this in real time while using music as a key component to communicate with the player.

Important Links: Project Source Code
