For your function F[x], try using LinearModelFit on a set of points on the function.

Note F[x] runs much faster with Real input values of high precision compared to fractional values.

pnts = Table[{x, F[x]}, {x, N[5/100, ndigits], N[1/4, ndigits], N[1/400, ndigits]}];

mons = NestList[(#*x) &, x, 20]; (* monomials for the polynomial fit *)

lm = LinearModelFit[pnts, mons, x];

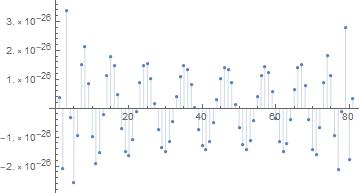

ListPlot[lm["FitResiduals"],Filling->Axis,PlotRange->All];

You can increase nDigits and the number of terms in the polynomial as needed to get the precision you need. I hope that you find this helpful.