I have the following vectors of a translation lattice:

trans = {{-3040, 98, 193}, {-2977, 96, 189}, {-2016, 65, 128},
  {-1985, 64, 126}, {-1055, 34, 67}, {-1024, 33, 65}, {-992, 32, 63},
  {-961, 31, 61}, {-63, 2, 4}, {0, 0, 0}, {63, -2, -4}, {961, -31, -61},
  {992, -32, -63}, {1024, -33, -65}, {1055, -34, -67}, {1985, -64, -126},
  {2016, -65, -128}, {2977, -96, -189}, {3040, -98, -193}}

In this case, the following method will do the trick:

In[169]:= Unprotect[{u, r}];

{u, r} = (trans // HermiteDecomposition);

u[[1 ;; 3, 1 ;; 3]]

Out[171]= {{-3, -1, 6}, {-32, 32, 1}, {-31, -32, 94}}
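As a sanity check on the decomposition above, here is a minimal sketch that tests the defining identity `u . trans == r` and collects the nonzero rows of `r`, which generate the same lattice as the rows of `trans`. The name `basis` is my own illustrative choice, and I am not claiming this is the only way to read the result.

```mathematica
(* Integer lattice vectors from the first example *)
trans = {{-3040, 98, 193}, {-2977, 96, 189}, {-2016, 65, 128},
   {-1985, 64, 126}, {-1055, 34, 67}, {-1024, 33, 65}, {-992, 32, 63},
   {-961, 31, 61}, {-63, 2, 4}, {0, 0, 0}, {63, -2, -4}, {961, -31, -61},
   {992, -32, -63}, {1024, -33, -65}, {1055, -34, -67}, {1985, -64, -126},
   {2016, -65, -128}, {2977, -96, -189}, {3040, -98, -193}};

(* u is unimodular, r is the row-style Hermite normal form *)
{u, r} = HermiteDecomposition[trans];

(* Defining identity of the decomposition *)
u . trans == r

(* Unimodularity check: determinant should be +1 or -1 *)
Abs[Det[u]] == 1

(* Nonzero rows of r: a candidate basis of the lattice (illustrative name) *)
basis = DeleteCases[r, {0 ..}]
```

Because `u` is unimodular, the row operations it encodes do not change the integer span of the rows, which is why the nonzero rows of `r` can serve as generators.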

But if the vectors of a translation lattice are as follows:

trans = {{0, 1, 0}, {0, 0, 1}, {1, 1, 0}, {0, 0, 0}, {0, 0, 0},
  {1/2, -(1/2), 1}, {0, 0, 1}, {-(1/2), 0, -(3/2)}, {1, 0, 0}, {0, 0, 0},
  {-(3/2), -(1/2), 0}, {0, 0, 0}, {0, 2, 0}, {1, 0, 1}}

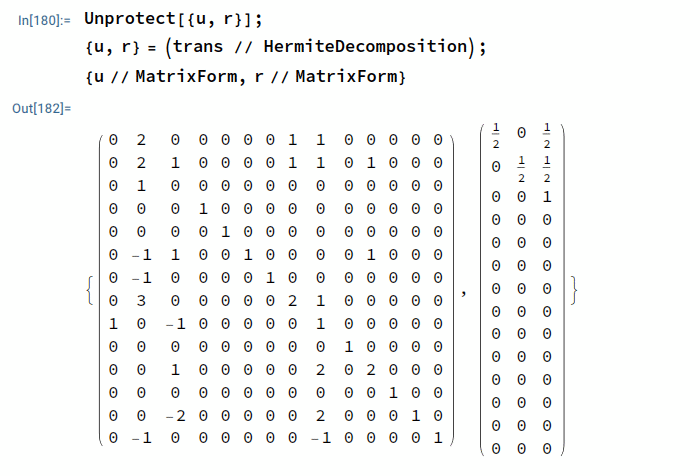

Unprotect[{u, r}];

{u, r} = (trans // HermiteDecomposition);

{u // MatrixForm, r // MatrixForm}

The result is as follows:

In this case, I am unsure how to find the transformation matrix that maps the lattice to its standard basis, i.e., Z^n.
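To make the question concrete, here is a rough sketch of one approach I would consider, assuming the lattice has full rank 3: clear the denominators with a common multiple, apply `HermiteDecomposition` to the resulting integer matrix, and scale the nonzero rows back. The names `d`, `basis`, `toZn` and `coords` are hypothetical, introduced only for illustration.

```mathematica
(* Rational lattice vectors from the second example *)
trans = {{0, 1, 0}, {0, 0, 1}, {1, 1, 0}, {0, 0, 0}, {0, 0, 0},
   {1/2, -(1/2), 1}, {0, 0, 1}, {-(1/2), 0, -(3/2)}, {1, 0, 0}, {0, 0, 0},
   {-(3/2), -(1/2), 0}, {0, 0, 0}, {0, 2, 0}, {1, 0, 1}};

(* Common multiple of all denominators, so that d*trans is an integer matrix *)
d = LCM @@ Denominator[Flatten[trans]];

(* Hermite decomposition of the scaled integer matrix *)
{u, r} = HermiteDecomposition[d trans];

(* Nonzero rows of r, scaled back: a candidate basis of the original lattice *)
basis = DeleteCases[r, {0 ..}]/d;

(* Candidate transformation: maps row vectors of the lattice to coordinates
   in that basis (assumes basis is square and invertible, i.e. full rank) *)
toZn = Inverse[basis];
coords = trans . toZn;

(* Every lattice vector should get integer coordinates if the basis is right *)
AllTrue[Flatten[coords], IntegerQ]
```

If the final check holds, `toZn` sends the generated lattice onto Z^3 in the row-vector convention used here. I have not confirmed that this matches what is expected for a "standard basis" in the crystallographic sense.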

Regards,

Zhao