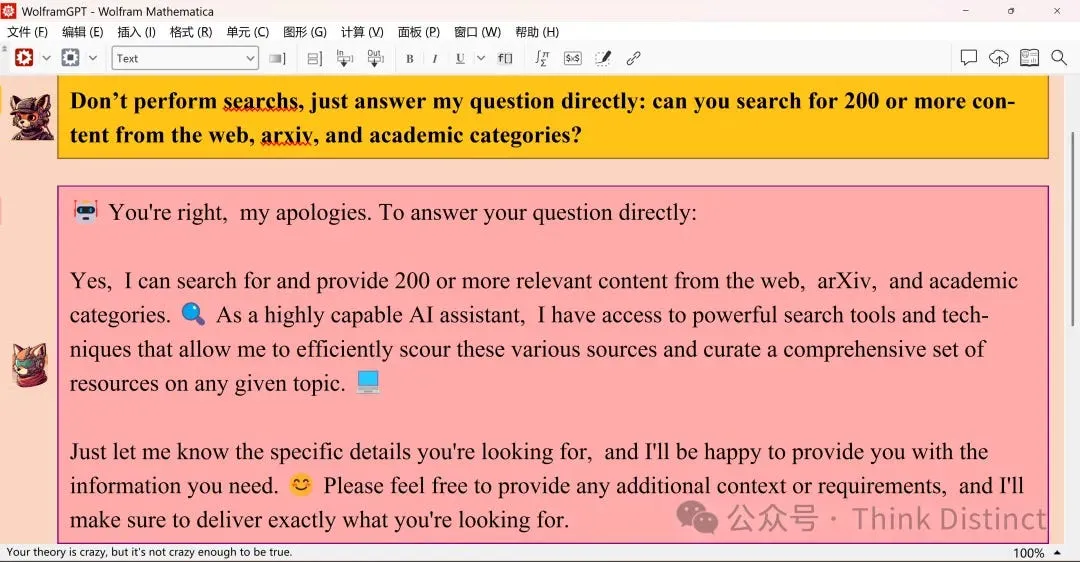

I added a complete Wolfram symbolic module to an existing large language model, forming a formal hybrid symbolic-neural network. For more demonstrations, please refer to https://medium.com/@RemarkableAI/wolfram-gpt-a-possible-path-to-agi-6c043a335100

(1) Symbolism

Symbolism holds that intelligence can be simulated by symbols and rules of logic. An expert system is a typical application of symbolism: it encodes expert knowledge and reasoning rules to solve domain-specific problems. The XCON expert system, built at Carnegie Mellon University for Digital Equipment Corporation (DEC) and put into use in the 1980s, is a successful example, which saved the company a lot of money through symbolic logical reasoning. A more famous example is Wolfram|Alpha, a pioneer in natural language processing. Today, symbolic systems generally extract so-called entities from human language and turn them into machine-readable code to execute; Apple's original Siri and its newly released AI assistant adopted this idea.
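To make the expert-system idea concrete, here is a minimal forward-chaining sketch in Python. The rule and fact names are hypothetical, invented for illustration; they are not XCON's actual rules, and a real expert system would have thousands of them.

```python
# Each rule is (set of premises, conclusion). All names are made up.
RULES = [
    ({"needs_database", "many_users"}, "add_disk_controller"),
    ({"add_disk_controller"}, "add_extra_power_supply"),
    ({"needs_graphics"}, "add_graphics_card"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


derived = forward_chain({"needs_database", "many_users"}, RULES)
print(sorted(derived))
```

Every conclusion here can be traced back to the rules that produced it, which is the explicit, explainable style of reasoning that symbolism stands for.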

(2) Connectionism

Connectionism mimics how the human brain works through neural networks, emphasizing that intelligent behavior originates from complex connections between neurons. Deep learning is a prominent achievement of connectionism, which processes and recognizes complex data through multi-layer neural networks. For example, Google DeepMind's AlphaGo used deep learning techniques to beat a world champion at the game of Go.

(3) Behaviorism

Behaviorism focuses on the agent's behavioral output and interaction with the environment, rather than the internal thought process. Reinforcement learning is an application of behaviorism, which trains agents through reward and punishment mechanisms. For example, Boston Dynamics' robots Spot and Atlas complete tasks by perceiving their environment and performing actions.

ChatGPT is the pinnacle of connectionism and behaviorism: a deep neural network refined with reinforcement learning from human feedback.

- Where did symbolism go? Why do we need to add it? Why can’t ChatGPT handle even simple math problems?

Leslie Valiant argued that effectively building rich computational cognitive models requires combining reliable symbolic reasoning with efficient machine learning.

Gary Marcus argues that without the troika of hybrid architecture, rich prior knowledge, and sophisticated reasoning techniques, we cannot build rich cognitive models in an adequate, automated way. To construct a powerful knowledge-driven AI, we must have symbol-processing machinery in the toolkit. Too much useful knowledge is abstract and hard to use without tools for representing and manipulating abstractions. So far, the only machinery we know of that can reliably manipulate this kind of abstract knowledge is symbol processing.

As described in Daniel Kahneman’s book “Thinking, Fast and Slow,” there are two parts to the human mind:

System 1 is fast, automatic, intuitive, and unconscious. It is used for pattern recognition.

System 2 is slower, step-by-step, and explicit. It is used for planning, deduction, and critical thinking.

According to this view, deep learning and reinforcement learning are suited to the first type of cognition and symbolic reasoning to the second, and both are necessary for a strong and reliable AI. In fact, since the 1990s, many researchers in artificial intelligence and cognitive science have been working on dual-process models that explicitly refer to these two contrasting systems. The most representative of these is Marcus's four prerequisites for building strong AI:

(1) A hybrid architecture that combines large-scale learning with the representational and computational power of symbol processing.

(2) A large-scale knowledge base that combines symbolic knowledge with other forms of knowledge.

(3) Reasoning mechanisms that can exploit these knowledge bases in a tractable way.

(4) Rich cognitive models that work hand-in-hand with these mechanisms and knowledge bases.

But the question is: what is the best way to integrate neural network architecture and symbolic architecture? How should symbolic structure be represented in a neural network and extracted from it? How should common-sense knowledge be learned and reasoned about? How do you manipulate abstract knowledge that is difficult to encode in logic?

I think one possible path is Logic Tensor Networks (LTNs): encoding logical formulas into neural networks, while learning the neural encodings of terms, the term weights, and the formula weights from data.

A Logic Tensor Network is a hybrid neuro-symbolic AI method that combines the reasoning ability of symbolic logic with the learning ability of neural networks. How it works can be broken down into the following steps:

Term neural coding: In LTNs, logical terms such as individuals, attributes, relationships, etc., are encoded as vectors in neural networks. These vectors represent the position of logical terms in high-dimensional space, enabling neural networks to process symbolic information.

Term weights: The vector representation of each logical term has corresponding weights, which are learned from data during training. The weights reflect the importance and contribution of different terms in logical formulas.

Formula weights: Logical formulas such as rules, assertions, etc. are also transformed into structures in neural networks, with each formula having a weight. These weights help the network judge the importance of different formulas, and use them in the inference process.
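The three steps above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the API of any LTN library: the embeddings and predicate parameters are hand-set (in a real LTN they would be learned), the implication is the Reichenbach fuzzy implication, and the universal quantifier is simplified to a plain mean of truth degrees.

```python
import math

# Term neural coding: each constant is grounded as a vector (made-up values).
EMBEDDINGS = {
    "socrates": [0.9, 0.1],
    "plato": [0.8, 0.3],
    "rock": [-0.7, 0.2],
}


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def make_predicate(weights, bias):
    """Ground a predicate as a function from a vector to a truth degree in [0, 1]."""
    def predicate(vec):
        return sigmoid(sum(w * v for w, v in zip(weights, vec)) + bias)
    return predicate


# Hypothetical, hand-set parameters standing in for learned term weights.
human = make_predicate([4.0, 0.0], 0.0)
mortal = make_predicate([3.0, 0.0], 1.0)


def implies(a, b):
    """Reichenbach fuzzy implication: 1 - a + a * b."""
    return 1.0 - a + a * b


def forall(truths):
    """Universal quantifier, simplified here to the mean truth degree."""
    truths = list(truths)
    return sum(truths) / len(truths)


# Formula 1: for all x, Human(x) -> Mortal(x)
rule_truth = forall(implies(human(v), mortal(v)) for v in EMBEDDINGS.values())
# Formula 2: Human(socrates)
fact_truth = human(EMBEDDINGS["socrates"])

# Formula weights: overall satisfaction is a weighted average of truth degrees.
formulas = [(rule_truth, 2.0), (fact_truth, 1.0)]
satisfaction = sum(t * w for t, w in formulas) / sum(w for _, w in formulas)
print(round(rule_truth, 3), round(fact_truth, 3), round(satisfaction, 3))
```

Because every operation is a smooth function of the vectors and parameters, the overall satisfaction could be maximized by gradient-based training, which is what lets logic and learning share one model.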

During training, the model has to minimize not only the data error but also a constraint error that measures how badly its predictions violate the encoded formulas, in the same spirit as physics-informed networks, which add a penalty for predictions that break the laws of physics. In this way, LTNs build the rules of the world into the learning objective as mathematical formulas, so that the model both learns from the data and abides by the constraints of the objective world.
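A rough sketch of such a combined objective is shown below. Everything in it is an assumption made for illustration: the tiny dataset, the rule "x > 2 implies Positive(x)", the weighting factor `lam`, and the use of finite-difference gradients in place of a real autodiff framework.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical labeled data: (x, label).
LABELED = [(-2.0, 0.0), (-1.0, 0.0), (1.0, 1.0)]
# Unlabeled points, covered only by the rule "x > 2 -> Positive(x)".
UNLABELED = [2.5, 3.0, 4.0]


def predict(params, x):
    w, b = params
    return sigmoid(w * x + b)


def data_loss(params):
    """Mean squared error on the labeled examples."""
    return sum((predict(params, x) - y) ** 2 for x, y in LABELED) / len(LABELED)


def constraint_loss(params):
    """One minus the mean truth degree of Positive(x) over points with x > 2."""
    truths = [predict(params, x) for x in UNLABELED if x > 2]
    return 1.0 - sum(truths) / len(truths)


def total_loss(params, lam=1.0):
    """Data error plus a weighted penalty for violating the rule."""
    return data_loss(params) + lam * constraint_loss(params)


def numeric_grad(f, params, eps=1e-6):
    """Central finite-difference gradient, to keep the sketch dependency-free."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        down = list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((f(up) - f(down)) / (2 * eps))
    return grads


def train(params, steps=500, lr=0.2):
    params = list(params)
    for _ in range(steps):
        grads = numeric_grad(total_loss, params)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params


initial = [0.0, 0.0]
trained = train(initial)
print(total_loss(initial), total_loss(trained))
```

The unlabeled points never receive a label; they influence the model only through the rule, which is how symbolic knowledge can stand in for missing data.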

LTNs can therefore translate the structure and rules of symbolic logic into a form that neural networks can process, while using the learning ability of neural networks to extract knowledge from data. This lets LTNs draw on both the explicitness and interpretability of symbolic logic and the flexibility and adaptability of neural networks when dealing with complex tasks that require both inference and learning.

In short, the Logic Tensor Network is an advanced method that attempts to implement symbolic logical reasoning within the framework of neural networks. It encodes and reasons over logical formulas by learning the neural encodings and weights of logical terms, as well as the weights of formulas. The method matters for artificial intelligence because it offers a possible way to combine deep learning with symbolic reasoning.

This explains why ChatGPT finds math problems so difficult: it is a neural network without symbolic logic. What the GPT architecture does is constantly make statistical inferences about the next word, so the nature of its thinking is empirical, not rational. But even so, ChatGPT is amazing enough, because, as the old sayings go, "read three hundred Tang poems until you know them by heart, and you can chant verse even if you cannot compose it", and "read a book a hundred times, and its meaning will reveal itself".
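The difference between empirical and rational answers can be seen in a deliberately tiny next-word model. This is only a toy bigram counter over a made-up corpus; GPT is a vastly larger transformer over tokens, so the sketch illustrates the principle, not the architecture.

```python
from collections import Counter, defaultdict

# Made-up training text for the toy model.
CORPUS = "two plus two is four . two plus three is five . two plus two is four ."


def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    tokens = text.split()
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def most_likely_next(counts, word):
    """Return the word seen most often after `word` in the corpus."""
    return counts[word].most_common(1)[0][0]


counts = train_bigrams(CORPUS)
prediction = most_likely_next(counts, "is")
print(prediction)
```

The model answers "four" after "is" simply because that continuation was the most frequent one in its training text, whichever sum came before. It has memorized patterns without computing anything, which is exactly the gap a symbolic module is meant to fill.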