Hello Gregory

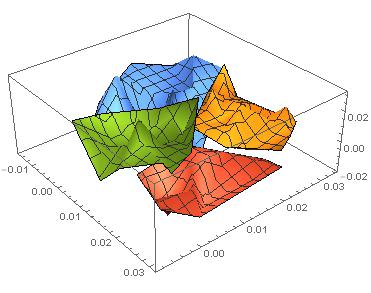

Yes, clusters can be multi-dimensional. Below is a simple example using numerical data: just 100 random points in three dimensions, so that the result can be visualised. I am asking the `FindClusters` command to generate 4 clusters:

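```mathematica
cdata = RandomVariate[NormalDistribution[0.01, 0.01], {100, 3}];  (* 100 random points in 3D *)
clusters = FindClusters[cdata, 4];  (* partition the points into 4 clusters *)
ListPointPlot3D[clusters]  (* scatter plot, one colour per cluster *)
```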

This will look as follows:

As you can see, you can work with multi-dimensional data, but visualising the clustered objects becomes more difficult once you move beyond three dimensions. If your data is non-numeric, you are likely to be working with strings. You can still try the default distance function, or pick a specific one, such as `DamerauLevenshteinDistance` or `HammingDistance`, through the `DistanceFunction` option (note that `HammingDistance` only accepts strings of equal length).
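For strings, a minimal sketch might look like this. The word list is made up purely for illustration; the only thing that changes compared with the numerical case is the `DistanceFunction` option.

```mathematica
(* Hypothetical sample words, chosen only for illustration *)
words = {"cat", "cart", "car", "bat", "house", "mouse", "horse", "hose"};

(* Cluster with the default distance function *)
defaultClusters = FindClusters[words, 2];

(* Cluster with Damerau-Levenshtein distance, which copes with words of different lengths *)
stringClusters = FindClusters[words, 2, DistanceFunction -> DamerauLevenshteinDistance]
```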

To run it, build your data as a nested list in which each sublist holds the values for one item (one data point), then apply `FindClusters` to that list.
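As a sketch of that recipe with more than three dimensions, the snippet below builds a hypothetical 5-dimensional data set (two synthetic, well-separated groups) where each sublist is one item, and clusters it. It uses the `data -> labels` form of `FindClusters`, which returns the labels of the items instead of the raw vectors; that is handy once the data can no longer be plotted. The item names and the two groups are assumptions invented for the example.

```mathematica
SeedRandom[42];  (* make the random data reproducible *)

(* Two synthetic groups of 5-dimensional points; each sublist is one item *)
groupA = RandomVariate[NormalDistribution[0, 1], {20, 5}];
groupB = RandomVariate[NormalDistribution[10, 1], {20, 5}];
data5d = Join[groupA, groupB];

(* Hypothetical item names, one per row of data5d *)
labels = Table["item" <> ToString[i], {i, Length[data5d]}];

(* Cluster the rows, but return the matching labels instead of the vectors *)
labelClusters = FindClusters[data5d -> labels, 2];

(* Cluster sizes give a quick summary when a plot is not an option *)
Length /@ labelClusters
```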

Hope this answers your query.

Kind regards

Igor