Using machine learning, we can distinguish large features in images, for example telling an apple from a chair. We can also distinguish finer differences, such as individual characters in handwriting. An interesting question is how well machine learning performs on images that vary a lot in overall appearance but still demand precision. My project for the Wolfram Summer School was to read the time from pictures of analogue clocks.

Generating a training set

Without photographing many different clocks, generating a training set for clocks is challenging. There seems to be a consensus that clocks in pictures should show 10:10, which makes photographs of other times hard to find. Using images from Google, a simple training set of about 200 examples can be obtained. To compensate for this relatively small set, it can be supplemented with a large number of clock images generated with the function ClockGauge. Below is an example of how to generate a large set of simple clocks, followed by a sample of the training set used.

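(* 4000 random {hour, minute} pairs *)
times = Table[{RandomInteger[{1, 12}], RandomInteger[{0, 59}]}, 4000];

(* render each time with ClockGauge and pair the image with its label *)
generatedclocks = Image[ClockGauge[#]] -> # & /@ times;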

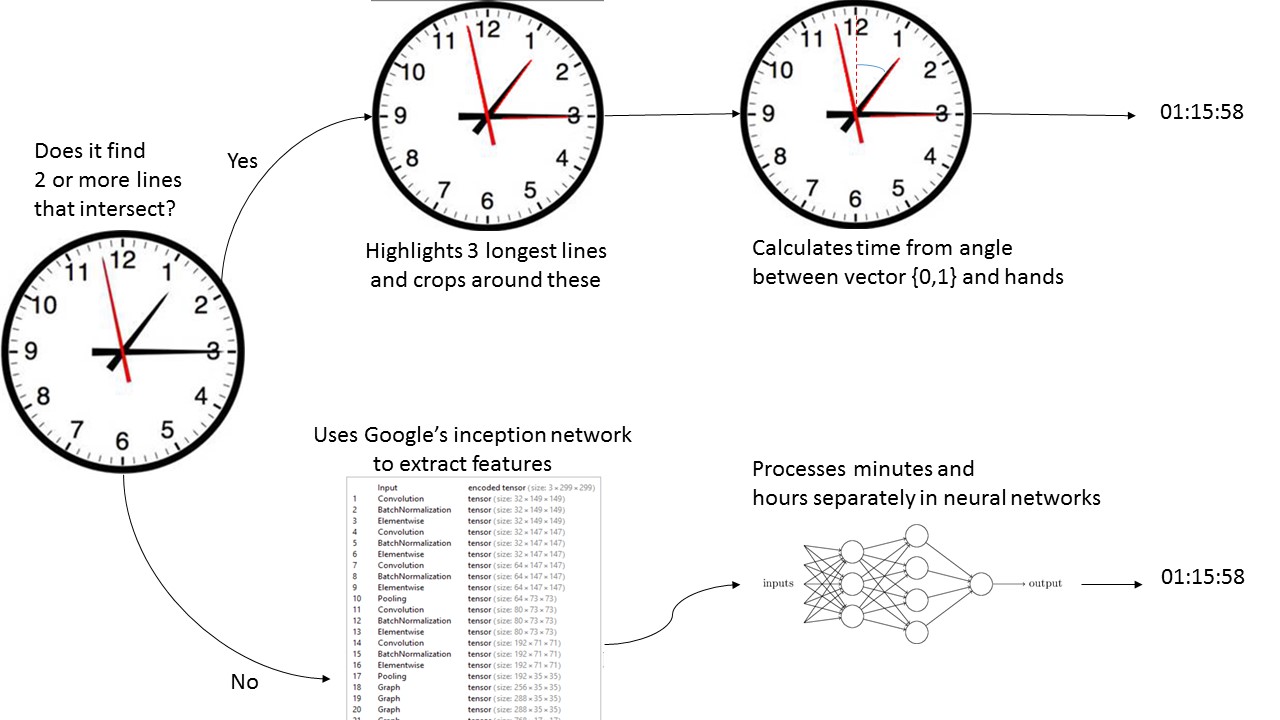

Algorithm

It seemed that neural networks, even when fed with feature-extracted images, do not work very well. As training continues or the number of layers increases, the net's predictions get closer and closer to a single value for the hours and minutes of every example. In fact, training the net for only a short time without a validation set seemed to give the best result.

Another approach is to find the longest lines in the image that intersect within a certain radius. The angle between each of these lines and the vector {0,1}, which points towards 12 o'clock, can then be used to find the time. This works every time the correct lines are identified. However, for simple images the method finds lines only about 50% of the time, and not necessarily all the right ones. A function was written using the algorithm below.
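To illustrate the angle step, here is a minimal Python sketch that turns hour-hand and minute-hand direction vectors into a time. It is not the author's Wolfram Language function. It assumes each vector points from the clock centre outwards, in coordinates where y points up, so that (0, 1) is 12 o'clock. The function names are hypothetical.

```python
import math


def clockwise_angle_from_up(dx: float, dy: float) -> float:
    """Angle in degrees of the vector (dx, dy), measured clockwise from (0, 1).

    Assumes y points up (12 o'clock is +y), so (1, 0) is 3 o'clock.
    """
    # atan2(dx, dy) (note the swapped arguments) is zero along +y and grows
    # toward +x, i.e. clockwise on a clock face drawn with y pointing up.
    return math.degrees(math.atan2(dx, dy)) % 360.0


def time_from_hands(hour_vec, minute_vec):
    """Convert hour- and minute-hand direction vectors into (hour, minute)."""
    hour_angle = clockwise_angle_from_up(*hour_vec)
    minute_angle = clockwise_angle_from_up(*minute_vec)
    # The minute hand moves 6 degrees per minute.
    minute = round(minute_angle / 6.0) % 60
    # The hour hand moves 30 degrees per hour plus 0.5 degrees per minute.
    # Removing the minute contribution before rounding keeps e.g. 2:58
    # from being read as 3:58.
    hour = round((hour_angle - 0.5 * minute) / 30.0) % 12
    return (12 if hour == 0 else hour, minute)
```

For example, time_from_hands((1, 0), (0, 1)) reads the hour hand at 3 o'clock and the minute hand at 12, giving (3, 0). Subtracting the minute hand's share of the hour-hand angle is one design choice. A simpler floor of hour_angle / 30 would also work for perfectly detected hands, but it is less tolerant of small angle errors near the hour mark.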

Below is an extract of the final function. This section takes the three longest line segments found in the image and, using their parametric equations, solves for the point where each pair of them intersects.

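(* detect straight line segments on the edge-detected clock image *)
clocklines = ImageLines[EdgeDetect[clock], 0.17, .1,
   Method -> {"Hough", "Segmented" -> True}];
(* flatten one level so that each element is a segment {p1, p2} *)
clocklines = Flatten[clocklines, 1];
(* length of each segment *)
norms = Norm[#[[1]] - #[[2]]] & /@ clocklines;
(* positions of the three longest segments: the candidate hands *)
best = Ordering[norms, 3, Greater];
handvectors = clocklines[[best]];
(* every pair of candidate hands *)
linesubset = Subsets[handvectors, {2}];
(* solve t p1 + (1 - t) p2 == t2 q1 + (1 - t2) q2 for each pair of segments *)
tvalues = {#, Flatten[NSolve[t #[[1, 1]] + (1 - t) #[[1, 2]] ==
        t2 #[[2, 1]] + (1 - t2) #[[2, 2]], {t, t2}], 1]} & /@
   linesubset;
(* keep only pairs with a unique solution for both t and t2 (non-parallel lines) *)
validt = Select[tvalues, Length[#[[2]]] == 2 &];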
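The pairwise intersection step can be sketched in Python as follows. This is a hypothetical re-implementation, not the author's code. It solves the same parametric equation as the NSolve call above, t p1 + (1 - t) p2 == t2 q1 + (1 - t2) q2, in closed form as a 2x2 linear system, and then applies the radius test. The extract does not show how the clock centre and radius are chosen, so the caller is assumed to supply them.

```python
import math
from itertools import combinations


def line_intersection(a1, a2, b1, b2, eps=1e-12):
    """Solve t*a1 + (1 - t)*a2 == t2*b1 + (1 - t2)*b2 for (t, t2).

    Like the NSolve version, t and t2 are not restricted to [0, 1], so this
    intersects the infinite lines through the two segments.
    Returns (t, t2, point), or None when the lines are parallel.
    """
    # Rewrite as t*u - t2*v == w with u = a1 - a2, v = b1 - b2, w = b2 - a2.
    ux, uy = a1[0] - a2[0], a1[1] - a2[1]
    vx, vy = b1[0] - b2[0], b1[1] - b2[1]
    wx, wy = b2[0] - a2[0], b2[1] - a2[1]
    det = vx * uy - ux * vy
    if abs(det) < eps:
        return None  # parallel or degenerate: no unique solution
    # Cramer's rule for the 2x2 system.
    t = (vx * wy - wx * vy) / det
    t2 = (ux * wy - uy * wx) / det
    point = (a2[0] + t * ux, a2[1] + t * uy)
    return t, t2, point


def hands_meeting_near(segments, center, radius, k=3):
    """Pairs among the k longest segments whose lines meet within radius of center."""
    longest = sorted(segments, key=lambda s: math.dist(s[0], s[1]), reverse=True)[:k]
    hits = []
    for s, r in combinations(longest, 2):
        sol = line_intersection(s[0], s[1], r[0], r[1])
        if sol is not None and math.dist(sol[2], center) <= radius:
            hits.append((s, r, sol[2]))
    return hits
```

With a long border line and two hands that start at the centre, only the hand pair survives. The border line is either parallel to one hand or meets the other far from the centre.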

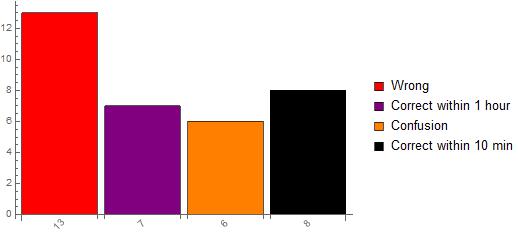

Performance

An issue with the image-processing step is that sometimes the wrong lines are found; for example, it finds the second and minute hands rather than the hour hand. This is a sample of the results, showing two readings that are wrong, two that are correct to within 1 hour, two that confused the hands, and two that are correct to within 10 minutes.

The bar chart below shows the performance on a simple test set of 34 examples. "Confusion" denotes the cases where the algorithm mixed up the hands.

Thus, this algorithm succeeds about 20% of the time and is correct to within one hour 40% of the time.
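One way to score readings like these is to measure the error on a 12-hour dial, so that 11:55 and 12:05 count as 10 minutes apart. The Python sketch below does this. Its bucket thresholds mirror the categories above, but the original does not give the exact rules used for the bar chart. The sketch also does not try to detect the "Confusion" case, which would need the detected hand angles. The function names are hypothetical.

```python
def dial_minutes(hour: int, minute: int) -> int:
    """Position on a 12-hour dial in minutes, with 12:00 mapped to 0."""
    return (hour % 12) * 60 + minute


def dial_error(predicted, actual) -> int:
    """Smallest difference in minutes between two (hour, minute) readings on a 12-hour dial."""
    d = abs(dial_minutes(*predicted) - dial_minutes(*actual)) % 720
    # Going the other way around the dial may be shorter.
    return min(d, 720 - d)


def score(predicted, actual) -> str:
    """Assign a reading to one of the (assumed) accuracy buckets."""
    err = dial_error(predicted, actual)
    if err <= 10:
        return "within 10 minutes"
    if err <= 60:
        return "within 1 hour"
    return "wrong"
```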

Improvements

There are two main improvements that could be made. The most important would be to generate a significantly larger training set of real clocks. For the image-processing approach, a better function than ImageLines, or a rule specifying that, e.g., the line for the second hand should be the thinnest, would also help.
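The thickness rule suggested above could be combined with the usual clock convention that the minute hand is longer than the hour hand. The Python sketch below assumes that each of the three candidate segments has a length and an estimated stroke width. The extract above only provides segment endpoints, so the width would have to be measured separately. HandCandidate and assign_hands are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class HandCandidate:
    name: str      # hypothetical label for the detected segment
    length: float  # segment length in pixels
    width: float   # estimated stroke width in pixels (assumed measurable)


def assign_hands(candidates):
    """Label three candidates as the second, minute and hour hands.

    Heuristic: the thinnest segment is the second hand; of the other two,
    the longer is the minute hand and the shorter is the hour hand.
    """
    if len(candidates) != 3:
        raise ValueError("expected exactly three hand candidates")
    second = min(candidates, key=lambda c: c.width)
    rest = [c for c in candidates if c is not second]
    minute, hour = sorted(rest, key=lambda c: c.length, reverse=True)
    return {"second": second, "minute": minute, "hour": hour}
```

Using width first and length second means a long, thin second hand is not mistaken for the minute hand. That is exactly the confusion described in the Performance section.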