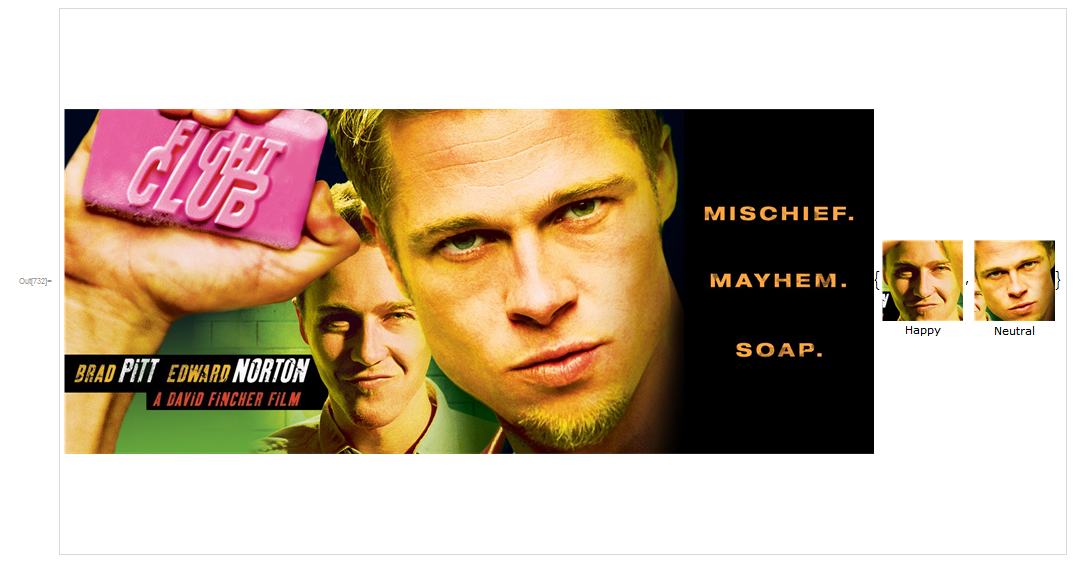

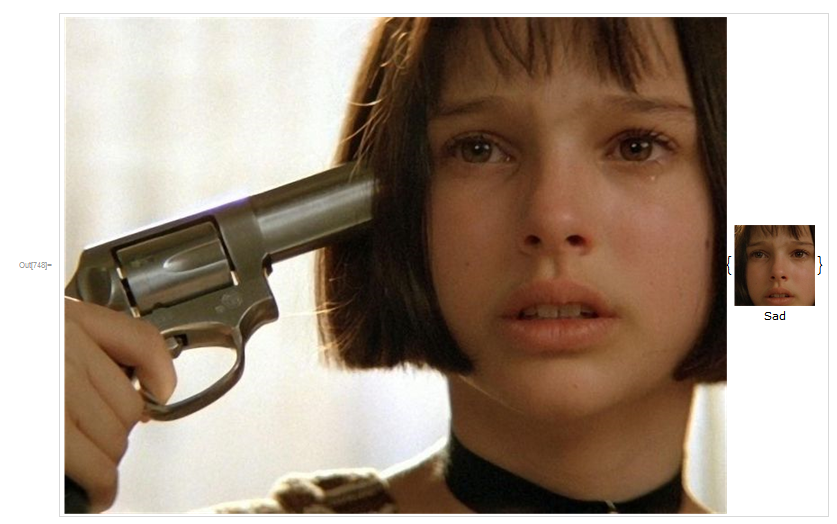

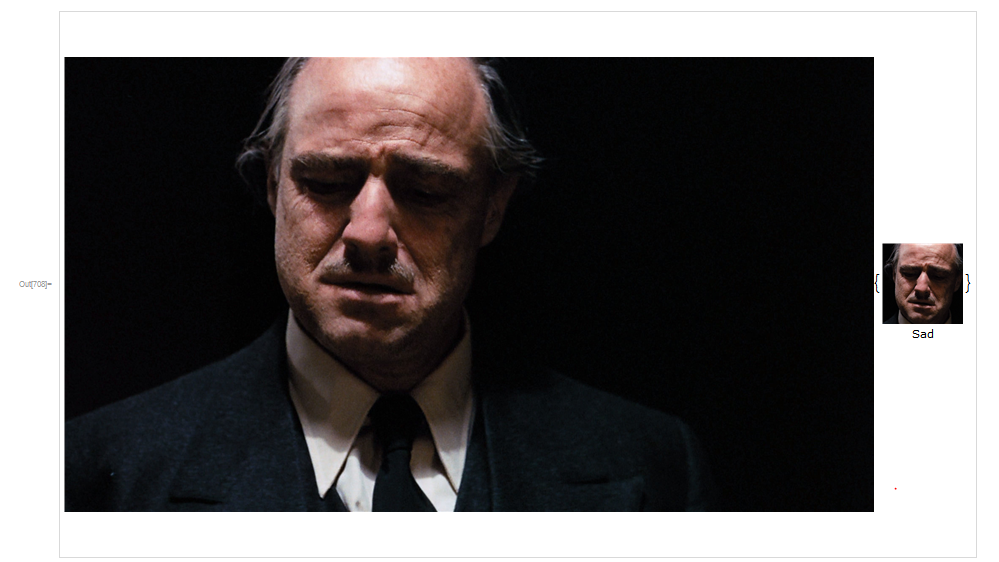

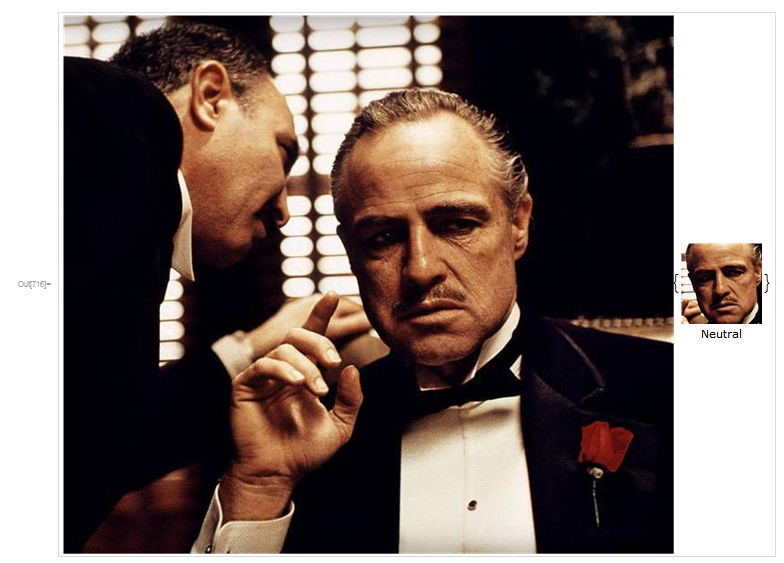

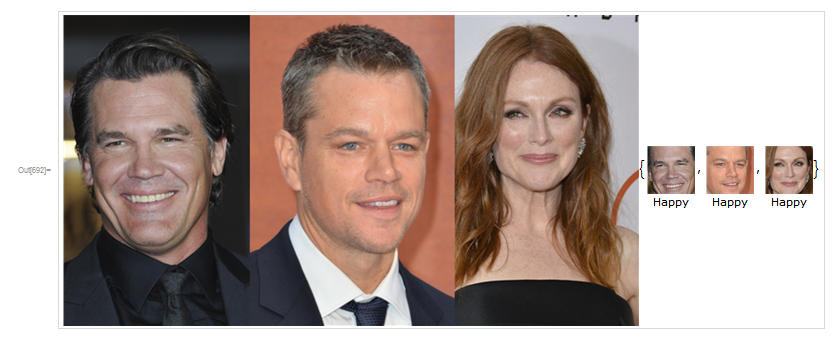

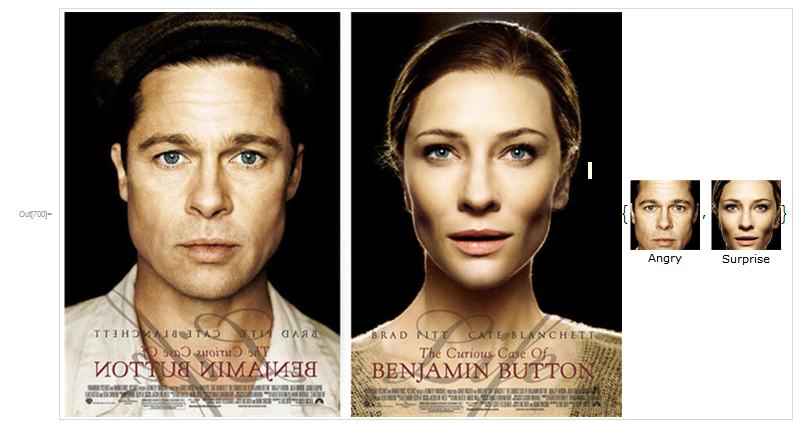

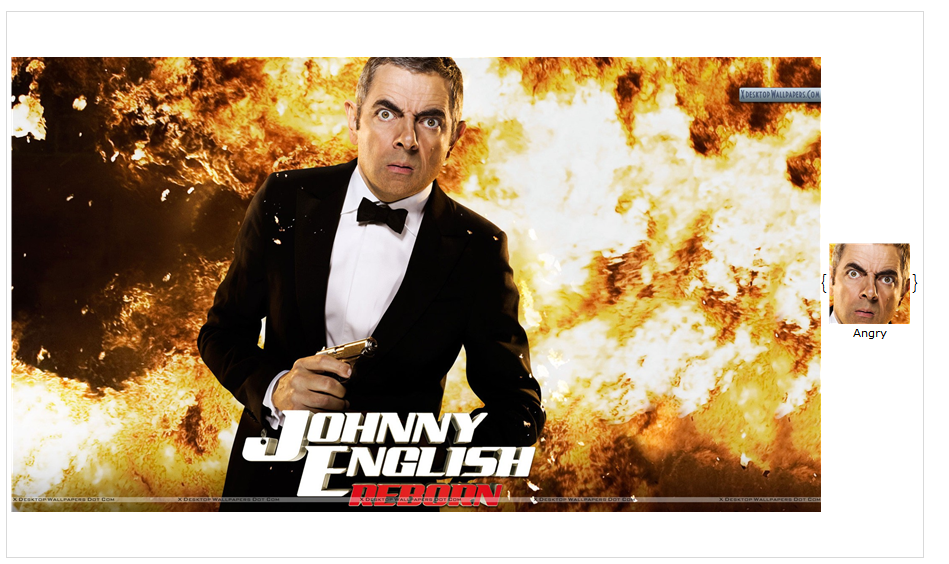

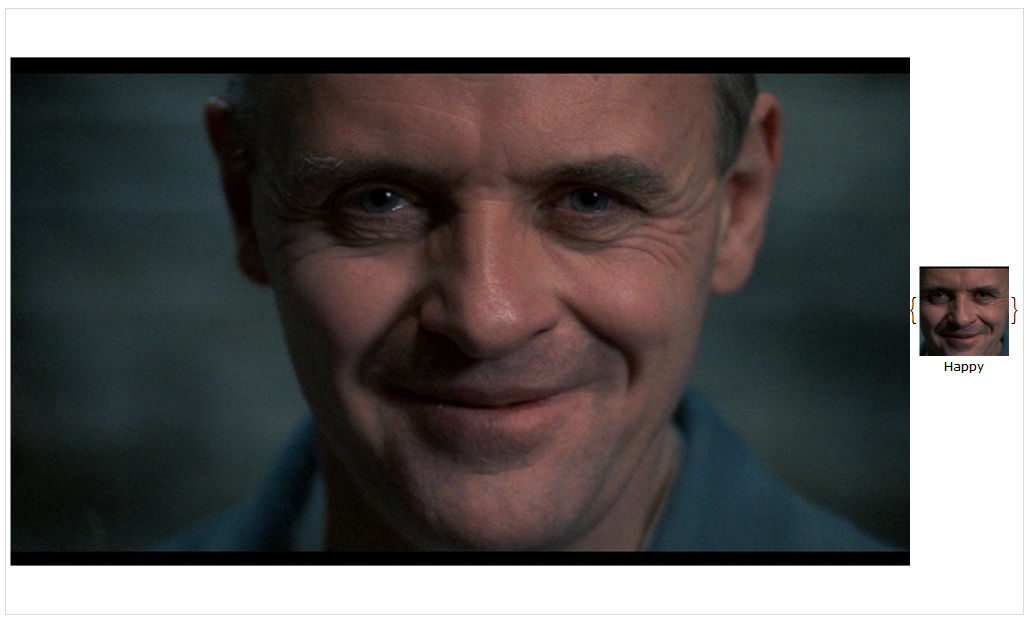

In each example, the big image on the left is the input and the output is shown on the right.

The aim of my project for the Wolfram Summer School in Armenia is to recognize a person's emotions using a neural network that takes a picture of the face as input. The network is based on LeNet. In this paper, I propose a fully automated approach to facial emotion recognition. Although only four databases were used during the research, manual transformations were applied to generate additional, more practical data on top of the ready-made ones.

Collecting Data

Four different databases were used during this research:

- Cohn-Kanade AU-Coded Expression Database

- Japanese Female Facial Expression

- FaceTales

- Yale face database

Every database has its own set of emotions, so we had to filter for the emotions we are interested in. We settled on seven emotions plus a Neutral face. Here is the number of pictures for each emotion among the 4211 labeled pictures that we had:

- Happy (844)

- Sad (412)

- Angry (659)

- Disgusted (592)

- Afraid (408)

- Ashamed (81)

- Surprised (775)

- Neutral (440)

Some emotions are taken from different datasets.
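As a quick check of the counts listed above, the following sketch computes the share of each emotion in percent. The variable names `counts` and `shares` are only illustrative and do not come from the notebook.

```wolfram
(* Image counts per emotion, copied from the list above *)
counts = <|"Happy" -> 844, "Sad" -> 412, "Angry" -> 659, "Disgusted" -> 592,
   "Afraid" -> 408, "Ashamed" -> 81, "Surprised" -> 775, "Neutral" -> 440|>;

Total[counts]   (* 4211, the number of labeled pictures *)

(* Share of each emotion in percent, rounded to one decimal place *)
shares = Map[Round[100. #/Total[counts], 0.1] &, counts]
```

The classes are not balanced: Happy makes up about 20% of the pictures, while Ashamed is below 2%.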

Tolerance problem

In the real world there is no guarantee that a person stands perfectly straight in front of the camera, so the data was transformed to match real situations more closely. All images were rotated by 5, 10 and 15 degrees in both the clockwise and counterclockwise directions (with appropriate cut-offs applied to the newly generated artifacts) and were also reflected from left to right in order to generate more data. This manual generation increased the overall number of images available for training by a factor of 14.

The functions below describe the process:

(* Add a left-right mirrored copy of every image; the keys are images, the values are labels *)
reflectData := Join[KeyMap[(ImageReflect[#, Left -> Right]) &, #], #] &;

(* Add copies rotated by 5, 10 and 15 degrees in both directions; each result has size 128 and a white background *)
rotateDatas[x_] := Join[
  KeyMap[Function[u, ImageRotate[u, -5 Degree, 128, Background -> White]], x],
  KeyMap[Function[u, ImageRotate[u, -10 Degree, 128, Background -> White]], x],
  KeyMap[Function[u, ImageRotate[u, -15 Degree, 128, Background -> White]], x],
  KeyMap[Function[u, ImageRotate[u, +5 Degree, 128, Background -> White]], x],
  KeyMap[Function[u, ImageRotate[u, +10 Degree, 128, Background -> White]], x],
  KeyMap[Function[u, ImageRotate[u, +15 Degree, 128, Background -> White]], x],
  x   (* keep the unrotated originals *)
]
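The factor of 14 follows from the two functions above: each image is kept in seven orientations (six rotations plus the original), and each of those also gets a mirrored copy. A small arithmetic sketch, with illustrative variable names:

```wolfram
angles = {-15, -10, -5, 5, 10, 15};          (* rotation angles in degrees *)

(* (6 rotations + original) x (mirrored + original) *)
variantsPerImage = (Length[angles] + 1)*2    (* 14 *)

4211*variantsPerImage                        (* 58954 images after augmentation *)
54954 + 3000 + 1000                          (* 58954, the sizes of the training, validation and test sets *)
```

The total agrees with the sum of the three data set sizes given in the Training section.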

Preprocess

Every picture is reduced to its biggest detected face (the built-in FindFaces function is used with default settings), and the margin around the face is omitted. The result is rescaled to 128x128 pixels and converted to grayscale, so that all pictures have the same face size and the same color properties.

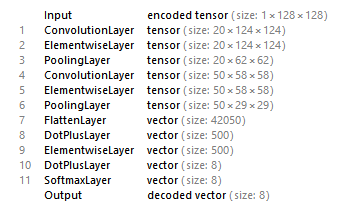
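The preprocessing step can be sketched as follows. This is not the code from the notebook: `preprocessFace` and `toRectangle` are hypothetical helpers, and the sketch assumes that FindFaces returns either Rectangle objects (newer versions) or {{xmin, ymin}, {xmax, ymax}} pairs (older versions).

```wolfram
(* Normalize both bounding-box formats to a Rectangle *)
toRectangle[r_Rectangle] := r
toRectangle[{p1_List, p2_List}] := Rectangle[p1, p2]

(* Crop the biggest detected face, rescale to 128x128 and convert to grayscale *)
preprocessFace[img_Image] := Module[{faces},
  faces = toRectangle /@ FindFaces[img];
  If[faces === {},
   Missing["NoFaceFound"],                       (* nothing to crop *)
   ColorConvert[
    ImageResize[
     ImageTrim[img, First[MaximalBy[faces, Area]]],  (* face with the largest box *)
     {128, 128}],
    "Grayscale"]
   ]
  ]
```

Applied to a photograph, `preprocessFace[photo]` would return a 128x128 grayscale face image, or a Missing value when no face is detected.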

Neural network

The network is based on the LeNet neural network, which was originally used to recognize digits. The encoder and decoder were changed to meet the requirements of the present problem. The resulting layers are listed in the code below, which creates the network:

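classes = {"Neutral", "Angry", "Happy", "Sad", "Afraid", "Disgusted", "Ashamed", "Surprise"};

(* The decoder turns the output probabilities into a class label; the encoder turns an image into a 128x128 grayscale array *)
decoder = NetDecoder["Class", classes];
encoder = NetEncoder["Image", {128,128}, "Grayscale"];
loss = CrossEntropyLossLayer["Target" -> NetEncoder["Class", classes]];

(* LeNet-style chain: two convolution/pooling stages followed by two fully connected layers *)
(* DotPlusLayer is the fully connected layer; newer Wolfram Language versions call it LinearLayer *)
lenet = NetChain[{
    ConvolutionLayer[20, {5, 5}],
    ElementwiseLayer[Ramp],
    PoolingLayer[{2, 2}, {2, 2}],
    ConvolutionLayer[50, {5, 5}],
    ElementwiseLayer[Ramp],
    PoolingLayer[{2, 2}, {2, 2}],
    FlattenLayer[],
    DotPlusLayer[500],
    ElementwiseLayer[Ramp],
    DotPlusLayer[8],
    SoftmaxLayer[]
  },
  "Output" -> decoder,
  "Input" -> encoder
];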
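To see how the 128x128 input shrinks through the chain above, the layer sizes can be worked out by hand. The sketch below assumes the default settings of ConvolutionLayer (stride 1, no padding); `outSize` is an illustrative helper, not part of the notebook.

```wolfram
(* Output side length of a convolution or pooling step without padding *)
outSize[in_, kernel_, stride_: 1] := Floor[(in - kernel)/stride] + 1

s1 = outSize[128, 5]      (* 124 after the first 5x5 convolution *)
s2 = outSize[s1, 2, 2]    (* 62 after the first 2x2 pooling *)
s3 = outSize[s2, 5]       (* 58 after the second 5x5 convolution *)
s4 = outSize[s3, 2, 2]    (* 29 after the second 2x2 pooling *)

flat = 50*s4^2            (* 42050 inputs to the first fully connected layer *)
flat*500 + 500            (* 21025500 weights and biases in that layer *)
```

Under these assumptions, almost all of the roughly 21 million parameters sit in the first fully connected layer.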

Training

The data was split into three parts: training data (54954 images), validation data (3000 images) and test data (1000 images). Training for 10 rounds took 14 hours on an Intel Core i5 4210U CPU running Windows 10 x64, and the accuracy on the test data was 98.5%. However, there is a good chance that a test image is a rotated or reflected version of an image in the training data (or vice versa), so this number is not very reliable. The results of dynamic tests with the laptop camera are still good.

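(* Hold out 1000 random images for testing and 3000 for validation; the rest is training data *)
Block[{tempData},
  {testData, tempData} = TakeDrop[RandomSample[complexData], 1000];
  {validationData, trainData} = TakeDrop[RandomSample[tempData], 3000]
];

(* classGray is not defined in this post; it is assumed to hold the network to be trained *)
classComplex = NetTrain[classGray, Normal[trainData], loss, ValidationSet -> Normal[validationData]];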

Issues and Further Improvement

One of the elements of this application is the Wolfram Language built-in function FindFaces. This function is still being improved and has several issues, such as detecting non-face regions and having problems with rotated pictures. Because of this, one additional step was added to the user interface: if some parts of the image are falsely recognized as faces, one can discard them by clicking on the image. This still does not really solve the problem for dynamic recognition.

Even in dynamic mode, every frame is processed on its own, so wrong results may be shown when the picture changes rapidly. The functionality could be improved by saving the probabilities of every emotion for several frames and estimating the emotion over that period with some simple formula.
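One simple formula of this kind is to average the class probabilities over the last few frames and report the emotion with the highest average. The sketch below is only an illustration: `smoothedEmotion` is a hypothetical helper and the probabilities are made up. For a network with a "Class" decoder, per-frame probabilities of this shape can be obtained with `net[image, "Probabilities"]`.

```wolfram
(* Average the class probabilities of several frames and return the most likely emotion *)
smoothedEmotion[frameProbs : {__Association}] := Module[{avg},
  avg = Merge[frameProbs, Mean];       (* per-class mean over the frames *)
  First[MaximalBy[Keys[avg], avg]]     (* class with the largest mean *)
  ]

(* Made-up probabilities for three consecutive frames, restricted to two classes *)
frames = {<|"Happy" -> 0.6, "Sad" -> 0.4|>,
   <|"Happy" -> 0.3, "Sad" -> 0.7|>,
   <|"Happy" -> 0.7, "Sad" -> 0.3|>};

smoothedEmotion[frames]   (* "Happy", although the middle frame alone would give "Sad" *)
```

A longer window gives steadier output but reacts more slowly to a real change of expression.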

The experiment showed that it would have been wiser to split the images into training, validation and test data first, and only then apply the manual transformations. The result may not change much, but the accuracy would be more reliable.
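A minimal sketch of this order of operations is shown below. `splitThenAugment` is a hypothetical helper, not code from the notebook; it assumes the data is an association with images as keys and labels as values, and it takes the augmentation as a function argument.

```wolfram
(* Split the original images first, then augment each part separately,
   so that no transformed copy of a test image can end up in the training data *)
splitThenAugment[data_Association, nTest_Integer, nVal_Integer, augment_] :=
 Module[{keys, testKeys, valKeys, trainKeys, rest},
  keys = RandomSample[Keys[data]];
  {testKeys, rest} = TakeDrop[keys, nTest];
  {valKeys, trainKeys} = TakeDrop[rest, nVal];
  <|"Train" -> augment[KeyTake[data, trainKeys]],
   "Validation" -> augment[KeyTake[data, valKeys]],
   "Test" -> augment[KeyTake[data, testKeys]]|>
  ]

(* Toy check with integers standing in for images and no augmentation *)
toy = AssociationThread[Range[10] -> ConstantArray["Happy", 10]];
Length /@ splitThenAugment[toy, 2, 2, Identity]
(* <|"Train" -> 6, "Validation" -> 2, "Test" -> 2|> *)
```

With the functions from this post, the augmentation argument could be something like `reflectData[rotateDatas[#]] &`, and the split sizes would be chosen in terms of original images rather than augmented ones.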

All training data were pictures of random people facing the camera directly. Having even more databases for training may solve this problem in the future. The emotions that the neural network learned to recognize are the ones present in the databases. It would be possible to add more emotions, such as Contempt or Tired, and here too more databases may help.

Conclusion

Applying machine learning methods to emotion recognition was successful. Our results appear to be at the state of the art in facial emotion recognition. The network is designed to perform in controlled situations (a stable picture, enough light, and a person standing straight and facing the camera). More data, and maybe a more complex network, are required to improve the functionality so that it can be applied to more practical, real-life situations. Because of the selected network architecture, the network could be trained on a low-level representation of the data that had minimal processing (as opposed to elaborate feature extraction). Because of the redundant nature of the data and the constraints imposed on the network, the learning time was relatively short considering the size of the training set (one gigabyte of grayscale 128x128 images).

One can test the application by downloading this network file (Mirror), putting it in $HomeDirectory (usually the Documents folder for Windows users) and then evaluating only the last two parts of the code in the attached notebook.

Happy to supply more information on request.

Some more examples

(With some special thanks to Brad Pitt, Natalie Portman, Marlon Brando, Edward Norton, Shrek and others for all special things they have done.)