Hi David.

I think what you are looking for is LearningRateMultipliers. LearningRateMultipliers is an option for NetTrain that lets you specify what learning rate you want for each layer in the network.

Look at the details section for more information, but these seem to be the most important lines:

"m;;n->r use multiplier r for layers m through n"

"If r is zero or None, it specifies that the layer or array should not undergo training and will be left unchanged by NetTrain."

"LearningRateMultipliers->{layer->None} can be used to "freeze" a specific layer."

Here is an example. First, we will get some model from NetModel:

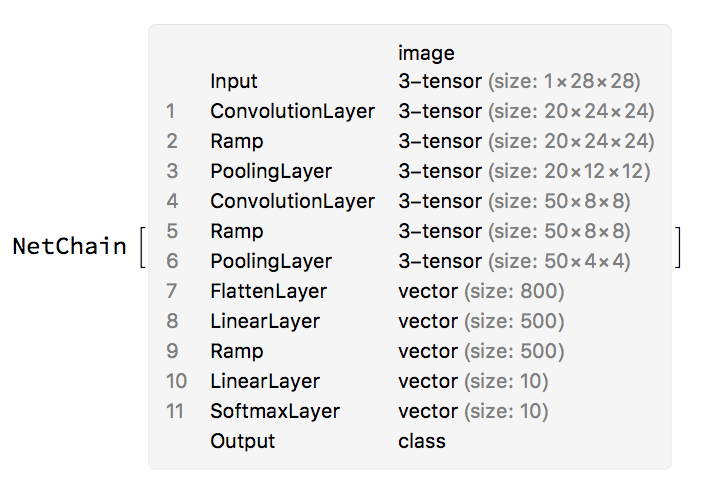

net = NetModel["LeNet Trained on MNIST Data"]

Then we can get some training and test data:

resource = ResourceObject["MNIST"];

trainingData = ResourceData[resource, "TrainingData"];

testData = ResourceData[resource, "TestData"];

And finally, we can train just the layers after layer 7:

NetTrain[net, trainingData, ValidationSet -> testData, LearningRateMultipliers -> {1 ;; 7 -> None}]

This particular example probably isn't all too useful, as we're probably using the same dataset as this network was originally trained on, but I think this shows the general idea.