Stephen Wolfram's latest blog post is about communicating with extraterrestrials using a beacon or time capsule, and it is worth checking out, especially for those interested in language, AI, and related topics. Basically, one of the biggest nonobvious obstacles is the principle of computational equivalence, and the obvious problem with a lot of ideas is that symbols and drawings get their meaning from cultural conventions.

I had been thinking about how Wolfram's A New Kind of Science gives insights into the Fermi paradox, which I think is related to his recent post. Roughly speaking, the Fermi paradox goes like this: aliens should already be visiting us if one assumes any reasonable positive chance for each requirement of an alien civilization (whatever that means), e.g. a star with planets, planets that are habitable, life developing, civilization arising, technology developing, and probes or messages being sent out with that technology, either on purpose or by accident. The messages or travelers could go slowly since the universe is so old, and the probability of each required step can be very small because there are so many stars in the galaxy (even ignoring other galaxies).
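The counting argument can be written as a rough product in the style of the Drake equation. This is only a sketch: the symbols $N_\ast$ (number of stars in the galaxy), $p_i$ (chance of the $i$-th required step) and $E$ (expected number of civilizations that send something out) are labels introduced here, the steps are assumed independent, and the numbers are illustrative rather than estimates.

```latex
% Expected number of civilizations, assuming n independent required steps
E = N_\ast \prod_{i=1}^{n} p_i

% Illustration with assumed values: N_* = 10^{11} stars and the six steps
% listed above, each given the same small chance p_i = 1/50
E = 10^{11} \times \left(\tfrac{1}{50}\right)^{6}
  = 10^{11} \times 6.4 \times 10^{-11}
  = 6.4
```

Even with every step at a 2% chance, the sheer number of stars keeps the expected count above one, which is the tension the paradox points at.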

There are many takes on this paradox (including the proof-by-contradiction claim that the chances of civilization are exactly zero). There is a note in the book discussing the paradox, but I was thinking there could be another NKS-centric explanation (probably similar to what has been said before, but based on a computational way of thinking).

Consider the space of animal intelligences. We don't even have an NKS model for this, but let's say the space has N rules which are universal. That would mean there are N rules that can effectively model all animal intelligences, capturing the main features while allowing some fine details to differ. If two species use the same rule then they can understand each other. If a species has a universal rule then it is theoretically possible for it to understand the others, but usually this is so hard that any other universal intelligence is practically unintelligible to it.

So one answer to the Fermi paradox is that we are surrounded by alien civilizations that are bizarre and unidentifiable to us as intelligent (and vice versa). The number N of universal rules in the space of naturally arising animal intelligences might be the most important factor, since one might expect from random placement in 3D that between one and ten unintelligible neighbors would be enough to isolate us from any other civilizations that we could communicate with.
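To see how N enters, here is a back-of-the-envelope version under assumptions that are mine and not part of the argument above: each of our $k$ nearest neighbors independently uses one of the $N$ universal rules with equal probability, and only a neighbor using our own rule is intelligible to us.

```latex
% Chance that none of the k neighbors shares our rule (assumed uniform, independent)
P(\text{isolated}) = \left(1 - \tfrac{1}{N}\right)^{k}

% Illustrative values only
N = 10,\ k = 6:   \quad P = 0.9^{6}   \approx 0.53
N = 100,\ k = 10: \quad P = 0.99^{10} \approx 0.90
```

Under these assumptions, isolation becomes the likely outcome once N is much larger than the number of neighbors.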

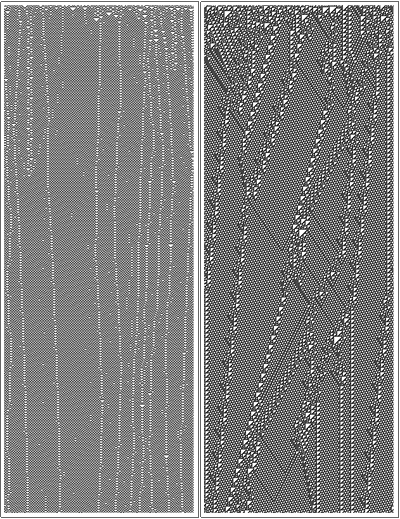

For example, one civilization might be running rule 54 and another rule 110, and neither has a practical way of knowing whether the other civilization's artifacts are just natural.

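(* Evolve rules 54 and 110 for 800 steps from a random 300-cell initial condition and plot them side by side *)
Row[ArrayPlot[CellularAutomaton[#, RandomInteger[1, 300], 800], ImageSize -> 200] & /@ {54, 110}]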