Accelerometer-based Gesture Recognition with Machine Learning

Introduction

This is Part 2 of a 2-part community post - Part 1 (Streaming Live Phone Sensor Data to the Wolfram Language) is available here: http://community.wolfram.com/groups/-/m/t/1386358

As technology advances, we are constantly seeking new, more intuitive methods of interfacing with our devices and the digital world; one such method is gesture recognition. Although touchless human interface devices (kinetic user interfaces) exist and are in development, their cost and the configuration they require sometimes make them impractical, particularly for mobile applications. A simpler approach would be to use devices the user already carries, such as a phone or a smartwatch, to detect basic gestures, taking advantage of the wide array of sensors these devices include. To assess the feasibility of this approach, we investigate methods of asynchronous communication between a mobile device and the Wolfram Language, and we implement a gesture recognition system that uses a machine learning model to classify a few simple gestures from mobile accelerometer data.

Investigating Methods of Asynchronous Communication

To implement an accelerometer-based gesture recognition system, we must devise a suitable means for a mobile device to transmit accelerometer data to a computer running the Wolfram Language (WL). On a high level, the WL has baked-in support for a variety of devices - specifically the Raspberry Pi, Vernier Go!Link compatible sensors, Arduino microcontrollers, webcams and devices using the RS-232 or RS-422 serial protocol (http://reference.wolfram.com/language/guide/UsingConnectedDevices.html); unfortunately, there is no easy way to access sensor data from Android or iOS mobile devices.

On a low level, the WL natively supports TCP and ZMQ socket functionality, as well as receipt and transmission of HTTP requests and Pub-Sub channel communication. In Part 1 of this community post (http://community.wolfram.com/groups/-/m/t/1386358), we investigate the feasibility of two of these approaches, sockets and channels, for transmitting accelerometer data.

Gesture Classification using Neural Networks

Now that we are able to stream accelerometer data to the WL, we can proceed to implement gesture recognition / classification. Because of limited time at the camp, we used the UDP socket method (a Java DatagramSocket accessed through J/Link) to do this. In the future, we hope to move the system over to the (more user-friendly) channel interface.

We first configure the sensor stream, allowing live accelerometer data to be sent to the Wolfram Language:

Configure the Sensor Stream

- Install the "Sensorstream IMU+GPS" app (https://play.google.com/store/apps/details?id=de.lorenz_fenster.sensorstreamgps)

- Ensure the sensors you want to stream to Wolfram are ticked on the 'Toggle Sensors' page. (If you want to stream other sensors besides 'Accelerometer', 'Gyroscope' and 'Magnetic Field', ensure the 'Include User-Checked Sensor Data in Stream' box is ticked. Beware, though - the more sensors are ticked, the more latency the sensor stream will have.)

- On the "Preferences" tab:
  a. Change the target IP address in the app to the IP address of your computer (ensure your computer and phone are connected to the same local network).
  b. Set the target port to 5555.
  c. Set the sensor update frequency to 'Fastest'.
  d. Select the 'UDP stream' radio button.
  e. Tick 'Run in background'.

- Switch the stream ON before executing the code. (NB: ensure your phone does not fall asleep during streaming. The 'Caffeinate' app (https://play.google.com/store/apps/details?id=xyz.omnicron.caffeinate&hl=en_US) is one way to prevent this.)

- Execute the following WL code:

(in part from http://community.wolfram.com/groups/-/m/t/344278)

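Needs["JLink`"];
QuitJava[]; (* shut down any running Java session, releasing port 5555 if a previous run left it open *)
InstallJava[];

(* listen for UDP packets from the phone on port 5555 *)
udpSocket=JavaNew["java.net.DatagramSocket",5555];

(* block until one packet arrives, then return its bytes *)
readSocket[sock_,size_]:=JavaBlock@Block[{datagramPacket=JavaNew["java.net.DatagramPacket",Table[0,size],size]},
  sock@receive[datagramPacket];
  datagramPacket@getData[]];

(* drop the zero padding, convert the bytes to a comma-separated string, and Sow it *)
listen[]:=record=DeleteCases[readSocket[udpSocket,1200],0]//FromCharacterCode//Sow;

results={};

(* every 0.01 s, read one packet; once more than 700 are buffered, discard the oldest 150 *)
RunScheduledTask[AppendTo[results,Quiet[Reap[listen[]]]];If[Length[results]>700,results=Drop[results,150]],0.01];

(* the 500 most recent packets, each parsed into a list of numbers *)
stream:=Refresh[ToExpression[StringSplit[#[[1]],","]]&/@Select[results[[-500;;]],Head[#]==List&],UpdateInterval->0.01]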
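For readers outside the Wolfram Language, the listener above can be sketched with Python's standard library. This is a minimal sketch and makes two assumptions. First, each UDP packet is assumed to be one comma-separated line whose 3rd to 5th fields are the accelerometer x, y and z values, following the column convention used by movingAvg below. Second, the function names are illustrative. The buffer limits match the WL code: at most 700 packets are kept, and the oldest 150 are dropped when that limit is exceeded.

```python
import socket

MAX_PACKETS = 700  # buffer limit used by the WL scheduled task
DROP_COUNT = 150   # number of oldest packets discarded when the limit is exceeded


def parse_packet(raw: bytes) -> list:
    """Decode one comma-separated packet into floats, skipping empty fields."""
    text = raw.decode("ascii", errors="ignore").strip("\x00 \r\n")
    return [float(field) for field in text.split(",") if field.strip()]


def append_bounded(buffer: list, item) -> None:
    """Append item; if the buffer now exceeds MAX_PACKETS, drop the oldest DROP_COUNT items."""
    buffer.append(item)
    if len(buffer) > MAX_PACKETS:
        del buffer[:DROP_COUNT]


def listen(port: int = 5555, packets: int = 100) -> list:
    """Receive `packets` UDP datagrams on `port` and return the parsed readings."""
    buffer = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("", port))
        for _ in range(packets):
            raw, _sender = sock.recvfrom(1200)  # same 1200-byte size as readSocket
            append_bounded(buffer, parse_packet(raw))
    return buffer
```

For example, the made-up packet b"12345.67, 3, 0.12, 9.81, -0.40" parses to [12345.67, 3.0, 0.12, 9.81, -0.4]. With Python's 0-based indexing, positions 2, 3 and 4 then hold x, y and z.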

Detecting Gestures

On a technical level, the problem of gesture classification is as follows: given a continuous stream of accelerometer data (or similar),

1. distinguish periods during which the user is performing a given gesture from other activities / noise, and

2. identify / classify a particular gesture based on the accelerometer data during that period.

This essentially boils down to classification of a time series dataset, in which we can observe a series of emissions (accelerometer data) but not the states generating the emissions (gestures).

A relatively straightforward solution to (1) is to approximate the gradients of the moving averages of the x, y and z values of the data and take the Euclidean norm of the resulting gradient vector. Whenever this norm rises above a threshold, we take it that a gesture is being made.

movingAvg[start_,end_,index_]:=Total[stream[[start;;end,index]]]/(end-start+1);

^ Takes the average of the x, y or z values of stream (the column specified by index: x-->3, y-->4, z-->5) from index start to index end, inclusive.

normAvg[start_,middle_,end_]:=((movingAvg[middle,end,3]-movingAvg[start,middle,3])/(middle-start))^2+((movingAvg[middle,end,4]-movingAvg[start,middle,4])/(middle-start))^2+((movingAvg[middle,end,5]-movingAvg[start,middle,5])/(middle-start))^2;

^ Approximates the gradient at index middle for each of x, y and z. For each axis, it takes the average from middle to end minus the average from start to middle, and divides by (middle-start); this assumes the distance from start to middle equals the distance from middle to end. It then returns the sum of the squares of the three gradients. We do not need to take the square root of this sum to obtain the Euclidean norm. Because the sum is non-negative, comparing its square root to a threshold t is equivalent to comparing the sum itself to t^2, and skipping the square root saves some computation on every update.
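In symbols, let a_k[j] be the value of axis k at index j, and let s, m and e be the start, middle and end arguments. normAvg then computes the quantity S below:

```latex
\bar a_k(p, q) = \frac{1}{q - p + 1} \sum_{j = p}^{q} a_k[j],
\qquad
S(s, m, e) = \sum_{k \in \{x, y, z\}} \left( \frac{\bar a_k(m, e) - \bar a_k(s, m)}{m - s} \right)^2
```

A spike is flagged when S exceeds the chosen threshold. Since S is never negative, this is the same test as comparing the Euclidean norm, the square root of S, against the square root of the threshold.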

Thus

Dynamic[normAvg[-155,-150,-146]]

will yield the square of the Euclidean norm of the gradients of the x, y and z values of the data (approximated by calculating the averages from the 155th most recent to 150th most recent and 150th most recent to 146th most recent values). As accelerometer data is sent to the Wolfram Language, this value will update.

Data Collection

To train the network, we must collect gesture data, and we have a choice of representations. One option is to represent the gesture as a sequence of 3-dimensional vectors (x, y, z accelerometer data points) and perform time series classification on these sequences using hidden Markov models or recurrent neural networks. The other is to represent the gesture as a rasterised image of a graph, much like the one below, and perform image classification on that image.

Since the latter has had some degree of success (e.g. in http://community.wolfram.com/groups/-/m/t/1142260 ), we attempt a similar method:

PrepareDataIMU[dat_]:=Rasterize@ListLinePlot[{dat[[All,3]],dat[[All,4]],dat[[All,5]]},PlotRange->All,Axes->None,AxesLabel->None,PlotStyle->{Red,Green,Blue}];

^ Plots the x, y and z accelerometer values in dat (columns 3, 4 and 5, the same convention as movingAvg) with no axes or axis labels, in red, green and blue respectively, and rasterises the plot. Giving each axis its own RGB primary keeps the three traces distinguishable in the resulting image.
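The following stdlib-only Python sketch shows roughly what kind of image PrepareDataIMU produces: a small RGB image in which the x, y and z traces occupy the red, green and blue channels respectively. It is a simplification, not a reimplementation of ListLinePlot. It plots one dot per sample rather than joined lines and uses a black background, so that each channel holds exactly one trace. It also scales all three traces to a shared vertical range, loosely analogous to PlotRange->All. The image size and the name rasterize are assumptions.

```python
def rasterize(xs, ys, zs, width=64, height=32):
    """Draw x, y, z traces into the R, G, B channels of a height x width image.

    Returns a list of rows, each a list of [r, g, b] pixels with values 0-255.
    """
    lo = min(min(xs), min(ys), min(zs))
    hi = max(max(xs), max(ys), max(zs))
    span = (hi - lo) or 1.0               # flat data: avoid division by zero
    n = len(xs)
    image = [[[0, 0, 0] for _ in range(width)] for _ in range(height)]
    for channel, series in enumerate((xs, ys, zs)):  # 0 = red, 1 = green, 2 = blue
        for i, value in enumerate(series):
            col = round(i * (width - 1) / max(n - 1, 1))
            row = (height - 1) - round((value - lo) * (height - 1) / span)  # row 0 is the top
            image[row][col][channel] = 255
    return image
```

Where two traces cross, their channels combine (red plus green gives yellow), so no axis overwrites another.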

threshold = 0.8;

trainlist={};

appendToSample[n_,step_,x_]:=AppendTo [trainlist,PrepareDataIMU[Part[x,n;;step]]];

Dynamic[If[normAvg[-155,-150,-146]>threshold,appendToSample[-210,-70,stream],False],UpdateInterval->0.1]

^ Every 0.1 seconds, checks whether the squared norm of the approximate gradient at the 150th most recent data point (computed by normAvg) is greater than threshold. If it is, it creates a rasterised image of a graph of the accelerometer data from the 210th most recent to the 70th most recent data point and appends it to trainlist, a list of graphs of gestures. Patience is recommended here: there can be up to ~5 seconds' lag before a gesture appears. Make gestures reasonably vigorously, or they may not exceed the threshold.
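The trigger-and-capture step can be sketched in Python as a pure function over a snapshot of the stream. If the gradient norm at the 150th most recent sample exceeds the threshold, the function returns the rows from the 210th to the 70th most recent sample. This is a minimal sketch. The helper names are illustrative, rows are assumed to hold x, y and z at 0-based positions 2, 3 and 4, and the caller is responsible for the 0.1 s polling that Dynamic performs.

```python
THRESHOLD = 0.8     # same value as `threshold` in the WL code
X, Y, Z = 2, 3, 4   # 0-based columns of accelerometer x, y, z (WL columns 3, 4, 5)


def tail_span(rows, start, end):
    """Rows from the |start|-th to the |end|-th most recent, inclusive (start, end < 0)."""
    n = len(rows)
    return rows[n + start : n + end + 1]


def column_mean(rows, col):
    """Average of one column over a list of rows (as movingAvg)."""
    return sum(row[col] for row in rows) / len(rows)


def norm_avg(rows, start=-155, middle=-150, end=-146):
    """Squared norm of the approximate x/y/z gradient at `middle` (as normAvg)."""
    before = tail_span(rows, start, middle)
    after = tail_span(rows, middle, end)
    return sum(
        ((column_mean(after, c) - column_mean(before, c)) / (middle - start)) ** 2
        for c in (X, Y, Z)
    )


def capture_if_gesture(rows, threshold=THRESHOLD):
    """Return rows -210..-70 (inclusive, 141 rows) if a spike is detected, otherwise None."""
    if len(rows) < 210 or norm_avg(rows) <= threshold:
        return None
    return tail_span(rows, -210, -70)
```

The length check stands in for the error WL would raise when stream holds fewer than 210 readings.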

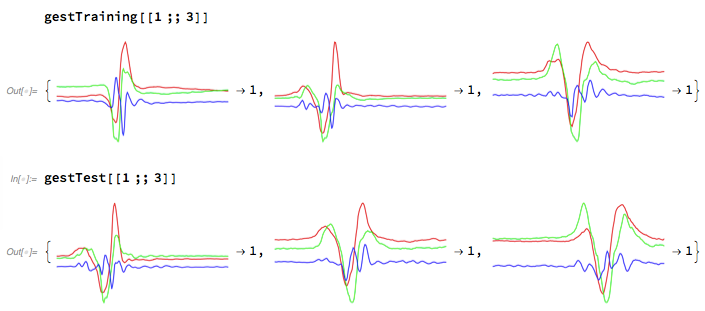

As a first test, we generated 30 samples of each of the digits 1 to 5 drawn in the air with a phone, storing the images of their graphs in trainlist. We then labelled each image as 1, 2, 3, 4 or 5, converting trainlist into a list of rules of the form <image of graph> -> <number> (gesture1to5w30 below). We split the data into training data (25 samples from each category) and test data (the remaining 5 samples from each category):

TrainingTest[data_,number_]:=Module[{maxindex,sets,trainingdata,testdata},
  maxindex=Max[Values[data]];
  sets=Table[Select[data,#[[2]]==x&],{x,1,maxindex}];
  sets=Map[RandomSample,sets];
  trainingdata=Flatten[Map[#[[1;;number]]&,sets]];
  testdata=Flatten[Map[#[[number+1;;-1]]&,sets]];
  Return[{trainingdata,testdata}]
]

^ For each label in data, randomly selects number examples for training and puts the remaining examples with that label into the test set. Labels are assumed to be the integers 1 to Max[Values[data]].

gestTrainingTest=TrainingTest[gesture1to5w30,25];

gestTraining=gestTrainingTest[[1]];

gestTest=gestTrainingTest[[2]];
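The same per-label split can be sketched in Python, assuming the labelled data is a list of (image, label) pairs analogous to the rules above. Unlike TrainingTest, this version groups by whatever labels appear rather than assuming the integers 1 to the maximum label. The name training_test is illustrative, and the optional seed only makes the example repeatable.

```python
import random
from collections import defaultdict


def training_test(data, number, seed=None):
    """Split (example, label) pairs per label: `number` of each label for training, the rest for testing."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for example, label in data:
        by_label[label].append((example, label))
    training, test = [], []
    for label in sorted(by_label):
        group = list(by_label[label])
        rng.shuffle(group)               # random order within each label, like RandomSample
        training.extend(group[:number])
        test.extend(group[number:])
    return training, test
```

With 30 samples per digit and number = 25, this yields 125 training and 25 test samples, matching the split described above.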

gestTraining and gestTest now contain rules (image -> label) like those below:

Machine Learning

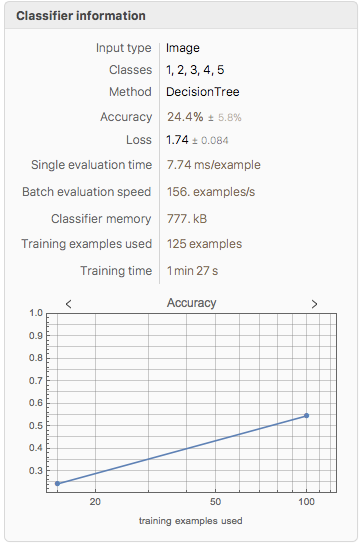

To train a model on these images, we first attempt a basic Classify:

TrainWithClassify = Classify[gestTraining]

ClassifierInformation[TrainWithClassify]

This gives a very poor training accuracy of 24.4%. With 5 classes, random guessing would score 20%, so this is only marginally better than chance.

As the input data consists of images, we try transfer learning on an image identification neural network (specifically the VGG-16 network):

net = NetModel["VGG-16 Trained on ImageNet Competition Data"]

We remove the last few layers from the network (which classify images into the classes the network was trained on), leaving the earlier layers which perform more general image feature extraction:

featureFunction = Take[net,{1,"fc6"}]

We train a classifier using this neural net as a feature extractor:

NetGestClassifier = Classify[gestTraining,FeatureExtractor->featureFunction]
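Using FeatureExtractor->featureFunction means that every image is first mapped to a feature vector by the truncated network, and an ordinary classifier is then trained on those vectors. The Python sketch below shows that two-stage structure with two stand-ins, both of which are assumptions. A toy feature function takes the place of VGG-16's fc6 output, and a nearest-centroid classifier takes the place of whatever method Classify actually selects. The function name is illustrative.

```python
import math


def train_with_feature_extractor(data, feature_fn):
    """Fit a nearest-centroid classifier on feature_fn(example) for (example, label) pairs."""
    sums, counts = {}, {}
    for example, label in data:
        vec = feature_fn(example)
        if label not in sums:
            sums[label], counts[label] = [0.0] * len(vec), 0
        sums[label] = [s + v for s, v in zip(sums[label], vec)]
        counts[label] += 1
    centroids = {lab: [s / counts[lab] for s in sums[lab]] for lab in sums}

    def classify(example):
        """Predict the label whose centroid is closest in feature space."""
        vec = feature_fn(example)
        return min(centroids, key=lambda lab: math.dist(vec, centroids[lab]))

    return classify
```

The classifier never sees raw pixels, only feature vectors. Swapping in a better feature function therefore changes what counts as "similar" without touching the classifier, which is the appeal of reusing a pretrained network's earlier layers.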

We now test the classifier using the data in gestTest:

NetGestTest = ClassifierMeasurements[NetGestClassifier,gestTest]

We check the training accuracy:

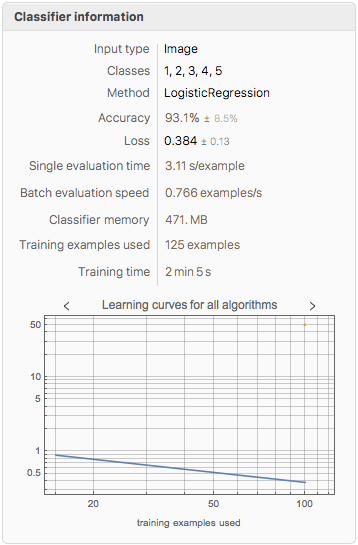

ClassifierInformation[NetGestClassifier]

NetGestTest["Accuracy"]

which returns 1., i.e. every test sample is classified correctly.

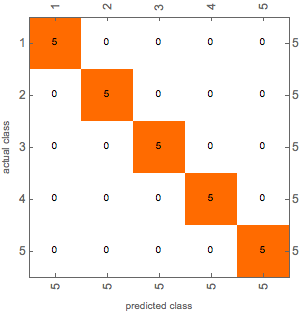

NetGestTest["ConfusionMatrixPlot"]

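This method appears promising: a training accuracy of 93.1% and a test accuracy of 100% were achieved. However, the test set contains only 25 samples (5 per digit), so the test figure should be treated with caution.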
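The accuracy and confusion-matrix figures reported by ClassifierMeasurements can be computed by hand from (actual, predicted) label pairs. Here is a minimal Python sketch; the function names are illustrative, and the pairs in any example are made up rather than taken from the results above.

```python
from collections import Counter


def accuracy(pairs):
    """Fraction of (actual, predicted) pairs whose prediction is correct."""
    return sum(actual == predicted for actual, predicted in pairs) / len(pairs)


def confusion_matrix(pairs, labels):
    """Counts with rows indexed by actual label and columns by predicted label."""
    counts = Counter(pairs)
    return [[counts[(actual, predicted)] for predicted in labels] for actual in labels]
```

A test accuracy of 100% means that all 25 test samples fall on the diagonal of this matrix.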

Implementation of Machine Learning Model

To use the model with live data, we identify gestures in the same way as in 'Detecting Gestures' (detecting 'spikes' in the data using moving averages). When a gesture is identified, however, it is passed to the classifier instead of being appended to a list. The classifier's outputs are collected in gestResults. This is kept separate from results, the buffer that the scheduled task fills with raw packets, because reusing results would mix classifier output into stream.

gestResults={""};

ClassGestIMU[n_,step_,x_]:=Module[{aa,xa,ya},
  aa=Part[x,n;;step];          (* window of raw readings *)
  xa=PrepareDataIMU[aa];       (* rasterised graph of the window *)
  ya=NetGestClassifier[xa];    (* predicted digit *)
  AppendTo[gestResults,{Length@aa,xa,ya}]
];

Dynamic[If[normAvg[-155,-150,-146]>threshold,ClassGestIMU[-210,-70,stream],False],UpdateInterval->0.1]

Real-time results (albeit with significant lag) can be seen by running

Dynamic@gestResults[[-1]]

Conclusions and Further Work

On the whole, this project was successful: gesture detection and classification using rasterised graph images proved to be a viable method. However, the system as-is is impractical and unreliable. It suffers from significant lag and training bias (it was trained to recognise the digits 1 to 5 the way they are drawn by one person only), and the sample size is small. We believe these problems could be addressed given more time.

Further extensions to this project include:

- Serious code optimisation to reduce / eliminate lag.

- An improved training interface to allow users to create their own gestures.

- Integration of the gesture classification system with the Channel interface (as described earlier) and deployment of this to the cloud.

- Investigation of the feasibility of using an RNN or an LSTM for gesture classification, using a time series of raw gesture data rather than relying on rasterised images (which, although accurate, can be quite laggy). Alternatively, hidden Markov models could be used in an attempt to recover the states (gestures) that generate the observed data (accelerometer readings).

- Adding an API to trigger actions based on gestures, and deploying gesture recognition technology as a native app on smartphones / smartwatches.

- Improvement of gesture detection. At the moment, the code takes a predefined 1-2 second 'window' of data around a detected spike. An improvement would be to detect when the gesture has ended and 'crop' the data values to include only the gesture made.

- Exploration of other applications of gesture recognition (e.g. walking style, safety, sign language recognition). Beyond limited UI navigation, a similar concept to what is currently implemented could be used with, say, a phone kept in the pocket. It could analyse walking styles / gaits and, for instance, predict or detect an elderly user falling and notify emergency services. Alternatively, with suitable methods for detecting finger motions, like flex sensors, such a system could be trained to recognise / transcribe sign language.

- Just for fun: training the model on Harry Potter-esque spell gestures, to use your phone as a wand...
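Here is one possible way to implement the cropping idea in the list above, sketched in Python under our own assumptions; it is not part of the implemented system. The sketch computes the squared gradient norm at every sample inside a captured window, using the same before/after moving-average difference as normAvg. It then keeps only the span from the first to the last above-threshold sample, plus a margin on each side. The half-width of 5 samples, the 10-sample margin and the function names are all hypothetical choices.

```python
def gradient_norms(xyz, half=5):
    """Squared norm of the moving-average gradient at each index of a window.

    xyz is a list of (x, y, z) tuples. Indices closer than `half` to either end
    are left at 0.0 because a full window is not available there.
    """
    norms = [0.0] * len(xyz)
    for m in range(half, len(xyz) - half):
        before = xyz[m - half : m + 1]   # samples m-half .. m
        after = xyz[m : m + half + 1]    # samples m .. m+half
        total = 0.0
        for axis in range(3):
            mean_before = sum(p[axis] for p in before) / len(before)
            mean_after = sum(p[axis] for p in after) / len(after)
            total += ((mean_after - mean_before) / half) ** 2
        norms[m] = total
    return norms


def crop_gesture(xyz, threshold=0.8, half=5, margin=10):
    """Keep the span from the first to the last above-threshold sample, plus `margin` each side."""
    norms = gradient_norms(xyz, half)
    active = [i for i, value in enumerate(norms) if value > threshold]
    if not active:
        return []
    lo = max(active[0] - margin, 0)
    hi = min(active[-1] + margin, len(xyz) - 1)
    return xyz[lo : hi + 1]
```

For a 100-sample window whose x value jumps to 10 between samples 40 and 59, the cropped span runs from sample 27 to sample 72. This span contains the whole burst and drops the quiet samples at either end.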

A notebook version of this post is attached, along with a full version of the computational essay.

Acknowledgements

We thank the mentors at the 2018 Wolfram High School Summer Camp - Andrea Griffin, Chip Hurst, Rick Hennigan, Michael Kaminsky, Robert Morris, Katie Orenstein, Christian Pasquel, Dariia Porechna and Douglas Smith - for their help and support during this project.

Attachments:
