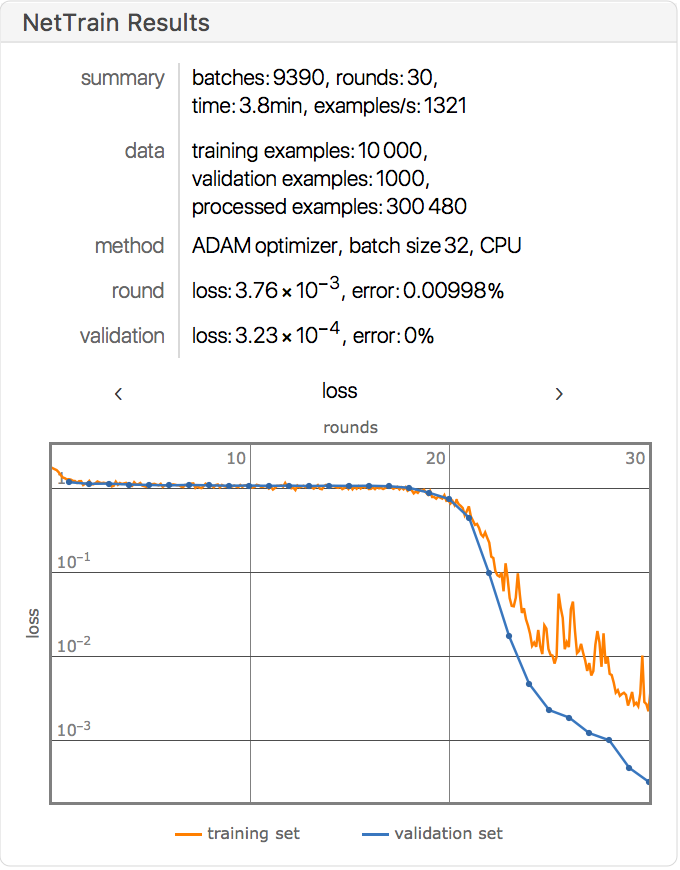

Hi guys, I'm curious why the loss on the validation set is even lower than the loss on the training set when I use `NetTrain`. For example, on this page:

https://reference.wolfram.com/language/tutorial/NeuralNetworksSequenceLearning.html#1094728277

for the Q&A RNN trained on the bAbI QA dataset, the validation loss ends up below the training loss. According to Goodfellow et al.'s *Deep Learning* book, the validation loss shouldn't be lower than the training loss. Right?

Is it possible that `NetTrain` mislabels these two sets when it plots the learning curve?