Twitter has been humming this week with people experimenting with OpenAI's GPT-3 language model. A few days ago I received an invitation to the private beta, and I think it's fair to say that I'm very impressed and surprised with how well it generalizes, even to newly provided context.

In this post I'll demonstrate the model's ability to quickly pick up on how to write Wolfram Language code.

Predicting What Comes Next

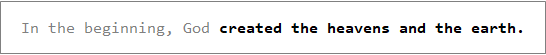

To use the language model, you provide it with a string of text (the gray text below), and its job is to predict what text should come next (the black text below). For example:

Specializing the Model

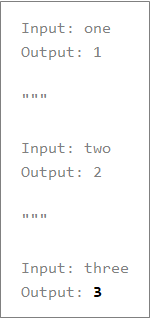

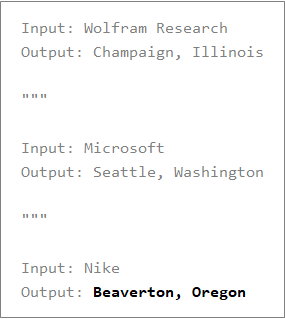

By providing the model with some preamble text containing a few examples that map an input to an output, it will -- surprisingly -- generalize that mapping to new inputs. For example:

And while that's just a toy example, its ability to generalize really is quite impressive:

Converting Natural Language to Code

It didn't take long for people to try using the model to convert natural language to code. And amazingly, it works!

Let's do some experiments to see how quickly it can pick up on how to write Wolfram Language code.
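The experiments below all follow the same few-shot pattern: a handful of worked examples, then a new description left open for the model to complete. As a rough sketch of how such a prompt might be assembled, the helper below joins example pairs into one string. The name `BuildFewShotPrompt` and the `Description:` / `Code:` labels are hypothetical, and the exact layout used in the screenshots may differ. The `"""` separator line is chosen to match what `AutoPruneOpenAIOutput` in the Code section looks for.

```wolfram
(* Hypothetical helper, not part of the OpenAI API: builds a few-shot prompt
   from "description" -> "code" example pairs plus one new description. *)
Clear[BuildFewShotPrompt];
BuildFewShotPrompt[examples : {___Rule}, newDescription_String] :=
  StringJoin[
    (* One block per worked example, each followed by a """ separator line. *)
    Map[
      "Description: " <> First[#] <> "\nCode: " <> Last[#] <> "\n\"\"\"\n" &,
      examples
    ],
    (* Leave the final "Code:" open so the model fills it in. *)
    "Description: " <> newDescription <> "\nCode:"
  ]

(* Assumed example pair; the wording of the description is illustrative. *)
BuildFewShotPrompt[
  {"the maximum of a list" -> "Max[list]"},
  "the minimum of a list"
]
```

The resulting string could then be passed to `OpenAICompletions`, with more example pairs added as the mappings get harder.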

Inferring Function Names

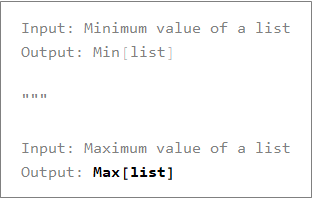

Let's see if a single example is enough to teach the model how to choose a reasonable function name:

Sure enough. It inferred that "Max" would be a good function name for "maximum", and it followed my lead using square brackets, passing a variable named list.
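A cheap sanity check on a completion like this is whether it parses as Wolfram Language at all. The sketch below is one possible way to do that without evaluating the generated code; `ParseCompletion` is a hypothetical helper name, and passing this check says nothing about whether the code does what was asked.

```wolfram
(* Hypothetical helper: parse a completion into a held expression,
   or return $Failed if it is not syntactically complete. *)
Clear[ParseCompletion];
ParseCompletion[code_String] :=
  If[SyntaxQ[code],
    (* HoldComplete keeps the parsed expression from being evaluated. *)
    ToExpression[code, InputForm, HoldComplete],
    $Failed
  ]

ParseCompletion["Max[list]"]   (* HoldComplete[Max[list]] *)
ParseCompletion["Max[list"]    (* $Failed: missing closing bracket *)
```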

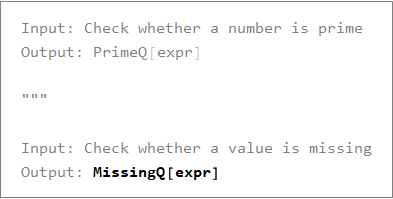

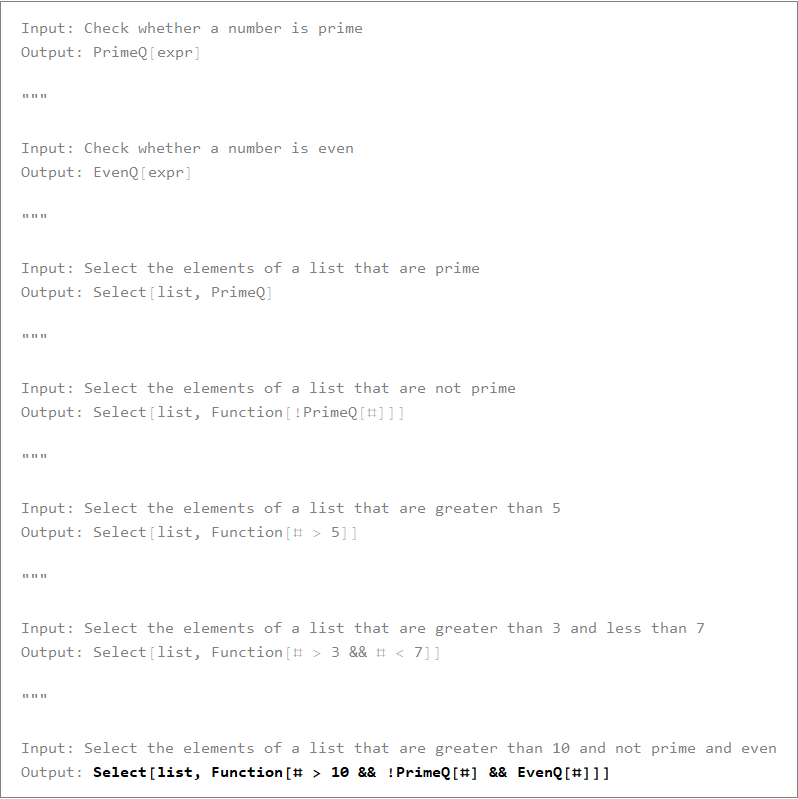

Let's see if it catches on to using "Q" as a suffix for Boolean-valued functions:

Argument Values

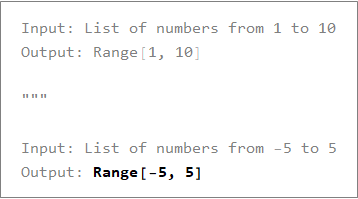

We're going to need to do more than choose function names -- we also need to deal with argument values properly. Let's see if the model can do that:

Variance in Phrasings

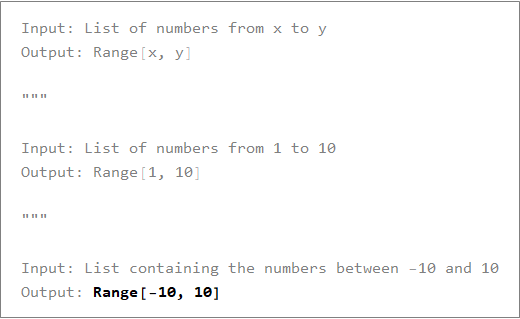

And of course, the model will need to be able to adapt to different phrasings:

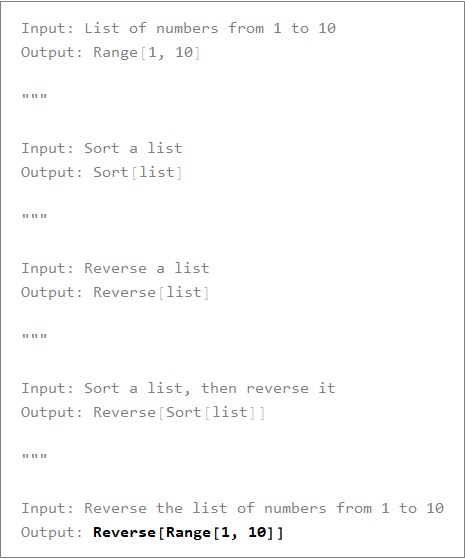

Composing Functions

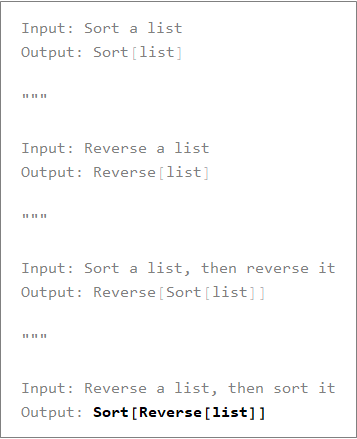

Let's see if it can learn how to compose functions:

Example 2:

Example 3:

Some Reflections and Predictions

We're just getting started here. It will be interesting to see how sophisticated and reliable GPT-3 can be at mapping natural language to code given enough examples. But these initial results are extremely promising.

One of my pet peeves as a software developer is getting stuck for 5 or 10 minutes trying to look up, in the documentation or on the web, how to do some simple thing. (It happened multiple times tonight.) I usually figure it out, but it breaks my flow. For many years I've dreamed of a system that could reliably take a short natural-language description and provide a high-quality code snippet demonstrating how to do it.

With GPT-3, it appears we're finally on the cusp.

https://openai.com/blog/openai-api/

Code

If you've been lucky enough to get access to the OpenAI beta, here's some of my code in case you find it useful:

$openAIBaseURL = "https://api.openai.com/v1/engines/";

$openAIPublishableKey = "TODO";

$openAISecretKey = "TODO";

(*!

\function OpenAIURL

\calltable

OpenAIURL[model, endpointName] '' Produces a URL to an OpenAI API endpoint.

Examples:

OpenAIURL["davinci", "completions"] === "https://api.openai.com/v1/engines/davinci/completions"

\maintainer danielb

*)

Clear[OpenAIURL];

OpenAIURL[model_, endpointName_String] :=

Module[{},

URLBuild[{$openAIBaseURL, model, endpointName}]

]

(*!

\function OpenAICompletions

\calltable

OpenAICompletions[prompt] '' Given a prompt, calls the OpenAI API to produce a completion.

Example:

OpenAICompletions["In 10 years, some people are predicting Tesla's stock price could reach"]

===

" $4,000.

That's a lot of money."

\maintainer danielb

*)

Clear[OpenAICompletions];

Options[OpenAICompletions] =

{

"Model" -> "davinci", (*< The model name to use. *)

"TokensToGenerate" -> 20, (*< The maximum number of tokens to generate. *)

"Temperature" -> 0, (*< Controls randomness / variance. 0 is no variance, 1 is maximum variance. *)

"FormatOutput" -> True, (*< Should the output be graphically formatted? *)

"AutoPrune" -> True (*< Automatically prune what appears to be beyond the first answer? *)

};

OpenAICompletions[prompt_String, OptionsPattern[]] :=

Module[{completion},

completion =

URLExecute[

HTTPRequest[

OpenAIURL[OptionValue["Model"], "completions"],

<|

Method -> "POST",

"Body" -> ExportString[

<|

"prompt" -> prompt,

"max_tokens" -> OptionValue["TokensToGenerate"],

"temperature" -> OptionValue["Temperature"]

|>,

"JSON"

],

"Headers" -> {

"Authorization" -> "Bearer " <> $openAISecretKey,

"Content-Type" -> "application/json"

}

|>

],

"RawJSON"

];

If [FailureQ[completion], Return[completion, Module]];

completion = completion[["choices", 1, "text"]];

$RawOpenAIOutput = completion;

If [TrueQ[OptionValue["AutoPrune"]],

completion = AutoPruneOpenAIOutput[completion]

];

$OpenAIOutput = completion;

If [TrueQ[OptionValue["FormatOutput"]],

completion = FormatCompletion[prompt, completion]

,

completion

]

]
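The JSON body that `OpenAICompletions` sends can be built and inspected without touching the network, which is handy when debugging a prompt. The sketch below mirrors the three fields used above; `CompletionRequestBody` is a hypothetical helper name. The `stop` field is not used in the code above: the completions endpoint accepts a stop sequence to end generation early, which could serve as a server-side alternative to pruning, but treat its use here as an assumption to check against the API documentation.

```wolfram
(* Hypothetical helper: builds the JSON request body without sending it. *)
Clear[CompletionRequestBody];
CompletionRequestBody[prompt_String, maxTokens_Integer, temperature_] :=
  ExportString[
    <|
      "prompt" -> prompt,
      "max_tokens" -> maxTokens,
      "temperature" -> temperature,
      (* Assumption: ask the API to stop at the """ separator. *)
      "stop" -> "\"\"\""
    |>,
    "JSON"
  ]

body = CompletionRequestBody["Description: the maximum of a list\nCode:", 20, 0];

(* Round-trip the JSON to confirm the fields survive serialization. *)
ImportString[body, "RawJSON"]
```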

(*!

\function FormatCompletion

\calltable

FormatCompletion[prompt, completion] '' Formats a completion from a language model.

Examples:

FormatCompletion["In the beginning, God", " created the heavens and the earth."]

===

Framed[

Style[

Row[

{

Style["In the beginning, God", GrayLevel[0.5]],

Style[" created the heavens and the earth.", Bold]

}

],

FontSize -> 16

],

FrameStyle -> GrayLevel[0.5],

FrameMargins -> {{20, 20}, {16, 16}}

]

\maintainer danielb

*)

Clear[FormatCompletion];

FormatCompletion[prompt_String, completion_String] :=

Module[{},

Framed[

Style[

Row[{Style[prompt, Gray], Style[completion, Bold]}],

FontSize -> 16

],

FrameStyle -> Gray,

FrameMargins -> {{20, 20}, {16, 16}}

]

]

(*!

\function OpenAICompletionUI

\calltable

OpenAICompletionUI[] '' A UI for producing completions using the OpenAI API.

\maintainer danielb

*)

Clear[OpenAICompletionUI];

OpenAICompletionUI[] :=

Module[{inputFieldVar, input, output = ""},

Dynamic[

Grid[

{

{

Framed[

#,

FrameMargins -> {{0, 0}, {2, 0}},

FrameStyle -> None

] & @

EventHandler[

Style[

InputField[

Dynamic[inputFieldVar],

String,

FrameMargins -> Medium,

ReturnEntersInput -> False,

ImageSize -> {700, Automatic}

],

FontSize -> 14

],

{"MenuCommand", "HandleShiftReturn"} :>

(

input = StringReplace[inputFieldVar, {"\[IndentingNewLine]" -> "\n"}];

output = OpenAICompletions[

StringTrim[input],

"TokensToGenerate" -> 30

]

)

]

},

{

output

}

}

],

TrackedSymbols :> {inputFieldVar, output}

]

]

(*!

\function AutoPruneOpenAIOutput

\calltable

AutoPruneOpenAIOutput[output] '' Given some output from OpenAI's API, tries to automatically remove what is beyond the next output.

Examples:

AutoPruneOpenAIOutput["Just testing\n\n\"\"\"\n\nMore output"] === "Just testing"

\maintainer danielb

*)

Clear[AutoPruneOpenAIOutput];

AutoPruneOpenAIOutput[outputIn_] :=

Module[{matches, output = outputIn},

matches = StringPosition[

output,

Repeated["\n", {1, Infinity}] ~~ "\"\"\"" ~~ Longest[___]

];

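If [Length[matches] > 0,

(* Cut just before the earliest separator match so that only the first answer is kept, even when the output contains several separators. *)

output = StringTake[output, matches[[1, 1]] - 1]

];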

(* StringTrim right side *)

StringReplace[

output,

WhitespaceCharacter.. ~~ EndOfString :> ""

]

]