Hello,

I have been playing with the new remote batch computation capabilities in Mathematica and have come across speed issues that I do not understand. My calculation involves thousands of Fourier transforms on complex lists with tens of thousands of elements per "step".

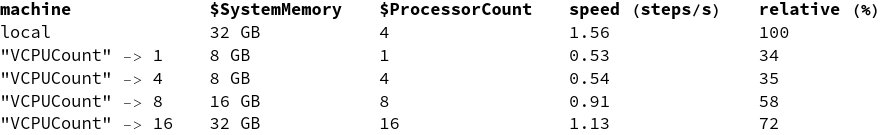

It runs reasonably fast on my local computer with a seven-year-old mobile i7 (4 cores, 8 threads) but much slower on AWS. The speed on AWS also seems to depend on parameters that, as far as I understand, should not affect it. To rule out the jobs running on slower hardware, I created a stack with "c5a" as the only allowed instance type.

All tests were performed with one master kernel and zero subkernels. On my local machine the CPU load is 50 %, so the calculation appears to use all four physical cores. First I tested the performance of sequential single jobs with RemoteBatchSubmit:

The speeds achieved are not great, but still something I could work with. With array batch jobs, though, it gets slower and more confusing.

When running an array job (RemoteBatchMapSubmit, 16 evaluations, 16 jobs, 1 vCPU each), every child job sees just one CPU but 64 GB of memory. The speed started at 0.8 steps/s when only one child was running but dropped to 0.05 steps/s when several were running at once. Should different child jobs influence each other like this?

To test this, I started a single job with "VCPUCount" -> 2. This job reported 128 GB of $SystemMemory and ran at 0.9 steps/s. When I started a second, identical single job, the speed of both running jobs dropped to 0.6 steps/s. After I started a third identical job, the speed of all three dropped to 0.3 steps/s.
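For anyone who wants to reproduce this, here is a minimal sketch of the kind of diagnostic each job could evaluate. It is not my actual calculation: `stepsPerSecond` and its arguments are hypothetical names, and the workload (repeated `Fourier` calls on one random complex list) is only a stand-in for a real "step". It reports what the kernel sees via `$ProcessorCount` and `$SystemMemory`, together with a measured rate.

```mathematica
(* Hypothetical stand-in workload: one "step" = nFFT Fourier transforms
   of a random complex list of length len. Returns steps per second. *)
stepsPerSecond[nFFT_Integer, len_Integer, nSteps_Integer] :=
  Module[{data, elapsed},
    data = RandomComplex[{-1 - I, 1 + I}, len];
    (* AbsoluteTiming returns {seconds, result}; only the time is needed *)
    elapsed = First[AbsoluteTiming[
      Do[Do[Fourier[data], {nFFT}], {nSteps}]
    ]];
    nSteps/elapsed
  ]

(* What this kernel sees, plus the measured rate *)
<|
  "MachineName" -> $MachineName,
  "ProcessorCount" -> $ProcessorCount,
  "SystemMemoryGB" -> N[$SystemMemory/10^9],
  "StepsPerSecond" -> stepsPerSecond[1000, 2^15, 5]
|>
```

Evaluating the same association locally and as the body of each remote job should make it easier to see whether the slowdown tracks the number of jobs sharing a machine (same "MachineName") rather than the vCPU setting.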

Has anyone else experienced these issues, or can anyone explain what is happening? I would love to use this feature, but as of now there is no benefit: the calculation runs much faster locally.

Thank you!