Nice exploration!

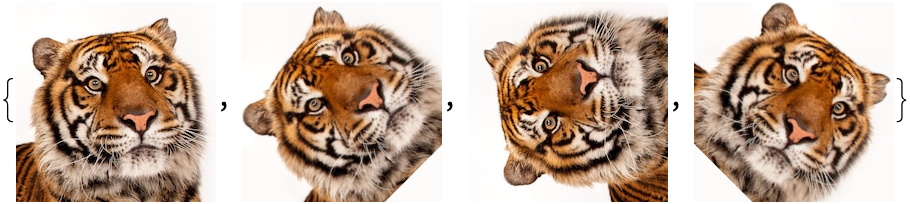

One thing that might be worth trying is to check how the rotation-induced degradation is influenced by the rather sharp transition to the black background.

You can pad the original image (or find one with more background):

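    (* import the test image *)
    img = Import["https://i.natgeofe.com/n/6490d605-b11a-4919-963e-f1e6f3c0d4b6/sumatran-tiger-thumbnail-nationalgeographic_1456276.jpg?w=200"];
    
    (* pad 50 pixels on every side with the image's off-white background color *)
    img = ImagePad[img, 50, RGBColor[{252, 251, 249}/255]];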

and then create a fixed-size rotated crop:

    (* rotate by each angle; the third argument fixes the output size *)
    Table[ImageRotate[img, d Degree, 200], {d, {0, 45, 90, -45}}]