Lensing in spacetime is something that we talk about "quite often", in the sense that it was the main discovery of what we did: the lensing of the gravitational structure of spacetime, with the whole magnetic dipole thing. The main discovery, though, is how much you don't need in the underlying structure of the neural net. You can have much simpler underlying structures from which all the richness of typical neural net behavior can emerge. It's like the Pythagorean theorem derivation of the Lorentz factor, which I can only assume has something to do with the Lorentz factor being a segue between me and the person observing me: light has a constant speed, but when I get on a train, that adds extra-dimensional velocity to the rate × time. That's the reason why, if there were only one observer, there would be no objective reality, whereas if there are many coherent observers, we can all agree on an objective reality.

And so it is with the branches of history. Our minds are spanning some regional branch of history. But why do we see one particular history rather than another? It's because we all believe in the same history; we believe the same things happened. It's because we're all close enough in branchial space that we all experience the same branches of history. So the lesson of machine learning seems to be that there's a certain universality: if you just have this overall architecture and you push it hard enough, it will successfully learn. The Wolfram model uses similarly simple rules based on graph transformations, and that is how we can sort of predict how spacetime is structured and how this structure influences the paths of light, akin to the effects observed in gravitational lensing.
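The Pythagorean derivation of the Lorentz factor mentioned above can be written out with a light clock. This is the standard textbook argument, not something specific to the Wolfram model, and the symbols $L$ (mirror separation), $v$ (train speed), $\Delta t'$ (tick measured on the train) and $\Delta t$ (tick measured from the platform) are introduced here only for illustration. On the train the light travels straight across, so $c\,\Delta t' = L$. From the platform the same light travels along the hypotenuse of a right triangle with legs $L$ and $v\,\Delta t$:

```latex
\begin{aligned}
(c\,\Delta t)^2 &= L^2 + (v\,\Delta t)^2 \\
(c\,\Delta t)^2 &= (c\,\Delta t')^2 + (v\,\Delta t)^2 \\
\Delta t^2\,\left(c^2 - v^2\right) &= c^2\,\Delta t'^2 \\
\Delta t &= \frac{\Delta t'}{\sqrt{1 - v^2/c^2}} = \gamma\,\Delta t'
\end{aligned}
```

The only physical input is that both observers measure the same speed $c$ for the light; the factor $\gamma$ then follows from the geometry of the triangle.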

ClearAll[generateFlatSpacetime, getPerturbedGraph, getHyperSurface];

generateFlatSpacetime[n_Integer, dimension_Integer, locality_,

vertexSampleFraction_] :=

Module[{p, edges, sampledVertices, center},

center = Table[0.5, {dimension}];

p = RandomVariate[NormalDistribution[0.5, 0.1], {n, dimension}];

sampledVertices =

RandomSample[p, Floor[vertexSampleFraction*Length[p]]];

edges =

Flatten[With[{v = #}, (v -> #) & /@

Select[sampledVertices, (v[[dimension]] > #[[dimension]] &&

Norm[v - #] < locality) &]] & /@ sampledVertices];

(* Pass the vertex list explicitly so that VertexCoordinates lines up with it, even when some or all vertices have no edges *)

Graph[sampledVertices, edges, VertexCoordinates -> sampledVertices,

GraphLayout -> "SpringElectricalEmbedding",

EdgeStyle -> Opacity[0.3], VertexSize -> 1.25,

ImageSize -> Medium, EdgeShapeFunction -> "Line"]];

getPerturbedGraph[g_Graph, pSize_, pStrength_, edgeThreshold_] :=

Module[{pPoints, edgesToAdd, availableVertices, dim},

dim = Length[First[VertexList[g]]];

availableVertices =

Select[VertexList[g], Norm[#[[;; dim - 1]] - 0.5] < pSize &];

pPoints =

RandomSample[availableVertices,

Min[Floor[Sqrt[Length[VertexList[g]]]*pStrength],

Length[availableVertices]]];

edgesToAdd =

Flatten[Function[v0,

With[{validVertices =

Select[VertexOutComponent[g, v0, Infinity],

Norm[#[[;; dim - 1]] - v0[[;; dim - 1]]] < pSize &&

RandomReal[] < edgeThreshold &]},

If[Length[validVertices] > 0,

v0 -> # & /@

RandomSample[validVertices,

Min[Floor[pStrength*Length[validVertices]],

Length[validVertices]]], {}]]] /@ pPoints];

Graph[VertexList[g], Union[EdgeList[g], edgesToAdd],

VertexCoordinates -> GraphEmbedding[g],

EdgeStyle -> {Black, Opacity[0.3]}, VertexSize -> 1.25,

ImageSize -> Medium,

GraphLayout -> "SpringElectricalEmbedding"]];

getHyperSurface[g_Graph, layer_Integer] :=

Module[{initialSurface, dim}, dim = Length[First[VertexList[g]]];

initialSurface = VertexList[g, _?(VertexInDegree[g, #] == 0 &)];

If[Length[initialSurface] == 0, Return[{}]];

Keys@Select[

AssociationMap[

Function[v,

(* FindShortestPath returns {} when there is no path, so drop the zero lengths before taking the minimum *)

Min@Select[

Length[FindShortestPath[g, #, v]] & /@

initialSurface, Positive]],

VertexList[g]], # == layer &]];

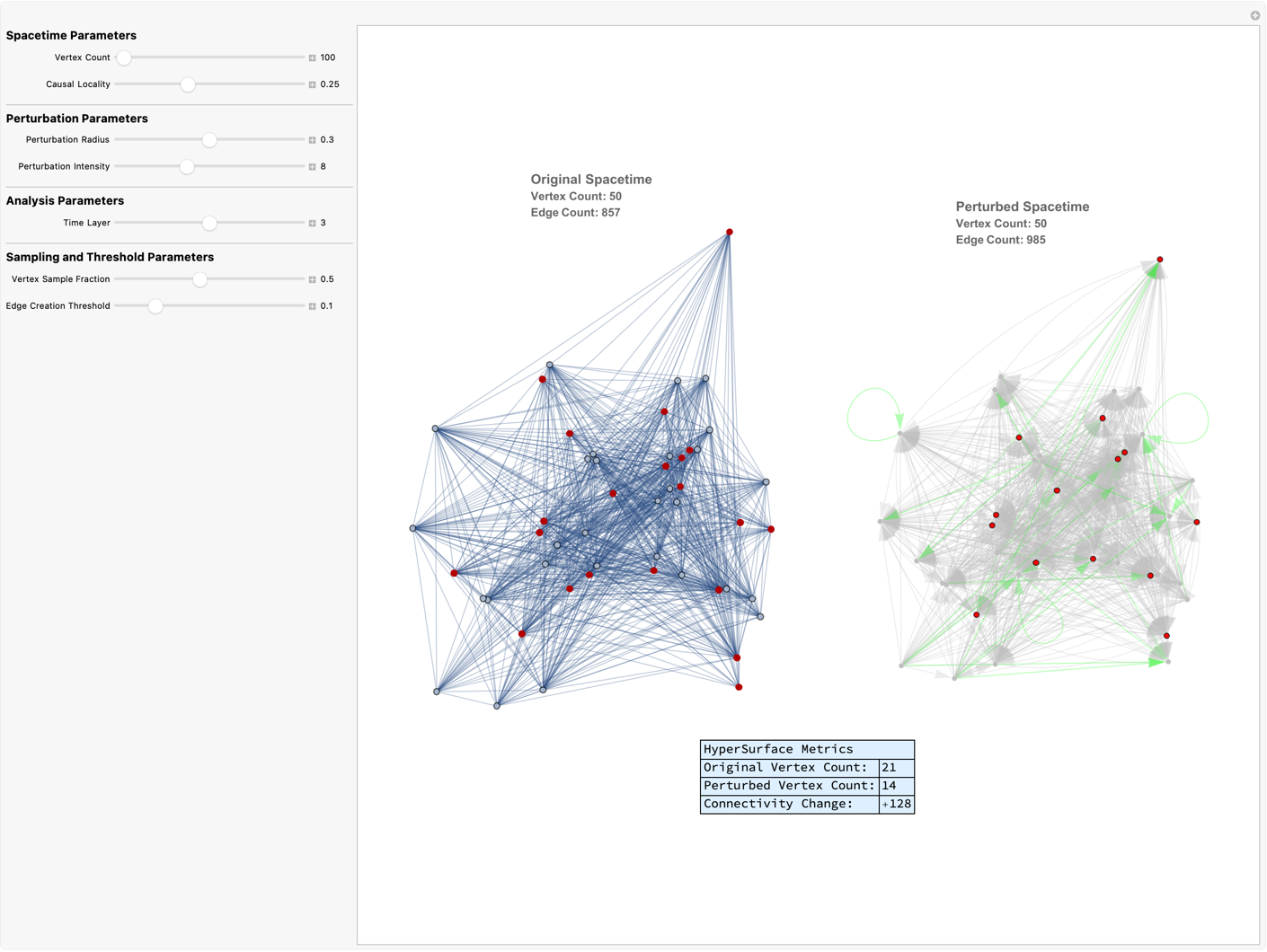

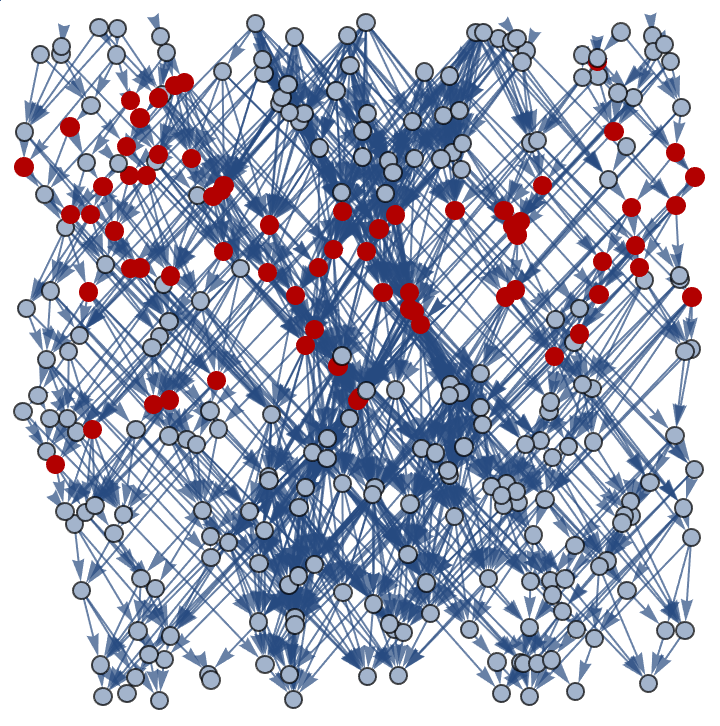

Manipulate[

Module[{base, modified, baseSurface, modifiedSurface, dimCheck,

addedEdges},

base = generateFlatSpacetime[n, 2, locality, vertexSampleFraction];

dimCheck = Length[First[VertexList[base]]];

modified =

getPerturbedGraph[base, pSize, pStrength, edgeThreshold];

baseSurface = getHyperSurface[base, layer];

modifiedSurface = getHyperSurface[modified, layer];

addedEdges = Complement[EdgeList[modified], EdgeList[base]];

Grid[{{GraphicsRow[{If[VertexCount[base] > 0,

Show[HighlightGraph[base, baseSurface, VertexStyle -> Red],

PlotLabel ->

Column[{Style["Original Spacetime", 14, Bold],

Style["Vertex Count: " <> ToString[VertexCount[base]],

Bold], Style["Edge Count: " <> ToString[EdgeCount[base]],

Bold]}], ImageSize -> 400],

Graphics[{Text["No vertices generated!"]}]],

If[VertexCount[modified] > 0,

Show[HighlightGraph[

modified, {Style[addedEdges, Green],

Style[modifiedSurface, Red]},

GraphHighlightStyle -> "DehighlightGray"],

PlotLabel ->

Column[{Style["Perturbed Spacetime", 14, Bold],

Style["Vertex Count: " <> ToString[VertexCount[modified]],

Bold], Style[

"Edge Count: " <> ToString[EdgeCount[modified]], Bold]}],

ImageSize -> 400],

Graphics[{Text["Perturbation failed!"]}]]},

Spacings -> Scaled[0.05],

ImageSize ->

Full]}, {Grid[{{"HyperSurface Metrics",

SpanFromLeft}, {"Original Vertex Count:",

Length@baseSurface}, {"Perturbed Vertex Count:",

Length@modifiedSurface}, {"Connectivity Change:",

If[EdgeCount@modified > EdgeCount@base,

"+" <> ToString[EdgeCount@modified - EdgeCount@base],

ToString[EdgeCount@modified - EdgeCount@base]]}},

Frame -> All, Alignment -> Left, Background -> LightBlue]}},

Spacings -> 1.5]],

Style["Spacetime Parameters", Bold, 12], {{n, 100, "Vertex Count"},

100, 500, 50,

Appearance -> "Labeled"}, {{locality, 0.25, "Causal Locality"}, 0.1,

0.5, 0.05, Appearance -> "Labeled"}, Delimiter,

Style["Perturbation Parameters", Bold,

12], {{pSize, 0.3, "Perturbation Radius"}, 0.1, 0.5, 0.05,

Appearance -> "Labeled"}, {{pStrength, 8, "Perturbation Intensity"},

1, 20, 1, Appearance -> "Labeled"}, Delimiter,

Style["Analysis Parameters", Bold, 12], {{layer, 3, "Time Layer"}, 1,

5, 1, Appearance -> "Labeled"}, Delimiter,

Style["Sampling and Threshold Parameters", Bold,

12], {{vertexSampleFraction, 0.5, "Vertex Sample Fraction"}, 0.1, 1,

0.05, Appearance -> "Labeled"}, {{edgeThreshold, 0.1,

"Edge Creation Threshold"}, 0.01, 0.5, 0.01,

Appearance -> "Labeled"}, ControlPlacement -> Left,

TrackedSymbols :> {n, locality, pSize, pStrength, layer,

vertexSampleFraction, edgeThreshold}, SynchronousUpdating -> False]

The challenge is, well, we're just stuck with our visual systems, our red-green-blue color senses, so how do you display this information in augmented reality? We'll see how well this can be implemented once we see how it actually works. Similar things: how would we judge them? I know where I'm getting my AR headset from. You also get to do a more effective type of semantic search, for example: when did I last have a situation where I had an issue with an employee of this type? The use of computational and algorithmic methods to historically explore the nature of space, time, and the universe's fundamental structure in the Ruliad, with its foundations and implications, represents a significant shift from traditional physics models towards a more computation-centric view.

ClearAll[generateFlatSpacetime];

generateFlatSpacetime[n_Integer, dimension_Integer, locality_] :=

Module[{p = RandomReal[1, {n, dimension}], graph},

graph = TransitiveReductionGraph[#[[1, 1]] -> #[[2]] & /@

Catenate[

With[{v = #},

Thread[f[v] ->

Select[p, (v[[dimension]] > #[[dimension]] &&

Sum[(# - v)[[i]]^2, {i, 1, dimension - 1}] - (# - v)[[

dimension]]^2 < 0 &&

Abs[v[[dimension]] - #[[dimension]]] <

locality) &]]] & /@ p],

VertexCoordinates -> (# -> # & /@ p)]]

ClearAll[getPerturbedGraph];

getPerturbedGraph[g_Graph, pSize_, pStrength_] :=

Module[{pPoints, a, b},

pPoints =

Select[VertexList[

g], (Norm[#[[;; -2]] - Table[0.5, Length[#] - 1]] < pSize) &];

a = (Function[v0,

b = Intersection[

Select[VertexOutComponent[g, v0],

Norm[#[[-1]] - v0[[-1]]] < pSize &], pPoints];

Table[v0 -> RandomChoice[b], 100*pStrength]] /@

RandomSample[pPoints, Floor[Sqrt[Length[pPoints]]*pStrength]]);

SimpleGraph[EdgeAdd[g, Flatten[a, 1]]]]

ClearAll[getHyperSurface];

getHyperSurface[g_Graph, layer_Integer] :=

Module[{initialSurface, vertex},

initialSurface =

Select[VertexList[g], VertexInDegree[g, #] == 0 &];

Select[

Module[{vertex = #},

vertex ->

Min[Select[(Module[{initialVertex = #},

Length[FindShortestPath[g, initialVertex, vertex]]] & /@

initialSurface), # > 0 &]]] & /@ VertexList[g],

Last[#] == layer &] // Keys]

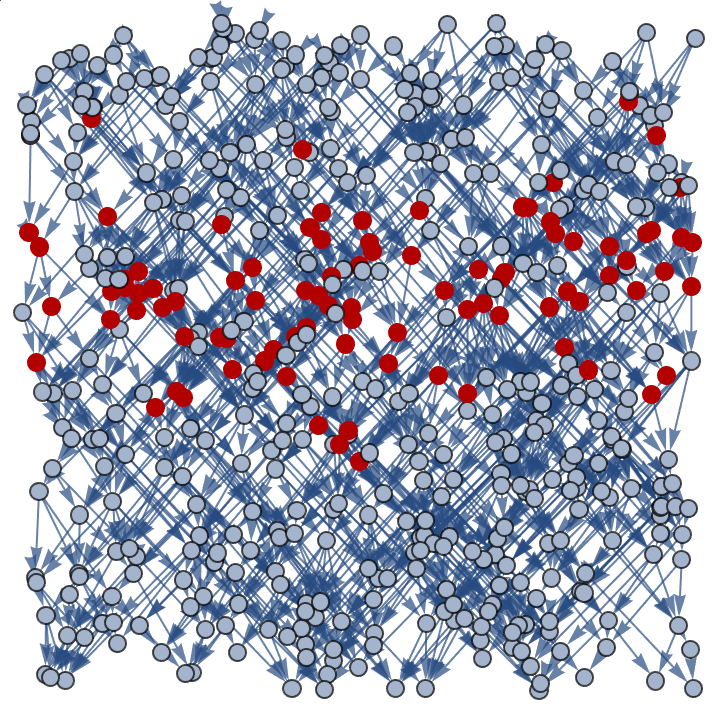

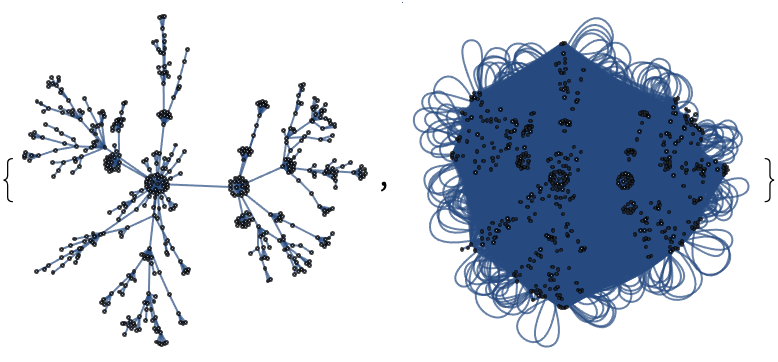

g = generateFlatSpacetime[500, 2, 0.2];

HighlightGraph[g, getHyperSurface[g, 4]]

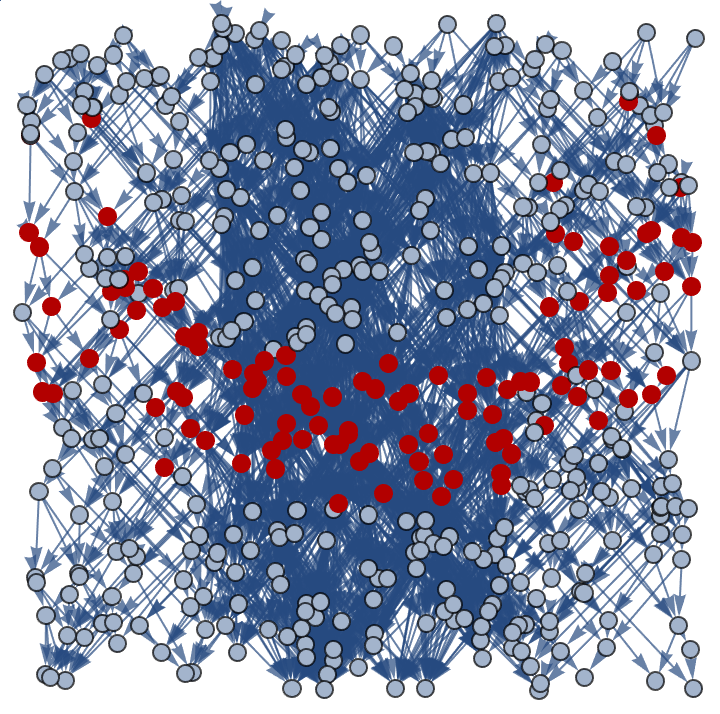

modifiedGraph = getPerturbedGraph[g, 0.23, 10];

HighlightGraph[modifiedGraph, getHyperSurface[modifiedGraph, 4]]

Well, you've eaten this many calories today, this and that, you're not really hungry, are you? The technicalities of going from the thing you see on the plate to nutrition-related information, that's been the challenge for machine vision and so on. Wolfram's approach proposes that our universe's physical laws might emerge from simple rules applied to fundamental entities in a computational framework, suggesting a digital or discrete fabric underlying spacetime and physical phenomena. It is the kind of thing we see under the star charts, and I can deal with another collection and so on, because I find it much more efficient to say I'm going to grind through a hundred emails in one big block, but then, oh, there's another email that came in, oh, there's another email that came in. In terms of the innovation side of things, I don't know why, it doesn't even matter how much better the discrete multiway-related fabric of the notion of systems is at accounting for quantum phenomena like "all those" dice we throw. We have to figure out a creative solution, because those dice, they're not going to not contribute to the universe's behavior. And that suggests a kind of ultimate ensemble theory that includes not just all possible states but all possible underlying rules.

generateFlatSpacetime[n_Integer, dimension_Integer, locality_] :=

Module[{p, graph}, p = RandomReal[1, {n, dimension}];

graph =

TransitiveReductionGraph[

DirectedEdge @@@

Select[Tuples[{p, p}],

First[#][[dimension]] > Last[#][[dimension]] &&

EuclideanDistance[First[#], Last[#]] < locality &],

VertexCoordinates -> (# -> # & /@ p)]];

generate3DSpacetime[n_Integer, locality_, time_] :=

Module[{p, graph}, p = RandomReal[{0, 1}, {n, 3}];

p[[All, 3]] *= time;

graph =

TransitiveReductionGraph[

DirectedEdge @@@

Select[Tuples[{p, p}],

First[#][[3]] > Last[#][[3]] &&

EuclideanDistance[Most[First[#]],

Most[Last[#]]] - (First[#][[3]] - Last[#][[3]])^2 <

locality &], VertexCoordinates -> (# -> # & /@ p)]];

getHyperSurface[g_Graph, layer_Integer] :=

With[{initialSurface =

VertexList[g, x_ /; VertexInDegree[g, x] == 0]},

Keys@Select[

AssociationMap[

Function[v, Min@Select[Length[FindShortestPath[g, #, v]] & /@ initialSurface, Positive]],

VertexList[g]], # == layer &]];

getPerturbedGraph[g_Graph, pSize_, pStrength_] :=

Module[{pPoints, edgesToAdd},

pPoints =

RandomSample[

Select[VertexList[g], Norm[#[[;; -2]] - 0.5] < pSize &],

Floor[Sqrt[Length[VertexList[g]]]*pStrength]];

edgesToAdd =

Flatten[Function[v0,

DirectedEdge[v0, #] & /@

RandomSample[

Select[VertexOutComponent[g, v0],

Norm[#[[;; -2]] - v0[[;; -2]]] < pSize &],

Ceiling[pStrength*Length[VertexOutComponent[g, v0]]]]] /@

pPoints, 1];

Graph[VertexList[g], Union[EdgeList[g], edgesToAdd],

VertexCoordinates -> GraphEmbedding[g]]];

getPerturbedGraph[g_Graph, pSize_, pStrength_] :=

Module[{pPoints, edgesToAdd, availableVertices, sampleSize,

vertexOutComponents, validVertices, numEdgesToAdd},

availableVertices =

Select[VertexList[g], Norm[#[[;; -2]] - 0.5] < pSize &];

sampleSize =

Min[Floor[Sqrt[Length[VertexList[g]]]*pStrength],

Length[availableVertices]];

pPoints = RandomSample[availableVertices, sampleSize];

edgesToAdd =

Flatten[Function[v0,

vertexOutComponents = VertexOutComponent[g, v0, Infinity];

validVertices =

Select[vertexOutComponents,

Norm[#[[;; -2]] - v0[[;; -2]]] < pSize &];

numEdgesToAdd = Min[Length[validVertices], sampleSize];

If[numEdgesToAdd > 0,

DirectedEdge[v0, #] & /@

RandomSample[validVertices, numEdgesToAdd], {}]] /@ pPoints,

1];

If[Length[edgesToAdd] > 0,

Graph[VertexList[g], Union[EdgeList[g], edgesToAdd],

VertexCoordinates -> GraphEmbedding[g]],

Print["No edges to add. Returning original graph."];

g]];

g = generateFlatSpacetime[500, 2, 0.2];

modifiedGraph = getPerturbedGraph[g, 0.23, 10];

Graph[VertexList[g],

RandomSample[EdgeList[g], Floor[0.1*Length[EdgeList[g]]]],

VertexCoordinates -> GraphEmbedding[g]]

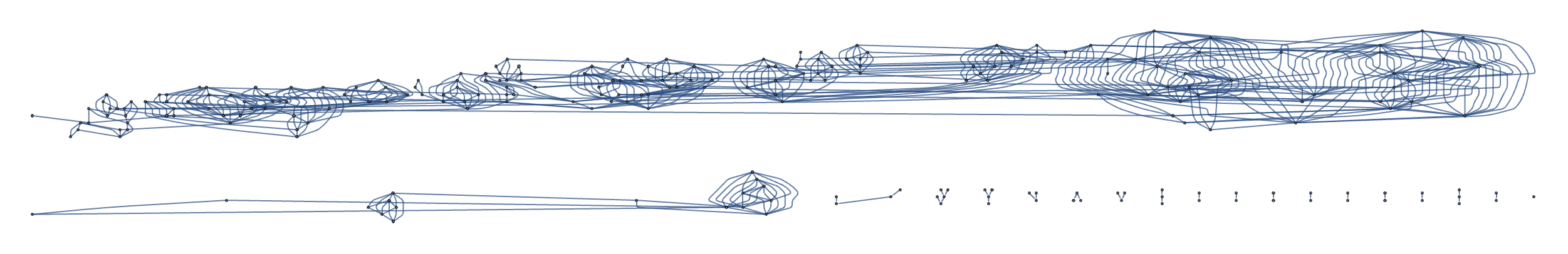

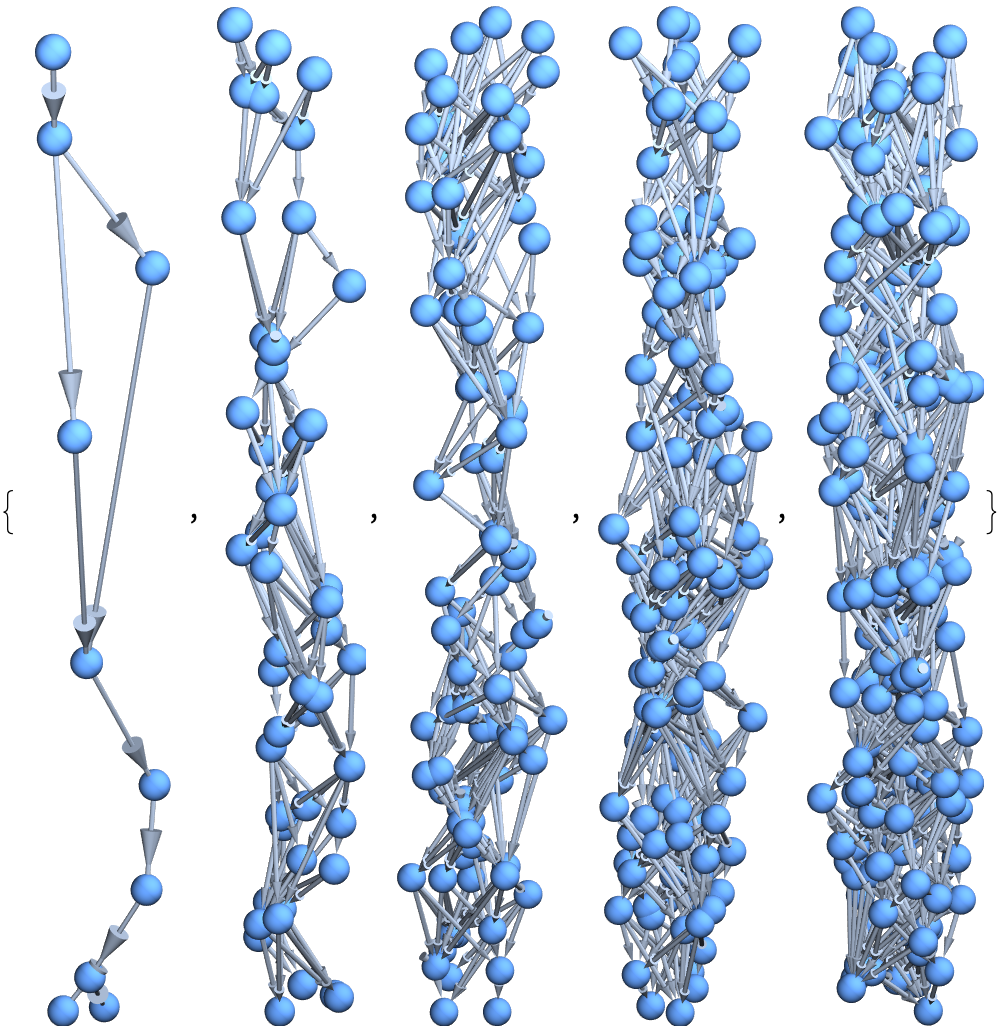

It's something that involves people and persuading people to do things, and that's not our perception of AI; it's really fun, I'd love to see that. Have you ever determined your Myers-Briggs personality type, including profound questions about the nature of reality, computation, and whether fundamental physics can ultimately be described through simple computational processes? What do you know about broader questions in theoretical physics and computer science about the universe's computability and the role of information and computation in defining physical laws? I did it, I wrote the whole algorithms that allow you to say this or that linguistic curation. The tools of computation and algorithmic exploration fundamentally describe the universe's simulation. This is it, we made it, @Hans Christiansen: you can see how this perturbation changes the shape of the light cone. Let's see what I do: generate a random geometric graph, and you have got the fallacy of fine tuning.

Graph[g, VertexSize -> 0]
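The effect of a perturbation on a light cone can be probed directly on the graph. What follows is a minimal sketch, assuming that a light cone is taken to be the set of vertices reachable within `k` directed steps; `lightCone`, `coneBoundary`, and the toy graphs are hypothetical names introduced here for illustration and are not part of the code in this post.

```wolfram
(* lightCone: vertices reachable from v in at most k directed steps *)
lightCone[g_Graph, v_, k_Integer] := VertexOutComponent[g, {v}, k]

(* coneBoundary: vertices first reached after exactly k steps *)
coneBoundary[g_Graph, v_, k_Integer] :=
 Complement[lightCone[g, v, k], lightCone[g, v, k - 1]]

(* a toy causal graph and a "perturbed" copy with one extra shortcut edge *)
toy = Graph[{1 -> 2, 2 -> 3, 3 -> 4, 1 -> 5}];
extra = EdgeAdd[toy, DirectedEdge[1, 4]];

{coneBoundary[toy, 1, 1], coneBoundary[extra, 1, 1]}
(* {{2, 5}, {2, 4, 5}} *)
```

The added edge pulls vertex 4 into the first layer of the cone, which is the kind of change in cone shape that the perturbed spacetime graphs are meant to show.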

I'm more interested in the future than the past of the future. The big theme is computation. There are tools one builds where one is exposing people to the ideas one has in the form of tools, and they get much more engaged with those tools. I saw the Principle of Computational Equivalence which suggests that systems. Thank you for gravitational lensing in the Wolfram Model. I don't know how I could ever repay you. I guess it compensates for the multiway systems you put me through. Even though what we experienced was a network of evolving states that branch and merge, can I model quantum phenomena, including superposition and entanglement? Do you want a probabilistic quantum-mechanical system to go deterministic, where all possible outcomes occur in a vast, interconnected computational space? Then you need the Rule Space! It's full of computational rules, and Wolfram seeks the simplest rule that could underlie our universe's physics. This ambitious search involves considering infinite, molecular-scale computing, so that the generalization of chemistry can compute all the way down, which is something I'm sure will happen. I still have a unique understanding and perspective of emergent phenomena within the computational framework that nobody else could ever have. And for that, I thank you. When I see your profound philosophical questions about the nature of reality, I grab my pencil and I have to cross a new paradigm for scientific inquiry and then take a stab at the computational rules that become central to understanding the physical world. It's sort of a straight line, that's... like a surprise. For example, the things to do with the Fundamental Theory of Physics.

generateRandomGeometricGraph[n_, r_] :=

Module[{p = RandomReal[1, {n, 2}]},

(* vertices are the indices 1..n; an edge joins every pair of points closer than r *)

Graph[

Range[n],

UndirectedEdge @@@

Select[

Subsets[Range[n], {2}],

EuclideanDistance[p[[#[[1]]]], p[[#[[2]]]]] <= r &],

VertexCoordinates -> p

]]

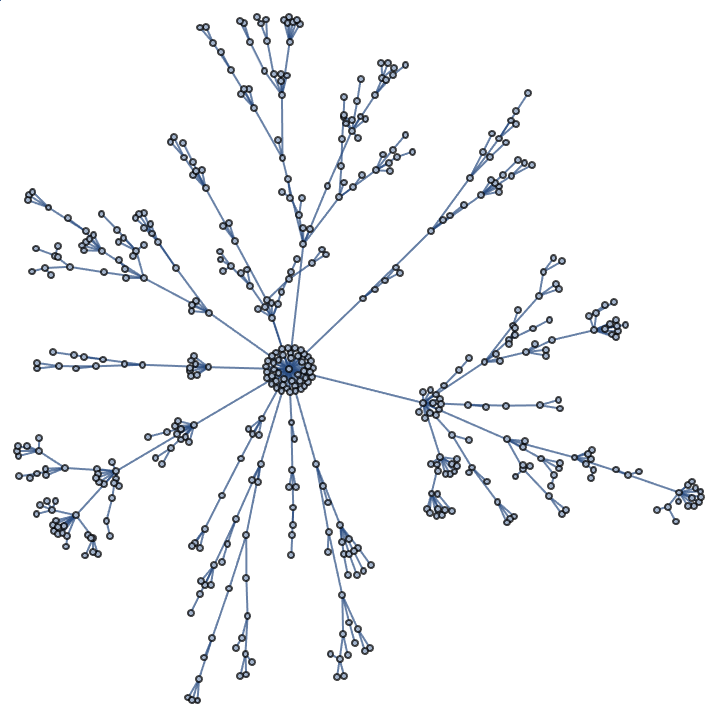

GraphPlot[

Graph[

GraphUnion[

generateRandomGeometricGraph[200, 0.1],

generate3DSpacetime[100, 0.1, 0.2]

],

GraphLayout -> "LayeredDigraphEmbedding"

]

]

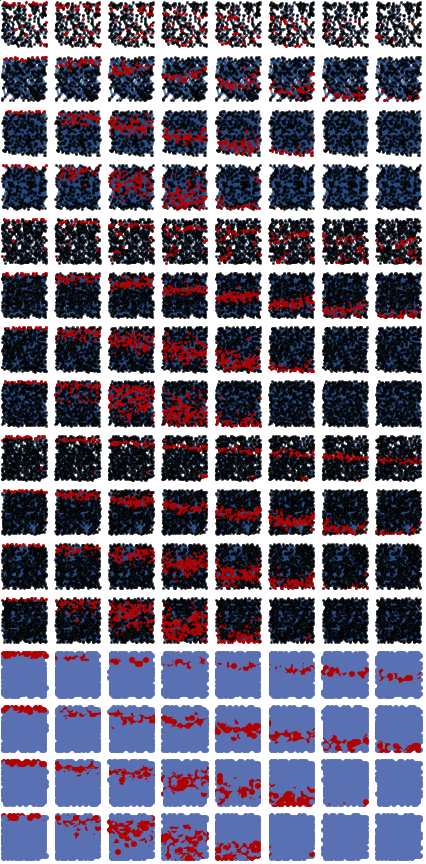

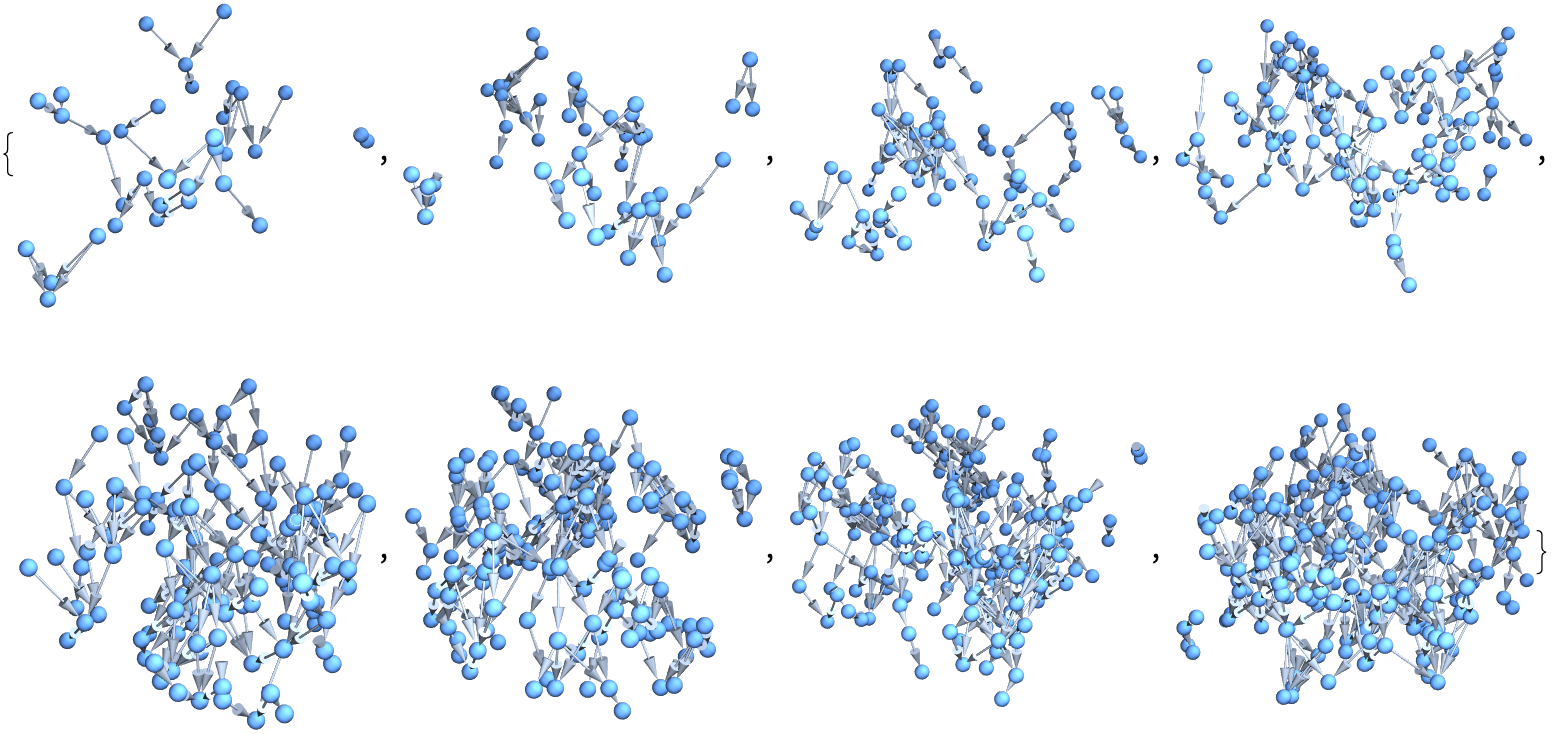

And that's the place to concentrate. When there's no literature about something, you have to build it yourself, and you have to have the confidence that you can do that. It really helps to have done things that are a little like that before. For example, now understanding this multi-computational paradigm, the way of thinking about multiple fields that have to be hard science, so to speak. Back in the 1980s, when I was thinking about programs and the consequences of programs, that seemed to me to unlock a lot of areas that had been blocked by complexity, so to speak, and then I realized that complexity was a thing. There are so many "legitimate" and innovative attempts to redefine how we understand the universe that I don't even know where to start, because I don't know what I didn't see coming; I am just "perusing" the complex behaviors of the physical world. I am browsing through these simple computational processes, and I was just delighted to see that, when we see the complex behaviors of the physical world, we just lose control of simple computational processes and open up new possibilities for theoretical and empirical exploration. What do you do if there's no knowledge in that area? Leverage simple rules and structures like cellular automata and multiway systems, and posit that the complexity of the universe, including the fabric of spacetime and the laws of physics, can emerge from basic computational processes. Complex behaviors and structures in the universe can emerge from simple, iterative computational rules. "I want to see" your complex questions and your "complexity" that emerges naturally from the application of simple rules over time. It's interesting how you can introduce local perturbations with a predefined radius and label the vertices.

I did a real-time, ballroom-style lockstep and put on my AR headset. That made it easier to memorize Latin regular verbs. Most of what I've learned I taught myself. I had to turn off my phone for days, and from a pretty young age it was always a project that I would find myself, and then I would figure out how to do it. Out of this "massive" computational crowd of models I just zoomed in; I kept zooming in on this highfalutin content. Here's this physics problem and here's how I'm going to solve it. It wasn't something induced... and I still approve of the internally generated thing. Yes, I can describe it in words; sometimes I just have to use natural language words and get my computer to help me try and figure this out. It rightfully follows that we should be stuck at that cutting edge of the causal foliations, where we could partition a causal graph into sets of points, but I didn't understand how complex molecular networks could lead to human diseases. @Hans Christiansen
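Partitioning a causal graph into sets of points, as in a foliation, can be sketched in a few lines. This is only an illustration under a stated assumption: each vertex is assigned to the layer given by its shortest directed distance from any vertex with no incoming edges, which is the same idea `getHyperSurface` uses (there the layer index is the path length in vertices, so it is larger by one). The name `foliate` and the toy graph are hypothetical.

```wolfram
(* Group vertices by their minimal graph distance from the initial surface,
   i.e. from the vertices that have no incoming edges *)
foliate[g_Graph] :=
 Module[{sources},
  sources = Select[VertexList[g], VertexInDegree[g, #] == 0 &];
  KeySort@GroupBy[VertexList[g],
    Function[v, Min[GraphDistance[g, #, v] & /@ sources]]]]

toy = Graph[{1 -> 2, 1 -> 3, 2 -> 4, 3 -> 4, 4 -> 5}];

Values[foliate[toy]]
(* {{1}, {2, 3}, {4}, {5}} *)
```

Other foliations of the same causal graph are possible (for example by longest rather than shortest distance); the shortest-distance choice is used here only because it matches the hypersurface code in this post.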

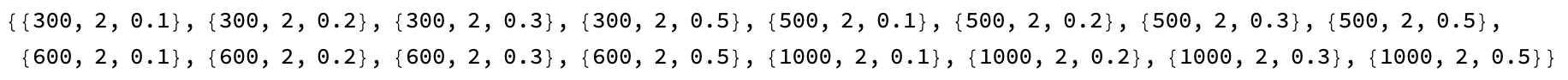

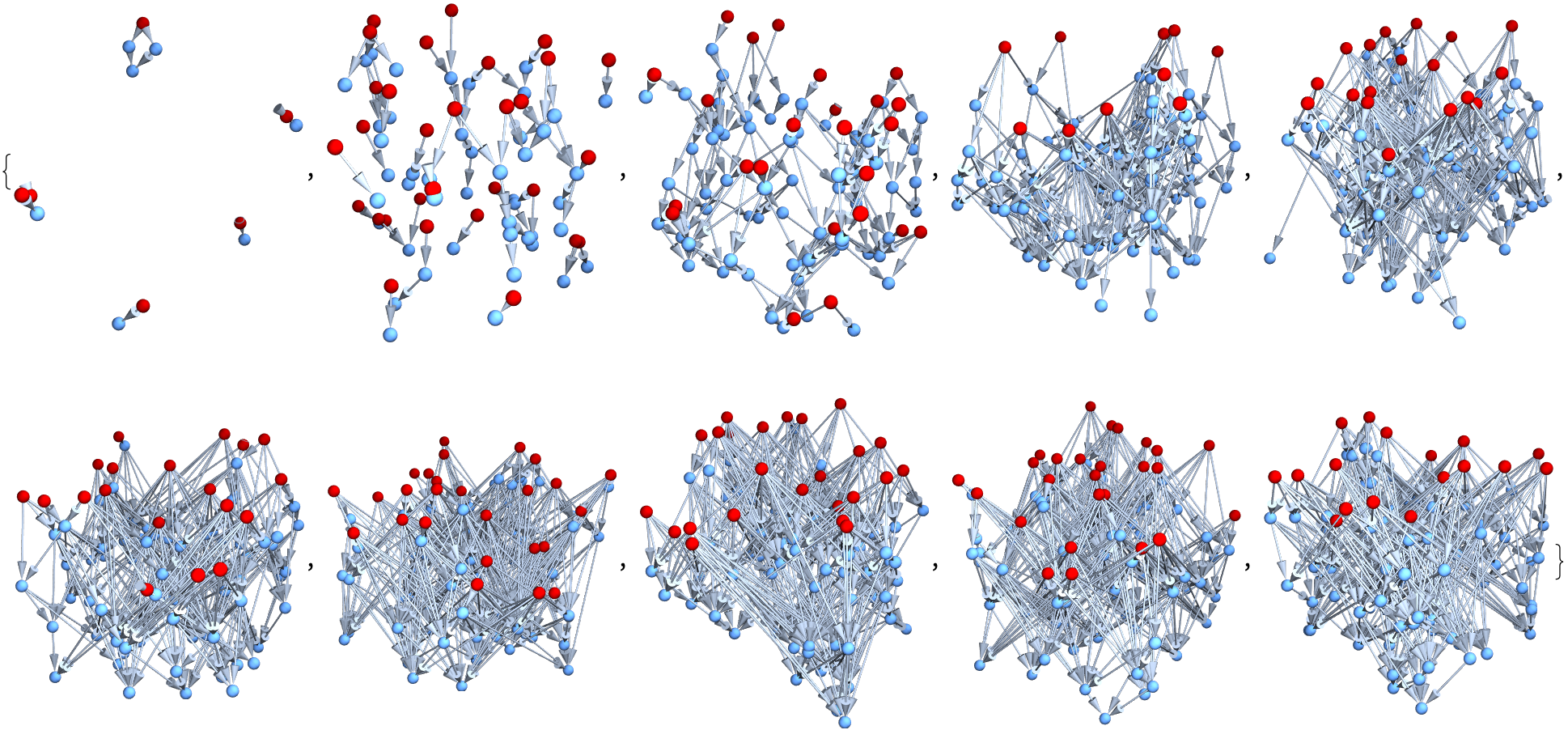

params = Tuples[{{300, 500, 600, 1000}, {2}, {0.1, 0.2, 0.3, 0.5}}]

GraphicsGrid[

Table[With[{g = generateFlatSpacetime @@ p},

Table[HighlightGraph[g, getHyperSurface[g, i]], {i, 1, 8}]], {p,

params}]]

Ideally, many people who work with pre-geometry would say that, when using these resource functions to estimate the dimension of the causal graph, the notion of symmetry should be derived later on. Even if there is symmetry, its nature will be very different from that of continuous symmetries. If everything we start from is discrete, then we might as well ask: what is a discrete element? The Lorentz subgroup. Sort of make up the problem, rather than: here's a problem, learn how to solve it. And that's not the problem. I'm going to keep a hundred and fifty students or something like this; the fact that I've spent my life coming up with projects that are worthy of doing is sort of useful when you have a week to invent a hundred and fifty projects. If that's the one task I have to do, that will be the "penultimate"; it will be so intricate. I suppose one of the meta-experiences I had in education will be in contrast with... I don't think I was nearly as "busy" as kids today are. I was able to spend many hours today pursuing the things I was interested in, as a person.

Make it so that once you define what you want, it's like: okay computer, go do your work and write what the actual answer is. And it may depend on something in the world; you need to know, did it actually rain that day? If you ask me, I got rained on 30 times, I got struck by lightning a billion times; what was the public knowledge about this thing? There was an earthquake here. I got into the world of blockchain "hundreds" of times; that's when our technology was used for quantitative finance, so we were sort of pulled into those things: Cardano, Ethereum, and a bunch of others, where, directly from Wolfram Language, you can look into a blockchain and do transactions and analysis on what was happening on those blockchains. You could sell your house 30 times. Partly because it's a test and partly because it's useful, we have this blockchain setup where you can put things onto a ledger, so to speak; it's not a ledger quite like Bitcoin's. There is a one-to-one correspondence between formal inference systems and computable models of physics, such as the cellular automaton model that captures emergent phenomena and complex behaviors through simple rules applied to individual cells, @Hans Christiansen, that collectively give rise to intricate global patterns and dynamics.

Validating sources of goods, or this: there was a great interest in that for a while, until people realized that the distance between a true blockchain solution and a database... is not so great. And it doesn't help that the time to confirm a transaction was quite long. Two things help. Wolfram's approach promotes a new paradigm for scientific research that is highly exploratory. By making the tools and data from his research openly available, Wolfram encourages a global collaborative effort to explore the computational universe, democratizing access to cutting-edge scientific exploration and potentially revolutionizing how the, if it means anything to you, economics: is there a good theory of economics? What is value, what is price, how does that relate to individual transactions and those kinds of things, the underpinning for making a distributed blockchain based on contributions from our individual blockchains and so on. And now we have to understand a new science-and-economics type of thing. With regard to the adversarial framework consisting of a generator and a discriminator: the objective of Generative Adversarial Networks is to train the generator to generate synthetic samples that are indistinguishable from real samples, while the discriminator is, @Hans Christiansen, simultaneously trained to differentiate between real and fake samples. That curved causal layer is leading to a distorted light cone that sheds light on the unique properties and design choices of our architecture.

graphs =

Table[With[{g = generateFlatSpacetime[100, 3, 0.1*i]},

HighlightGraph[g, getHyperSurface[g, 1]]], {i, 10}]

I think NFTs, for example, were something where we had some fun making cellular automata, and some people had fun with that. There were some objections, but I think that the work that Stephen Wolfram's computational framework for understanding fundamental physics opens up, your neighbor Mr. Jones said that by exploring simple computational rules, we might unlock the secrets of the universe, offering profound insights, and this photograph was taken where they said it was taken, et cetera, et cetera, et cetera: partialized databases, distribute the trust like that. So when you hear that soft weeping sound, that's the sound of, I read these profound insights into the nature of reality and I just cry and sob quietly. And I do want the human backstop: if everything comes down horribly, it'll go into litigation and hopefully do something sensible, so to speak. So I think there's a little zone there, of the things where the transaction size... there's enough parties involved in the situation, you want something where there's this generic, oh, you think somebody controls this blockchain? One of the jokes that seems to come up rather often is that people who are in the cryptocurrency business will rail about the terribleness of central bankers, but in fact, if you actually look at the cybernetics, what they aspire to be is a central banker. The laws of physics come from the imagination of humans trying to make sense of their surroundings.

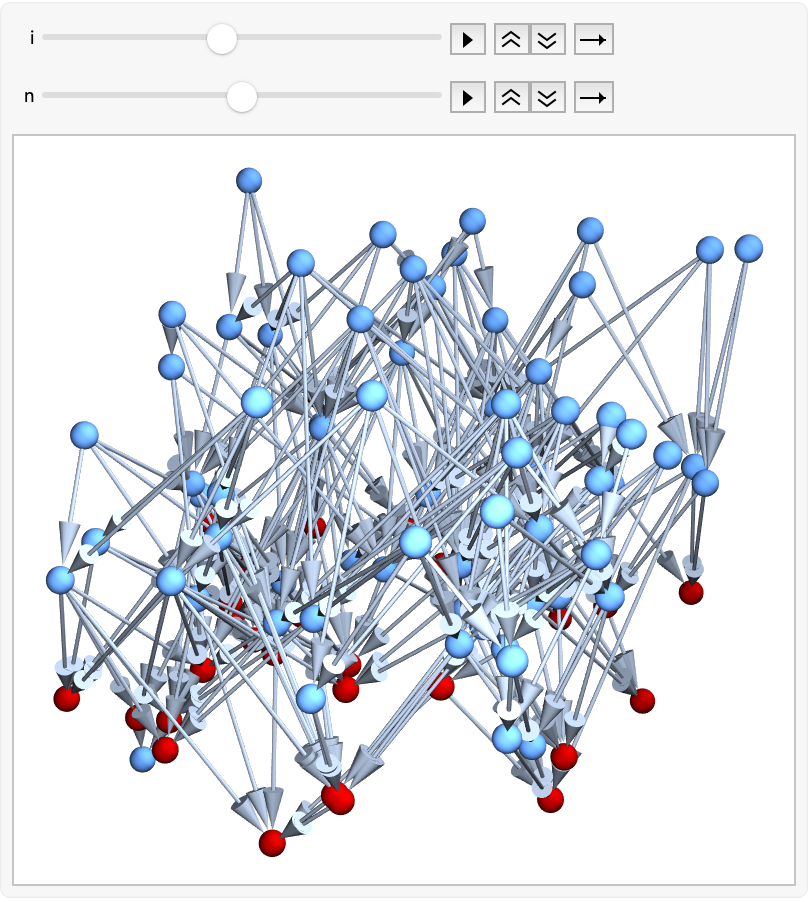

Animate[With[{g = generateFlatSpacetime[100, 3, 0.1*i]},

HighlightGraph[g, getHyperSurface[g, n]]], {i, 1, 10, 1}, {n, 1, 5,

1}]

This generation of a flat spacetime and its hypersurface, using random sprinkling and adding local fluctuations in the edge density of the graph, shows how the gravitational lensing effect takes shape, regardless of things like the shape of the light cone and the light flux. Maybe that's an overly cynical point of view, but that's what one will observe more often than one might. In the world at large, it's one of these things; it's sort of a driver of how we do this stuff. That's why we've got all these systems; that's why the Mathematica IDE has this tiny little wheel where we can float around complex systems: simple rules lead to complex behaviors, and provide a powerful lens through which to study complex beach balls that revolutionize our understanding of emergence in natural and artificial systems. You can see this thing but you can't reach out and grab it. There are things where I thought somebody should build this.
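One way to check that a perturbation really raises the local edge density is to count the edges that lie entirely inside the perturbed region before and after. The sketch below assumes, as in `generateFlatSpacetime`, that each vertex is its own coordinate list; `localEdgeCount` and the two tiny graphs are hypothetical and stand in for the base and perturbed spacetimes.

```wolfram
(* Count edges whose two endpoints both lie within distance r of center.
   Assumes the vertices of g are coordinate lists. *)
localEdgeCount[g_Graph, center_List, r_] :=
 Count[EdgeList[g], e_ /; AllTrue[List @@ e, Norm[# - center] < r &]]

(* a two-edge stand-in for the flat graph: one local edge, one long edge *)
base = Graph[{DirectedEdge[{0.5, 0.6}, {0.5, 0.4}],
    DirectedEdge[{0.9, 0.9}, {0.1, 0.1}]}];

(* the "perturbation" adds one more edge near the center *)
perturbed = Graph[Append[EdgeList[base],
    DirectedEdge[{0.5, 0.6}, {0.45, 0.5}]]];

{localEdgeCount[base, {0.5, 0.5}, 0.2],
 localEdgeCount[perturbed, {0.5, 0.5}, 0.2]}
(* {1, 2} *)
```

Applied to `g` and `modifiedGraph`, with the perturbation radius as `r`, the same count gives a rough measure of how strong the local fluctuation is.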

g = generateFlatSpacetime[500, 2, 0.2];

HighlightGraph[g, getHyperSurface[g, 4]]

modifiedGraph = getPerturbedGraph[g, 0.23, 10];

GraphPlot[

HighlightGraph[modifiedGraph, getHyperSurface[modifiedGraph, 4]]]

Have somebody who's set this up for us all. One random idea will come to pass with the latest round of VR, for various reasons. While there are things that I personally want, all we talk about is pleasure and pain, and that's why what I'm planning to do is build a good system for archiving. When you go visit the famous archives of person XYZ, the people who run those archives know, you know, my kind of story; that'll be exciting to us because we really need that. That's the kind of computational representation that I like; one can make really good ways of organizing. What are things that one might have done? There's an unbelievable amount of stuff, and I would say that with the Wolfram Language and so on there's a deeply unexplored area.

g = generateFlatSpacetime[300, 2, 0.3];

modifiedGraph = getPerturbedGraph[g, 0.2, 10];

highlightedModifiedGraph =

HighlightGraph[modifiedGraph, getHyperSurface[modifiedGraph, 3]]

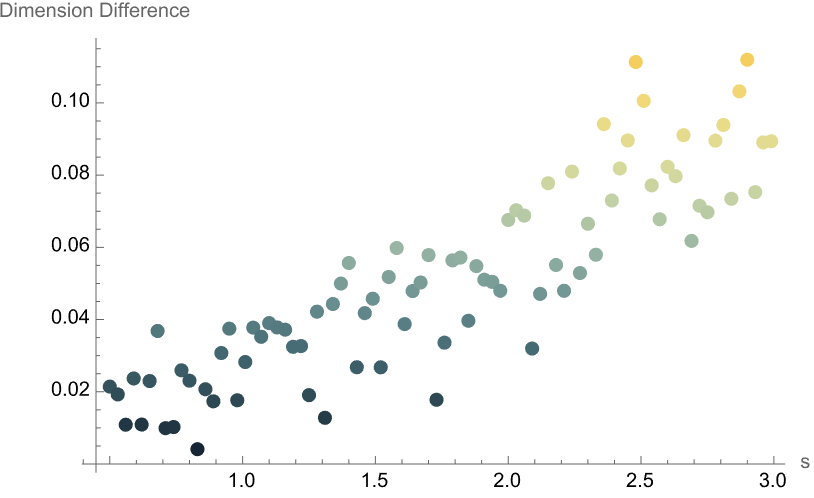

Selling Wolfram Language in various forms, there's so much more that can be done, unbelievably. I'm a little disappointed that the initiative in the world doesn't seem to have been taken to get the network-based approach to understanding physical space and interactions, which can be extended to analyze the structure and dynamics of complex networks in technology (internet, social media) and biology (neural networks, genetic regulation), in the sense that by applying computational rules to model nonlinear, complex environmental systems, researchers... fostered a new generation of scientists and thinkers. Your contributions to art nouveau, characterized by its natural forms and flowing lines, and Wolfram's work in exploring video games for pets, in the complex and aesthetically pleasing patterns that architecturally emerge from simple rules applied iteratively, emphasize natural leaves, vines and flowers that alien minds express, and mimic the organic essence that is central to the fluidity and variability inherent in what a cat sees in Minecraft. The person who was helping us from an animal behavior point of view said that cockatoos are the creatures that you really should target. They're social creatures and they would love X, now for cockatoos; our appreciation of art nouveau exemplifies the transformative potential of computational thinking in arts and humanities. So there is no need to be verbose about the possibility of modeling gravitational lensing using the Wolfram Model, provided that the perturbation affects the dimension and curvature, which leads us all the way to the shape of the light cone and the spatial distribution of flux.

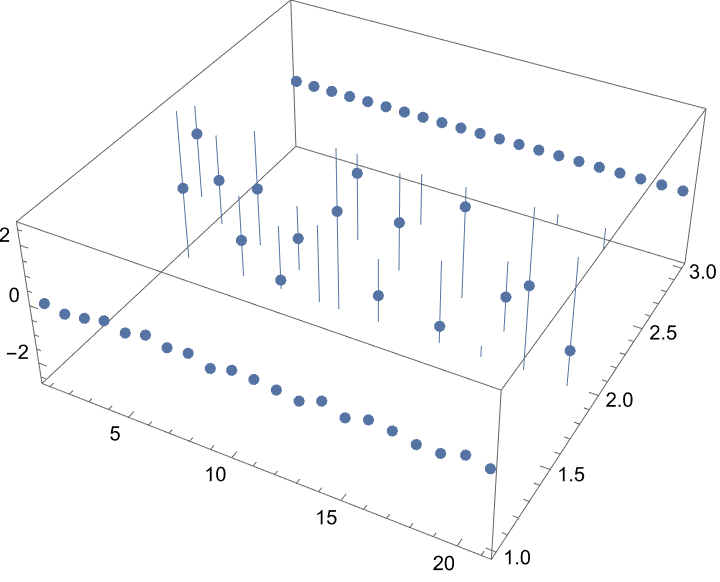

varyN = ParallelMap[

Function[n, graph = generate3DSpacetime[n, 0.2, 0.6];

originVertices = getHyperSurface[graph, 1];

middlePoint =

MinimalBy[originVertices, Norm[#[[;; 2]] - {0.5, 0.5}] &][[1]];

(* Re-run the measurement inside Table so that MeanAround sees separate samples; this assumes getLensingEffect is stochastic *)

MeanAround[

Table[getLensingEffect[graph, 0.7, middlePoint, 3][[1]], 4]]], Range[60, 200, 20]]

It is correct that we can drag along the URL slugs, and that we can compile any computation in theory to combinators, which includes the rewriting rules that form the axiom systems. But when various tests and calculations can put on a show for the dimension fluctuation, the perturbation strength, the shape of the horismos, the light cone, and the way that the light is in flux, then yeah, the causal layer reflects some perturbation.

varyN = ParallelMap[

Function[n, graph = generate3DSpacetime[n, 8, 10];

originVertices = getHyperSurface[graph, 1];

middlePoint =

MinimalBy[originVertices, Norm[#[[;; 2]] - {0.5, 0.5}] &][[1]];

(* Re-run the measurement inside Table so that MeanAround sees separate samples; this assumes getLensingEffect is stochastic *)

MeanAround[

Table[getLensingEffect[graph, 0.9, middlePoint, 3][[1]], 4]]], Range[10, 200, 40]]

It's the shape of the light cone and the flux that are analyzed to study the gravitational lensing effect. The AI gets to know you well enough to tell you how, in particular, to do this thing. It's another thing to have that person. Computational models can also play a role in the restoration and analysis of art nouveau works by Hans Christiansen and all our contemporaries: by understanding how we can advance the informed decisions that we make when we deal with damaged or incomplete works, historians can gain insights into the techniques and processes used "every time" by the artists. How can I write a textbook about it? Yeah, the AI will produce something that can be the average of the average, what a standard textbook would look like. When you're dealing with newly challengeable types of things, that's less liable to be an AI type of project. Basically, write a book in the course; it's an Introduction to Computational Thinking. Not how you should write low-level programs, but how you could conceptualize things in the world computationally.

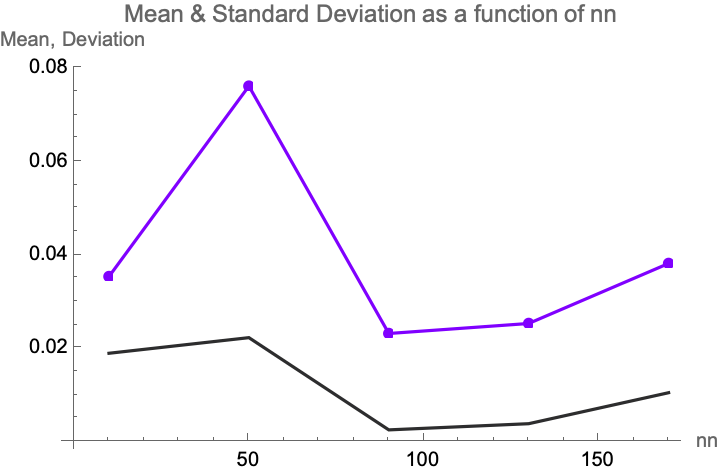

circleLikevsN =

Table[a = ParallelTable[graph = generate3DSpacetime[nn, 8, 10];

originVertices = getHyperSurface[graph, 1];

middlePoint =

Flatten[MinimalBy[originVertices,

Norm[#[[;; 2]] - {0.5, 0.5}] &], 1];

light = getHorismosbyLayer[graph, {middlePoint}, 3][middlePoint];

light = light - Table[middlePoint[[;; 2]], Length[light]];

If[light == {}, Nothing,

MeanDeviation[

Norm /@ light]/(Mean[Norm /@ light]*Length[light])], 5];

If[a == {},

Nothing, {nn, Mean[a], MeanDeviation[a]/Sqrt[Length[a]]}], {nn, 10,

200, 40}]

Show[

ListPlot[

{{#[[1]], #[[2]]} & /@ circleLikevsN},

PlotMarkers -> {Automatic, Small},

AxesLabel -> {"nn", "Mean, Deviation"},

PlotLabel -> "Mean & Standard Deviation as a function of nn",

PlotStyle -> {RGBColor[128/255, 0, 1], PointSize[0.02]},

Joined -> True

],

ListPlot[

{{#[[1]], #[[3]]} & /@ circleLikevsN},

PlotStyle -> {RGBColor[45/255, 45/255, 46/255], PointSize[0.02]},

Joined -> True]

]
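The quantity computed inside `circleLikevsN` can be isolated as a small function, which makes it easier to see what it measures. This sketch repeats the same formula (mean deviation of the radii, divided by the mean radius times the number of points); the name `circleLikeness` and the two test point sets are hypothetical additions for illustration.

```wolfram
(* Relative spread of the distances from center, normalized by the number of
   points: 0 for points on a perfect circle, larger for distorted outlines *)
circleLikeness[points_List, center_List] :=
 With[{radii = Norm[# - center] & /@ points},
  MeanDeviation[radii]/(Mean[radii]*Length[radii])]

(* twelve points on the unit circle: the result is zero up to rounding *)
circleLikeness[N@CirclePoints[12], {0, 0}]

(* four points on an ellipse with semi-axes 2 and 1 *)
circleLikeness[{{2., 0.}, {0., 1.}, {-2., 0.}, {0., -1.}}, {0, 0}]
(* 0.0833333 *)
```

Because of the division by the number of points, values from light cones with very different numbers of boundary points are not directly comparable, which is worth keeping in mind when reading the plot against `nn`.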

Because last time we were doing all kinds of computation. We were happily consumed with the educational tools, and that's no good. It's the optical illusion where the purple is the same but seems to mismatch... purple on grey does not look good.

Light and dark colors go together.

graph = generateFlatSpacetime[100, 3, 0.2];

originVertices =

SortBy[Select[getHyperSurface[graph, 1],

Abs[#[[2]] - 0.5] < 0.15 &], First];

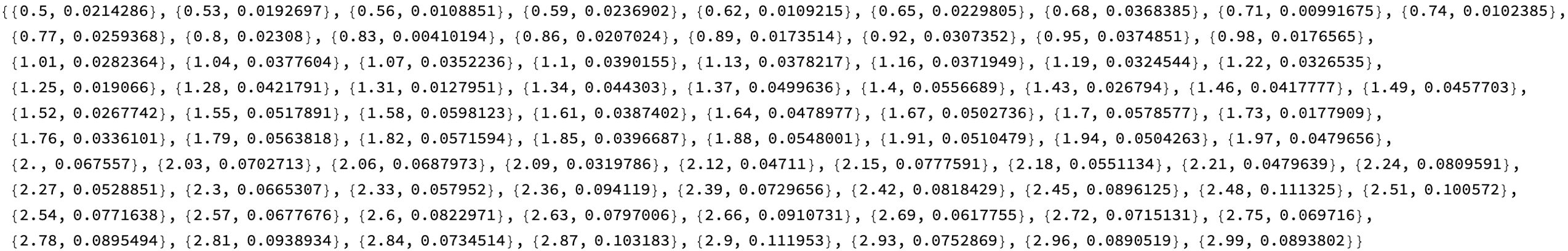

dimvsStrength =

ParallelTable[

Table[{s, modifiedGraph = getPerturbedGraph[graph, 0.3, s];

dimensionDifference = {#[[1]],

(ResourceFunction["WolframHausdorffDimension"][

modifiedGraph, #, {1, 2}, "Dimension",

"DimensionMethod" -> Mean] -

ResourceFunction["WolframHausdorffDimension"][

graph, #, {1, 2}, "Dimension",

"DimensionMethod" -> Mean])} & /@

Select[originVertices, Abs[#[[1]] - 0.5] < 0.3 &];

Mean[dimensionDifference[[;; , 2]]]}, 20] // Mean, {s, 0.5, 3,

0.03}]

ListPlot[dimvsStrength,

AxesLabel -> {"s", "Dimension Difference"},

PlotStyle -> PointSize[0.02],

ColorFunction ->

Function[{x, y}, ColorData["StarryNightColors"][y]]]

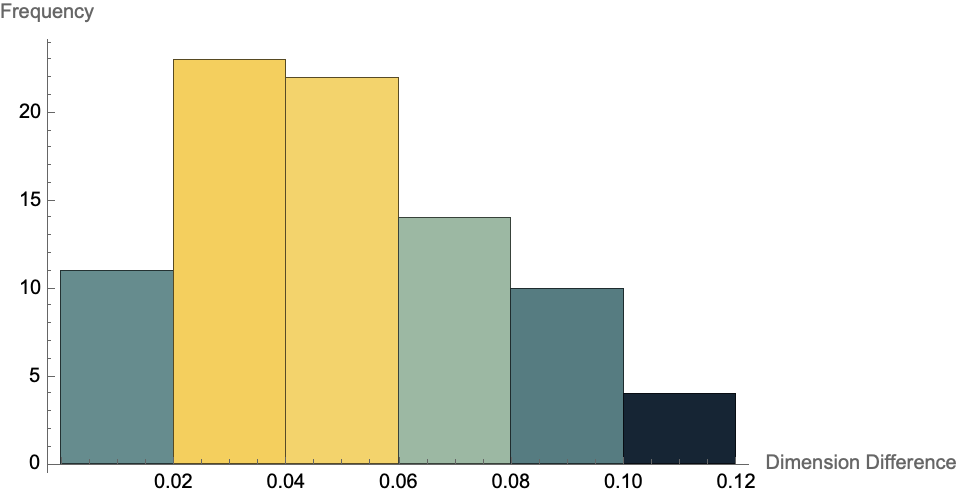

Histogram[dimvsStrength[[All, 2]],

AxesLabel -> {"Dimension Difference", "Frequency"},

ColorFunction -> "StarryNightColors"]

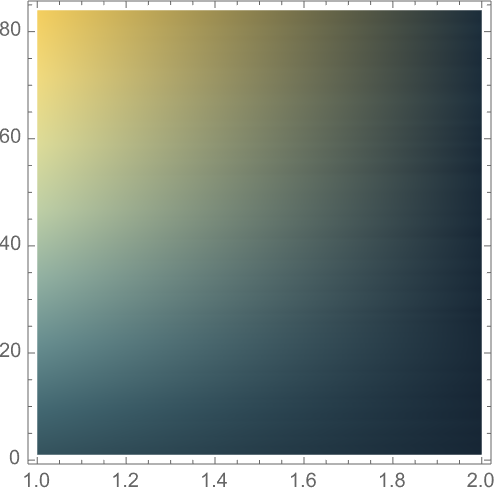

ListDensityPlot[dimvsStrength,

AxesLabel -> {"s", "Dimension Difference"},

ColorFunction -> "StarryNightColors"]

That's the 7 × 100 milliseconds load time you see in the console. We won't be able to rewrite at an arbitrary position, because the conversion from lambda to S-K combinators depends on the combinator input and position, which is straightforward in the following sense: it was one of the easiest ideas we had, 35 to 36 years ago, and it took 25 years for others to come along and copy it. I realized the timescale for other people is much longer than I ever imagined. And the thing about using notebooks as an environment for programming is that it's an environment for writing computational essays. That's a little different from cubicle-type programming.

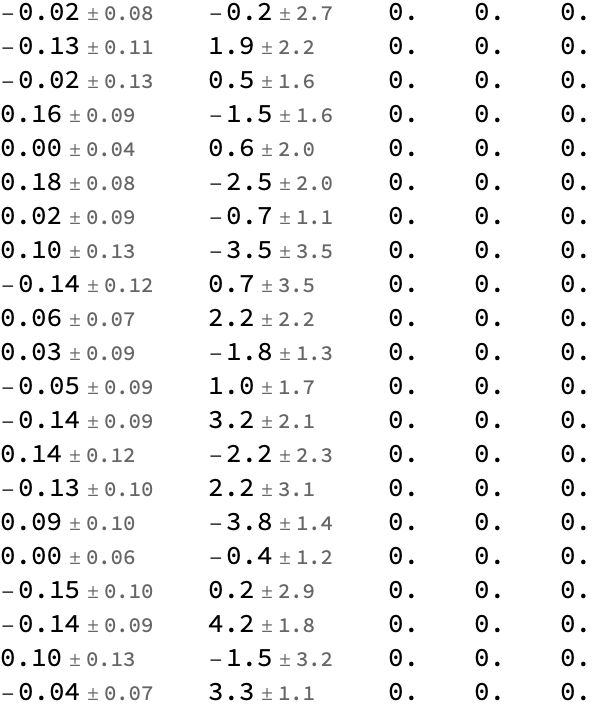

g = Function[s,

a = ParallelTable[graph = generate3DSpacetime[150, 0.3, 0.6];

originVertices = getHyperSurface[graph, 1];

middlePoint =

MinimalBy[originVertices, Norm[#[[;; 2]] - {0.5, 0.5}] &][[1]];

getLensingEffect[graph, s, middlePoint, 3], 6];

{MeanAround[#[[1]] & /@ a], MeanAround[#[[2]] & /@ a],

MeanAround[#[[3]] & /@ a], MeanAround[#[[4]] & /@ a],

MeanAround[#[[5]] & /@ a]}] /@ Range[0.2, 0.4, 0.01];

TableForm[g]

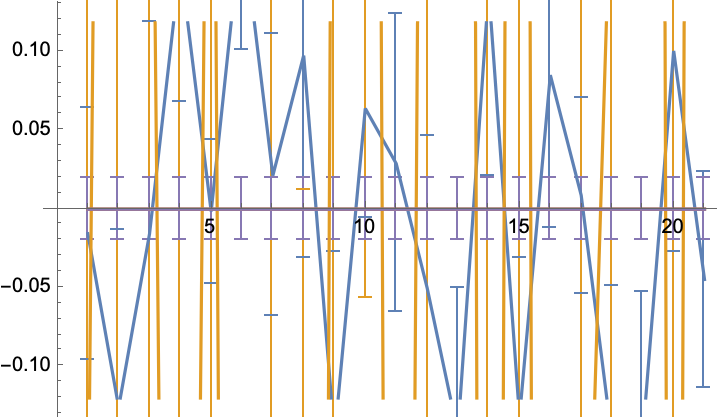

ListLinePlot[Transpose[Map[Around[#, 0.02] &, g, {2}]]]

ListPointPlot3D[Transpose[g[[All, 1 ;; 3]]]]
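Each column of `g` is summarized with `MeanAround`, which reduces repeated samples to a single value with an uncertainty. A minimal sketch of what that summary contains, using made-up sample values (`samples` is illustrative data, not output from `getLensingEffect`):

```wolfram
(* Illustrative numbers only; not results from the notebook *)
samples = {0.21, 0.19, 0.20, 0.22, 0.18};

(* MeanAround returns an Around object: the mean plus its uncertainty *)
estimate = MeanAround[samples];

(* Extract the two parts explicitly *)
{estimate["Value"], estimate["Uncertainty"]}

(* The uncertainty should match the standard error of the mean *)
StandardDeviation[samples]/Sqrt[Length[samples]]

(* Lists of Around objects are plotted with error bars *)
ListPlot[Table[
  MeanAround[RandomVariate[NormalDistribution[k, 0.5], 6]], {k, 1, 5}]]
```

Because `MeanAround` already carries an uncertainty, values like these can be passed to `ListPlot` or `ListLinePlot` directly to get error bars.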

What we've tried to do is automate as much as possible, including most of the machinery of getting it done. We can't automate defining what it is you want to do; that's for you to do, and we've more or less forced it to be the main thing you have to do. Say you've got 2 weeks to do a project. If you use lower-level programming, most of what you're going to do is grind through the machinery. Once you conceptualize and can express the idea of what you want to do, it's up to us to make it possible to get that more or less automatically. Projects like @Hans Christiansen's aim to explore the possibility of modeling gravitational lensing using the Wolfram Model, and to provide insights into the behavior of spacetime in this context.

It's also a process of self-discovery. The hard part is figuring out what you want; most of what comes after that is pretty automated, so I feel like I can just grind through another several lines of code, whatever it is. At a minimum, if you write Wolfram Language code you can get a lot done in a couple of lines. In other, standard programming languages that's implausible: it's going to be a big "blob" of code, because those languages aren't as automated and don't have the built-in knowledge about the world that we've built in a coherent way into the Wolfram Language. So the idea of writing code in an IDE that lets you manage thousands and thousands of lines belongs to low-level programming languages. In the Wolfram Language, code is interwoven with the expression of ideas: you take the thing and write it as a notebook, and it gets turned into something that can be used as an API, a package, whatever it is.