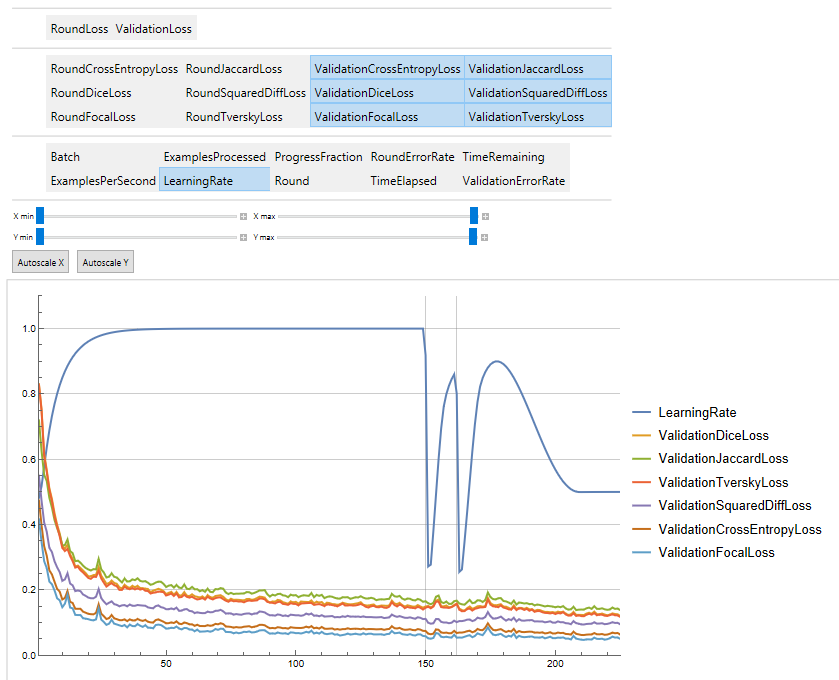

I'm training UNET neural networks for muscle segmentation (link) and implementing LearningRateSchedules that will hopefully give me the last bit of performance I need. However, while implementing them I observed some weird behaviour.

For "ADAM", the LearningRateSchedule is a curve that slowly ramps up to the maximum value, but after long training the network gets stuck in an oscillating solution, so I wanted to test some other LearningRateSchedules.

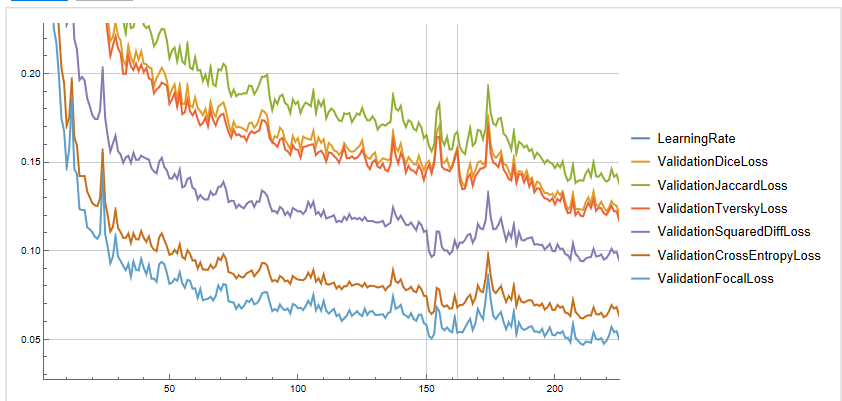

After restarting the training, the schedule did almost what I expected, and it did help the training, especially regarding the intersection-based loss functions.

However, the schedule I implemented was not applied as expected.

I built a toy example that shows that for "SGD" everything works fine, but for "ADAM" the default LearningRateSchedule is always superimposed on the one I provide. The Automatic one changes depending on the round and batch size, and in most cases it does not even reach the specified learning rate.