Abstract

This Wolfram Summer School project presents an image-based approach to gesture recognition using machine learning and neural network training.

Gesture recognition remains a complex problem that is approached with different techniques, each with its pros and cons. It has a wide range of applications, from sign language recognition to virtual reality simulation. Sign language is not universal: almost every country has its own, although they are similar in many ways.

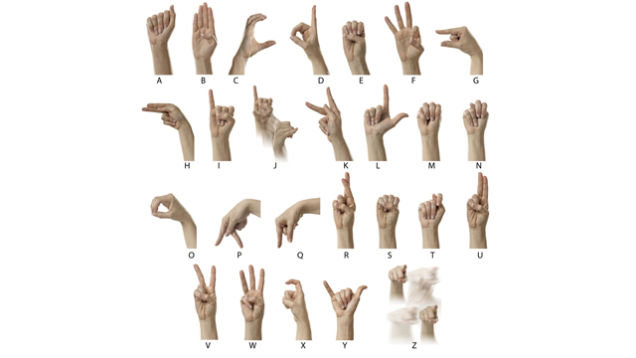

American Sign Language

Two recognition methods and four different training sets are used. All gestures in this work come from American Sign Language (ASL) and Polish Sign Language.

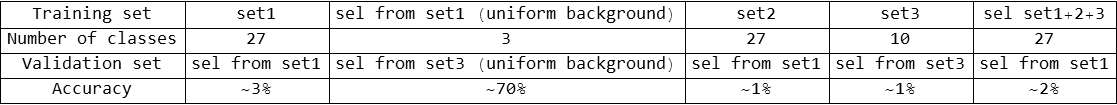

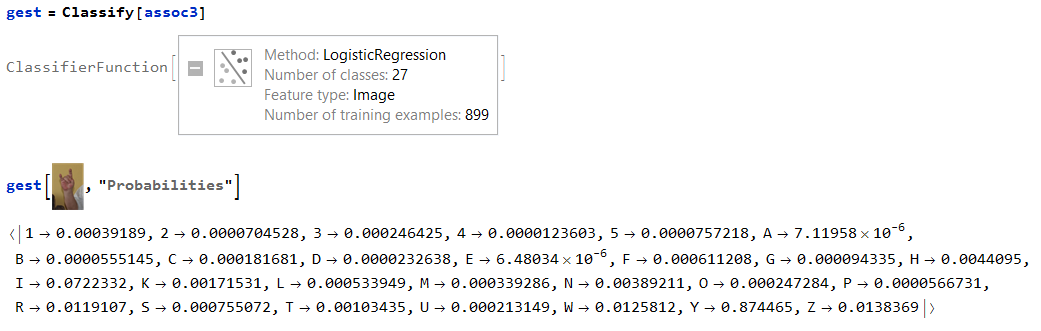

The first method classifies the data with the Classify function. It was applied to a complex dataset of images captured in an uncontrolled environment and gave an unsatisfactory result, with accuracy below 3%. The likely reason is that this image database contains full-body images, which makes it hard to isolate the hands.

The second method trains a neural network with NetTrain. The dataset includes 3477 images of 5 ASL gestures. The trained network is tested on two sets of images, one with uniform and one with non-uniform backgrounds. This method reached 76-77% accuracy and could be improved further, potentially up to 100% for static gestures, by enlarging the datasets.

Classify Function

The first dataset contains 899 images of 12 different people performing 27 gestures from Polish Sign Language (set 1). It is complemented by 2072 full-body images of 20 different people (set 2) and by images captured from one person (set 3).

Test sets are generated by selecting images from the original training sets. Several combinations of training and validation selections were tested.

None of the large datasets gave satisfactory results. Nevertheless, Classify can produce satisfactory results with carefully sorted data.
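A minimal sketch of the Classify workflow described in this section, assuming the data is a list of image -> label rules. The synthetic shape images, the labels "a" and "b", and the 60/20 split are illustrative stand-ins, not the project's data or settings.

```wolfram
(* Hypothetical stand-in data: two "gesture" classes drawn as simple shapes. *)
SeedRandom[1];
makeImage[shape_] := Rasterize[
   Graphics[{White, shape}, Background -> Black,
    PlotRange -> {{-2, 2}, {-2, 2}}],
   "Image", ImageSize -> {32, 32}];

disks = Table[
   makeImage[Disk[RandomReal[{-1, 1}, 2], RandomReal[{0.3, 0.8}]]] -> "a",
   40];
bars = Table[
   makeImage[Rectangle[{-1.5, -0.2}, {1.5, 0.2}]] -> "b",
   40];

(* Shuffle, then split into training and test selections. *)
data = RandomSample[Join[disks, bars]];
{train, test} = TakeDrop[data, 60];

(* Train a classifier and measure its accuracy on held-out images. *)
classifier = Classify[train];
ClassifierMeasurements[classifier, test, "Accuracy"]
```

Keeping the test selection disjoint from the training selection, as TakeDrop does here, is what makes the reported accuracy meaningful.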

NetTrain

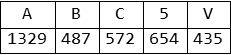

The training and validation database for the network contains images of hands only. There are 3477 images in the training set, 317 test images with a uniform background, and 317 test images with a complex background. The table below shows how many times each gesture is represented.

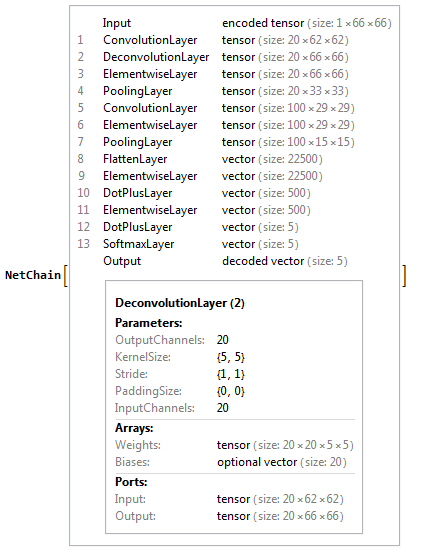

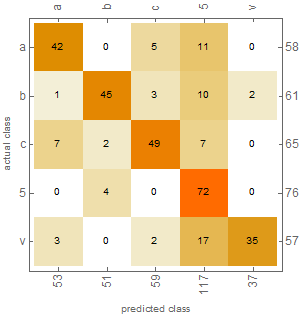

Training proceeds with a 13-layer network. The resulting accuracy is about 77% on the uniform-background validation data and about 75% on the complex-background data.
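The exact 13-layer architecture is not listed here, so the following is only a sketch of how a small LeNet-style network could be defined and trained with NetTrain. The layer sizes, the 32x32 grayscale input, the class labels, the synthetic rotated-bar images, and the training settings are all assumptions made for illustration.

```wolfram
(* Hypothetical class labels; the project's five ASL gestures are not listed. *)
classes = {"a", "b", "c", "d", "e"};

(* LeNet-style chain (an assumption, not the author's 13-layer setup). *)
net = NetChain[{
    ConvolutionLayer[20, 5], Ramp,
    PoolingLayer[2, 2],
    ConvolutionLayer[50, 5], Ramp,
    PoolingLayer[2, 2],
    FlattenLayer[],
    LinearLayer[500], Ramp,
    LinearLayer[Length[classes]],
    SoftmaxLayer[]},
   "Input" -> NetEncoder[{"Image", {32, 32}, "Grayscale"}],
   "Output" -> NetDecoder[{"Class", classes}]];

(* Synthetic stand-in data: a white bar whose angle depends on the class. *)
SeedRandom[2];
bar[k_] := Rasterize[
   Graphics[{White,
     Rotate[Rectangle[{-1.5, -0.2}, {1.5, 0.2}],
      k Pi/5 + RandomReal[{-0.1, 0.1}]]},
    Background -> Black, PlotRange -> {{-2, 2}, {-2, 2}}],
   "Image", ImageSize -> {32, 32}];
data = RandomSample[
   Flatten[Table[bar[k] -> classes[[k]], {k, 5}, {30}]]];
{trainData, testData} = TakeDrop[data, 120];

(* Train briefly and evaluate on the held-out images. *)
trained = NetTrain[net, trainData,
   ValidationSet -> testData, MaxTrainingRounds -> 10];
ClassifierMeasurements[trained, testData, "Accuracy"]
```

The "Class" decoder is what lets the trained network return gesture labels directly and be passed to ClassifierMeasurements.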

cm = ClassifierMeasurements[lenet, uniTestData];

cm["Accuracy"]

0.766562

The confusion matrix is presented below.

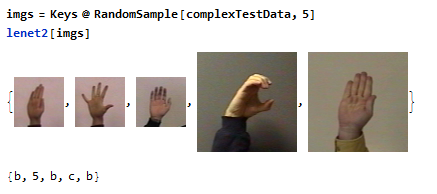
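To make the two measurements concrete, here is a small sketch of how accuracy and a confusion matrix follow from lists of actual and predicted labels. The six labels below are made up for illustration and are not results from this project.

```wolfram
(* Hypothetical labels, not project data. *)
actual    = {"a", "b", "b", "c", "a", "c"};
predicted = {"a", "b", "c", "c", "a", "b"};

(* Accuracy: fraction of examples whose prediction matches the label. *)
accuracy = N[Count[MapThread[SameQ, {actual, predicted}], True]/
    Length[actual]]
(* 0.666667 *)

(* Confusion matrix: rows are actual classes, columns are predicted classes. *)
classes = Union[actual];
pairs = Transpose[{actual, predicted}];
confusion = Table[Count[pairs, {r, c}], {r, classes}, {c, classes}]
(* {{2, 0, 0}, {0, 1, 1}, {0, 1, 1}} *)
```

Off-diagonal entries show which gestures are mistaken for which; here "b" and "c" are each confused with the other once.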

Examples

img

lenet[img,"TopProbabilities"]

{"b" -> 0.981646}

Conclusion

Reliable identification requires separating the human body from the background, as well as distinguishing different parts of the body.

To improve recognition results, the database should be enlarged and the images pre-processed. Feature extraction algorithms would make it possible to remove the background and isolate the hands in an image.
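One possible pre-processing step is sketched below, under the strong assumption that the hand is brighter than the background. The synthetic scene, the threshold-based mask, the 20-pixel component limit, and the 32x32 target size are illustrative choices; real photographs would likely need a more robust segmentation.

```wolfram
(* Hypothetical scene: a bright blob (the "hand") on a noisy dark background. *)
SeedRandom[3];
scene = Image[Table[
    If[(i - 32)^2 + (j - 32)^2 < 15^2, 0.9, RandomReal[{0, 0.3}]],
    {i, 64}, {j, 64}]];

(* Threshold the image and drop small specks to get a foreground mask. *)
mask = DeleteSmallComponents[Binarize[scene], 20];

(* Black out the background, crop the uniform border, normalize the size. *)
hand = ImageCrop[ImageMultiply[scene, mask]];
prepared = ImageResize[hand, {32, 32}]
```

Images prepared this way all share one size and contain mostly the region of interest, which is the property the training data for the network already has.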

Neural network training is the preferable method, as it clearly outperforms the Classify function in this case.