Introduction

This post describes EvaluateCombinator, a function for evaluating combinators implemented in the Wolfram Language, along with some results about the different ways in which combinators can evaluate. These results were found while working on optimizing combinator evolution. This project was done as part of the Wolfram Mentorship program in collaboration with Todd Rowland.

Two combinators, called S and K, are of particular interest. Using just these two functions, one can write any program (together they form a universal language). Their definitions are K[x_][y_]:=x and S[x_][y_][z_]:=x[z][y[z]]. Other combinator rules, defined in a similar manner, have also been implemented.
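As a minimal sketch, the two definitions can also be written as replacement rules, which avoids attaching definitions to K (a symbol that already exists in the Wolfram Language system context). The name skRules and the test symbol a are made up for this illustration and are not taken from the attached code.

```wolfram
(* The S and K rules written as delayed replacement rules *)
skRules = {
   S[x_][y_][z_] :> x[z][y[z]],
   K[x_][y_] :> x
   };

(* Apply the rules repeatedly until nothing changes *)
S[K][K][a] //. skRules
(* a *)
```

Here S[K][K][a] first becomes K[a][K[a]] by the S rule and then a by the K rule, so S[K][K] behaves like an identity function.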

The EvaluateCombinator function can show how a combinator changes one step at a time, since the combinator rules can usually be applied many times before the combinator reaches a fixed point. Some combinators never reach a fixed point, and instead continue growing and changing forever, or loop through a set of states. The function also allows evaluation to proceed in two different ways. Rules are always applied left to right, but one can specify whether patterns are matched from the outer levels to the inner levels, or from the inner levels to the outer levels.
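A rough sketch of step-by-step evolution can be built from built-in functions alone. This is not the EvaluateCombinator implementation: it relies on ReplaceAll, which tests the whole expression before its parts (outer levels first) and rewrites every non-overlapping match in a single pass. The names skRules and skStep are hypothetical.

```wolfram
skRules = {S[x_][y_][z_] :> x[z][y[z]], K[x_][y_] :> x};

(* One pass of /. tests the whole expression first, then its parts *)
skStep[expr_] := expr /. skRules;

(* Collect successive states, stopping at a fixed point or after 300 passes *)
FixedPointList[skStep, S[K][K][a], 300]
(* {S[K][K][a], K[a][K[a]], a, a} *)
```

The repeated final element signals that a fixed point was reached. If the last two elements differ, the pass limit was hit first.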

Because of the universality of the simple set of SK combinators, these combinators are often used for studying computation. For this reason, an attempt was made to add a feature to the EvaluateCombinator function which speeds up the evaluation of SK combinators by adding extra rules that skip steps in their evolution.

The code for the EvaluateCombinator function, the code used for analyzing the data, some information about how to use the EvaluateCombinator function, and numerous examples of the function working are attached to this post. Get["state.mx"] can be added to the "combinatorReport.nb" file to load the attached state.mx file, which sets the values of all the symbols in the combinatorReport.nb file.

Example

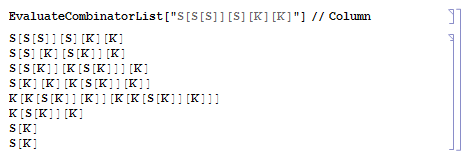

Here is an example of the EvaluateCombinatorList function showing the evaluation of an SK combinator one step at a time.

Patterns in Combinator Evolution

To optimize combinator evolution, a search was performed for extra rules which might speed up the evolution of these combinators by finding patterns in the ways that combinators evaluate. These patterns could possibly be used as extra rules as an attempt to skip steps in combinator evolution.

The initial rules analyzed were rules which matched different patterns of combinator expressions. There were 2,582 possible expressions which had up to 5 terms in them, not including the original two S and K rules.

These 2,582 patterns were evaluated to see what fixed point they reached (all combinators of size 6 or smaller reach fixed points; NKS p. 1122). Here is an example of one of the patterns, "S[S[K][x_][y_]]", being evaluated:

The rule for this combinator would be S[S[K][x_][y_]]->S[y]. These combinator rules were narrowed down by keeping only patterns such as this one, which lead to rules that skip one or more steps of evolution. This filtering reduced the number of combinators being considered for optimization to 288.

Less general rules were then filtered out in favor of more general rules. For instance, S[K][S][x_] :> x is removed in favor of S[K][y_][x_] :> x. Cases were then removed in which applying an earlier, shorter combinator pattern would have the same effect as applying a larger one. For instance, the rule S[S[K][y_][x_]] :> S[x] isn't needed, since the earlier, shorter rule S[K][y_][x_] :> x applied to S[S[K][y_][x_]] will also give S[x] in one step.
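The redundancy argument can be checked with a small sketch. It uses the built-in ReplaceAll rather than the EvaluateCombinator function, and the names skRules and shortcut, as well as the inert symbols u and v, are chosen only for this illustration.

```wolfram
skRules = {S[x_][y_][z_] :> x[z][y[z]], K[x_][y_] :> x};
shortcut = S[K][y_][x_] :> x;

(* With only the S and K rules, the pattern needs two passes *)
FixedPointList[# /. skRules &, S[S[K][u][v]]]
(* {S[S[K][u][v]], S[K[v][u[v]]], S[v], S[v]} *)

(* With the shorter shortcut rule tried first, one pass gives the same result *)
S[S[K][u][v]] /. Prepend[skRules, shortcut]
(* S[v] *)
```

Since the shorter rule already reaches S[v] in one pass, a separate rule for the larger pattern S[S[K][y_][x_]] would not save any further steps.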

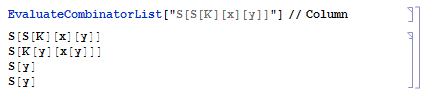

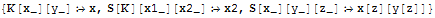

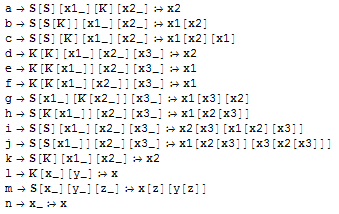

Using these two extra criteria, the list of rules for combinator expressions up to 5 terms was reduced from 2,582 possible rules down to just 13 non-redundant ones. This even includes the original S and K rules. One can think of these as possible "ways" in which size 5 combinators can evaluate. They are the following:

This list is in the reverse of the order in which the rules were tried when evaluating combinators. It was decided that the most efficient approach would be to try larger rules first, then to sort rules of the same size by specificity, and then to sort these further by the shortness of the output of the rule. This order was chosen so that rules which skip more steps, and which lead to smaller, more manageable combinators, are matched first.

If one looks at combinator expressions with up to 6 terms, there are 22,994 possible rules, and again a relatively small number, 67, are non-redundant in this way.

Applying Optimization

Next there is the matter of applying these found rules to combinator expressions. Combinators of various sizes were randomly generated. These combinators were then evaluated, while adding extra rules to see if this sped up the combinator evolution. One would think that the more steps and the more often rules are applied, the more extra rules would help speed up evolution. Large combinators generally have more rule applications, and run for more steps. Larger and larger combinators were tested to look for any speedups.
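For readers who want to experiment, the following is one simple way to generate a random SK expression of a given size. It is an assumption-laden sketch, not the generator used for this report: the helper randomCombinator is hypothetical, the split point is chosen uniformly (so tree shapes are not sampled uniformly), and seeding it with SeedRandom[1] will not reproduce the combinators analyzed here.

```wolfram
(* Hypothetical helper: a random S/K expression with exactly n leaves *)
randomCombinator[1] := RandomChoice[{S, K}];
randomCombinator[n_Integer /; n > 1] :=
  With[{k = RandomInteger[{1, n - 1}]},
   (* the left part gets k leaves, the right part the remaining n - k *)
   randomCombinator[k][randomCombinator[n - k]]];

SeedRandom[1];
expr = randomCombinator[100];
LeafCount[expr]
(* 100 *)
```

LeafCount counts every S and K in the expression, which matches the notion of combinator size used in this post.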

There is one issue with using large combinators, however, which is discussed in more detail later: larger combinators are also more likely not to reach a fixed point. Generally only combinators which reach a fixed point were of interest for testing optimizing rules, since the extra rules would likely be used primarily for evaluating halting combinators. (If one were looking at non-halting combinators, one would presumably want to look at step-by-step evolution, and the extra rules would likely be skipping steps in unpredictable increments.)

Based on some preliminary experiments on how large combinators evolve, combinators which seemed unlikely to halt were first identified in two ways: combinators which didn't halt after a certain number of steps, and combinators which didn't halt before growing to a certain size. As elaborated later in this post, larger combinators DO seem either to quickly begin growing exponentially and continue doing so, or to quickly begin evolving in a repetitive way. Combinators which did neither of these things, and instead grew non-exponentially but in a complex way for many steps, were ignored. These were later confirmed to be a small minority. (They are rather interesting combinators, however. It seems especially hard to predict what they will do in the future: whether they will continue to show complicated, slow-growing behavior, whether they will begin growing exponentially at some point, or whether they will in the end exhibit repetitive behavior after all.)

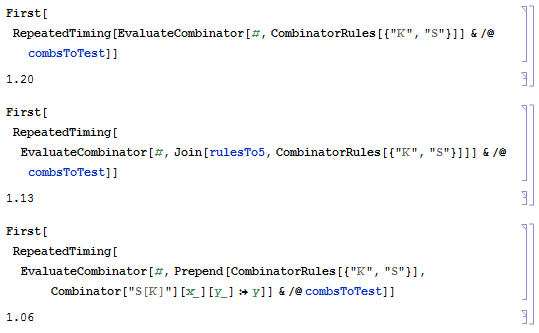

However, even with combinators of size 1000, the extra rules only modestly sped up evolution.

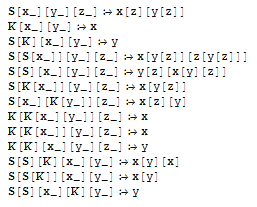

Different selections and orderings of the 11 optimization rules found, along with the original S and K rules, were tested to see which would speed up evaluation the most. First, each extra rule was added one at a time, at almost every possible index in the rule list. Some positions were not checked because, for example, putting the extra rule S[K][x_][y_]:>y after S[x_][y_][z_]:>x[z][y[z]], as opposed to before it, would mean the extra rule would never be used: the original, more general rule would match first. The fastest rule permutation turned out to be

When used to evaluate the same combinators as above, the average timing was a shorter 1.00s.
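The point about rule positions can be illustrated with a minimal sketch using the built-in ReplaceAll, which tries the rules in a list in order. The names sRule, kRule and extraRule are introduced only for this example.

```wolfram
sRule = S[x_][y_][z_] :> x[z][y[z]];
kRule = K[x_][y_] :> x;
extraRule = S[K][x_][y_] :> y;

(* Extra rule listed after the general S rule: the S rule matches first *)
S[K][a][b] /. {sRule, kRule, extraRule}
(* K[b][a[b]] *)

(* Extra rule listed first: the intermediate step is skipped *)
S[K][a][b] /. {extraRule, sRule, kRule}
(* b *)
```

In the first ordering the extra rule is never reached for this expression, so it adds matching work without skipping any step.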

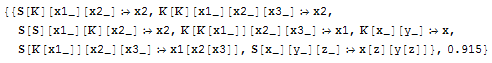

The fact that this rule was fastest is interesting since S[K][x_][y_]:>y is equivalent to the "I" combinator (I[y_]:>y; I is essentially the same as S[K][x_]), which is often used in practice to simplify the use of SK combinators. Extra rules were then added in all possible combinations of 2, 3, and then 4 different rules. These are the fastest rules in each of those situations:

Interestingly, when one looks at all possible rule sets of a given size, the fastest always has the same elements as the fastest rule set one size smaller, but with one new rule added, and in most cases in a different order. When one plots the timing against the position at which each rule set was produced by the Wolfram Language's Permutations function, it looks like there could be some pattern, or relationship, between the timing and that position.

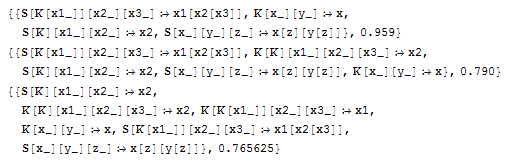

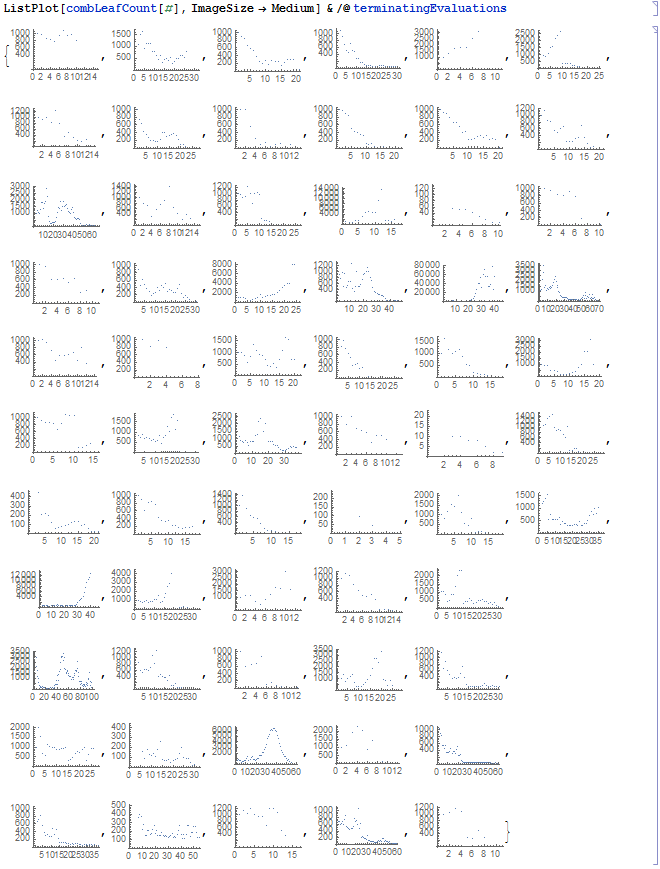

The x axis is the order in which the new rule sets were produced by the Permutations function. The y axis is the average timing for evaluating combinators using those rules. The graphs correspond to rule sets with one, two, three, and four extra combinator patterns added, respectively, from left to right and top to bottom.

To speed up the search for rule sets of size 7, only rule sets similar to the fastest rule set of size 6, but with one new rule added, were tested.

The fastest of these, however, shown above, is slower than the fastest rule set that has one less rule. This could be because an extra rule generally slows down evolution significantly whenever it does not actually skip steps in the evaluation.

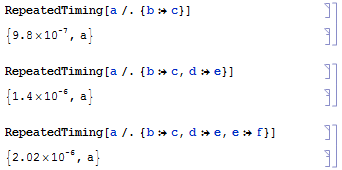

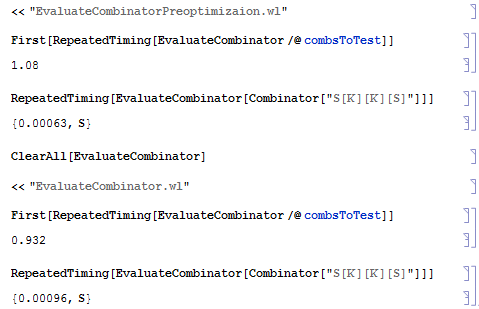

Thus, extra rules seem to help only in the case of large combinators, and it appears that only up to a certain point do extra rules continue to speed up evolution. Because extra rules had only a modest effect on speeding up the evolution of size 100 combinators, and would slow down the evolution of smaller combinators, in the end only the rule which corresponds to the "I" combinator was added to the actual EvaluateCombinator function. In the future, one could try taking the size of a combinator into account before deciding how many extra rules to add.

All evaluations up to this point were performed using a version of the EvaluateCombinator function which does NOT have extra optimization rules. Next, the timing of the old EvaluateCombinator function was compared with that of a more recent version which has the optimization rule included. Evaluation also runs a bit faster when the function is allowed to detect the rule needed, instead of having the rule explicitly added as a parameter, as was done earlier for comparison with other rule selections.

Random Non-Terminating Combinators

The way in which large combinators evaluated was investigated in order to learn more about why the rules found only had a modest effect on the speeding up of combinator evolution.

First, the evolution of 400 randomly generated size 100 combinators was run, and most of the combinators which did not halt quickly did one of two things. They either began growing exponentially, or began having repetitive behavior. Only two, in fact, looked to be doing neither. Here are some details of what was found.

First of all, it is known that 2 of the 16,896 possible size 7 combinators don't reach fixed points, and these combinators grow exponentially. (NKS p. 1122) "At size 8, out of all 109,824 combinator expressions it appears that 49 show exponential growth. And many more show roughly linear growth." (NKS p. 1122) It seems that this becomes a trend with even larger combinators being more and more likely to grow exponentially. This could be partially due to a larger combinator being more likely to contain a smaller combinator part which grows exponentially.
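The two totals quoted from NKS can be checked with a short calculation. A combinator expression with n symbols is a binary tree with n leaves, so there are CatalanNumber[n - 1] possible shapes and 2^n ways to fill the leaves with S or K. The helper name combinatorCount is made up for this sketch.

```wolfram
(* Number of S/K expressions with n leaves:
   CatalanNumber[n - 1] tree shapes times 2^n leaf labelings *)
combinatorCount[n_Integer?Positive] := CatalanNumber[n - 1]*2^n;

combinatorCount /@ {7, 8}
(* {16896, 109824} *)
```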

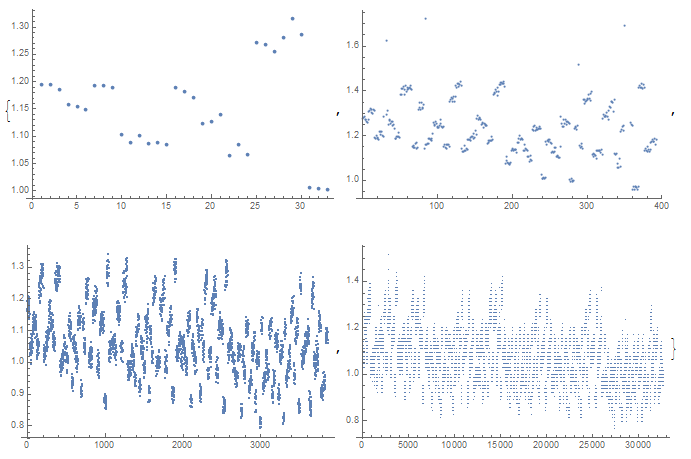

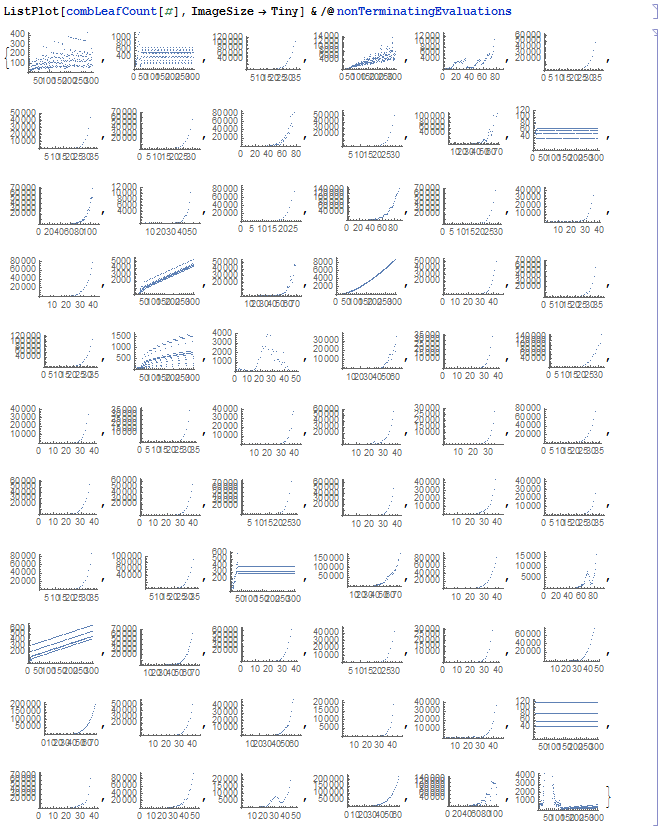

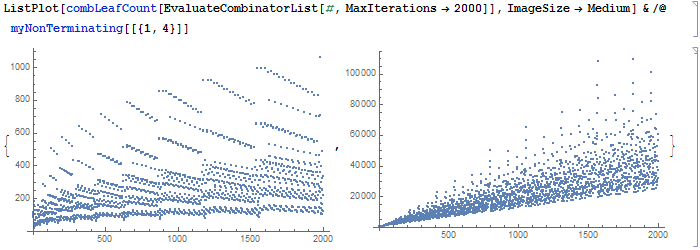

The behavior of larger combinators which grow exponentially was investigated. 400 random size 100 SK combinators were generated, using SeedRandom[1] as the starting seed for the random combinator generator. These combinators were then evaluated, with evolution stopping when one of three outcomes occurred: 1) the combinator reached a fixed point (it no longer changes when the replacement rules are applied); 2) the evolution reached 300 steps; 3) the size of the combinator went above 2,000.
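The three stopping criteria can be sketched with NestWhileList. This is an illustration under assumptions, not the code used for the report: evolve and outcome are hypothetical helpers, one "step" here is a full ReplaceAll pass, and size is measured with LeafCount.

```wolfram
skRules = {S[x_][y_][z_] :> x[z][y[z]], K[x_][y_] :> x};

(* Keep rewriting while the state still changes and stays within maxSize,
   for at most maxSteps passes *)
evolve[expr_, maxSteps_ : 300, maxSize_ : 2000] :=
  NestWhileList[# /. skRules &, expr,
   (#1 =!= #2 && LeafCount[#2] <= maxSize) &, 2, maxSteps];

(* Report which of the three stopping criteria ended the run *)
outcome[states_List, maxSize_ : 2000] := Which[
   states[[-1]] === states[[-2]], "fixed point",
   LeafCount[Last[states]] > maxSize, "too large",
   True, "step limit"];

outcome[evolve[S[K][K][a]]]
(* "fixed point" *)
```

Combinators whose outcome is not "fixed point" are the ones that would be kept for the second, longer run with a larger size limit.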

Combinators which met criterion 1 (reached a fixed point) were filtered out, leaving only combinators which took many steps and/or became very large without halting. Only 66 of the original 400 randomly generated combinators remained. These 66 combinators were then evaluated a second time, again for a maximum of 300 steps, but with a maximum combinator size of 200,000, to see if they would halt within these limits.

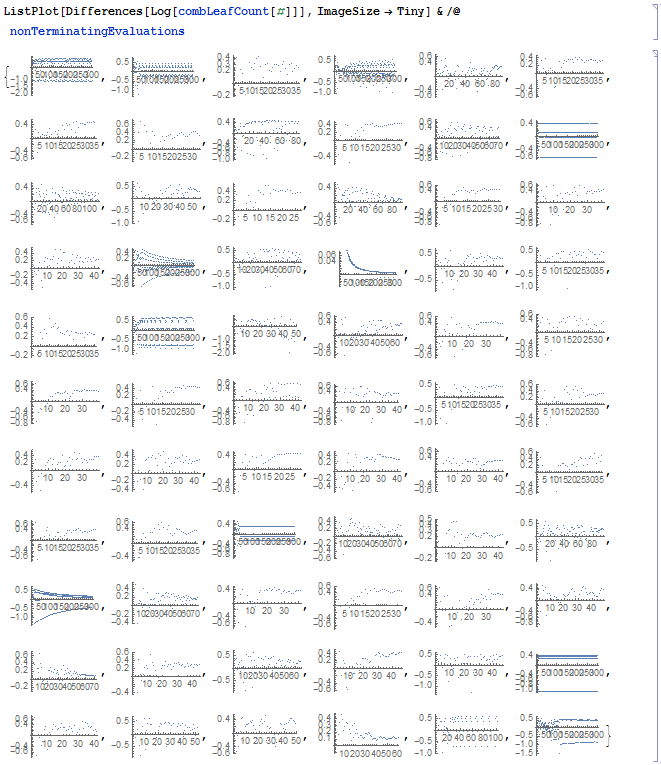

This shows the same plots, but with the natural logarithm taken, and then with the differences taken between points. It shows that most of the combinators which do grow exponentially still appear to be exhibiting complex behavior.
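The transformation behind these plots can be sketched in one line. The sizes below are illustrative numbers only, not data from this report. In practice they would be the LeafCount of each state in an evolution.

```wolfram
(* Illustrative sizes only: a combinator that doubles in size every step *)
sizes = {100, 200, 400, 800, 1600};

(* Differences of the log sizes are constant for purely exponential growth *)
logDiffs = Differences[Log[N[sizes]]]
```

For this made-up sequence every difference is close to Log[2]. Differences that keep fluctuating indicate more complex behavior on top of the overall growth.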

Of the 66 combinators, most (53 of them) appear to have been selected because they went above size 2,000 before they reached step 200. One of them got above size 2,000 but stayed below 200,000 (it reached a maximum size of 3,861), and reached a fixed point anyway before step 300. Four of them got to step 300 by beginning to loop through the same few values repeatedly. Six of them began to grow in what appears to be a repetitive pattern.

This left two combinators which evaluated for 300 steps WITHOUT seeming to settle down into an obviously repetitive pattern, and also without growing above size 200,000. These were run for more steps. When run for 2,000 steps, they both appeared to show complicated behavior without becoming repetitive or growing exponentially, although the behavior may be nested.

The combinators which grew to size 200,000 all looked as if they were going to continue growing at an exponential rate, although checking with a larger allowed combinator size, and potentially also checking with more allowed steps, could possibly show them to begin exhibiting some other behavior at some point.

Here are some conclusions from looking at size 100 combinator evolution. Most of these size 100 combinators halted before 200 steps. Of the ones that didn't, most seemed to start growing exponentially. Most of the rest went into a repetitive pattern. The only ones that didn't match the others had apparently the rarest kind of behavior, which neither transitions into a repetitive pattern of evolution NOR looks to grow exponentially. Of the 400 randomly generated combinators analyzed, only two looked to be in this sparse category. It seems that combinators of this size are not likely to have very complicated long-term behavior without growing exponentially early on.

Random Terminating Combinators

Some very large combinators which DO reach a fixed point were also analyzed, to see what kind of behavior they had. 200 size 1000 combinators were generated. This time the combinators which DID reach a fixed point before 300 steps, and also before reaching a size of 200,000, were analyzed. There were 62 of these. All of them reached their fixed point within 100 steps (one ran for exactly 100 steps). The largest size any of them reached was 154,820 (that one ran for 44 steps).

Frequency of Optimization Rule Usages

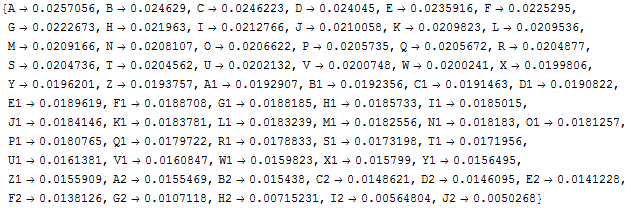

Finally, a count was made of how many times each of the extra optimization rules was actually used during the evaluation of one of these terminating combinators.

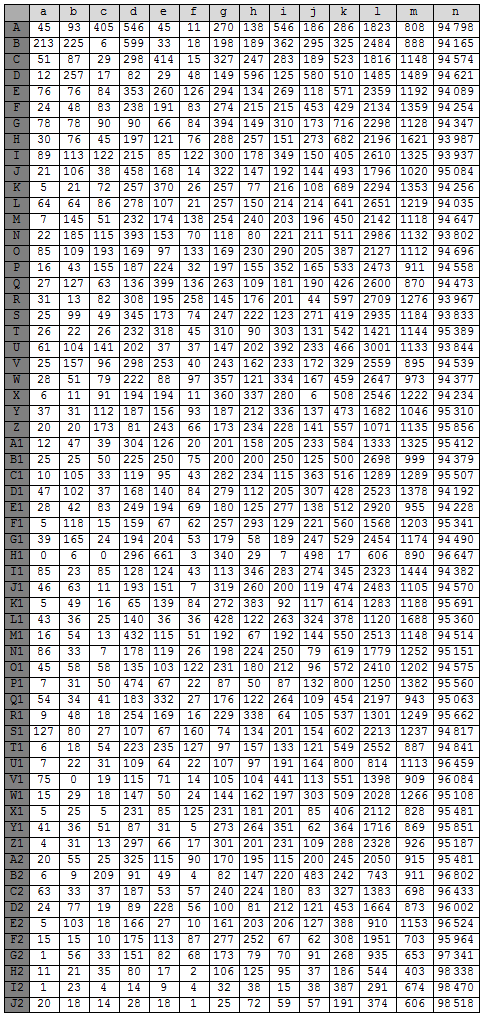

This shows what fraction of the time an extra optimization rule (besides the usual S and K rules) was used during the attempt at optimized evolution. The combinators are sorted by how often the rules were used.

The capital letters refer to different randomly generated size 100 combinators. The details of these combinators (and all the other code used to generate this report) can be found in the "combinatorReport.nb" file attached to this post.

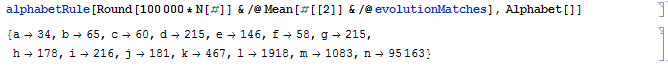

The following breaks down how often each rule was used individually in evaluation out of 100,000, and also shows the number out of 100,000 times when a part of the combinator evolution did not match any rule.

Conclusions

Out of the numerous possible rules up to size five that could be made to skip combinator evolution, only 11 were not redundant in some way. This made it easier to look through different possible ways to use them for speeding up combinator evolution. Looking through different ways to use them was interesting. However, in the end, adding extra rules also generally slows down evolution, as each rule needs to be compared with all parts and sub-parts of a combinator expression. In the end, a decision was made to only use one rule, which happens to work the same way as the commonly encountered "I" combinator. This rule was the extra rule which matched the most with large terminating combinators while evaluating.

Optimization rules are generally helpful for combinators which terminate. As for non-terminating combinators, one would most likely want to look at the evolution step by step, and optimization rules would skip steps in a generally hard to predict fashion. The majority of large combinators, say of size 100, appear to not terminate. Most begin to grow exponentially rather quickly, and some start exhibiting repetitive behavior. A couple of interesting cases were found where there was no exponential growth, but there also seemed to be less repetitive behavior, and it is unclear whether these will in fact terminate at some point.

In the future, one could try to figure out what pattern there could be behind which combinator rules speed up evolution the most. One could also experiment with changing which rules are used depending on aspects of the initial combinator such as its size. Longer combinators would likely be optimized more by having more rules. One could also look at optimization rules beyond SK combinators. Combinator expressions which actually include the "I" combinator could be a start. One could also look at the evolution of other sizes of combinators. Finding and analyzing more large or small combinators with non-exponential and also non-repetitive behavior could be interesting.

One could also visualize the evolution of combinators of sizes other than 100, to see how other sized combinators evaluate.

Another thing to do would be to do more analysis on how often the extra rules were used. Can something be learned by looking into how some patterns match more often than others? One would expect that combinator patterns which cause the expression to shrink would match more often say in a combinator which terminates as opposed to a combinator which continues to grow exponentially. Generally one would expect different sets of optimization rules to match during periods of growth as opposed to periods of shrinking, and in the plots of terminating combinators there did seem to be stretches of continuous growth versus shrinkage.

Attempting to optimize combinator evolution also makes one think of the idea of computational irreducibility. Perhaps some ways combinators evaluate can be sped up in some parts of their evolution, but in general it could be that much of the time when combinators are being evaluated one shouldn't expect to be able to speed the evaluation up much. Perhaps the evolution is already near a maximal level of complexity where the only way to see what happens next is to go step by step, and one shouldn't expect to be able to skip steps in a way that can save much time in the end.

Combinators are certainly an interesting system for studying complexity, and in a way that is simple enough that patterns can be found and attempts can be made to exploit these patterns for optimization purposes.

Attachments:
