Abstract

Chronological dating is essential for various tasks such as summarization and document retrieval. This project proposes a novel method of dating historical texts using a Recurrent Neural Network that works both on the character level and the word level. The results show a significant improvement in the accuracy of detection compared to using a word level only RNN. The error span is between 1 year and a century for most cases. Though it achieved a decent performance for the texts originating from the 19th century, the accuracy declines significantly for older texts due to their scarcity and the non-homogeneous distribution of the provided dataset.

Data Collection and Pre-processing

The training data is composed of public domain books collected from Openlibrary, an online project created by Aaron Swartz, Brewster Kahle and others. Wolfram Language supports a Service Connect that allows a direct interaction with the Openlibrary API.

Data Collection

In[106]:= openlibrary = ServiceConnect["OpenLibrary"]

Out[106]= ServiceObject["OpenLibrary",

"ID" -> "connection-1f55c291dcb5feaa290ece0cd1c97ed2"]

In[107]:= BookDatabase = <||>;

nbCalls = 0;

In[109]:= GetTextRequest[keys_] := {nbCalls++;

Normal@openlibrary["BookText", {"BibKeys" -> {keys}}]}

In[110]:= GetValidTextKey[keys_] :=

SelectFirst[keys,

MatchQ[Pause[.1]; Normal@openlibrary["BookText", {"BibKeys" -> {#}}],

KeyValuePattern[_ -> _String]] &];

GetFirstText[list_] := FirstCase[list, Except["NotAvailable", _String]]

In[112]:= GetTexts [keys_] :=

Quiet[GetFirstText[

Values[Normal@

openlibrary["BookText", {"BibKeys" -> RandomSample[keys, UpTo[50]]}]]]]

In[113]:= AddBook[b_] :=

BookDatabase[b["FirstPublishYear"]] =

If[MatchQ[BookDatabase[b["FirstPublishYear"]], _Missing],

{GetTexts[b["EditionKey"]]},

Append[BookDatabase[b["FirstPublishYear"]], GetTexts[b["EditionKey"]]]

]

In[114]:= AddSubject[subj_String] :=

Module[{searchResults},

(*Database init*)

BookDatabase = <||>;

(*Searching books*)

searchResults =

Select[Normal@

openlibrary["BookSearch", {"Subject" -> subj, MaxItems -> 90}],

#["HasFulltext"] &];

(*Downloading Text*)

GeneralUtilities`MonitoredMap[AddBook, searchResults];

Print[subj <> " DOWNLOADED!"];

(*Exporting*)

Export["C:\\Users\\Tarek\\OneDrive\\Documents\\Portfolio\\opportunities\\\

Wolfram Summer Camp\\Dating Historical Texts\\" <> subj <> ".wxf",

BookDatabase];

Pause[180];

]

(*TESTING*)

In[115]:= AddSubject /@ {"Religion", "Games", "Drama", "Action", "Adventure", "Horror",

"Spirituality", "Poetry", "Fantasy"}

During evaluation of In[115]:= Religion DOWNLOADED!

During evaluation of In[115]:= Games DOWNLOADED!

During evaluation of In[115]:= Drama DOWNLOADED!

During evaluation of In[115]:= Action DOWNLOADED!

During evaluation of In[115]:= Adventure DOWNLOADED!

During evaluation of In[115]:= Horror DOWNLOADED!

During evaluation of In[115]:= Spirituality DOWNLOADED!

During evaluation of In[115]:= Poetry DOWNLOADED!

During evaluation of In[115]:= Fantasy DOWNLOADED!

Training the Neural Net

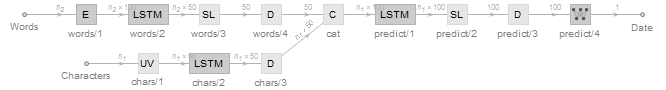

The project uses a hybrid Word-level and Character-level Recurrent Neural Network. The word-level processing is built on the GloVe model in order to compute vector representations for words. The limitation to using a Word-level only network is that most of old books include words that were not included in the training data for GloVe. Thus, adding a character-level Network Chain seems to improve the prediction accuracy since it helps process previously unseen corpora.

Define the net

In[24]:= net = NetGraph[<|

"chars" -> {

UnitVectorLayer[],

LongShortTermMemoryLayer[50],

DropoutLayer[.5]

},

"words" -> {

NetModel[

"GloVe 100-Dimensional Word Vectors Trained on Wikipedia and Gigaword 5 \

Data"],

LongShortTermMemoryLayer[50],

SequenceLastLayer[],

DropoutLayer[.5]

},

"cat" -> CatenateLayer[],

"predict" -> {

LongShortTermMemoryLayer[100],

SequenceLastLayer[],

DropoutLayer[.5],

LinearLayer[1]

}

|>,

{

NetPort["Characters"] -> "chars",

NetPort["Words"] -> "words",

{"chars", "words"} -> "cat",

"cat" -> "predict" -> NetPort["Date"]

},

"Characters" -> NetEncoder[{"Characters", characters}],

"Date" -> NetDecoder["Scalar"]

];

Create training data

In[32]:= sample[text_String, n_: 1024] :=

Module[{len, offset},

len = StringLength@text;

offset = RandomInteger[{1, len - n - 1}];

StringPadRight[

charPreprocess@

StringTake[text, {Max[1, offset], Min[len, offset + n - 1]}], n]

];

In[33]:= getSample[KeyValuePattern[{"FullText" -> text_String,

"FirstPublishYear" -> d_DateObject}]] :=

With[{s = sample[text]},

<|"Characters" -> s, "Words" -> s, "Date" -> dateToNum@d|>

];

$samples = 100000;

In[43]:= import = Flatten[Import /@ FileNames["*.wxf", $dataDir, Infinity]];

withDate = Cases[import, KeyValuePattern["FirstPublishYear" -> _DateObject]];

trainingData =

RandomSample[

Flatten@Table[getSample /@ withDate, Ceiling[$samples/Length[withDate]]],

UpTo[$samples]];

Length@trainingData

Training

results = NetTrain[

net,

trainingData,

All,

ValidationSet -> Scaled[.25],

TargetDevice -> "GPU",

MaxTrainingRounds -> Quantity[8, "Hours"],

BatchSize -> 48,

TrainingProgressCheckpointing -> {"Directory", $dataDir <>

"Trained_Networks\\", "Interval" -> Quantity[15, "Minutes"]}

];

In[66]:= trained = results["TrainedNet"];

Save trained net

In[67]:= Export["PredictTextDate.wlnet", trained]

Out[67]= "PredictTextDate.wlnet"

Testing

Testing and Results

In[25]:= CalculateAccuracy[title_String] := Module[{text, predDate, actualDate},

text = processForInput[sample[ResourceData[title]]];

actualDate = ResourceObject[title]["SourceMetadata"]["Date"];

predDate = numToDate[net[text]];

{IntegerPart[

Abs[UnitConvert[DateDifference[actualDate, predDate], "Years"]]],

actualDate, DateObject[predDate, "Year"]}

]

In[50]:= titleList = {"Friends, Romans, Countrymen", "On the Origin of Species",

"Agnes Grey", "Alice in Wonderland", "The Pickwick Papers",

"The Wheels of Chance", "Pellucidar",

"The Adventures of Huckleberry Finn", "The Emerald City of Oz",

"The Old Curiosity Shop", "Adam Bede", "A Study in Scarlet",

"Micah Clarke", "Prufrock"};

In[51]:= accuracyList = CalculateAccuracy /@ titleList;

In[52]:= resultsTable =

Dataset@SortBy[

Join[{{"Error", "Actual Date", "Predicted Date"}}, accuracyList], #[[2]] &];

In[53]:= meanAccuracy = N@Mean@accuracyList[[All, 1]]

Out[53]= Quantity[25.8571, "Years"]

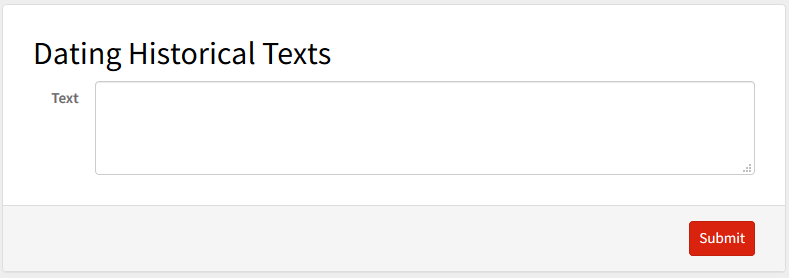

Want to test it out?

We have launched a micro-site that implements the current neural network architecture in order to allow the prediction of publication dates of an input text. Link: Dating Historical Sites Microsite

We have launched a micro-site that implements the current neural network architecture in order to allow the prediction of publication dates of an input text. Link: Dating Historical Sites Microsite

You can also try testing the code using the Wolfram Code below. Link: Download Code

Acknowledgements

This project could not have been accomplished without the support, encouragement and insight of my mentor: Mr. Richard Hennigan.

Attachments:

Attachments: