Let's try a more concrete example:

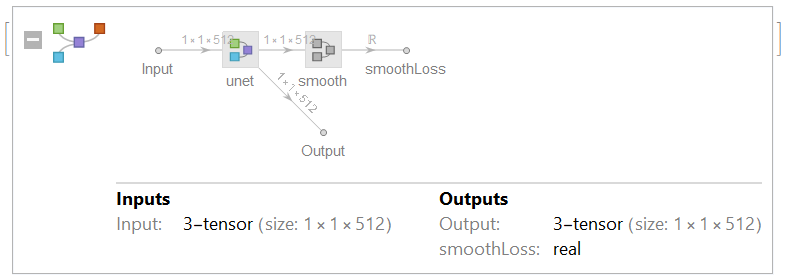

I created the following net:

It takes a 1x1x512 signal as "Input" and processes it through "unet" to produce "Output", which is the desired signal (it should resemble the input signal). This part is trained with the standard loss.

In addition, "Output" is fed into the "smooth" layer to compute "smoothLoss".

However, the "Target" ports of the two losses need different data: for "Output" the target is the input itself, and for "smoothLoss" the target should be zero.

I tried to implement it as follows:

```mathematica
testTrain = NetTrain[fullNet,
  <|"Input" -> inputDataNorm,
    "Output" -> inputDataNorm,
    "smoothLoss" -> ConstantArray[0, Length[inputDataNorm]]|>,
  LossFunction -> {"Output", "smoothLoss"}]
```

But I got messy results, and I'm sure something is wrong with that code.

Can someone please help me?