The direct approach would be to try to determine the cdf of x1 conditional on x1 > x2:

cdf = Probability[x1 <= x0 \[Conditioned] x1 > x2,

{x1 \[Distributed] NormalDistribution[mu1, sigma1],

x2 \[Distributed] NormalDistribution[mu2, sigma2]},

Assumptions -> {mu1 <= mu2, sigma1 > 0, sigma2 > 0}]

But this just returns the command. This is either because Probability doesn't know how to obtain the answer or because there is no closed-form answer. So more basic approaches are needed.

We first determine the joint pdf of x1 and x2 given that x1 > x2 and then integrate over x2 to get the pdf of x1 conditional on x1 > x2.

(* Probability that x1 > x2 *)

p = Probability[x1 > x2,

{x1 \[Distributed] NormalDistribution[mu1, sigma1],

x2 \[Distributed] NormalDistribution[mu2, sigma2]},

Assumptions -> {sigma1 > 0, sigma2 > 0}]

(* 1/2 Erfc[(mu1 - mu2)/(Sqrt[2] Sqrt[sigma1^2 + sigma2^2])] *)

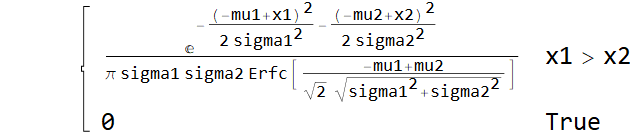

(* Joint distribution of x1 and x2 given that x1 > x2 *)

jointPDF = Piecewise[{{PDF[NormalDistribution[mu1, sigma1], x1]*

PDF[NormalDistribution[mu2, sigma2], x2]/p, x1 > x2}}, 0]

(* Integrate over x2 to get marginal pdf for x1 given that x1 > x2 *)

pdfx1 = Integrate[jointPDF, {x2, -Infinity, x1},

Assumptions -> {x1 \[Element] Reals, mu1 <= mu2, sigma1 > 0,

sigma2 > 0}]

(* (E^(-((mu1 - x1)^2/(2 sigma1^2)))*

Erfc[(mu2 - x1)/(Sqrt[2] sigma2)])/(Sqrt[2 \[Pi]] sigma1 Erfc[(-mu1 + mu2)/(Sqrt[2] Sqrt[sigma1^2 + sigma2^2])]) *)

There doesn't appear to be a nice closed form for the cdf, mean, or variance.

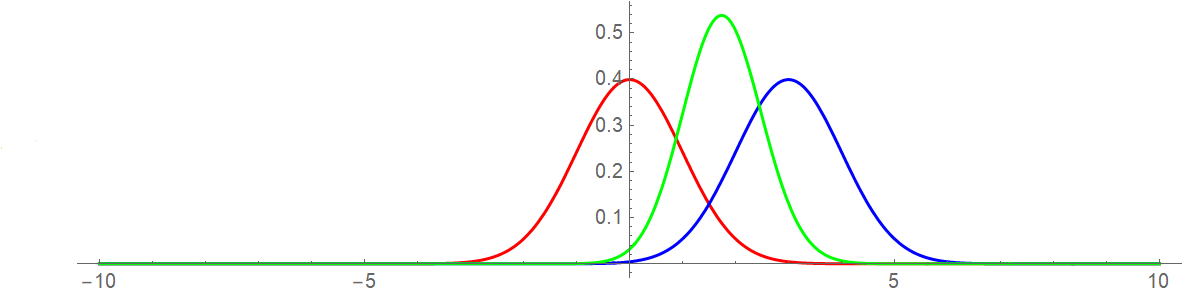

This does appear to match what you got from R:

parms = {mu1 -> 0, mu2 -> 3, sigma1 -> 1, sigma2 -> 1};

Plot[{PDF[NormalDistribution[mu1, sigma1] /. parms, x1],

PDF[NormalDistribution[mu2, sigma2] /. parms, x1],

pdfx1 /. parms}, {x1, -10, 10},

PlotStyle -> {Red, Blue, Green}, PlotRange -> All, AspectRatio -> 1/4]