It looks like version 13 changed what can be compiled in a FunctionLayer.

In 12.3, I had to write some ugly code to get the network that converts anchor boxes to output boxes to compile in a FunctionLayer, so before it looked like this:

    FunctionLayer[Apply[Function[{anchorsIn, grid, conv}, Block[
      (* This is a really bad function because in 12.2, FunctionLayer was not compiling nice functions. *)
      (* ... *)
      (* We assume the net has been reshaped to dimensions n x Anchors x dim x dim. *)
      {
        boxes = conv[[1 ;; 4]],
        classPredictions = conv[[6 ;;]],
        confidences = LogisticSigmoid[conv[[5]]]
      },
      (* We first need to construct our boxes to find the best fits. *)
      Block[{
        boxesScaled =
          Join[((1.0/(inputSize/32.0)*Tanh[boxes[[1 ;; 2]]] // TransposeLayer[{2 <-> 3, 3 <-> 4}]) +
            (grid // TransposeLayer[{1 <-> 3, 3 <-> 2}]) // TransposeLayer[{4 <-> 2, 3 <-> 4}]),
            (Transpose @ (5*LogisticSigmoid[ boxes[[2 ;; 3]]]) * anchorsIn // Transpose)]},
        <|
          "boxes" -> boxesScaled,
          "confidences" -> confidences,
          "classes" -> classPredictions
        |>
      ]]]]]

In 13, it seems that nested Block/Module constructs no longer compile inside a FunctionLayer, but the expression can now be simplified to a single Module without compilation errors:

    FunctionLayer[Apply[Function[{anchorsIn, grid, conv}, Module[
      (* We assume the net has been reshaped to dimensions n x Anchors x dim x dim. *)
      {
        boxes = conv[[1 ;; 4]],
        classPredictions = conv[[6 ;;]],
        confidences = LogisticSigmoid[conv[[5]]]
      },
      (* We first need to construct our boxes to find the best fits. *)
      <|
        "boxes" -> Join[((1.0/(inputSize/32.0)*Tanh[boxes[[1 ;; 2]]] // TransposeLayer[{2 <-> 3, 3 <-> 4}]) +
          (grid // TransposeLayer[{1 <-> 3, 3 <-> 2}]) // TransposeLayer[{4 <-> 2, 3 <-> 4}]),
          (Transpose @ (5*LogisticSigmoid[ boxes[[2 ;; 3]]]) * anchorsIn // Transpose)],
        "confidences" -> confidences,
        "classes" -> classPredictions
      |>
    ]]]]
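For anyone who wants to check the single-Module pattern without the full detector, here is a minimal toy sketch in the same style. The layer name `toy`, the local variable `gate`, and the input values are all made up for illustration and are not part of the Wolf Detector code. I have only reasoned through this against the behavior described above, so treat it as an assumption that it compiles the same way on your version.

```wolfram
(* Hypothetical toy layer: one Module, no nesting, same Apply[Function[...]] shape as above. *)
toy = FunctionLayer[Apply[Function[{a, b},
    Module[{gate = LogisticSigmoid[a]},
      (* Elementwise gate on b, plus a Tanh term on a. *)
      gate*b + Tanh[a]
    ]]]];

(* Version 13 calling convention: both inputs are passed as a single list. *)
out = toy[{{0., 1., 2.}, {1., 1., 1.}}]
```

If this fails to compile, moving the Module body inline (no local variables at all) is a quick way to narrow down whether scoping is the problem.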

Lastly, it seems the convention for calling ThreadingLayer and FunctionLayer with multiple arguments changed.

Before, you could do

ThreadingLayer[...][x, y]

but in 13 you need to do:

ThreadingLayer[...][{x, y}]
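As a concrete instance of the list form, here is a small sketch with a ThreadingLayer that adds two vectors. The variables `x`, `y`, `layer`, and `sum` and their values are arbitrary choices for illustration.

```wolfram
(* Two small example vectors; the values are arbitrary. *)
x = {1., 2., 3.};
y = {10., 20., 30.};

(* Version 13 convention as described above: pass all inputs as one list. *)
layer = ThreadingLayer[Plus];
sum = layer[{x, y}]
```

The result should be the elementwise sum of the two vectors.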

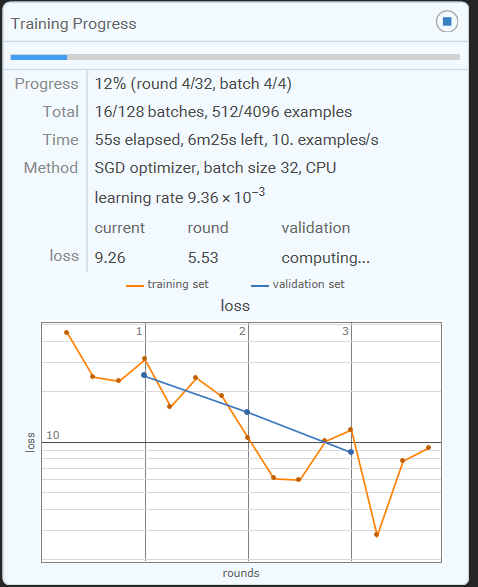

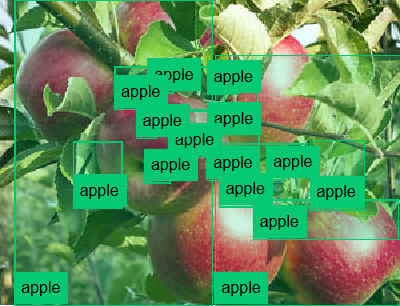

And now it works again:

I have pushed these changes to the Wolf Detector GitHub repo, so the code there probably no longer works in older versions, but it now works in 13.2. It is also worth noting that 13 added the built-in function TrainImageContentDetector, but I don't know what algorithm it uses. Maybe someone from WRI can chime in on that?