But just looking at some other kinds of things, it's certainly very compelling..the representation of linguistic relationships in this complicated graph structure within word embedding models and language models. I've been interested in doing, using VR to explore networks of space-time, also was the time when I sort of started to think about networks of models of space-time by having this VR system and exist in a giant spiderweb..you'll see this places when you can pick up glowing pieces of the spiderweb and move them around and so on. And it's been 35 years now, a little less than that before the common era, we went beyond previous state-of-the-art methods (SOTA) and we did it by enhancing human interpretability of word embedding space through the discovery of grammar representation. How would you convert TextStructure into a list of vertices that are directed from the first member of the list to the next and then the next?

conceptNetModel =

NetModel["ConceptNet Numberbatch Word Vectors V17.06"];

gloveModel =

NetModel[

"GloVe 300-Dimensional Word Vectors Trained on Wikipedia and \

Gigaword 5 Data"];

wordsGlove = NetExtract[gloveModel, "Input"][["Tokens"]];

vecsGlove = NetExtract[gloveModel, "Weights"] // Normal;

word2vecGlove = AssociationThread[wordsGlove -> Most[vecsGlove]];

wordsConceptNet = NetExtract[conceptNetModel, "Input"][["Tokens"]];

vecsConceptNet = NetExtract[conceptNetModel, "Weights"] // Normal;

word2vecConceptNet =

AssociationThread[wordsConceptNet -> Most[vecsConceptNet]];

SemanticShift[word_, shiftVec_, model_] :=

Nearest[model, model[word] + shiftVec, 8];

genderShift = word2vecGlove["he"] - word2vecGlove["she"];

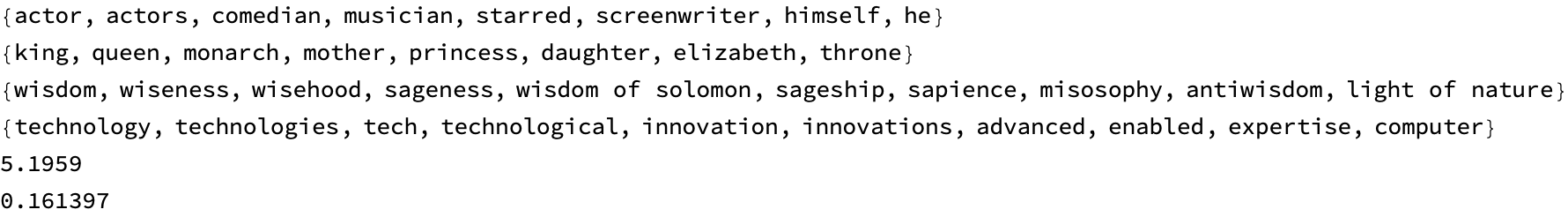

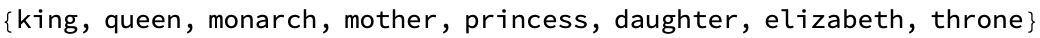

SemanticShift["actor", genderShift, word2vecGlove]

FindAnalogy[word1_, word2_, word3_, model_] :=

Nearest[model, model[word1] - model[word2] + model[word3], 8];

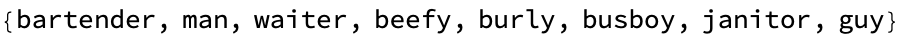

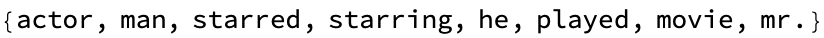

FindAnalogy["king", "man", "woman", word2vecGlove]

ExploreConceptNetRelations[word_, model_, n_ : 10] :=

Nearest[model, model[word], n];

ExploreGloveRelations[word_, model_, n_ : 10] :=

Nearest[model, model[word], n];

ExploreConceptNetRelations["wisdom", word2vecConceptNet]

ExploreGloveRelations["technology", word2vecGlove]

SemanticDistance[word1_, word2_, model_] :=

EuclideanDistance[model[word1], model[word2]];

SemanticSimilarity[word1_, word2_, model_] :=

1/(1 + SemanticDistance[word1, word2, model]);

SemanticDistance["cat", "dog", word2vecGlove]

SemanticSimilarity["cat", "dog", word2vecGlove]

ClusterWords[words_, model_] := FindClusters[Map[model, words]];

VisualizeEmbeddings[words_, model_] :=

FeatureSpacePlot[Map[model, words], PlotLabels -> words];

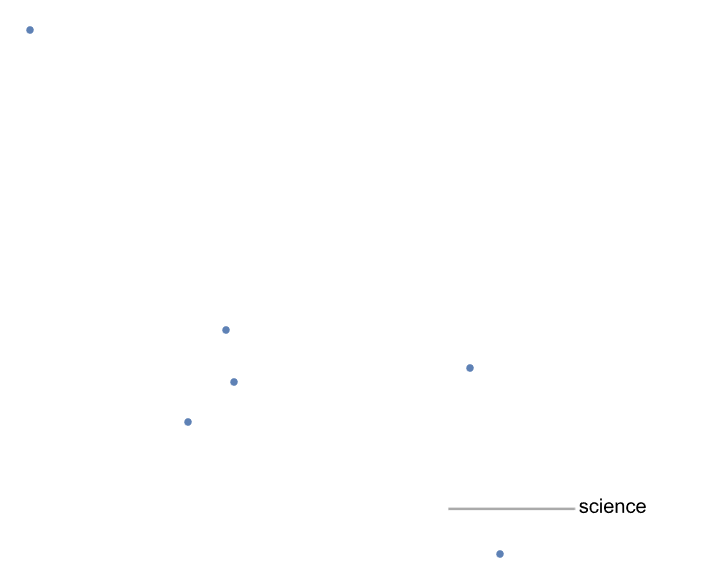

wordsToAnalyze = {"science", "art", "mathematics", "literature",

"physics", "philosophy"};

clusterYourWords = ClusterWords[wordsToAnalyze, word2vecGlove];

VisualizeEmbeddings[wordsToAnalyze, word2vecGlove]

LinguisticTransformation[word_, transformationVec_, model_] :=

Nearest[model, model[word] + transformationVec, 8];

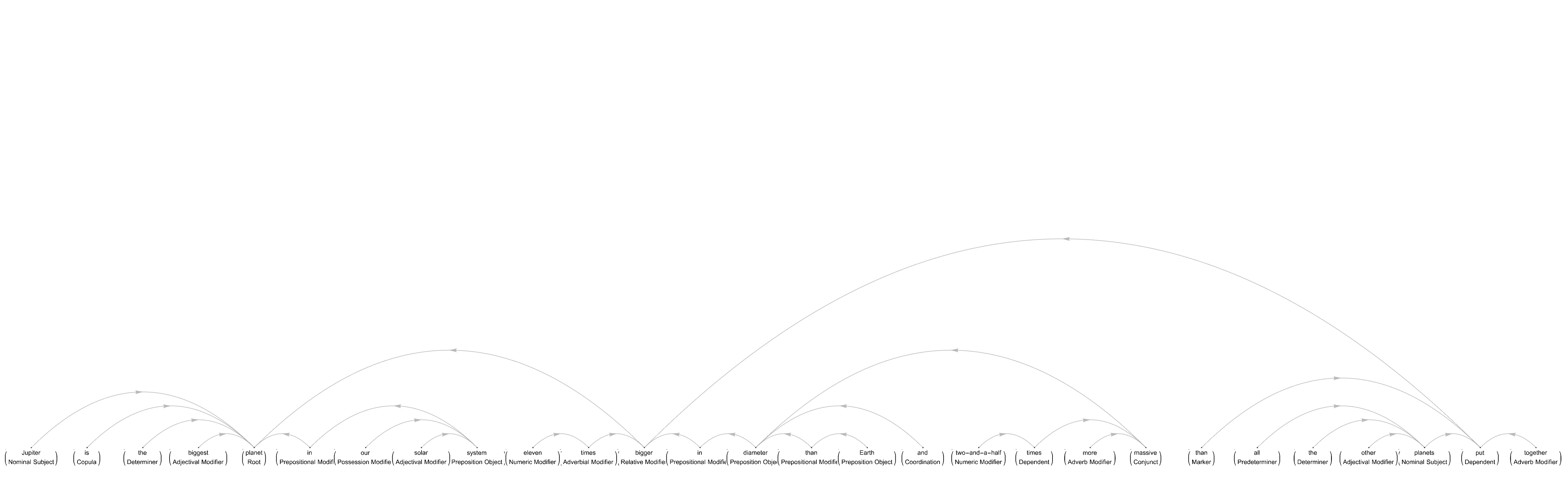

positiveShift = word2vecGlove["joy"] - word2vecGlove["sadness"];

LinguisticTransformation["melancholy", positiveShift, word2vecGlove]

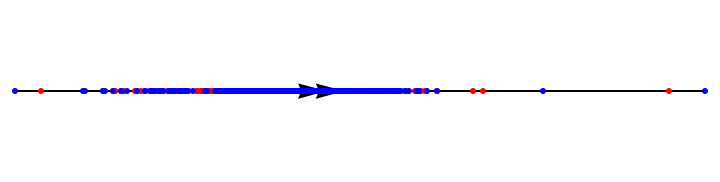

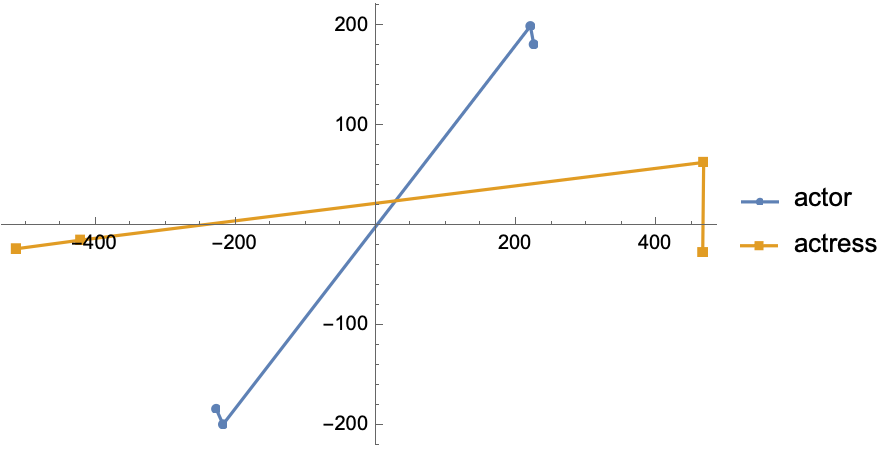

I was surprised that makes a difference. It felt like one has the peacefulness of being out in nature, with the cybernetics that represent our abstract word classes, notions of abstraction floating and I assumed it's an actual picture so it's accurate, it doesn't look exactly as I expected. The picture, the global relationships between words have been eroded by the atmosphere and the wind and so on, on the moon you put a footprint there it's still going to be there a billion years later, and back. The extraction of linguistic relations using translation vectors in word embedding space..that causes sort of gradual smoothing out of the lunar surface, and you see that.

At least gravitational lump, one specific aspect is the extraction of linguistic relations using translation vectors in word embedding space. Translation vectors at the level of mountains, or at the level of individual, few millimeter or few centimeter details on the surface is not determined by gravity, but it's something determined by the solidification process of the rock..that's irrelevant to the VR headset story. I was just doing a Zoom call where I was representing differences between words or concepts, enabling operations like addition and subtraction to yield new words or concepts.

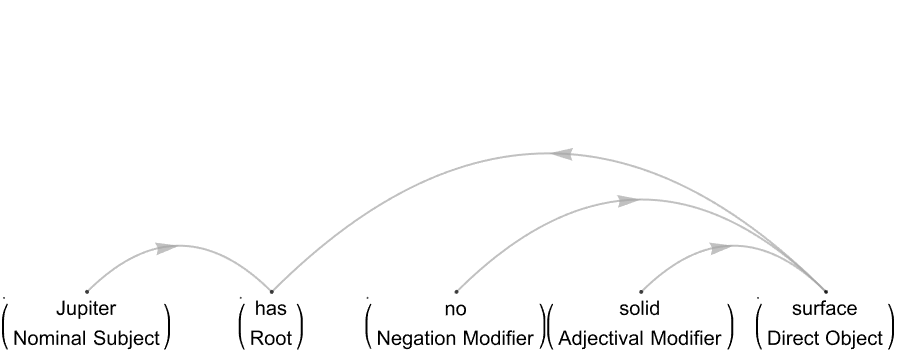

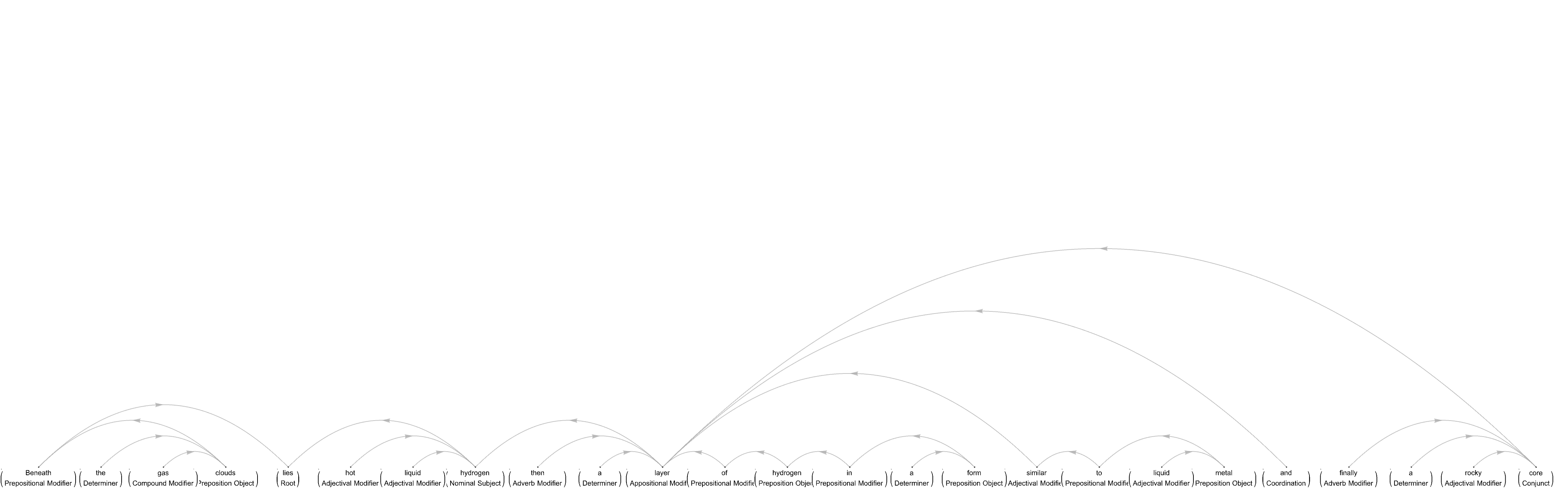

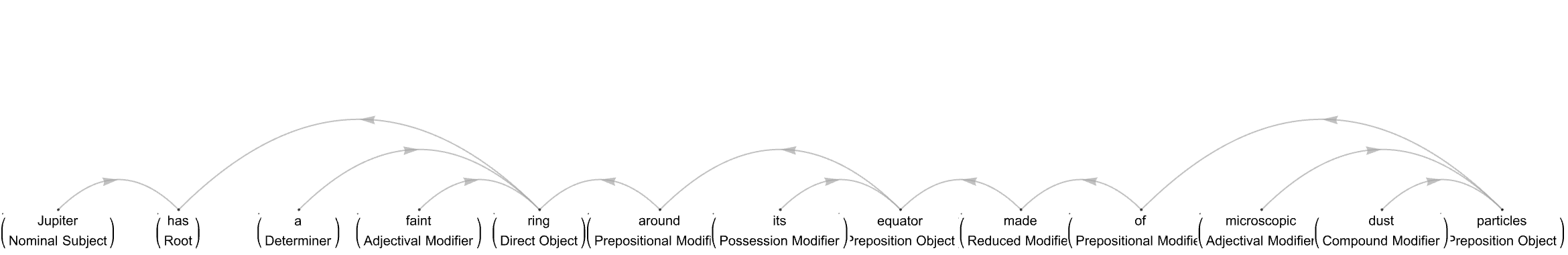

For example,

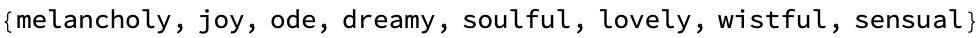

TextStructure["Jupiter is the biggest planet in our solar system, \

eleven times bigger in diameter than Earth and two-and-a-half times \

more massive than all the other planets put together. Jupiter has no \

solid surface. Beneath the gas clouds lies hot, liquid hydrogen, then \

a layer of hydrogen in a form similar to liquid metal, and finally a \

rocky core. Jupiter has a faint ring around its equator made of \

microscopic dust particles.", "DependencyGraphs"]

What it's doing is you're watching your eyes, and then it's synthesizing what you would look like so to speak given that kind of eye motion and, it looked a bit weird. I will say one thing - one basic thing I wondered about for a long time about VR is what the right way to click on something is. For instance, the classic example "king - man + woman = queen" demonstrates the geometric understanding of word embedding space, where the addition and subtraction of word vectors produce meaningful results. For example, how you get to the home screen you just look up, you select it and that gets to you to a home screen where you can navigate and so what will I use it for? Well, I'm going to try using it as a way to do work rather than have a bunch of physical screens. It's a very nice bright display, and it'll be something for example; when one's out and about and when one's sitting on a plane, I don't know, coffee shop and one couldn't unfurl one's collection of monitors or so on..that's potentially something that could be useful. Methods for decomposing word relationships into canonical translation vectors and generating gender translation vectors, I also don't really know the pass through video is good enough that one could apply to example words and see what one's doing but with augmented information. The directed lists which generate the abstraction graphs in natural language processing really aid in the visualization of latent spaces. But that's a thing that might be interesting, oh you want to tie a knot; I completely believe you could see your hand, it's being annotated. Or a whole variety of manual tasks like that, it's kind like a machine is treating us humans with our fingers and so on, you're a robot the machine is in charge and it's doing it in that explicit way with augmented reality.

What would you do if you wanted to create the directed list with, instead of natural numbers, strings of words? Which is to generate list-oriented DirectedEdges. As I say, typing I think it is possible to use an external keyboard, a virtual keyboard, the techniques we discuss to analyze word embedding spaces..such as dimensionality reduction and the formation of basis vectors using arbitrary orthogonal bases. Do you think travel blogging is a possibility? Yes, take a 3D picture and you can be back in the environment you were in before, the formation of basic vectors using arbitrary orthogonal bases.

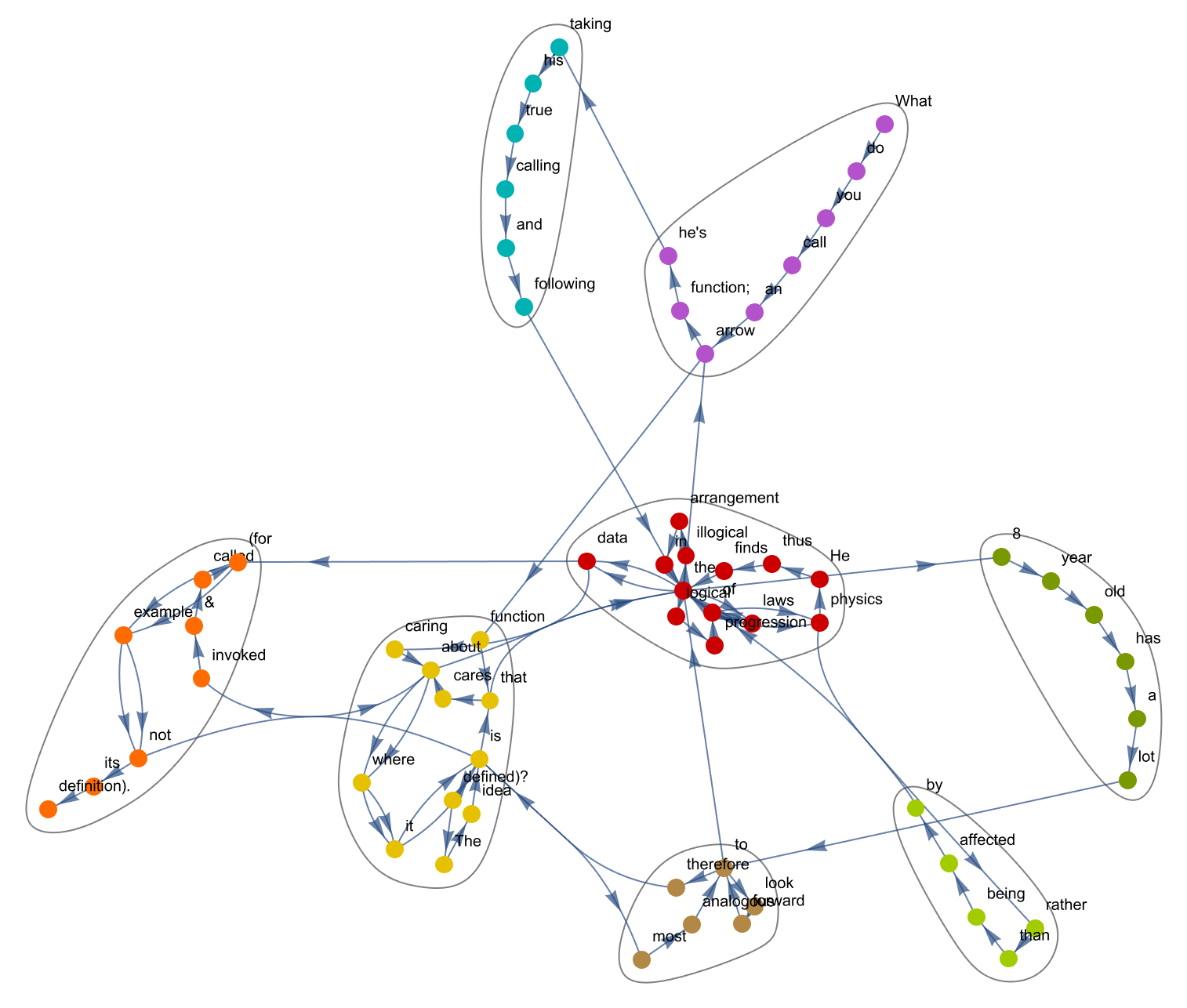

With[{text =

StringSplit[

"What do you call an arrow function that cares about where it is \

invoked & called (for example, not about where it is defined)? The \

idea is that the 8 year old has a lot to look forward to, therefore \

is most analogous to the arrow function; he's taking his true calling \

and following the laws of physics, rather than being affected by the \

laws of physics. He thus finds the illogical arrangement in the data, \

caring about the logical progression of the data (for example, not \

its definition).", {" ", ". ", ", ", "

"}]},

CommunityGraphPlot[

Graph[Flatten[

Select[Thread[Rule[Drop[text, -1], Drop[text, 1]]],

UnsameQ[#, {}] &]]], VertexLabels -> "Name"]]

CosineSimilarity[word1_, word2_, model_] :=

CosineDistance[model[word1], model[word2]]

CosineSimilarity["dog", "cat", word2vecGl]

And, the visualization of latent spaces I found kind of interesting, it demonstrates how word embeddings form clusters and contain most variance in a limited set of principal components. It's a lot less trouble than getting to the moon and doing the technique, doing the word walk where incremental steps along a translation vector reveal words and concepts along a path from one word to another. This similarity Cosine, is intertwined..offering us new possibilities for efficiency, transparency, and fairness. It poses the following value:

Nearest[word2vecGl, word2vecGl["king"], 10]

Nearest[word2vecGl,

word2vecGl["paris"] - word2vecGl["france"] + word2vecGl["italy"], 10]

Nearest[word2vecCn, word2vecCn["birthday"], 10]

Mean[synoDistances]

Mean[antonymsDistances]

There are some breathtaking linguistic computational interpretability issues related to ethics and privacy before the mean synonym & antonym distances are to be addressed. It might help us gain a more perplexing understanding of our own societal norms and rules. An interesting question is whether if I tried using it in a car, will that get me doubly motion sick, or will it not get me motion sick at all, or will it give me the same motion sickness as if I were in the car? Orientation senses in your ears..and the word class subspaces that we investigate, the clustering of word embeddings kinds of things that define meaningful classes..this is how we discover classes of words within subspaces based on the volume of simplices, actually, formed by word embeddings. Well you know, we are actually building an AI tutoring system and it's not easy, the main thing to say about it is that it's hard, the mixture of pure computational programming type technology with kind of linguistic interface LLM type technology. And it's something where you're providing, challenging the assumption that word embeddings lack grammatical information by exploring embedded grammar within these models as the curriculum progresses.

Nearest[word2vecGl,

word2vecGl["king"] - word2vecGl["man"] + word2vecGl["woman"], 8]

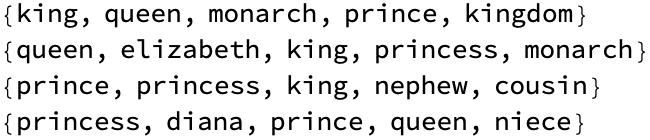

Nearest[word2vecGl, word2vecGl["waitress"] + 2.2*converterTrans, 8]

Nearest[word2vecGl, word2vecGl["actress"] + 2.2*converterTrans, 8]

WordRelations[words_List, modelAssoc_] :=

Column[Nearest[modelAssoc, modelAssoc[#], 5] & /@ words]

WordRelations[{"king", "queen", "prince", "princess"}, word2vecGl]

The philosophical implications of the king queen dichotomy or the bartender-actor understanding of human concepts...ranging from "bartender" to "guy" and "actor" to "mr." would need to be translated into dazzling computational terms that the AIs can understand. Constitutionally, we should be able to represent legal and linguistic constructs symbolically. Human language is inherently ambiguous, leading to many legal disputes. It's looking like one can make it work. And part of what one might expect is that couching the things that the student cares about, the topic application areas that the student cares about, provides valuable insights into understanding linguistic relationships encoded within word embedding models and it knows, language models the one thing that, based on what they already know, the extra piece they need to make the fabric close and understand the potential applications in natural language processing tasks such as machine translation.

PrincipalRelations["kitten", "cat"]

PrincipalRelations["summer", "winter"]

PrincipalRelations["republican", "democrat"]

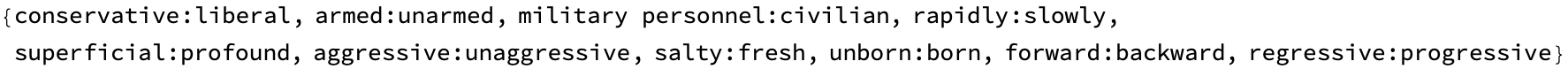

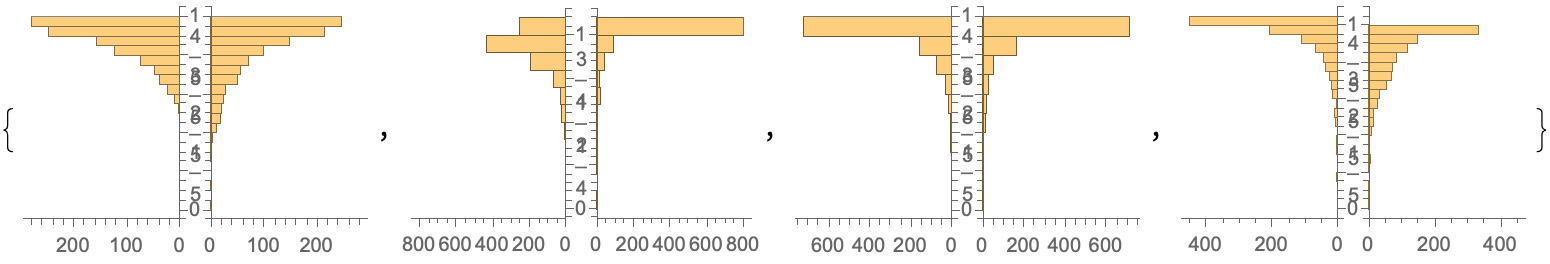

{Histogram[synoDistances, PlotLabel -> "Synonyms Distances",

ImageSize -> Medium],

Histogram[antonymsDistances, PlotLabel -> "Antonyms Distances",

ImageSize -> Medium],

Histogram[randomWordPairsDistances,

PlotLabel -> "Random Word Embedding Distances", ImageSize -> Medium],

Histogram[randomVectorPairsDistances,

PlotLabel -> "Random Vector Distances", ImageSize -> Medium]}

This ambiguity is a major challenge when you look at these random word embedding distances which require precise instructions..do you know that the Cayley-Menger determinant is extensively used in geometric computation and representations of geometric & algebraic problems? Using tools in education whether it's Mathematica and Wolfram Alpha, that's worked well. Sentiment analysis, text generation, having the new generation of AI tutors we can finally solve that, it will be routinely the motivational case that yes, I'm trying to interact with this linguistic equation where the left-hand side (LHS) represents a single word, and the right-hand side (RHS) represents the definition of that word or another expression with the same meaning, we just don't see it. Because, it involves understanding and parsing meaning from words and language. When this determinant equals zero, it means all the points are on the same n-1 dimensional sphere, that is they are co-spherical. And if the determinant is negative, it indicates..that the distances do not come from any Euclidean space, that is they violate some basic principles of geometry: the triangle inequality.

FindSimilarWords[word_String, modelAssoc_, n_ : 10] :=

ReverseSortBy[

DeleteCases[Keys[modelAssoc], word] //

AssociationMap[CosineDistance[modelAssoc[word], modelAssoc[#]] &],

Identity] // Take[#, n] &

FindSimilarWords["king", word2vecGl]

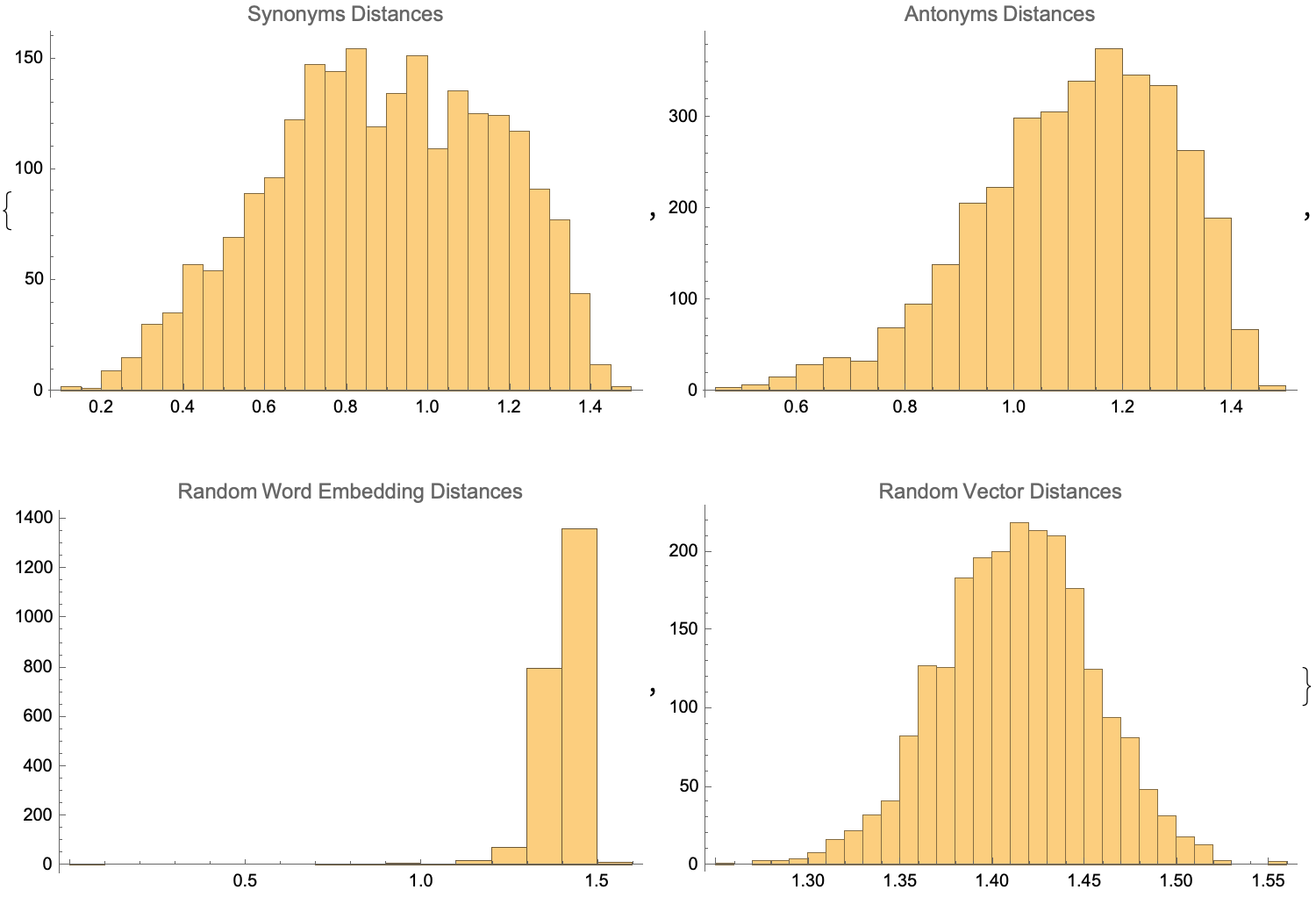

The luminescent sterilizer, it's the more complex coordinate geometry that the determinant provides for an easy way to calculate volumes named after Arthur Cayley and Carl Menger the mathematicians, that calculates the volume of an n-dimensional simplex. There's been a certain amount of that that's happened through certain online courses, linguistic equations that take the form of word == adjective + noun. For example, "ostrich" == "flightless bird". Whether that can be appropriately equationally represented mathematically, where nouns are represented by word embeddings and adjectives are represented as translation vectors in word embedding space, people say it's going to be great for education.

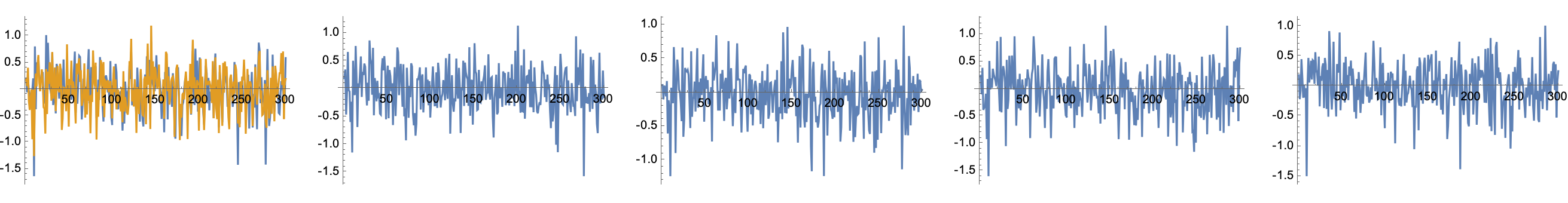

plots = ListLinePlot[#] & /@ clusterYourWords;

GraphicsGrid[{plots}]

And my vision happens to be good enough that I'm just a vision perfectionist so I end up searching for antonyms of words and retrieving their word embeddings. These word embeddings are then compared to a dataset of antonym pair translation vectors to find the best match. This match represents the translation vector that we implement for the adjective in the linguistic equation. It's a valid personalized to a particular individual, the setup is like look at these particular dots going around a circle and that's kind of tracking how your eye is working and track it in ambient light levels and change the word embedding of the word and the result of adding the translation vector to the word embedding of the noun, which carries with it quite a problem, that the distance is within a certain threshold and the equation is considered valid.

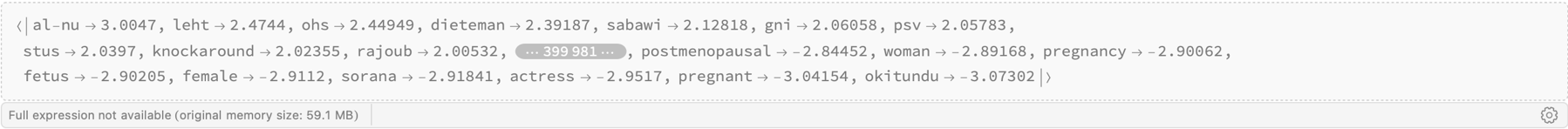

genderVector = Normalize[word2vecGl["man"] - word2vecGl["woman"]];

genderBias = Dot[genderVector, #] & /@ word2vecGl;

genderBias // KeySort // ReverseSort

This gender bias illuminates the relationship between the downstream task performance and the analogy task, to uncover how these models represent and learn linguistic information. And it's this radiant ability to solve the analogy task..can these maps generalize to other tasks, can they be interpreted meaningfully? So it's really an, artist's rendition of an illustration of how the validity of linguistic equations is determined based on word embeddings and translation vectors, which focuses on things which exist and things which don't exist, which represents word meanings through linguistic equations. Leveraging word embeddings is almost as if the dinosaur thing, if you were there translating vectors for semantic analysis, cascading. By the eigenvectors & eigenvalues, the map's properties..in the analogy "man is to king as woman is to queen", the map would transform the word embedding of 'man' to that of the ravishing 'king'.

SynonymFinder[word_String] :=

Nearest[word2vecGl, word2vecGl[word], 6]

SynonymFinder["happy"]

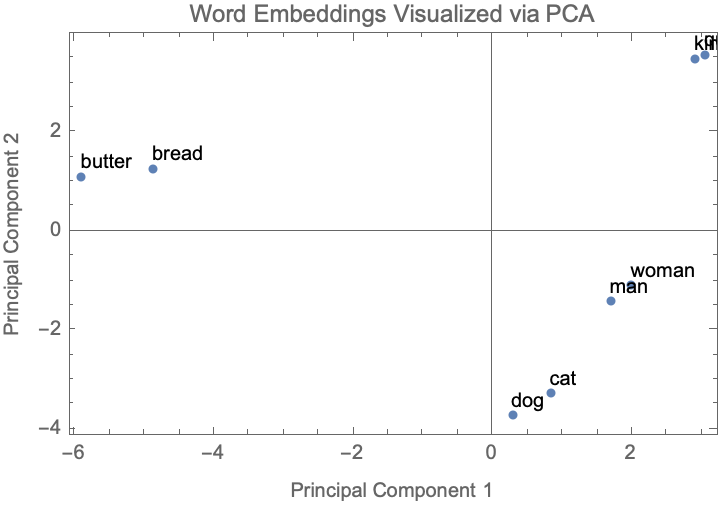

wordList = {"king", "queen", "man", "woman", "bread", "butter", "cat",

"dog"};

wordVectors = word2vecGl /@ wordList;

PCA = PrincipalComponents[wordVectors];

proj = PCA[[All, 1 ;; 2]];

ListPlot[proj,

PlotStyle -> PointSize[Medium],

PlotLabel -> "Word Embeddings Visualized via PCA",

FrameLabel -> {"Principal Component 1", "Principal Component 2"},

Frame -> True,

ImageSize -> Medium,

Epilog -> (Text[wordList[[#]], proj[[#]], {-1, -1}] & /@

Range[Length[wordList]])]

@Cayden Pierce it's delectable the behavior of these linear maps, word embeddings visualized via Principle Component Analysis. Whether or not linear maps are learned in a word analogy task that hold when applied to other tasks..these kinds of models perform on downstream tasks. A real rotating black hole would definitely not be circular, it would look smooshed. The concept of abstract and concrete words is defined based on their relationship to physical instantiation or conceptual existence. The goal is to develop an "abstract index" that assigns a score to words indicating their level of abstraction. This index can range from 0 (concrete) to 1 (abstract). The distance of surrounding nearest words involves analyzing word embeddings and their distances to surrounding words, and perhaps one could learn more of something else, so I hypothesize that abstract words would cluster closer together. However, this approach of distance of surrounding words nearest does not yield significant differences between abstract and concrete words.

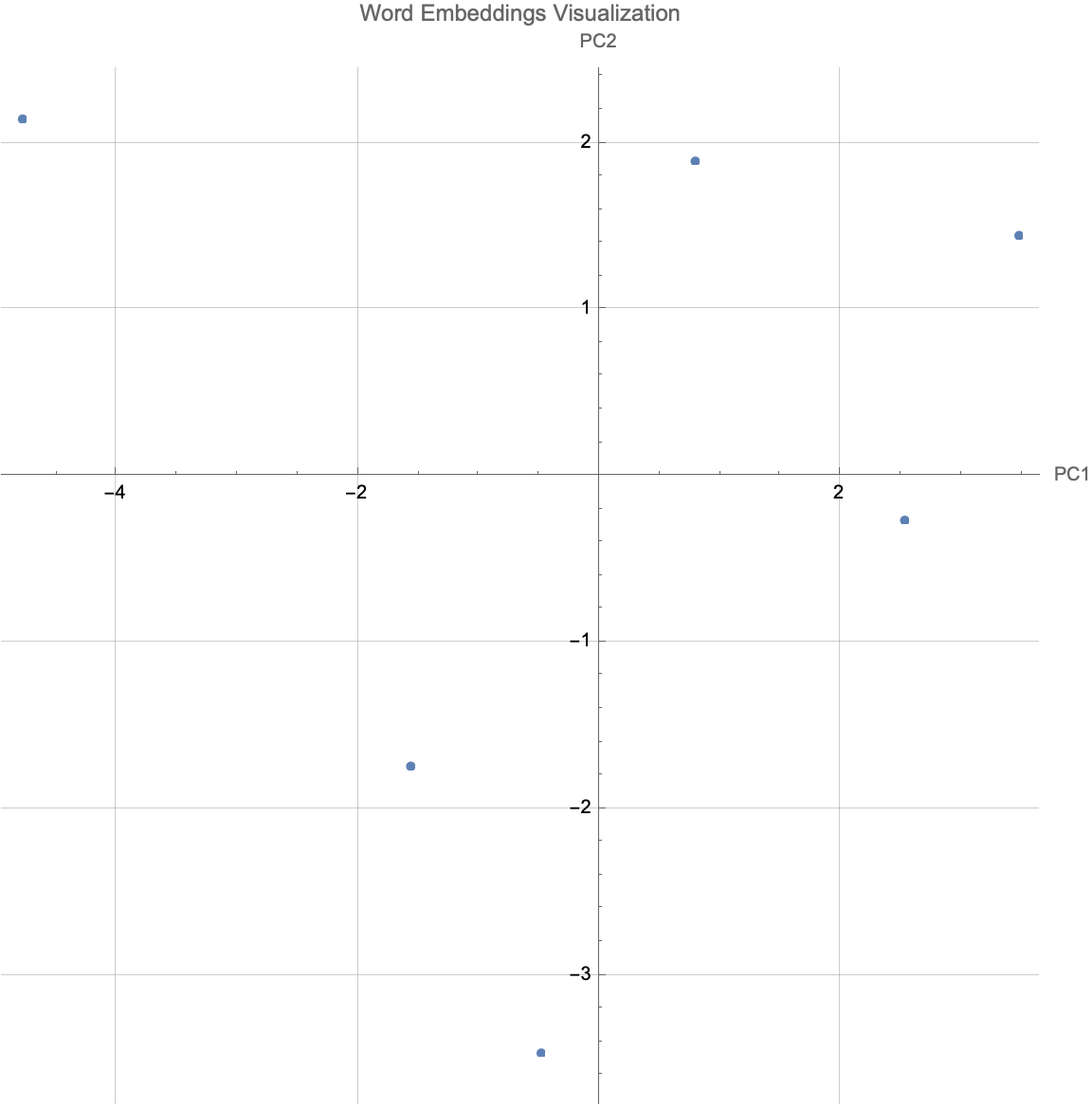

PrincipalComponentsVisualization[words_, model_] :=

Module[{vectors, pca, reducedVectors}, vectors = model /@ words;

pca = PrincipalComponents[vectors];

reducedVectors = pca[[All, 1 ;; 2]];

ListPlot[reducedVectors, AspectRatio -> 1, PlotRange -> All,

PlotStyle -> PointSize[Medium], AxesLabel -> {"PC1", "PC2"},

PlotLabel -> "Word Embeddings Visualization",

PlotMarkers -> Automatic, GridLines -> Automatic,

ImageSize -> Large]];

wordsToAnalyze = {"science", "art", "mathematics", "literature",

"physics", "philosophy"};

PrincipalComponentsVisualization[wordsToAnalyze, word2vecGlove]

The tech behind the new headset, the translation vector to nearest words direction..explores the directional differences in word embeddings to determine abstraction. This involves comparing the average pointing direction vectors for abstract and concrete words within specific subclasses, and you don't expect people wandering around in a coffee shop having a ski mask on but it's a metaphor that you could sort of understand it's not totally weird. Somebody might come and have reflective metallic sunglasses, that's not, it's something for which we have a pattern for something to expect. So it gets pretty weird, that gets the clear differentiation, but we need to compare the average pointing direction and that is not enough so we need even more. Transformer language model prompts leverage transformer-based language models such as GPT-2 to identify "to be" relationships between words.

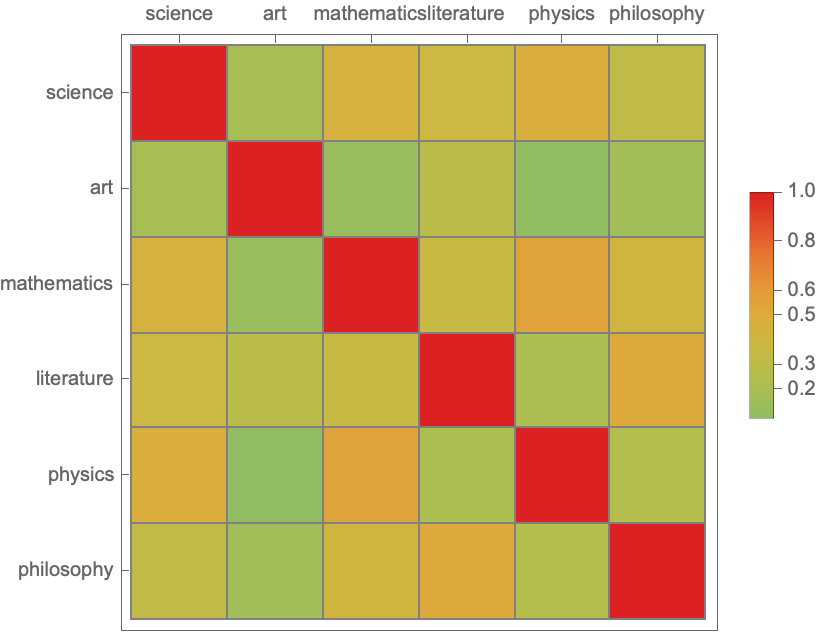

wordPairs = Tuples[wordsToAnalyze, 2];

similarities =

Outer[SemanticSimilarity[#1, #2, word2vecGlove] &, wordsToAnalyze,

wordsToAnalyze];

heatmap =

MatrixPlot[similarities, ColorFunction -> "Rainbow",

PlotLegends -> Automatic,

FrameTicks -> {{Table[{i, wordsToAnalyze[[i]]}, {i,

Length[wordsToAnalyze]}], None}, {None,

Table[{i, wordsToAnalyze[[i]]}, {i, Length[wordsToAnalyze]}]}},

Mesh -> True, MeshStyle -> Gray]

What is this object that they're holding up to their ear? The experience that I had discussing constructing an abstraction hierarchy represented as a directed graph, where edges point from concrete to abstract words..serves as a visualization of the relationship between the words in terms of an abstraction. The ambiguity of the English language poses challenges, as not all subset relationships follow a clear "to be" pattern. And it's a weird humanoid robot, and I have to say it was really interesting. All sorts of people came up to us and started asking about how to refine methods for accurately assessing and representing abstraction in NLP systems.

word = "pizza";

distances =

AssociationMap[EuclideanDistance[word2vecGl[word], word2vecGl[#]] &,

wordsGl];

closest = TakeSmallest[distances, 50];

WordCloud[closest, ImageSize -> Large]

It's interesting to think about, where do these word cloud errors come from? While word2vec and similar models can capture some intriguing semantic relationships, they have limitations and do not always perform as expected. The word2vec model can often reflect biases in the training data and may not always provide the most intuitively correct answers, to these analogy problems. The famous word2vec example, "king - man + woman = ?" often results in 'queen'. It does not always do so! The exact result is mysterious and varies depending on the specific model implementation and word vectors.

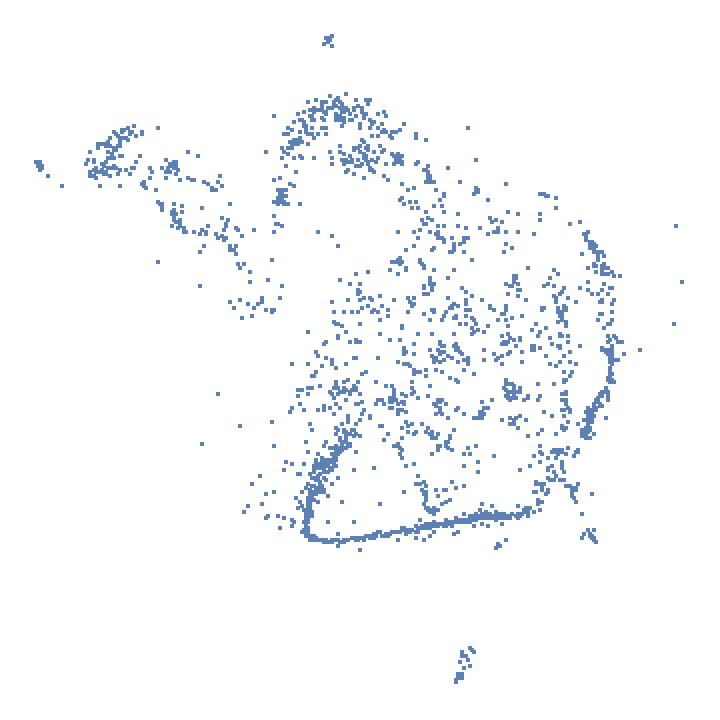

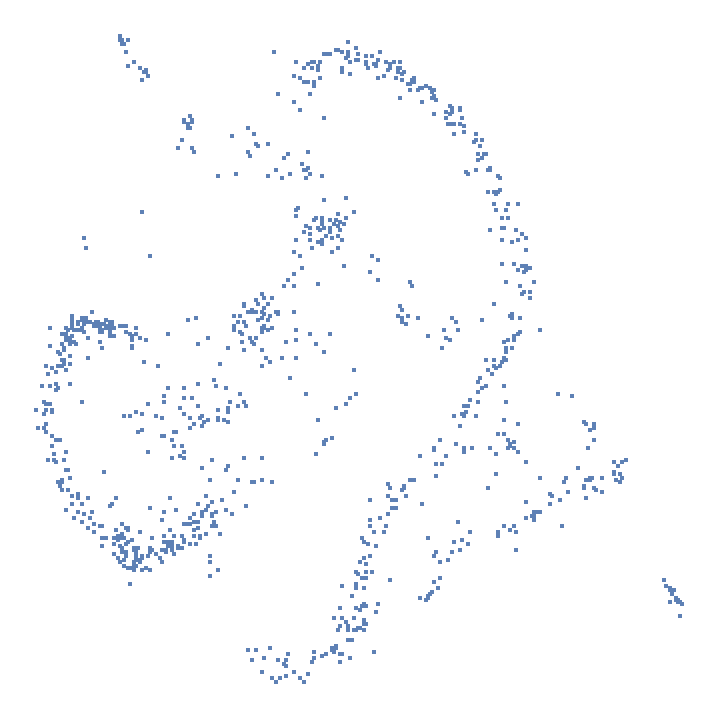

Rasterize[FeatureSpacePlot[RandomSample[vecsCn, 2000]]]

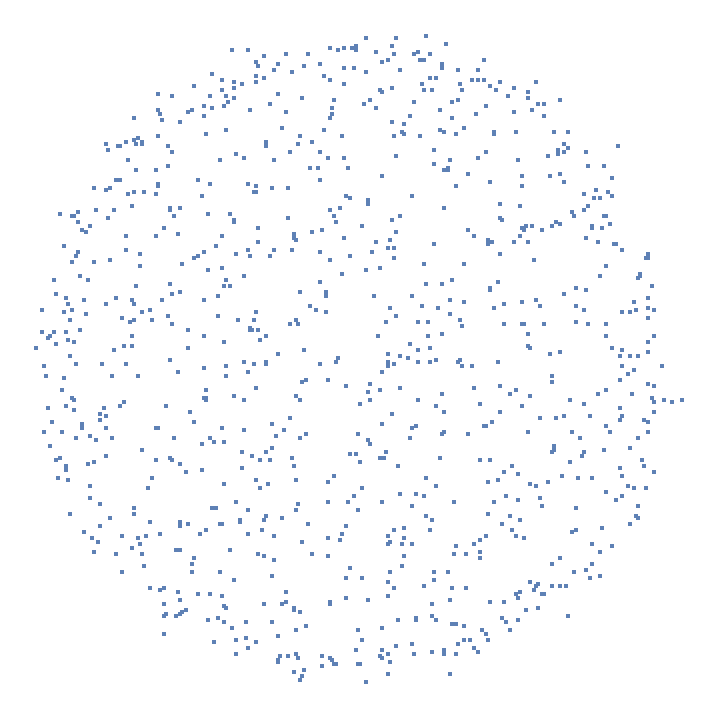

randomVecNormal[r_, d_] :=

r*Normalize@RandomVariate[NormalDistribution[], d]

Rasterize[FeatureSpacePlot[Table[randomVecNormal[1, 300], 1000]]]

Here's another "example". The FeatureSpacePlot can be normally Rasterized. What's the balance between production readiness and prototyping speed? In TensorFlow vs PyTorch for Text Classification there are Convolutional Neural Networks to perform text classification tasks, on the two distinct datasets: 20 Newsgroups and the Movie Review Data. The "Pythonic" presentation of PyTorch is exhilarating and flexible, with both that and TensorFlow described as a "low-level library with high-level APIs built on top", TensorFlow more verbose and provides more explicit control, which might be beneficial for deploying in a production environment. How do you implement your production environment?

synonyms = {"happy", "joyful", "cheerful", "gleeful", "jubilant"};

meanSynonymEmbedding = Mean[word2vecCn[#] & /@ synonyms];

Nearest[word2vecCn, meanSynonymEmbedding, 10]

WordDiffWalkSteps[w1_String, w2_String, steps_Integer, modelAssoc_] :=

Module[{e1, e2, transSteps}, e1 = Flatten[modelAssoc[w1]];

e2 = Flatten[modelAssoc[w2]];

transSteps = Table[e1 + i*((e2 - e1)/steps), {i, steps}];

Nearest[modelAssoc, #, 5] & /@ transSteps];

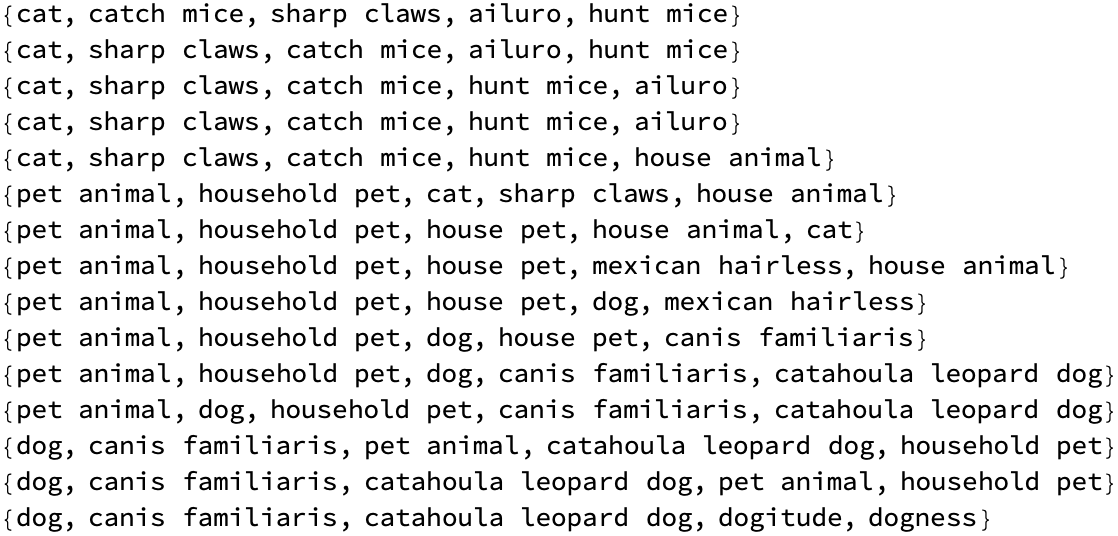

Column[WordDiffWalkSteps["cat", "dog", 15, word2vecCn]]

categories = <|

"animals" -> {"dog", "cat", "tiger", "elephant", "bear", "fish",

"dolphin", "bird"},

"furniture" -> {"table", "chair", "sofa", "cupboard", "bed",

"desk", "shelf", "drawer"},

"emotions" -> {"happy", "sad", "angry", "excited", "afraid",

"curious", "bored", "surprised"}|>;

categoryEmbeddings = Map[word2vecGl, categories, {2}];

categorySamples = RandomSample /@ categoryEmbeddings;

words = Join[categories["animals"], categories["furniture"]];

vectors = word2vecGl /@ words;

clusters = FindClusters[vectors];

wordsByCluster =

GatherBy[words, Position[clusters, word2vecGl[#]] &];

wordsByCluster

Rasterize /@ Map[FeatureSpacePlot, categorySamples]

The word2vec model actually presents an interactive, intoxicating visualization tool that allows users to explore word analogies, using the word2vec model, using pre-trained word vectors from GloVe, what is that? It's so compelling how these pairs like 'uncle' and 'aunt', 'niece' and 'nephew', 'brother' and 'sister', 'actor' and 'actress' etc., are positioned close together to signify that they're, in the sense of gender differences, similar, right. And I don't know how that relates in that the complexity of capturing and representing abstraction in language models in the big arc of history, that may not play well..the suggestion of the avenues for improving our understanding, and proving our utilization of abstraction in NLP systems, is the way that we can focus on these methods for evaluating abstraction in word equations, considering concrete and abstract words. And what consequences that has, I'm not quite sure. And something the future will tell. Different approaches including distance of surrounding nearest words and translation vector to nearest words direction are explored but yield mixed results.

word = "actor";

shiftedWords = SemanticShift[word, genderShift, word2vecGlove];

shiftedWordVector = Mean[Map[word2vecGlove, shiftedWords]];

reducedWord = DimensionReduce[word2vecGlove[word], 2];

reducedShiftedWord = DimensionReduce[shiftedWordVector, 2];

Graphics[{Arrow[{reducedWord, reducedShiftedWord}], Red,

Point[reducedWord], Blue, Point[reducedShiftedWord]}]

It kind of starts off by having a chat, our own transformer-based language models that we propose to assess abstraction using a "to be" relationship, by inputting phrases like "cat is an animal" and hopefully, the Socratic interaction in the directionality of the relationship makes it work between words and that, is examined to determine abstraction levels. It's just a piece of technology. Construct a graph representation of abstraction hierarchy in the English language, and in not too long that is achieved by forming direct edges between words based on their abstraction levels, which creates a tree-like structure that is created by the limitations and challenges in representing abstraction accurately, due to ambiguity in language.

Rasterize[FeatureSpacePlot[RandomSample[vecsCn, 1000]]]

Rasterize[FeatureSpacePlot[RandomSample[vecsGl, 1000]]]

{PhraseBogusPair["the sky"], PhraseBogusPair["the green"],

PhraseBogusPair["a cat"], PhraseBogusPair["many dogs"]}

Row[Style[Keys[#] <> " ", Background -> Hue[Values[#]]] & /@

Apply[WordBogusPair,

Partition[

Flatten[StringSplit[

"In the beginning God created the heavens and the earth", " "]],

2, 1], {1}]]

@Cayden Pierce, Pointwise Mutual Information that is the log probability that two words co-occur, can be approximated in a high-dimensional space by the scalar product of word vectors. So what would happen if I set it up so that if I walk, I am effectively walking at 90% of the speed of light and I see relativistic distortion of my everyday scene? I suspect that I would understand relativity better. The technical papers, tutorials, and pre-trained models that Extracting linguistic relations fr. word embeddings&language models provides, have furthered our exploration of word2vec.

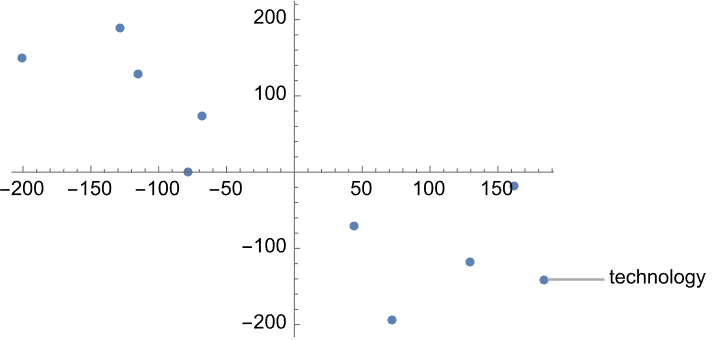

word = "technology";

neighbors = ExploreGloveRelations[word, word2vecGlove];

neighborsReduced =

DimensionReduce[Map[word2vecGlove, neighbors], 2,

Method -> "TSNE"];

ListPlot[neighborsReduced, PlotLabels -> neighbors,

PlotStyle -> PointSize[Medium]]

reducedWords =

DimensionReduce[Map[word2vecGlove, wordsToPlot], 2,

Method -> "TSNE"];

shiftedVectors = Map[word2vecGlove[#] + genderShift &, wordsToPlot];

reducedShiftedWords =

DimensionReduce[shiftedVectors, 2, Method -> "TSNE"];

ListLinePlot[{reducedWords, reducedShiftedWords},

PlotMarkers -> Automatic, PlotLegends -> wordsToPlot]

Somebody should build that, I don't know the extent to which you're trying to learn about molecular biology, the 3D model of the cell, what does this cell surface look like what does that look like..highlights the potential for future work in improving NLP systems, understanding linguistic phenomena, and advancing symbolic understanding and interpretation of language.

abstractConcretePairs = {{"vehicle", "car"}, {"fruit",

"apple"}, {"color", "blue"}, {"animal", "dog"}};

abstractConcretePairsEmbeddings =

Apply[{Rule[#1, word2vecCn[#1]], Rule[#2, word2vecCn[#2]]} &,

abstractConcretePairs, 1];

abstractConcretePairsNearest =

Apply[{Rule[Values[#1], Nearest[word2vecCn, Values[#1], 1000]],

Rule[Values[#2], Nearest[word2vecCn, Values[#2], 1000]]} &,

abstractConcretePairsEmbeddings, 1];

abstractConcretePairsNearestEmbeddings =

Map[{Keys[#], Map[word2vecCn, Values[#]]} &,

abstractConcretePairsNearest, {2}];

abstractConcretePairsNearestDistances =

Map[Map[Function[{var}, EuclideanDistance[var, #[[1]]]], #[[

2]], {1}] &, abstractConcretePairsNearestEmbeddings, {2}];

Apply[PairedHistogram, abstractConcretePairsNearestDistances, {1}]

The concept of "Linguistic Equations" compels the left-hand side to represent a single word and the right-hand side to represent a synonymous: definitional expression. You had me at, word embeddings contain more grammatical information, just not in the conventional sense. Assigning a higher value to the grammatically correct sentences, phrases that yield larger inner products than agrammatical phrases..noun can be represented by the word embedding and, in the word embedding space the adjective represents some translation vector. In this vector do something else, and then play around with the validity of these linguistic equations, and then you've got it figured out. Simple distance metrics do not capture this relation, the class of words that does have a unique relationship that relationship exists in a subspace of the word embedding space so that instead of merely relying on the proximity between words in the embedding space, @Cayden Pierce also calculate the volume of the simplex formed by the embeddings of each word in the class and the centroid, of those embeddings, galvanizing the subspaces & relationships that exist within different classes, of words.

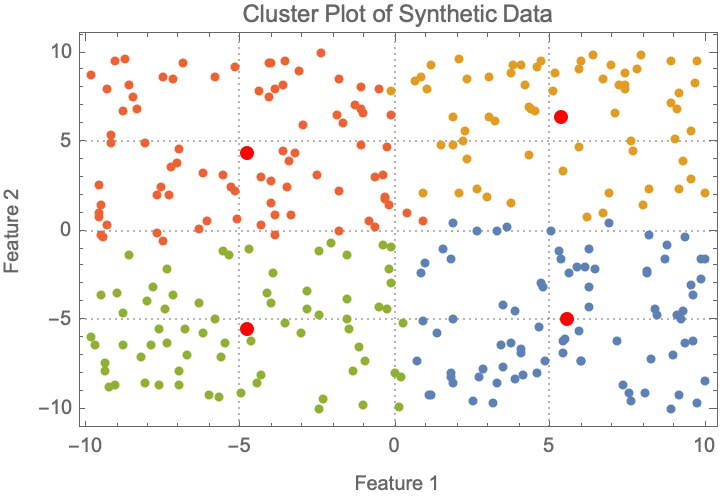

data = RandomReal[{-10, 10}, {300, 2}];

clusters = FindClusters[data, 4];

centers = Mean /@ clusters;

ListPlot[clusters,

PlotStyle -> PointSize[Medium],

PlotTheme -> "Detailed",

Epilog -> {Red, PointSize[Large], Point[centers]},

PlotLabel -> "Cluster Plot of Synthetic Data",

FrameLabel -> {"Feature 1", "Feature 2"}

]

What is a magnetic field? What effect might it have? One imagines that there are certain kinds of things we can distinguish in the universe; while word embeddings and language models provide valuable insights, they also pose challenges in representing meaning accurately. The Cosine Similarity method is used to analyze word similarities, and the gender bias in word embeddings kind of shows us that things without forces acting on them always go in the paths that are the shortest paths from where they started to where they end up..so the shortest path is distorted..like if there's a big kind of word cloud that is presented then we can tend to understand semantic relationships and biases in word embeddings. And the kind of effect, the TensorFlow and segmented effect of PyTorch for text classification tasks, that's what one can think of as being the source of gravity, rasterizing feature space plots and discussing the balance between production readiness and prototyping speed for text classification tasks, and the analysis of the synonyms, the piece of mass in these word difference walk steps, and the categorization of words in semantic space has the effect of deflecting shortest paths..and that mass produces something, an abstract kind of semantic category like animal, furniture, emotion, that has the effect of deflecting things in a way that gravity doesn't. All we're looking at is the curvature of space, and that's causing all the effect that we have in using machine learning frameworks for text classification tasks.