Thank you for your interest in this paclet!

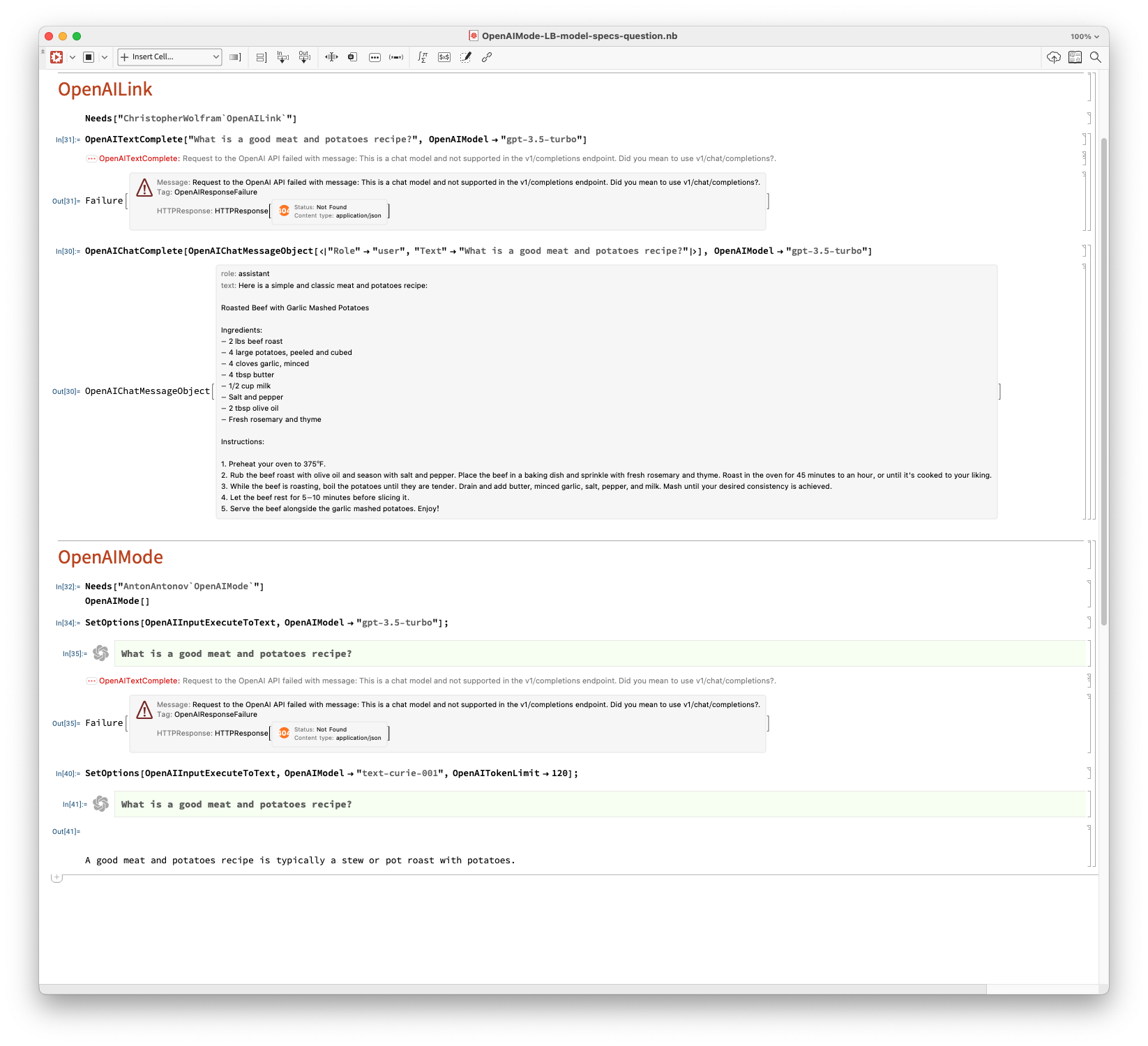

OpenAI completions are of two kinds: text completions and chat completions.

From my (recent) experiments, I know that text completions cannot be done with chat models, and vice versa.

The problem you have -- with "OpenAILink" -- is that OpenAIMode`OpenAIInputExecuteToText uses ChristopherWolfram`OpenAILink`OpenAITextComplete.

In order to "fix" this in "OpenAILink" I can make OpenAIInputExecuteToText take an evaluator function. (Which, by the way, the underlying function OpenAIInputExecute already does.) To a large extent, I introduced that level of indirection to resolve these kinds of problems.

I have to think about a proper design, though. OpenAIChatComplete takes chat message objects, so those have to be specified too.
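To make the indirection idea concrete, here is a minimal sketch of a cell-level function that takes an evaluator function. All names in it (executeToText, textEvaluator, chatEvaluator, toMessages, and the "Evaluator" and "Prepare" options) are hypothetical and are not part of "OpenAIMode" or "OpenAILink"; the two evaluators are offline stand-ins for text and chat completion calls. The messages are plain associations here, which is an assumption made only for illustration.

```wolfram
(* Hypothetical sketch: none of these names belong to OpenAIMode or OpenAILink. *)
ClearAll[executeToText, textEvaluator, chatEvaluator, toMessages];

(* Offline stand-in for a text-completion call: takes a plain string. *)
textEvaluator[prompt_String] := "text:" <> prompt;

(* Offline stand-in for a chat-completion call: takes a list of messages. *)
chatEvaluator[messages : {__Association}] :=
  "chat:" <> messages[[-1]]["content"];

(* Turns the cell's input string into a one-message chat. *)
toMessages[input_String] := {<|"role" -> "user", "content" -> input|>};

(* "Evaluator" picks the completion function; "Prepare" converts the
   input string into whatever that evaluator expects. *)
Options[executeToText] = {"Evaluator" -> textEvaluator, "Prepare" -> Identity};

executeToText[input_String, opts : OptionsPattern[]] :=
  OptionValue["Evaluator"][OptionValue["Prepare"][input]];

executeToText["Hello"]
(* "text:Hello" *)

executeToText["Hello", "Evaluator" -> chatEvaluator, "Prepare" -> toMessages]
(* "chat:Hello" *)
```

With this shape, switching between the two kinds of completion means swapping the evaluator together with its input conversion, which is the part of the design that still has to be decided for chat message objects.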

Please see the attached notebook.

Thank you very much Anton. This is great!

I'm having a problem installing the paclet. After step 3:

PacletSymbol["ChristopherWolfram/OpenAILink", "ChristopherWolfram`OpenAILink`$OpenAIKey"] = "KEY";

I get the following error:

"Tag PacletSymbol in PacletSymbol["ChristopherWolfram/OpenAILink", "ChristopherWolfram`OpenAILink`$OpenAIKey"] is Protected."

Thank you for the recognition, Moderation Team!

Do I need to give it my OpenAI API? If so, how?

Thank you for your interest in this paclet!

> Do I need to give it my OpenAI API?

I assume you mean your OpenAI API authorization token. Then the answer is yes. Generally, it is assumed that the installation and setup steps of "OpenAILink" have been completed before using "OpenAIMode".

> If so, how?

Here is one way (to do the complete setup):

PacletInstall["ChristopherWolfram/OpenAILink"]

Needs["ChristopherWolfram`OpenAILink`"]

(* Assigning through PacletSymbol[...] fails with "Tag PacletSymbol ... is Protected", so set the symbol directly once the package is loaded. *)

ChristopherWolfram`OpenAILink`$OpenAIKey = "<YOUR API KEY>";

SystemCredential["OpenAIAPI"] = "<YOUR API KEY>";

PacletInstall["AntonAntonov/OpenAIMode"]

Needs["AntonAntonov`OpenAIMode`"]

I’ve tried this out in WolframCloud on my iPad and some of the features described here do not work:

- Although Shift-| b gives a text completion cell, there is no cell icon to the left (I assume an issue with stylesheets for cloud notebooks).

- Setting OpenAIInputExecuteToImage does not generate images.

- ResourceFunction["MermaidJS"] is not on the WFR.
