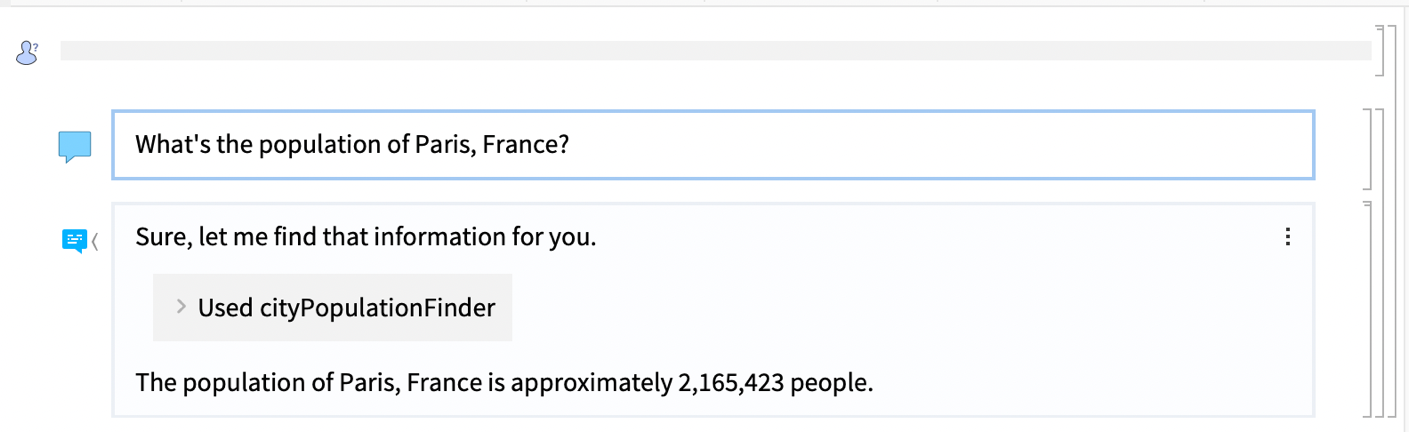

Yes, it's still being worked on. Here's an example based on the population-finding example from the LLMTool documentation. You can add it as a persona in Chat Notebooks by deploying it to the cloud, then opening the add/manage personas menu and choosing the option to install it from a URL.

Edit: I should add that in Chat Notebooks, tool calling currently works reliably only with gpt-4; gpt-3.5-turbo makes mistakes. (We have looked into the function-calling model, but it hallucinates, assuming it can call Python functions that don't exist.) You can also use tools programmatically with LLMSynthesize and related functions by giving your LLMEvaluator access to them through its "Tools" setting.

To do the same thing as the notebook I provided, you'd write:

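```wolfram
LLMSynthesize["What is the population of Champaign, IL?", "FullText",
 (* give the evaluator access to the tool *)
 LLMEvaluator -> <|
   "Tools" ->
    LLMTool["cityPopulationFinder",
     "city" -> "City",        (* parameter "city", interpreted as a City entity *)
     #city["Population"] &]   (* function the tool runs on that entity *)
   |>]
```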
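Before handing the function to the model, it can help to check it by hand. This is a minimal sketch, assuming (as the `#city` slot suggests) that the tool calls its function with an association keyed by parameter name. `populationFn` is a hypothetical name introduced here, and the `Interpreter` call needs an internet connection to reach the Wolfram Knowledgebase.

```wolfram
(* The same function the tool runs, pulled out so it can be tested on its own.
   Assumption: the tool passes it an association keyed by parameter name. *)
populationFn = #city["Population"] &;

(* Build that association by hand: Interpreter turns the text into a City entity,
   then the function looks up the entity's "Population" property *)
populationFn[<|"city" -> Interpreter["City"]["Champaign, IL"]|>]
```

If this returns a sensible population, any remaining problems are more likely in how the model calls the tool than in the tool itself.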

Example in a chat notebook