Teaching programming and assessing learning progress is often a highly customized task. I wanted to create a completely automated, practically infinite stream of random puzzles that guide a learner towards better programming skills. I think the major problem is content creation. To test whether the learner knows a programming concept, an exercise needs to be carefully designed. It is also better to have a randomized set of such exercises, so that the test is reliable and rules out guessing and cheating. Creating such educational materials is often tedious, time-consuming, and manual, exactly like creating good documentation. I will explain one simple idea for using docs to make an educational game. This is just a bare-bones prototype whose inner workings are easy to follow (try it out & share: https://wolfr.am/bughunter ). Please comment with feedback on how we can develop this idea further.

Introduction: efficient use of resources

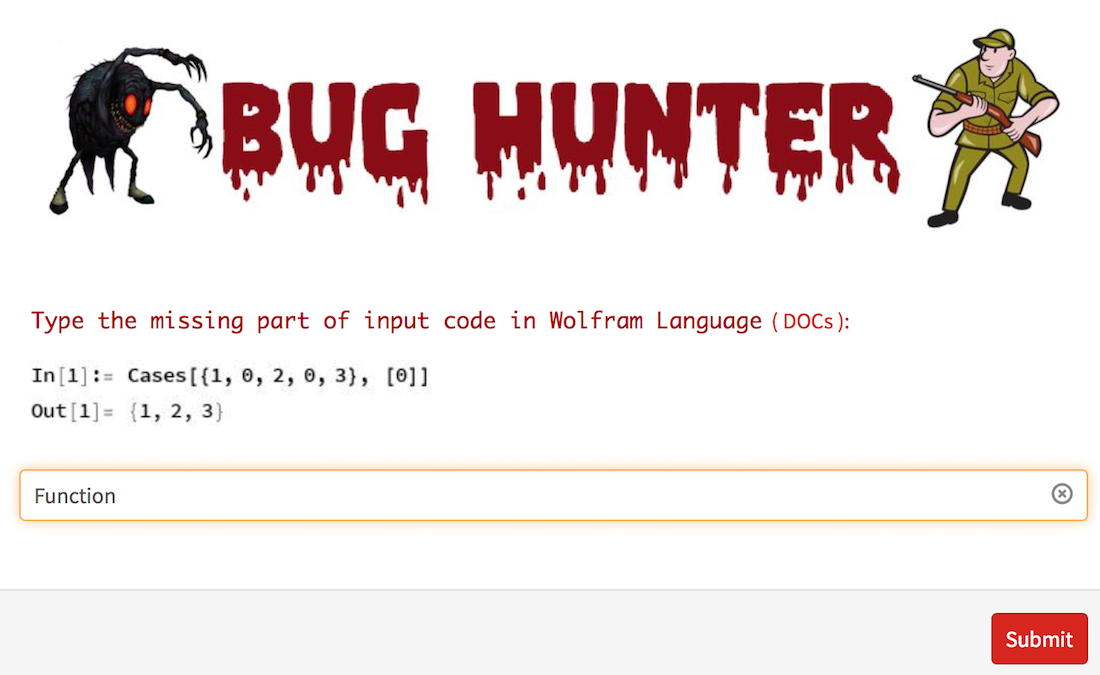

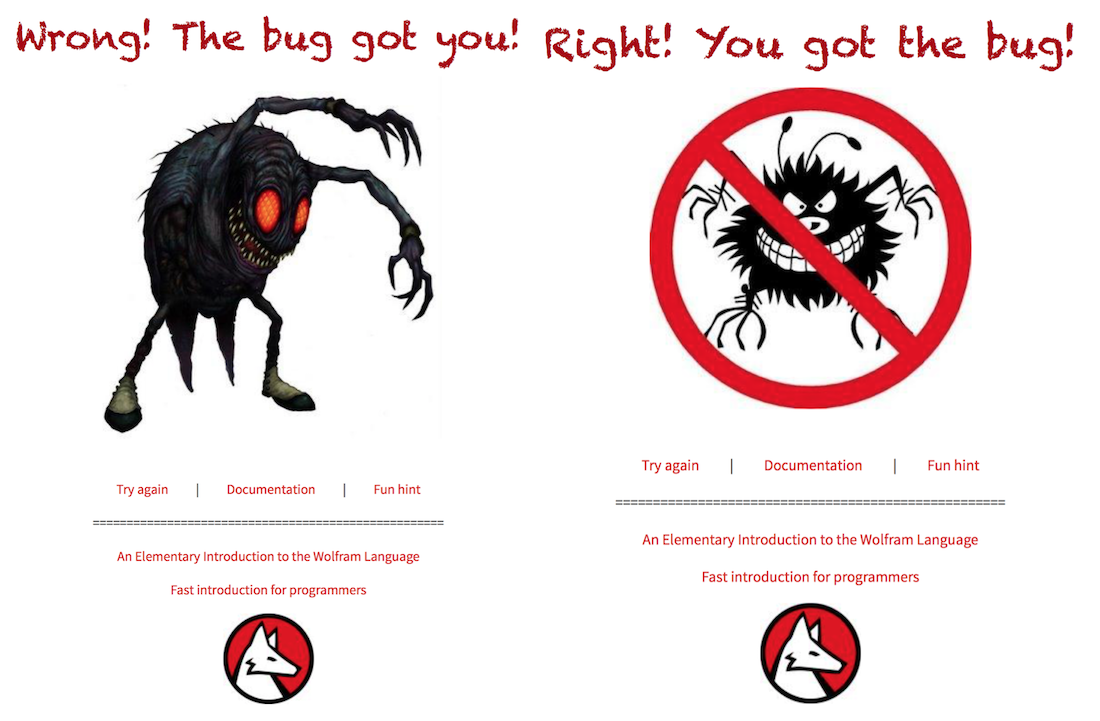

Documentation holds a wealth of in-depth information and deserves to be explored beyond its regular usage. The painstaking, time-consuming manual effort of creating good programming documentation should be used to its fullest potential, and automated gameplay would be a novel take on docs. We can randomly pull existing code examples from the docs and turn them into programming exercises automatically. Being able to read code and find bugs is, in my experience, one of the most enlightening practices. The goal of the game linked above is to find a defect (bug) in the input code and fix it; hence the name "bug hunter". A single game cycle has just 2 possible outcomes, and after each you can "try again":

Core game code: making puzzles

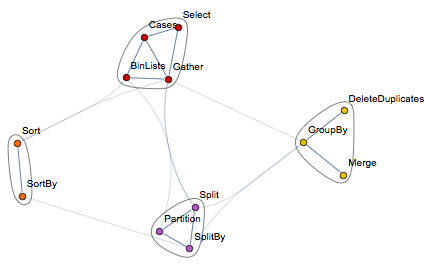

Wolfram Language (WL) documentation is one of the best I've seen. It has pages and pages of examples, starting from simple ones and going through all the details of usage. Moreover, the docs are written in WL itself, and WL can access the docs and even has internal self-knowledge of its own structure via WolframLanguageData. For instance, this is how you can show a relationship community graph for symbols related to GatherBy:

WolframLanguageData["GatherBy", "RelationshipCommunityGraph"]

We can use WolframLanguageData to access the docs examples and then drop some parts of the code. The puzzle for the learner is then to find what is missing. To keep this small working prototype clear, let's limit the tested WL functions and their corresponding docs pages to a small number. So out of ~5000 (and we just released a new addition):

WolframLanguageData[] // Length

4838

built-in symbols, I take just 30:

functions = {"Append", "Apply", "Array", "Cases", "Delete", "DeleteCases", "Drop", "Except",
"Flatten", "FlattenAt", "Fold", "Inner", "Insert", "Join", "ListConvolve", "Map", "MapThread",
"Nest", "Outer", "Partition", "Prepend", "ReplacePart", "Reverse", "RotateLeft", "RotateRight",
"Select", "Sort", "Split", "Thread", "Transpose"};

functions // Length

30

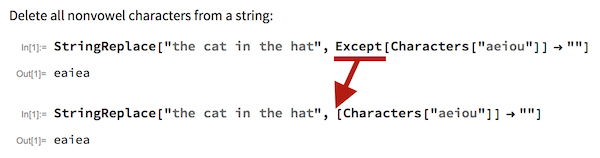

These are the functions listed on a very old but neat animated page covering an essential core-language collection. I will also add some "sugar syntax" (shorthand operators) to the parts of code that can potentially be removed:

sugar = {"@@", "@", "/@", "@@@", "#", "^", "&"};

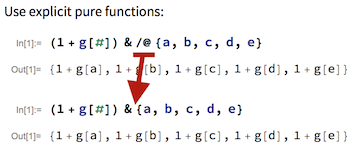

So, for instance, out of the following example in docs we could remove a small part to make a puzzle:

Here is an example of "sugar syntax" removal, which for novice programmers would be harder to solve:

The next step is to define a function that checks whether a string is a built-in symbol (any of the ~5000 functions) or one of the "sugar syntax" pieces we defined above:

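ClearAll[ExampleHeads];
(* Collect every string inside the example expression and keep those that
   name a System` symbol or appear in the sugar list, excluding "Input" *)
ExampleHeads[e_]:=
  Select[
    Cases[e,_String, Infinity],
    (NameQ["System`"<>#]||MemberQ[sugar,#])&&#=!="Input"&
  ]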
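To see what ExampleHeads does without touching the docs, here is a minimal sketch that applies it to a hand-written box expression. The `boxes` value is an assumption made up for illustration; the real documentation examples may be wrapped in additional cell structure. The definitions of `sugar` and `ExampleHeads` are repeated only so that the snippet runs on its own.

```wolfram
(* Repeated from the text so the snippet is self-contained *)
sugar = {"@@", "@", "/@", "@@@", "#", "^", "&"};

ExampleHeads[e_] :=
  Select[
    Cases[e, _String, Infinity],
    (NameQ["System`" <> #] || MemberQ[sugar, #]) && # =!= "Input" &
  ]

(* Hypothetical stand-in for a docs input: the boxes of  Map[f, {a, b}] *)
boxes = RowBox[{"Map", "[",
    RowBox[{"f", ",", RowBox[{"{", RowBox[{"a", ",", "b"}], "}"}]}],
    "]"}];

(* Strings that are candidates for removal from this example *)
ExampleHeads[boxes]
```

With this input, the filter is expected to keep "Map" and discard the brackets, commas, and the user symbols f, a, and b, because none of those name a System` symbol or appear in the sugar list.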

The next function essentially makes a single quiz question. First it randomly picks a function from the list of 30 symbols we defined. Then it goes to the "Basic Examples" section of that symbol's doc page, picks a random example, and removes a random part from it:

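ranquiz[]:=Module[
  {ranfun=RandomChoice[functions],ranexa,ranhead},
  (* last two parts of a random basic example, used below as input and output *)
  ranexa=RandomChoice[WolframLanguageData[ranfun,"DocumentationBasicExamples"]][[-2;;-1]];
  (* random removable piece found in the input *)
  ranhead=RandomChoice[ExampleHeads[ranexa[[1]]]];
  (* {puzzle input, output, removed piece, function name} *)
  {
    ReplacePart[#,Position[#,ranhead]->""]&@ranexa[[1]],
    ranexa[[2]],
    ranhead,
    ranfun
  }
]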
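The removal step inside ranquiz can be tried in isolation. The sketch below uses a hand-written box expression (a hypothetical stand-in for a docs example, not real documentation data) and blanks out one chosen string the same way ranquiz does, with Position followed by ReplacePart.

```wolfram
(* Hypothetical stand-in for a docs input: the boxes of  f /@ {a, b} *)
boxes = RowBox[{"f", "/@", RowBox[{"{", RowBox[{"a", ",", "b"}], "}"}]}];

(* Every position at which the chosen string occurs *)
pos = Position[boxes, "/@"];

(* Replace the string at those positions with an empty string *)
puzzle = ReplacePart[boxes, pos -> ""];

(* Display the resulting boxes the way a notebook would typeset them *)
RawBoxes[puzzle]
```

Because Position returns all matches, a string that occurs several times in one example is removed at every occurrence, while the learner still types it only once.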

Now we will define a few simple variables and tools.

Image variables

I keep marveling at how convenient it is that the Mathematica front end lets images be part of the code. This makes notebooks a great IDE:

Databin for tracking stats

It is important to collect statistics for your learning game, in order to understand how to improve it and where the education process should go. Wolfram Datadrop is an amazing tool for this purpose.

We define the databin as

bin = CreateDatabin[<|"Name" -> "BugHunter"|>]
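As a rough idea of the statistics one might compute, here is a minimal sketch that works on a local list of attempts. The game stores each attempt as a list {answer, check, fun}; the `records` below are invented sample data in that form, and `correctQ` is a helper name introduced only for this illustration. Reading the entries back from the databin is not shown.

```wolfram
(* Hypothetical attempts in the form {answer, check, fun} *)
records = {
   {"Map", "Map", "Map"},
   {"@", "/@", "Map"},
   {"Sort", "Sort", "Sort"},
   {"#", "&", "Select"}
   };

(* An attempt is correct when the typed answer equals the removed piece *)
correctQ[{answer_, check_, _}] := answer === check

(* Overall fraction of correct answers *)
N[Mean[Boole[correctQ /@ records]]]

(* Fraction of correct answers per documentation page *)
GroupBy[records, Last -> correctQ, N[Mean[Boole[#]]] &]
```

Per-function success rates like these could point to the doc pages whose puzzles are too hard or too easy.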

Deploy game to the web

To make an actual application usable by everyone with internet access, I will use Wolfram Development Platform and Wolfram Cloud. First I define a function that builds the "result of the game" web page. It checks whether the answer is right or wrong and returns a differently designed page accordingly.

quiz[answer_String,check_String,fun_String]:=
(
  DatabinAdd[Databin["kd3hO19q"],{answer,check,fun}];
  Grid[{
    {If[answer===check,
      Grid[{{Style["Right! You got the bug!",40,Darker@Red,FontFamily->"Chalkduster"]},{First[imgs]}}],
      Grid[{{Style["Wrong! The bug got you!",40,Darker@Red,FontFamily->"Chalkduster"]},{Last[imgs]}}]
    ]},
    {Row[
      {Hyperlink["Try again","https://www.wolframcloud.com/objects/user-3c5d3268-040e-45d5-8ac1-25476e7870da/bughunter"],
      "|",
      Hyperlink["Documentation","http://reference.wolfram.com/language/ref/"<>fun<>".html"],
      "|",
      Hyperlink["Fun hint","http://reference.wolfram.com/legacy/flash/animations/"<>fun<>".html"]},
      Spacer[10]
    ]},
    {Style["===================================================="]},
    {Hyperlink["An Elementary Introduction to the Wolfram Language","https://www.wolfram.com/language/elementary-introduction"]},
    {Hyperlink["Fast introduction for programmers","http://www.wolfram.com/language/fast-introduction-for-programmers/en"]},
    {logo}
  }]
)

This function is used inside a CloudDeploy[...FormFunction[...]...] construct to actually deploy the application. FormFunction builds a query form: a web user interface that poses the question and collects the user's answer. Note that FormFunction is wrapped in Delayed so that the random variables function properly.

CloudDeploy[Delayed[
  quizloc=ranquiz[];
  FormFunction[
    {{"code",None} -> "String",
     {"x",None}-><|
       "Input"->StringRiffle[quizloc[[3;;4]],","],
       "Interpreter"->DelimitedSequence["String"],
       "Control"->Function[Annotation[InputField[##],{"class"->"sr-only"},"HTMLAttrs"]]|>},
    quiz[#code,#x[[1]],#x[[2]]]&,
    AppearanceRules-> <|
      "Title" -> Grid[{{title}},Alignment->Center],
      "MetaTitle"->"BUG HUNTER",
      "Description"-> Grid[{
        {Style["Type the missing part of input code",15, Darker@Red,FontFamily->"Ayuthaya"]},
        {Rasterize@Grid[{
          {"In[1]:=",quizloc[[1]]},
          {"Out[1]=",quizloc[[2]]}},Alignment->Left]}
      }]
    |>]],
  "bughunter",
  Permissions->"Public"
]

The result of the deployment is a cloud object at a URL:

CloudObject[https://www.wolframcloud.com/objects/user-3c5d3268-040e-45d5-8ac1-25476e7870da/bughunter]

with the short version:

URLShorten["https://www.wolframcloud.com/objects/user-3c5d3268-040e-45d5-8ac1-25476e7870da/bughunter", "bughunter"]

https://wolfr.am/bughunter

And we are done! You can go to the above URL and play.
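As a side note on why Delayed matters in the deployment above, here is a minimal sketch that contrasts the two behaviors with a plain random number instead of a quiz. It assumes an active Wolfram Cloud connection; the names `fixed` and `fresh` are introduced only for this illustration.

```wolfram
(* Evaluated once, at deployment time: every visit shows the same number *)
fixed = CloudDeploy[RandomInteger[100], Permissions -> "Public"];

(* Wrapped in Delayed: evaluated again on each request, so visits can differ *)
fresh = CloudDeploy[Delayed[RandomInteger[100]], Permissions -> "Public"];

(* The two cloud objects; open each URL a few times to compare *)
{fixed, fresh}
```

The same reasoning applies to the game: without Delayed, ranquiz[] would run once during deployment and every visitor would get the same puzzle.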

Further thoughts

Here are some key points and further thoughts.

Advantages:

- Automation of content: NO new manual resource development, use existing code bases.

- Automation of testing: NO manual labor of grading.

- Quality of testing: NO multiple choice, NO guessing.

- Quality of grading: almost 100% exact detection of mistakes and correct solutions.

- Fight cheating: the easily identifiable question type "find the missing code part" makes it simpler to ban help requests on friendly forums (such as this one).

- Almost infinite variability of examples if the whole documentation system is engaged.

- High range from very easy to very hard examples (exclusion of multiple functions and syntax can make this really hard).

Improvements:

- Flexible scoring system based on function usage frequencies.

- Optional placeholder as hint where the code is missing.

- Using the network of related functions (see above) to move smoothly through topical domains.

- Using function frequencies to feed easier or harder exercises based on test progress.
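Two of the improvements above can be sketched in a few lines. This is only an illustration under stated assumptions: the `weights` are invented numbers rather than real usage frequencies, `nextFunction` is a hypothetical helper name, and the `boxes` expression is hand-written rather than taken from the docs.

```wolfram
(* Hypothetical weights: a larger value means the function is picked more often *)
weights = <|"Map" -> 5, "Sort" -> 4, "Fold" -> 2, "ListConvolve" -> 1|>;

(* Weighted random choice of the next function to quiz on *)
nextFunction[w_Association] := RandomChoice[Values[w] -> Keys[w]]

(* Rough check of how often each function comes up in 1000 draws *)
Counts[Table[nextFunction[weights], {1000}]]

(* Placeholder hint: mark the gap instead of leaving an empty string *)
boxes = RowBox[{"f", "/@", RowBox[{"{", RowBox[{"a", ",", "b"}], "}"}]}];
RawBoxes[ReplacePart[boxes, Position[boxes, "/@"] -> "\[Placeholder]"]]
```

Adjusting the weights after each answer, for example shifting them toward rarer functions as the learner improves, would be one way to make the difficulty follow test progress.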

Please comment with your own thoughts and games and code!