Never display entities:

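```mathematica
t = AbsoluteTime[];

AbsoluteTiming[
  ent = Entity["Plant", "Species:GlycineMax"]["TaxonomyGraph"]
  ][[1]]
(* 2.05579 *)

AbsoluteTime[] - t
(* 2.057142 *)
```

Retrieving the `"TaxonomyGraph"` property (a `Graph` whose vertices are themselves entities) takes about 2 seconds. Converting that result to boxes, which has to happen before it can be displayed, takes about 80:

```mathematica
AbsoluteTiming[ToBoxes[ent]][[1]]
(* 79.7398 *)
```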

A previous spelunking session showed me that `EntityValue` makes calls to ``Internal`MWACompute``, which, if I remember correctly, just calls the Wolfram|Alpha API. (I believe you can spelunk exactly how it makes these calls; I haven't figured out how to abuse that yet.)
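One way to check this without reading the source is to trace an `EntityValue` call and collect any ``Internal`MWACompute`` expressions that show up. This is only a sketch: ``Internal`MWACompute`` is undocumented and may behave differently (or not be used at all) in your version, and if the value is already in the local cache you may see no calls. `mwaCalls` and `nCalls` are illustrative names.

```mathematica
(* Collect every Internal`MWACompute[...] expression that appears while
   evaluating an EntityValue request. Internal`MWACompute is undocumented,
   so an empty result may just mean the internals differ in your version
   or that the value came from the local cache. *)
mwaCalls = Flatten@Trace[
    EntityValue[Entity["Plant", "Species:GlycineMax"], "TaxonomyGraph"],
    _Internal`MWACompute,
    TraceInternal -> True
    ];

(* Number of matching calls found in the trace *)
nCalls = Length[mwaCalls]

(* Inspect the first one, if any; Trace wraps it in HoldForm *)
If[nCalls > 0, InputForm@First[mwaCalls]]
```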

The display call clearly asks for way too much data, which it stores in `$UserBaseDirectory/Knowledgebase`. So I think that for this plant dataset, the first evaluation downloads a bunch of data, and that download causes the slowdown you see.
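If the slowdown really is a one-time download into that cache, two things should hold in a fresh kernel: the Knowledgebase directory grows during the first display, and a second display of the same result is much faster. A sketch that checks both, assuming the cache still lives under `$UserBaseDirectory/Knowledgebase` in your version (`kbDir` and `cacheSize` are names made up for the illustration):

```mathematica
(* Cache location described above; may differ between versions *)
kbDir = FileNameJoin[{$UserBaseDirectory, "Knowledgebase"}];

(* Hypothetical helper: total size in bytes of all regular files under
   dir, or 0 if the directory does not exist *)
cacheSize[dir_] := If[DirectoryQ[dir],
   Total[FileByteCount /@
     Select[FileNames["*", dir, Infinity], FileType[#] === File &]],
   0];

(* Run in a fresh kernel so nothing has been displayed yet *)
ent = Entity["Plant", "Species:GlycineMax"]["TaxonomyGraph"];

before = cacheSize[kbDir];
firstDisplay = First@AbsoluteTiming[ToBoxes[ent]];
after = cacheSize[kbDir];
secondDisplay = First@AbsoluteTiming[ToBoxes[ent]];

<|"FirstDisplay" -> firstDisplay,
  "SecondDisplay" -> secondDisplay,
  "CacheGrowthBytes" -> after - before|>
```

A large `"CacheGrowthBytes"` together with a `"SecondDisplay"` much smaller than `"FirstDisplay"` would support the download explanation; similar times would suggest the formatting itself is slow.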

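I tried to illustrate that by timing, for each entity type, how long a random entity takes to retrieve and to display, alongside the size of the type: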

```mathematica
retDat =
  AssociationMap[
   With[{
      ent = AbsoluteTiming[RandomEntity[#]],
      size = AbsoluteTiming[EntityValue[#, "EntityCount"]]},
     <|
      "Size" -> size[[2]],
      "DisplayTime" -> AbsoluteTiming[ToBoxes[ent[[2]]]][[1]],
      "RetrievalTime" -> ent[[1]],
      "SizeRetrievalTime" -> size[[1]]
      |>
     ] &,
   entNames (* A cached version of EntityValue[] *)
   ];
```

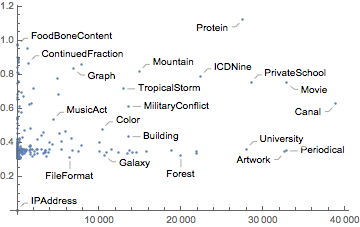

ListPlot[

KeyValueMap[

Callout[{#2["Size"], #2["DisplayTime"]}, #] &,

retDat

]

]

But the result looks pretty random, so I don't really know. Maybe `RandomEntity` is messing with things; more likely, my explanation is just wrong. The data may also be thrown off if `EntityValue` retrieves some data through a paclet mechanism.
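One way to tell a one-time download apart from formatting that is just slow is to display each sampled entity twice and keep both times: a long first display followed by a short second one points at data being fetched and cached. A sketch along the lines of the code above; `displayTwice`, `types`, and `repeatDat` are hypothetical names, and only a few types are sampled to keep the run short:

```mathematica
(* Hypothetical helper: time ToBoxes on the same expression twice.
   The first call may include fetching and caching data; the second
   should mostly measure formatting. *)
displayTwice[e_] := Module[{t1, t2},
   t1 = First@AbsoluteTiming[ToBoxes[e]];
   t2 = First@AbsoluteTiming[ToBoxes[e]];
   <|"FirstDisplay" -> t1, "SecondDisplay" -> t2|>
   ];

(* A small random subset of entity types; use EntityValue[] for all *)
types = RandomSample[EntityValue[], 10];

repeatDat = AssociationMap[displayTwice[RandomEntity[#]] &, types];

(* Points far below the line y = x had a much faster second display *)
ListLogLogPlot[
 KeyValueMap[
  Callout[{#2["FirstDisplay"], #2["SecondDisplay"]}, #1] &,
  repeatDat
  ]
 ]
```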

Unfortunately, I don't have a real workaround for this slowdown other than never displaying an `Entity` (which I try to avoid anyway).
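If you do need to look at an entity, suppressing output with `;` and inspecting the raw expression might be cheaper. I haven't verified that `InputForm` skips the expensive lookup, so this sketch only measures it against the default formatting (`e`, `tInput`, and `tFormatted` are illustrative names):

```mathematica
e = Entity["Plant", "Species:GlycineMax"];

(* Time the raw InputForm first, in a fresh kernel, so it cannot benefit
   from anything the formatted display may cache *)
tInput = First@AbsoluteTiming[ToBoxes[InputForm[e]]];
tFormatted = First@AbsoluteTiming[ToBoxes[e]];

<|"InputForm" -> tInput, "Formatted" -> tFormatted|>
```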