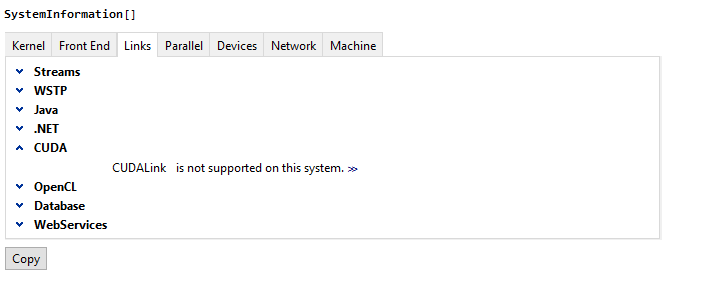

Hi, on Windows 10 it seems that Mathematica 11.3 does not find the NVIDIA card, while 11.2 does.

I have two video cards in this ZBook (the main Intel one and a second NVIDIA one).

With Mathematica 11.3:

```mathematica
Needs["CUDALink`"]
TextGrid[CUDAInformation[]]
```

This gives:

```
CUDAInformation::invdevnm: CUDA is not supported on device Intel(R) HD Graphics 530. Refer to CUDALink System Requirements for system requirements.
```

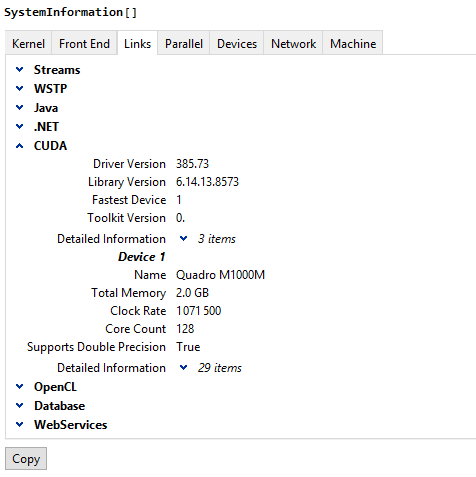

With 11.2, the same code gives:

```mathematica
TextGrid[{1 -> {"Name" -> "Quadro M1000M", "Clock Rate" -> 1071500,
   "Compute Capabilities" -> 5., "GPU Overlap" -> 1,
   "Maximum Block Dimensions" -> {1024, 1024, 64},
   "Maximum Grid Dimensions" -> {2147483647, 65535, 65535},
   "Maximum Threads Per Block" -> 1024,
   "Maximum Shared Memory Per Block" -> 49152,
   "Total Constant Memory" -> 65536, "Warp Size" -> 32,
   "Maximum Pitch" -> 2147483647,
   "Maximum Registers Per Block" -> 65536,
   "Texture Alignment" -> 512, "Multiprocessor Count" -> 4,
   "Core Count" -> 128, "Execution Timeout" -> 1,
   "Integrated" -> False, "Can Map Host Memory" -> True,
   "Compute Mode" -> "Default", "Texture1D Width" -> 65536,
   "Texture2D Width" -> 65536, "Texture2D Height" -> 65536,
   "Texture3D Width" -> 4096, "Texture3D Height" -> 4096,
   "Texture3D Depth" -> 4096, "Texture2D Array Width" -> 16384,
   "Texture2D Array Height" -> 16384,
   "Texture2D Array Slices" -> 2048, "Surface Alignment" -> 512,
   "Concurrent Kernels" -> True, "ECC Enabled" -> False,
   "TCC Enabled" -> False, "Total Memory" -> 2147483648}}]
```