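I don't know the exact meaning of Internal`$EqualTolerance, but its value corresponds to 7 binary digits (the value itself is expressed in decimal digits, hence the division by Log10[2]).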

In[108]:= Internal`$EqualTolerance/Log10[2]

Out[108]= 7.
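One way to probe what the tolerance does is to override it locally and repeat a comparison. The following is only a minimal sketch: it assumes that Internal`$EqualTolerance can be rebound with Block (it is an undocumented internal symbol, so this may differ between versions), and x and y are hypothetical test values, not the a, b, c from my earlier post.

```mathematica
(* hypothetical test values: two neighbouring machine numbers just above 1 *)
x = 1.;
y = 1. + $MachineEpsilon;

(* comparison with the default tolerance *)
x == y

(* the same comparison with the tolerance set to zero digits *)
Block[{Internal`$EqualTolerance = 0.}, x == y]
```

If the two results differ, the tolerance is what makes the numbers compare as equal.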

What does that mean? Simply comparing the last 7 binary digits is not sufficient. Using a decimal example, 999 and 1000 do not even share their last digit, although they differ by only 1. Similarly, a < 1 but c > b > 1, so a and b lie on opposite sides of a power of two.
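The binary analogue of the 999 versus 1000 example can be sketched as follows. The values lo and hi are hypothetical (they are not a, b, c); they are simply the two neighbouring machine numbers at 1, chosen so that they straddle a power of two.

```mathematica
(* hypothetical values: the largest machine number below 1, and 1 itself *)
lo = 1. - $MachineEpsilon/2;
hi = 1.;

(* binary digits and exponent of each number *)
RealDigits[lo, 2]
RealDigits[hi, 2]

(* the numerical difference is tiny even though the digit lists disagree *)
hi - lo
```

Comparing the two digit lists position by position says nothing useful here, which is why a plain "last 7 digits" comparison cannot be the whole story.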

If we offset them, we get rid of this problem.

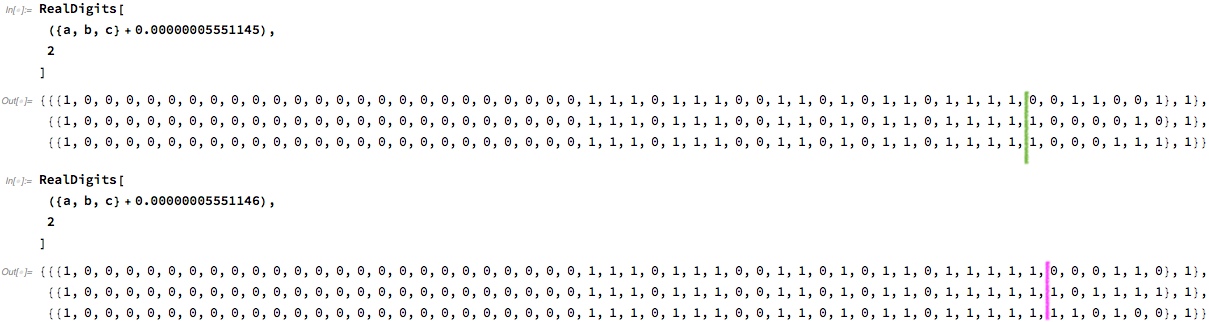

What is the difference between ({a, b, c} + 0.00000005551145) and ({a, b, c} + 0.00000005551146) (see my last post)?

Let's see their binary digits:

Notice that the case that returns False differs in 7 binary digits, while the other differs in only 6. Since 7 is exactly the threshold, the behaviour must have something to do with this.
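To count the differing digits rather than read them off by eye, something like the sketch below could be used. The helper names mantissa and bitsOfDifference are my own, not built-ins, and the test values are hypothetical ones near 1 rather than the offset a, b, c. The count is only meaningful when both numbers have the same binary exponent, and this way of counting may not match exactly what Equal does internally.

```mathematica
(* integer formed by the binary mantissa digits of a machine number *)
mantissa[x_?MachineNumberQ] := FromDigits[First[RealDigits[x, 2]], 2]

(* bit length of the mantissa difference; defined only when both
   numbers have the same binary exponent *)
bitsOfDifference[x_, y_] /; Last[RealDigits[x, 2]] == Last[RealDigits[y, 2]] :=
  BitLength[Abs[mantissa[x] - mantissa[y]]]

(* hypothetical values: 2^6 and 2^7 units in the last place away from 1 *)
p = 1.;
q1 = p + 2^6 $MachineEpsilon;
q2 = p + 2^7 $MachineEpsilon;

{bitsOfDifference[p, q1], p == q1}
{bitsOfDifference[p, q2], p == q2}
```

Running this for a range of offsets should show at which bit count Equal switches from True to False.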

But I still do not understand it.