I posted the following question in

stackexchangeTrying to differentiate <> elements of the image, and although a method was identified in the site, it is still slow and maybe another avenue of exploration is available.

Wanted to see if MorphologicalComponents could be of help. It does allow to quickly split the image into the different letters (with the exception where the letters touch each other), but now Im stuck to see if there is a method that will allow to match or cluster the different images together.

i = Import["http://i.imgur.com/qpFUSTo.jpg?1"];

bwimg = Binarize@i;

myedges[v_] := ((ImageData@

ImageConvolve[#, {{-3, -10, -3}, {0, 0, 0}, {3, 10,3}}])^2 + (ImageData@ImageConvolve[#, {{-3, 0, 3}, {-10, 0, 10}, {-3, 0, 3}}])^2) &@(Binarize@v);

mc = MorphologicalComponents[bwimg];

bb = ComponentMeasurements[mc, "BoundingBox"];

itemCount = Last@First@ComponentMeasurements[mc, "LabelCount"];

myletters = Table[Tooltip[Image@myedges[ImageTrim[bwimg, x /. bb]], x], {x, itemCount}]

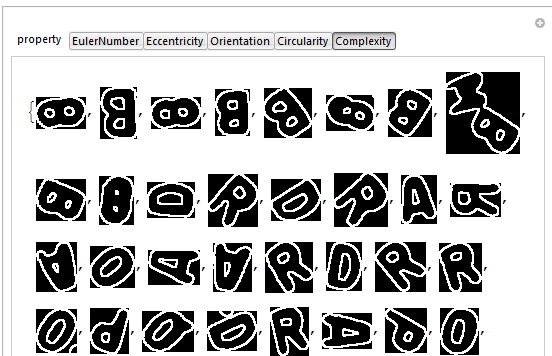

Manipulate[

Module[{sort = Sort[ComponentMeasurements[mc, property], #1[[2]] > #2[[2]] &][[All, 1]]}, Tooltip[Image@myedges[ImageTrim[bwimg, # /. bb]], #] & /@ sort], {property, {"EulerNumber", "Eccentricity", "Orientation", "Circularity", "Complexity"}}]

Any ideas on how to take it from here (if this is indeed the correct path) would be welcomed.