Hi,

I am certainly not an expert on this, but here are some thoughts.

I use this corpus. It is only 15 million words, but it is free. When I unzip the file on the desktop I get a folder called OANC-GrAF. There are lots of annotations, but I am only interested in the txt-files:

fileNames = FileNames["*.txt", "~/Desktop/OANC-GrAF/", Infinity];

Altogether there are

Length[fileNames]

8824 txt-files. We can import and analyse all sentences. Here I only use the first three txt-files and only the first 5 sentences, to check whether it works:

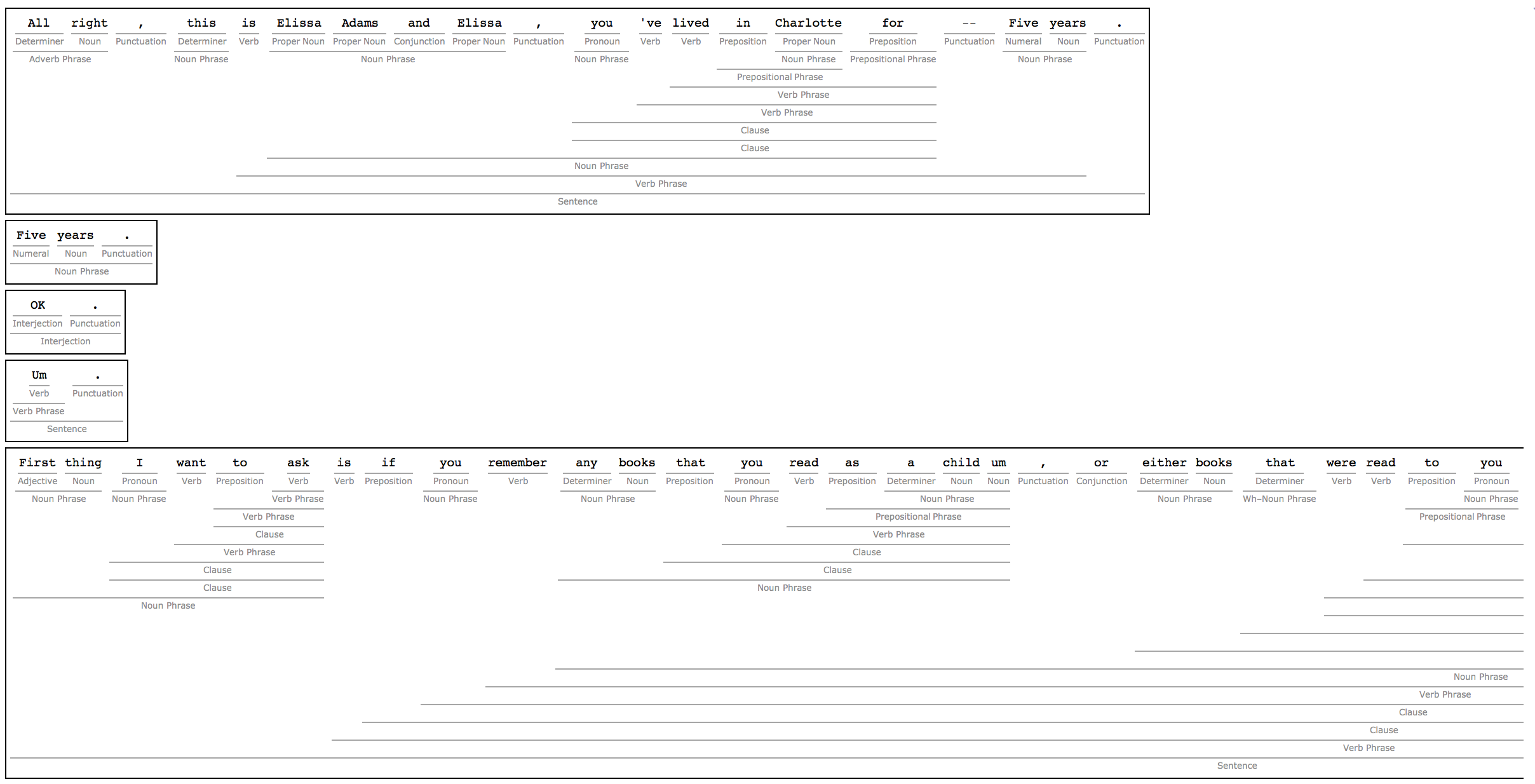

Column[Framed /@ (TextStructure /@ Flatten[TextSentences[Import[#]] & /@ fileNames[[1 ;; 3]]][[1 ;; 5]])]

I can extract lots of information such as:

(TextStructure[#, "PartOfSpeech"] & /@ Flatten[TextSentences[Import[#]] & /@ fileNames[[1 ;; 3]]][[1 ;; 5]])

or like this

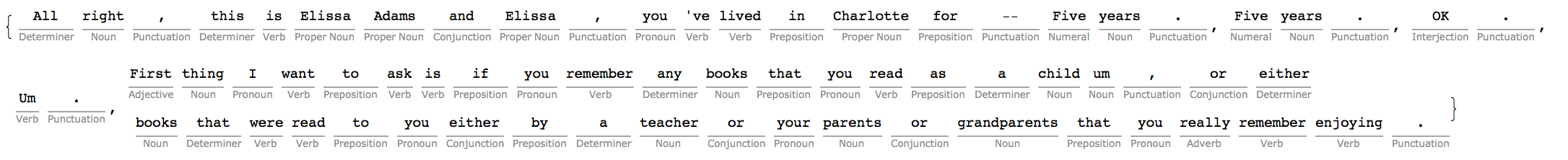

(Normal[TextStructure[#, "PartOfSpeech"]] /. TextElement -> List & /@ Flatten[TextSentences[Import[#]] & /@ fileNames[[1 ;; 3]]][[1 ;; 5]])

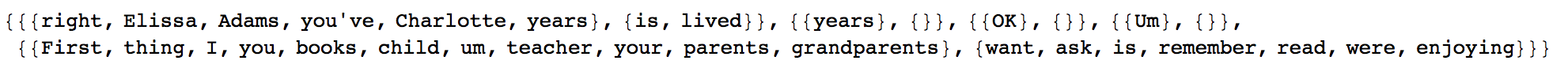

I can also look for nouns and verbs in the sentences:

({DeleteDuplicates[TextCases[#, "Noun" | "ProperNoun" | "Pronoun"]], DeleteDuplicates[TextCases[#, "Verb"]]} & /@

Flatten[TextSentences[Import[#]] & /@ fileNames[[1 ;; 3]]][[1 ;; 5]])

We can now make a graph of this by drawing edges between nouns and verbs in the same sentence:

Graph[Flatten[

Outer[Rule, #[[1]], #[[2]]] & /@ ({DeleteDuplicates[

TextCases[#, "Noun" | "ProperNoun" | "Pronoun"]],

DeleteDuplicates[TextCases[#, "Verb"]]} & /@

Flatten[TextSentences[Import[#]] & /@ fileNames[[1 ;; 1]]])],

VertexLabels -> "Name", VertexLabelStyle -> Directive[Red, 14],

EdgeStyle -> Directive[Arrowheads[{{0.01, 0.6}}], Opacity[0.2]],

VertexSize -> Medium, GraphLayout -> "BalloonEmbedding",

ImageSize -> Full]

where I only use the entire first txt-file. We can also use different types of embedding like so:

Graph[Flatten[

Outer[Rule, #[[1]], #[[2]]] & /@ ({DeleteDuplicates[

TextCases[#, "Noun" | "ProperNoun" | "Pronoun"]],

DeleteDuplicates[TextCases[#, "Verb"]]} & /@

Flatten[TextSentences[Import[#]] & /@ fileNames[[1 ;; 1]]])],

VertexLabels -> "Name", VertexLabelStyle -> Directive[Red, 14],

VertexSize -> Medium,

EdgeStyle -> Directive[Arrowheads[{{0.01, 0.6}}], Opacity[0.2]],

GraphLayout -> {VertexLayout -> {"MultipartiteEmbedding"}},

ImageSize -> Full]

With a bit of patience it is possible to analyse the entire corpus. For example:

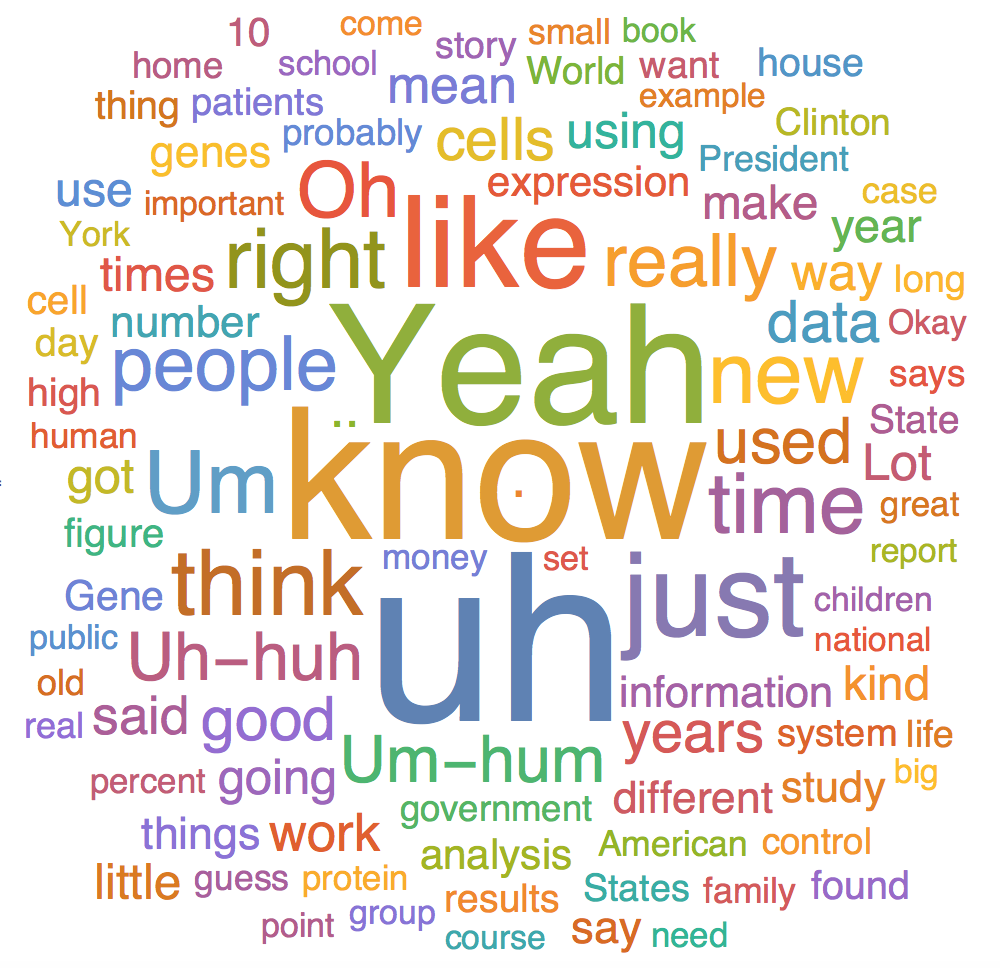

WordCloud[DeleteStopwords[Flatten[TextWords[Import[#]] & /@ fileNames]], IgnoreCase -> True]

gives

For the analysis that you are interested in the function TextStructure in combination with the option "DependencyString" might be useful. For example

Cases[(TextStructure[#, "DependencyString"] & /@ Flatten[TextSentences[Import[#]] & /@ fileNames[[1 ;; 3]]][[1 ;; 5]])[[1]], {"nsubj", {_, _}}, Infinity]

gives

{{"nsubj", {"this", 4}}, {"nsubj", {"you", 11}}}

which makes sense given that the first sentence is"

Flatten[TextSentences[Import[#]] & /@ fileNames[[1 ;; 3]]][[1 ;; 5]]

This is of course only showing the principle. It is not a solid analysis, but I hope it helps.

Cheers,

M.