Marco, thank you for all of this very useful information. Following these instructions, I was easily able to get a similar setup working; the main difference is that my GPU is an NVIDIA GTX 1080 Ti. I'm posting some benchmarks for GPU comparison (and some details about the setup at the end).

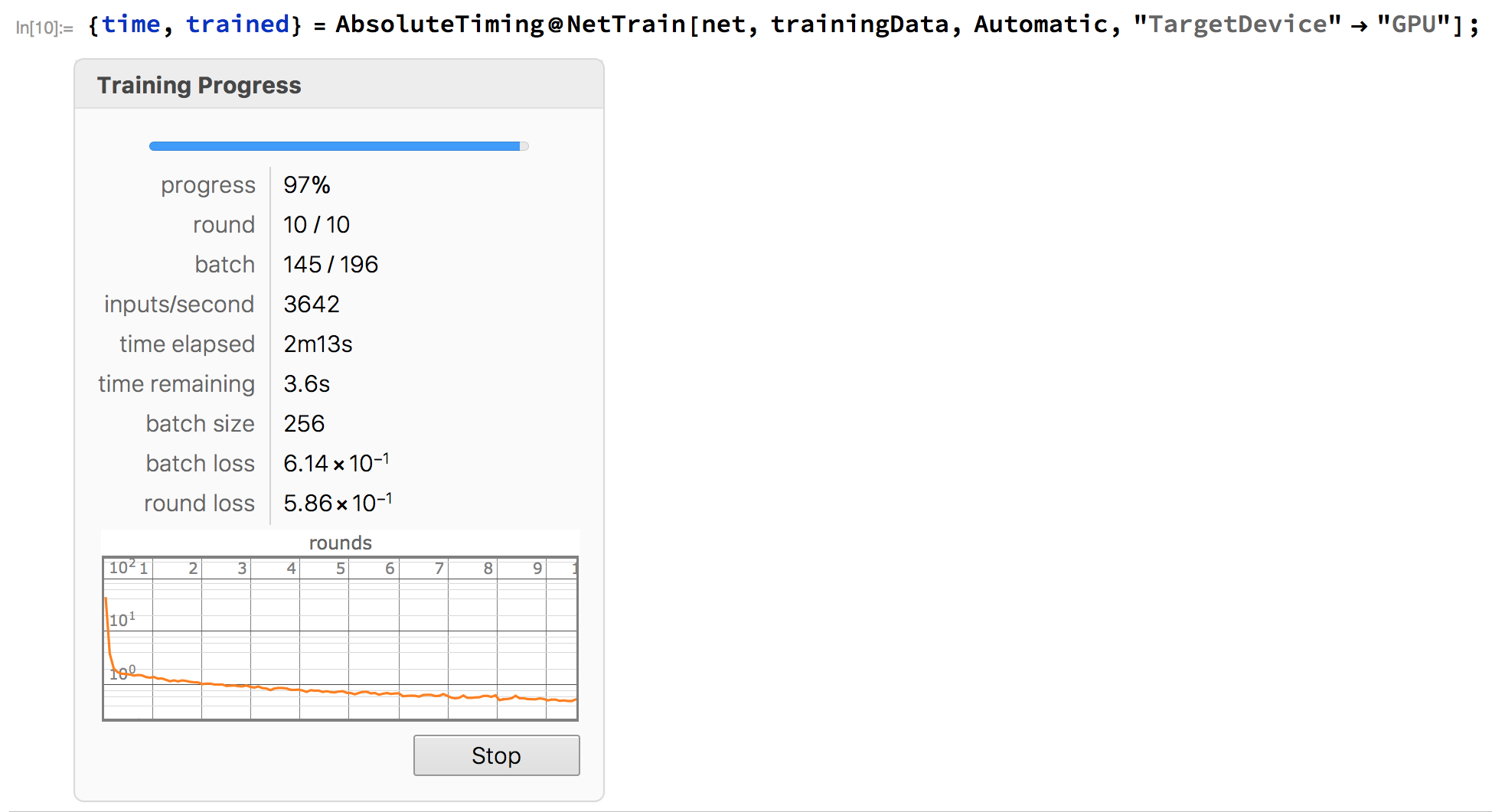

The network training task came in at about 2 minutes 17 seconds with the 1080 Ti:

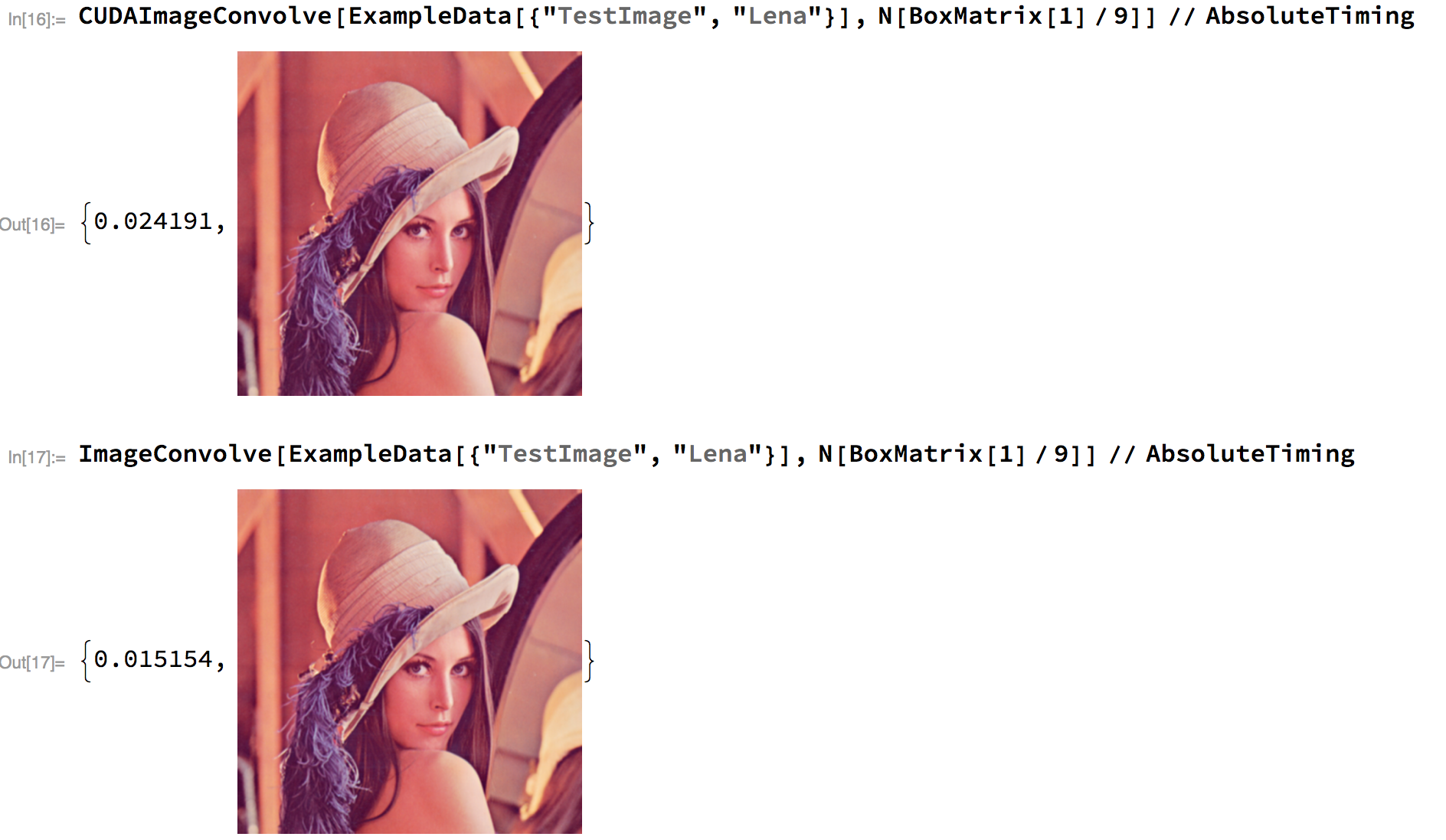

`ImageConvolve` was still slower with CUDA, but not by much.

I was not successful in growing a Mandelbulb, but I didn't put any real effort into trying to troubleshoot this.

Thanks again; I never would have attempted this had you not documented your setup. Best wishes, Jan

PS: On a related note, Apple appears to be moving toward supporting external GPUs in the upcoming macOS High Sierra release, but apparently only on computers that support Thunderbolt 3.

PPS: Some technical details:

Computer:

Model Name: MacBook Pro

Model Identifier: MacBookPro11,4

Processor Name: Intel Core i7

Processor Speed: 2.2 GHz

eGPU:

I have a BizonBox 2S connected via Thunderbolt 2. I first downgraded the Command Line Tools. Then I followed the BizonBox instructions (including having an external monitor plugged into the GPU via HDMI), and then Marco's instructions for the Wolfram setup. I had only one minor setback: `CUDAResourcesInstall[]` crashed the Wolfram kernel the first time I ran it, but it worked fine after I launched a new kernel.