In recent investigations of multiway games, we generated more content than was really necessary to get the point across. In addition to RandomSierpinskiMaze (the subject of another post) the list of omissions also includes a few insightful results about the Project L board game. The purpose of this memo is to pose a problem about polyomino tilings in which summer school students have a chance of joining our efforts by reproducing our findings. If verifying reproducibility is not enough for the terribly ambitious, there is also chance for innovation and originality. My personal solution of the following problem did not use an optimal time algorithm, and more comprehensive analysis remains to be done if we can improve efficiency of our methodology.

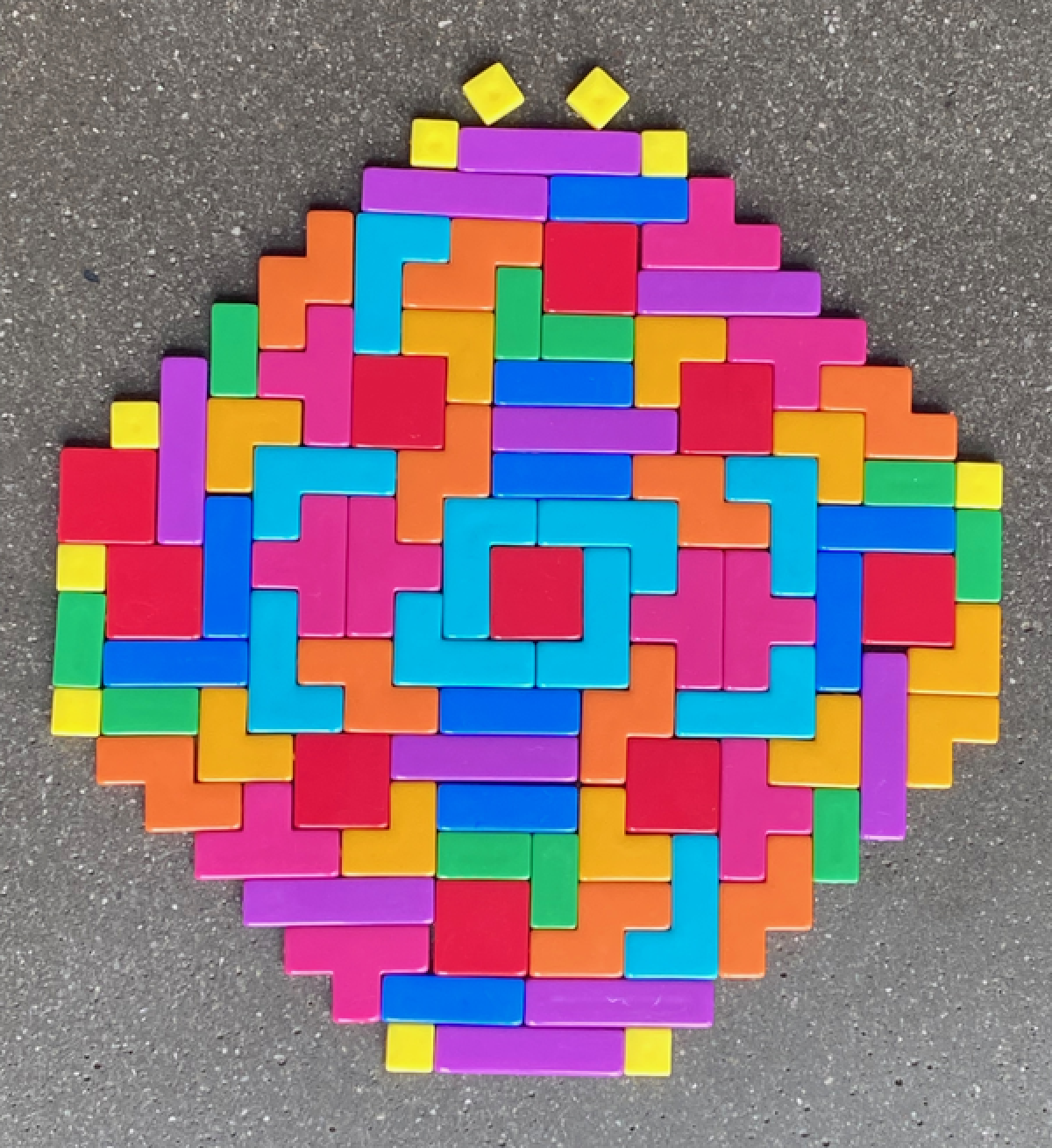

From a mathematical viewpoint the board game "Project L" simply asks players to solve exact cover problems given limited resources and time constraints. It is an "engine building" game in the sense that, as time progresses, players build up a collection of tiles, which are used as resources for completing puzzles. So let's start there, with a complete depiction of all available resources, the polyomino pieces which are used to solve tiling puzzles:

What we see here is a particularly nice, almost-symmetrical tiling comprised of yellow monominos, green dominos, orange and blue trominos, and five colors of tetrominos (yes, the tetris pieces). No one player ever has control over all pieces, so such an aggregation could never be created in game. In fact, the rules state that each player starts with one yellow monomino and one green domino, and it takes time and puzzles solved to build up a larger hand of tiles (probably not exceeding 20 pieces).

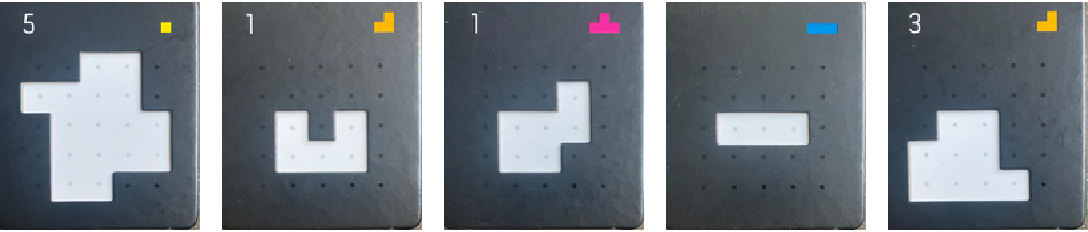

Just to give you readers an idea how small and relatively easy the puzzles are, here is a sample of five taken from the entire set of 52 (digitized by yours truly):

When a player solves such a puzzle by filling it in with polyomino pieces, they are awarded a new polyomino for their collection (top right) and possibly points toward their total score (top left). At the end of the game the player with the highest score wins (but the winning player can also be expected to have collected a pretty nice and diverse protfolio of tiles).

If you look closely, each puzzle is characterized by three weight metrics: The number of points awarded in the top left corner, the grid size (or weight) of the polyomino reward piece, and the total "cost" to solve the puzzle, which is counted by the grid size of the recessed, white, inner regions. There is definitely a cost ~= reward balance in each of these puzzles, which we will return to shortly.

Now before going any farther into game mechanics, let's talk about classical mechanics. When someone gives you a finite, spendable resource, the first physical quantity you should think of is energy. Indeed, during the game, tiles are a form of energy, which are spent toward completing patterns at variable rates of power. When considering points taken per turn, and gauging against power, it also becomes possible to define efficiency. Luck aside, we generally assume that the most efficient players are the ones who should win the engine building games.

Now, let us get to the games basic per turn mechanic. Each player's turn consists of three consecutive actions, which are chosen from a list of energy-rated alternatives:

- A puzzle is drawn from the bank at no cost.

- A tile of weight N is played into a puzzle.

- A tile of weight 1 is drawn from the bank.

- A tile of weight N is traded to the bank for another of max weight N+1.

- Multiple tiles of total weight X can be played into consecutive puzzles.

The fourth alternative is the odd one out, and may only be used once per turn. Since total weight is a summation of up to four individual tile weights (players can have at max four puzzles on board), the play of X per one time interval is usually the player's maximum power action. If used properly, "master actions" should lead to advantageous gains. But dominant play also requires one to know which are the best pieces to have in hand, and which are the best puzzles to have on board.

Now for some insight toward winning the game, let's define efficiency with regard to gaining points.

Assume that every point value P is in fact an expected time T = P to completion. The weight W of the interior puzzle can be considered an energy E = W, such that the nominal power rating of a puzzle is E / T = W / P. Now let's call the actual time to completion T' and the actual completion power W / T', such that the efficiency of solving is simply P / T'. In this definition, efficiency can also be thought of as a scale factor: actual completion power = efficiency * nominal completion power [W/T' = (P/T')*W/P ].

According to my comprehensive data analysis, most puzzles in the game are balanced such that P / T' is less than or equal to one. However, all 5-point puzzles (and a few more at 3, 4 points) allow that P / T' can be greater than one. Quite obviously, such puzzles are desirable to have on board during the end game when "engine building" is no longer as important as "engine spending".

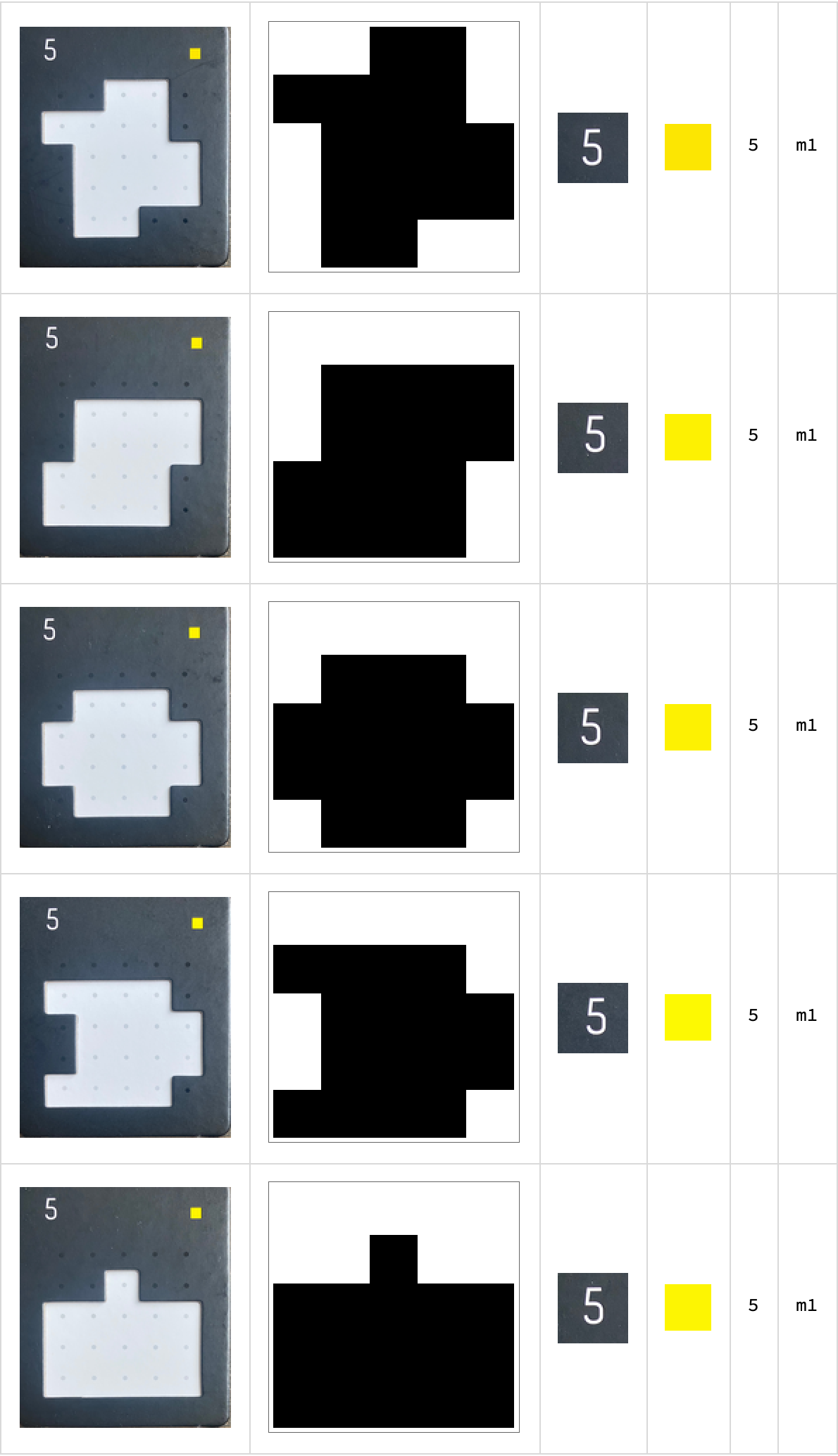

So finally, we're done with a practical, real-life example set up, and we are motivated to find out all the possible ways that five point puzzles from Project L can be solved with a relative efficiency greater than one. Here they are, the particular five point puzzles:

rawData={{{1, 0, 0, 1, 1}, {1, 0, 0, 0, 0}, {1, 0, 0, 0, 0}, {0, 0, 0, 0,

1}, {1, 1, 0, 0, 1}}, {{0, 0, 0, 0, 1}, {0, 0, 0, 0, 1}, {1, 0, 0,

0, 0}, {1, 0, 0, 0, 0}, {1, 1, 1, 1, 1}}, {{1, 0, 0, 0, 1}, {0, 0,

0, 0, 0}, {0, 0, 0, 0, 0}, {1, 0, 0, 0, 1}, {1, 1, 1, 1, 1}}, {{0,

0, 0, 0, 1}, {1, 0, 0, 0, 0}, {1, 0, 0, 0, 0}, {0, 0, 0, 0, 1}, {1,

1, 1, 1, 1}}, {{0, 0, 0, 0, 0}, {0, 0, 0, 0, 0}, {0, 0, 0, 0,

0}, {1, 1, 0, 1, 1}, {1, 1, 1, 1, 1}}};

But the challenge is not only to solve these puzzles in all possible ways (assuming maximum efficiency), but also to give a quantitative comparison of the most useful tetrominos when attempting to do so! Which tetromino is most useful in the end game of Project L?

HINT: it is possible to modify MultiwayDeletionsGraph to solve this problem (what I did), but it would probably be faster to implement D. Knuth's Dancing Links. Extra Credit: If it is possible to implement DLX, what is the graph structure on which the DLX backtracker operates?